Implementing multi-tenant access control with k8s RBAC

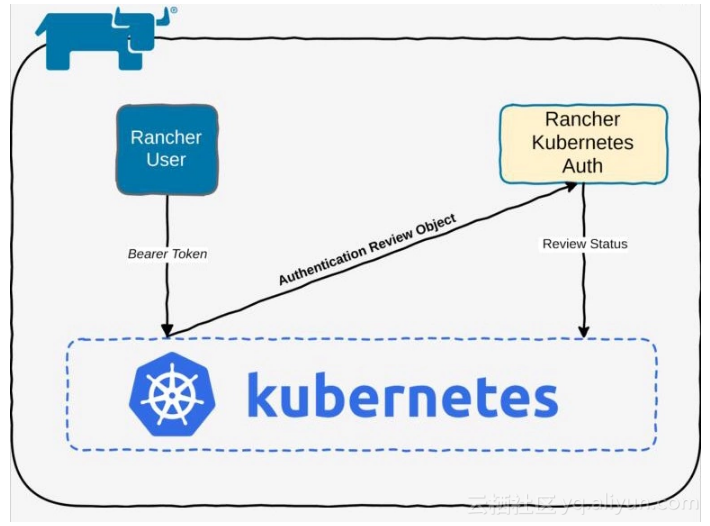

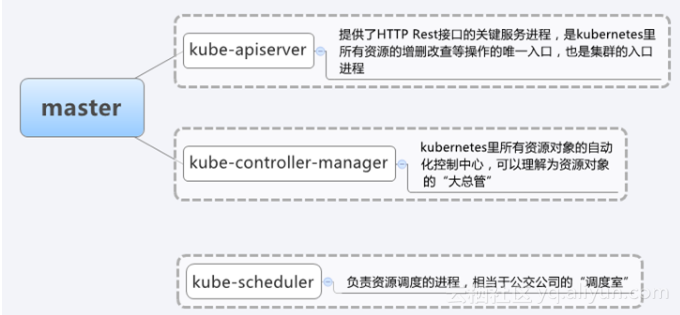

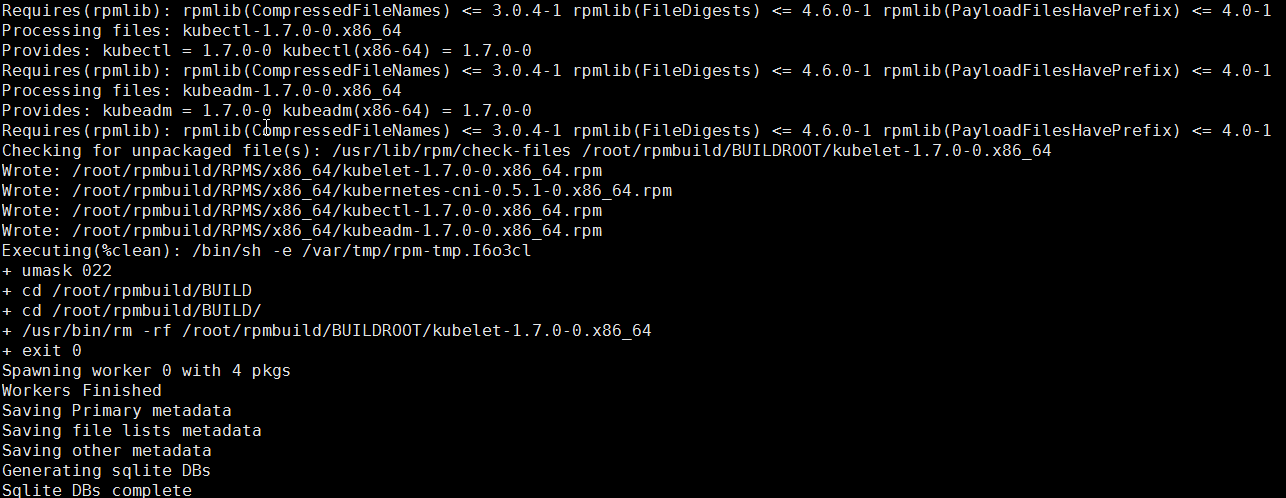

Access control has two parts.

1. Authentication

Authentication is configured in and enforced by kube-apiserver. This setup uses the Static Password File method: set `basic-auth-file` in the apiserver's startup YAML. If the apiserver runs in a container, the file must be reachable from inside that container (mount it, or put it under a path that is already mounted). The file format is described in the official documentation: https://kubernetes.io/docs/admin/authentication/#static-password-file. Note that the password comes first (`password,user,uid`); I missed this and was stuck on it for a long time.

The apiserver has to be restarted for changes to the file to take effect. Deleting the pod with `kubectl delete` did not work here: checking with `docker` showed that the apiserver container had not actually restarted, since kube-apiserver runs as a static pod managed by the kubelet. The workaround is to kill the container with `docker kill`; it is then restarted automatically.

```
[root@tensorflow1 ~]# curl -u admin:admin "https://localhost:6443/api/v1/pods" -k
{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "pods is forbidden: User \"system:anonymous\" cannot list pods at the cluster scope",
  "reason": "Forbidden",
  "details": {
    "kind": "pods"
  },
  "code": 403
}
```

This is the error for a request that failed authentication: the apiserver did not recognize the credentials and handled the request as the anonymous user `system:anonymous`.

2. Authorization

Kubernetes RBAC rules are documented at https://kubernetes.io/docs/admin/authorization/rbac/. In short, permissions are attached to a role and the role is bound to users, which takes two objects: a Role/ClusterRole, which defines a role and its permissions, and a RoleBinding/ClusterRoleBinding, which defines which users hold that role.

```
{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "pods is forbidden: User \"admin\" cannot list pods at the cluster scope",
  "reason": "Forbidden",
  "details": {
    "kind": "pods"
  },
  "code": 403
}
```

This error means the user was authenticated (as `admin`) but is not permitted to perform the request, so RBAC needs to be configured. The manifests are as follows.

Option 1: Role + RoleBinding

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: user1
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["*"]
- apiGroups: [""] # "" indicates the core API group
  resources: ["namespaces"]
  verbs: ["*"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: user1-rolebinding
  namespace: user1
subjects:
- kind: User
  name: user1 # Name is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Option 2: ClusterRole + RoleBinding (recommended)

The ClusterRole defines the permissions cluster-wide, and each RoleBinding restricts them to its own namespace, so the ClusterRole only needs to be defined once.

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: myauth-user
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["*"]
- apiGroups: [""] # "" indicates the core API group
  resources: ["namespaces"]
  verbs: ["*"]
- apiGroups: [""] # "" indicates the core API group
  resources: ["resourcequotas"]
  verbs: ["*"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: user2-rolebinding
  namespace: user2
subjects:
- kind: User
  name: user2 # Name is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: myauth-user
  apiGroup: rbac.authorization.k8s.io
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: user1-rolebinding
  namespace: user1
subjects:
- kind: User
  name: user1 # Name is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: myauth-user
  apiGroup: rbac.authorization.k8s.io
```

3. Configuration

Create the static password file (e.g. with `vi /etc/kubernetes/pki/basic_auth_file`) with the following content:

```
admin,admin,1004
jane2,jane,1005
user1,user1,1006
user2,user2,1007
```

Edit the apiserver manifest /etc/kubernetes/manifests/kube-apiserver.yaml and add `--basic-auth-file=/etc/kubernetes/pki/basic_auth_file` below the existing flags, e.g.:

```yaml
- --etcd-servers=https://127.0.0.1:2379
- --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
- --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
- --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
- --basic-auth-file=/etc/kubernetes/pki/basic_auth_file
```

The file is placed in /etc/kubernetes/pki/ because the apiserver runs in a container and this directory is already mounted into it, so the file is readable there; any other path needs an additional mount. The apiserver only runs on the master node, so this only has to be configured on the master.

After the change, `kubectl get all` on the master will most likely fail: editing the apiserver manifest makes the apiserver restart automatically, the restart fails, and the cluster becomes unreachable. `docker ps` showed that k8s_kube-scheduler and k8s_kube-controller-manager had restarted but the apiserver had not come up. After running `systemctl restart kubelet`, `docker ps` showed the apiserver running. If it still does not start, check the configuration above for mistakes.

Usage

Pass `--username={username} --password={password}` to kubectl (reference: https://kubernetes-v1-4.github.io/docs/user-guide/kubectl/kubectl_create/):

```shell
kubectl get all -n user1 --username=user1 --password=user1
kubectl create -f tf1-rc.yaml --username=user1 --password=user1
```

Call the HTTP REST API with `curl -u {username}:{password} "https://{masterIP}:6443/api/v1/" -k`:

```shell
# jane (password jane2) has no RoleBinding in the configuration above
curl -u jane:jane2 "https://localhost:6443/api/v1/namespaces/user1/pods" -k
curl -u jane:jane2 "https://localhost:6443/api/v1/namespaces/user1" -k

# user1 is bound to myauth-user in namespace user1
curl -u user1:user1 "https://localhost:6443/api/v1/namespaces/user1" -k
curl -u user1:user1 "https://localhost:6443/api/v1/namespaces/user1/pods" -k
curl -u user1:user1 "https://localhost:6443/api/v1/namespaces/user1/resourcequotas/" -k

# user2 is bound to myauth-user in namespace user2
curl -u user2:user2 "https://localhost:6443/api/v1/namespaces/user2" -k
curl -u user2:user2 "https://localhost:6443/api/v1/namespaces/user2/pods" -k
curl -u user2:user2 "https://localhost:6443/api/v1/namespaces/user2/resourcequotas/" -k
```
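Because the column order of the static password file is easy to get wrong (password first, then user name, then uid), a quick local check before restarting the apiserver can save a restart cycle. Below is a minimal Python sketch, assuming the CSV layout from the official static-password-file documentation (password, user, uid, plus an optional quoted group list). `StaticUser` and `parse_basic_auth_file` are hypothetical helpers; this is not the apiserver's own parser.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StaticUser:
    password: str
    username: str
    uid: str
    groups: List[str] = field(default_factory=list)


def parse_basic_auth_file(text: str) -> Dict[str, StaticUser]:
    """Parse static-password-file content into {username: StaticUser}.

    Hypothetical helper for sanity-checking the file locally. Column order:
    password, user name, uid, and an optional quoted "group1,group2" column.
    """
    users: Dict[str, StaticUser] = {}
    for lineno, row in enumerate(csv.reader(io.StringIO(text)), start=1):
        if not "".join(row).strip():
            continue  # skip blank lines
        if len(row) < 3:
            raise ValueError(f"line {lineno}: expected at least 3 columns, got {len(row)}")
        password, username, uid = row[0], row[1], row[2]
        # The optional 4th column is one quoted field holding comma-separated groups.
        groups = row[3].split(",") if len(row) > 3 and row[3] else []
        users[username] = StaticUser(password, username, uid, groups)
    return users


if __name__ == "__main__":
    sample = "admin,admin,1004\njane2,jane,1005\nuser1,user1,1006\nuser2,user2,1007\n"
    for name, user in parse_basic_auth_file(sample).items():
        # Print user name -> password to make the column order visible.
        print(f"{name} -> password={user.password} uid={user.uid}")
```

Run against the sample file from this post, it prints `jane -> password=jane2 uid=1005`, which makes it clear why the matching curl credential is `jane:jane2` and not `jane2:jane`.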
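`curl -u {username}:{password}` authenticates with HTTP Basic auth, i.e. it sends an `Authorization: Basic <base64(user:password)>` header, and `-k` skips TLS certificate verification. A minimal Python sketch of the same request using only the standard library, assuming the apiserver listens on port 6443 as in the commands above; the helper names are hypothetical, and turning off verification is only appropriate for testing, just like `-k`.

```python
import base64
import json
import ssl
import urllib.error
import urllib.request
from typing import Any, Dict, Tuple


def basic_auth_header(username: str, password: str) -> str:
    """Value of the Authorization header that `curl -u user:pass` sends."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_request(master: str, path: str, username: str, password: str) -> urllib.request.Request:
    """GET request for https://{master}:6443{path} with Basic auth attached."""
    req = urllib.request.Request(f"https://{master}:6443{path}", method="GET")
    req.add_header("Authorization", basic_auth_header(username, password))
    return req


def insecure_context() -> ssl.SSLContext:
    """Rough equivalent of curl -k: no certificate or hostname verification."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before CERT_NONE is allowed
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def call(req: urllib.request.Request) -> Tuple[int, Dict[str, Any]]:
    """Send the request and return (HTTP status, decoded JSON body).

    A 403 "Forbidden" Status object is raised by urllib as HTTPError;
    its body is still the JSON Status object, so decode it the same way.
    """
    try:
        with urllib.request.urlopen(req, context=insecure_context()) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())
```

For example, `call(build_request("localhost", "/api/v1/namespaces/user1/pods", "user1", "user1"))` should return status 200 with a `PodList` body once user1's RoleBinding exists, and 403 with a `Status` body like the ones shown earlier before that.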
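To see why Option 2 confines each user to their own namespace, here is a deliberately simplified Python model of how a RoleBinding's rules are matched against a request. It is an illustration, not the real RBAC authorizer: it only models User subjects, `apiGroups`/`resources`/`verbs` with the `*` wildcard, and RoleBindings, and it ignores ClusterRoleBindings, groups, service accounts, `resourceNames`, subresources and non-resource URLs. All names in it are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


def _matches(allowed: Tuple[str, ...], value: str) -> bool:
    """A rule field matches if it lists the value or the '*' wildcard."""
    return "*" in allowed or value in allowed


@dataclass(frozen=True)
class PolicyRule:
    api_groups: Tuple[str, ...]
    resources: Tuple[str, ...]
    verbs: Tuple[str, ...]

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        return (_matches(self.api_groups, api_group)
                and _matches(self.resources, resource)
                and _matches(self.verbs, verb))


@dataclass(frozen=True)
class RoleBinding:
    namespace: str
    user: str
    rules: Tuple[PolicyRule, ...]  # rules copied from the referenced (Cluster)Role


def is_allowed(bindings: Iterable[RoleBinding], user: str, verb: str, resource: str,
               namespace: Optional[str] = None, api_group: str = "") -> bool:
    """namespace=None models a cluster-scope request such as GET /api/v1/pods."""
    for b in bindings:
        if b.user != user:
            continue
        # A RoleBinding only grants access inside its own namespace,
        # never at cluster scope.
        if namespace is None or b.namespace != namespace:
            continue
        if any(rule.allows(api_group, resource, verb) for rule in b.rules):
            return True
    return False  # RBAC has no deny rules: anything not granted is refused


# Rules of the myauth-user ClusterRole from Option 2 ("" = core API group).
MYAUTH_USER = (
    PolicyRule(("",), ("pods",), ("*",)),
    PolicyRule(("",), ("namespaces",), ("*",)),
    PolicyRule(("",), ("resourcequotas",), ("*",)),
)

BINDINGS = (
    RoleBinding("user1", "user1", MYAUTH_USER),  # user1-rolebinding
    RoleBinding("user2", "user2", MYAUTH_USER),  # user2-rolebinding
)
```

In this model `is_allowed(BINDINGS, "user1", "list", "pods", "user1")` is True, while listing pods in `user2` or at cluster scope is False. `is_allowed(BINDINGS, "admin", "list", "pods")` is also False, mirroring the `User "admin" cannot list pods at the cluster scope` error: admin is authenticated, but no binding grants it anything.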
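Since Option 2 needs only one ClusterRole plus one RoleBinding per tenant, the per-tenant bindings are repetitive enough to generate. A minimal Python sketch, assuming one namespace per user as in this setup; the function names are hypothetical. It emits JSON, which kubectl accepts just like YAML, wrapped in a `v1` `List` so all bindings can be applied from one file.

```python
import json
from typing import Any, Dict, Mapping

RBAC_GROUP = "rbac.authorization.k8s.io"


def tenant_rolebinding(user: str, namespace: str, cluster_role: str = "myauth-user") -> Dict[str, Any]:
    """RoleBinding granting `cluster_role` to `user`, limited to `namespace`."""
    return {
        "kind": "RoleBinding",
        "apiVersion": f"{RBAC_GROUP}/v1",
        "metadata": {"name": f"{user}-rolebinding", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "ClusterRole", "name": cluster_role, "apiGroup": RBAC_GROUP},
    }


def tenant_bindings(tenants: Mapping[str, str]) -> Dict[str, Any]:
    """Wrap one RoleBinding per {user: namespace} entry in a v1 List."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [tenant_rolebinding(user, ns) for user, ns in tenants.items()],
    }


if __name__ == "__main__":
    print(json.dumps(tenant_bindings({"user1": "user1", "user2": "user2"}), indent=2))
```

Saved under a hypothetical name such as `gen_bindings.py`, it could be used as `python gen_bindings.py > bindings.json` followed by `kubectl apply -f bindings.json`. The ClusterRole and the tenant namespaces must already exist.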