Hbase集群master.HMasterCommandLine: Master exiting

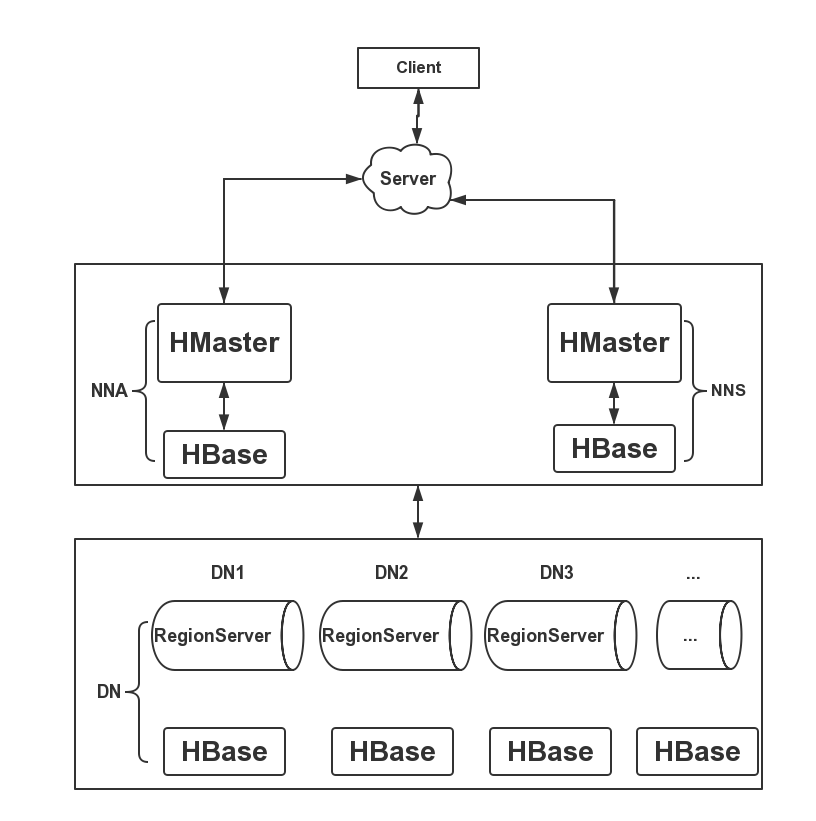

2016-12-15 17:01:57,473 INFO [main] impl.MetricsSystemImpl: HBase metrics system started 2016-12-15 17:01:59,649 ERROR [main] master.HMasterCommandLine: Master exiting java.lang.RuntimeException: Failed construction of Master: class org.apache.hadoop.hbase.master.HMaster. at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:2426) at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:231) at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:137) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126) at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:2436) Caused by: java.util.ServiceConfigurationError: org.apache.hadoop.fs.FileSystem: Provider org.apache.hadoop.fs.s3a.S3AFileSystem could not be instantiated at java.util.ServiceLoader.fail(ServiceLoader.java:232) at java.util.ServiceLoader.access$100(ServiceLoader.java:185) at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:384) at java.util.ServiceLoader$LazyIterator.next(ServiceLoader.java:404) at java.util.ServiceLoader$1.next(ServiceLoader.java:480) at org.apache.hadoop.fs.FileSystem.loadFileSystems(FileSystem.java:2563) at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2574) at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2591) at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:91) at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2630) at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2612) at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:370) at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296) at org.apache.hadoop.hbase.util.FSUtils.getRootDir(FSUtils.java:1003) at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:570) at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:381) at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:423) at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:2419) ... 5 more Caused by: java.lang.NoClassDefFoundError: com/amazonaws/auth/AWSCredentialsProvider at java.lang.Class.getDeclaredConstructors0(Native Method) at java.lang.Class.privateGetDeclaredConstructors(Class.java:2671) at java.lang.Class.getConstructor0(Class.java:3075) at java.lang.Class.newInstance(Class.java:412) at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:380) ... 23 more Caused by: java.lang.ClassNotFoundException: com.amazonaws.auth.AWSCredentialsProvider at java.net.URLClassLoader.findClass(URLClassLoader.java:381) at java.lang.ClassLoader.loadClass(ClassLoader.java:424) at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331) at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ... 28 more 看第一个错误发现说已存在,这不可能啊,百度替换了hadoop包,去zkCil.sh(后面不能家start,否则进不去zookeeper)下,"ls \"发现并没有hbase,说明,并不是zookeeper过期,期三个错误是亚马逊弹性云计算错误,fuck,这和亚马逊什么关系,我是自己的电脑,然后百度第二个错误看英文的资料发现 把这个jar包引入hbase下的lib即可。 注意regisionserver(拼写或许有误,没检查),这里面只配置次节点就好。也就是只配置SLave1。然后主节点执行start-hbase.sh即可。 服务器 192.168.58.180 192.168.58.181 192.168.58.182 192.168.58.183 Name CentOSMaster Slvae1 Slave2 StandByNameNode NameNode Yes Yes DataNode Yes Yes Yes journalNode Yes Yes Yes ZooKeeper Yes Yes Yes ZKFC Yes Yes Spark Yes Yes hbase Yes Yes