make

make install

If the installation succeeded, go to the /usr/local/lib directory (the default location), where you should see the generated snappy library files.
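A minimal sketch of that check, assuming the libraries were installed with the default prefix. The helper name `list_snappy_libs` is made up for this example.

```shell
# List snappy library files in a directory (defaults to /usr/local/lib).
# Returns non-zero when nothing matches.
list_snappy_libs() {
    dir="${1:-/usr/local/lib}"
    # libsnappy.* covers both the static (.a) and shared (.so*) variants
    ls "$dir"/libsnappy.* 2>/dev/null
}

if list_snappy_libs /usr/local/lib; then
    echo "snappy libraries found"
else
    echo "no snappy libraries found in /usr/local/lib"
fi
```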


At this point everything that needs to be installed is installed, and we are ready to build.

10. Before compiling the Hadoop source, replace settings.xml in the MAVEN_HOME/conf directory


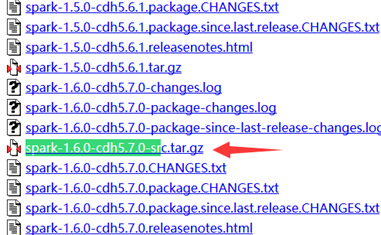
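The replacement can be sketched as follows. The function name `replace_settings` and the path of the new settings file are assumptions for illustration; the post does not say where the replacement file comes from.

```shell
# Back up the existing settings.xml, then install a replacement.
# Usage: replace_settings <maven_home> <new_settings_file>
replace_settings() {
    maven_home="$1"
    new_settings="$2"
    target="$maven_home/conf/settings.xml"
    if [ ! -f "$new_settings" ]; then
        echo "missing: $new_settings" >&2
        return 1
    fi
    # Keep a copy of the original so the change can be undone
    if [ -f "$target" ]; then
        cp "$target" "$target.bak"
    fi
    cp "$new_settings" "$target"
}

# Example (hypothetical source path):
#   replace_settings "$MAVEN_HOME" "$HOME/settings.xml"
```

Keeping a `.bak` copy makes it easy to revert if the new mirror configuration turns out to be unreachable.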

11. Download the corresponding Hadoop source

The source package used here is hadoop-2.6.0-cdh5.4.5-src.tar.gz. Compiling it produces hadoop-2.6.0-cdh5.4.5.tar.gz, which is then used for the cluster installation.

The source build starts here.

http://archive.cloudera.com/cdh5/cdh/5/

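Download hadoop-2.6.0-cdh5.4.5-src.tar.gz from the URL above.

Extract the hadoop-2.6.0-cdh5.4.5-src.tar.gz source package.

Delete the hadoop-2.6.0-cdh5.4.5-src.tar.gz archive once it has been extracted.

Then enter the hadoop-2.6.0-cdh5.4.5 directory and run: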
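The download, extract, and delete steps can be sketched as below. The full download URL is an assumption (the archive base URL joined with the file name), it may no longer be reachable, and the function names are made up for this example.

```shell
TARBALL=hadoop-2.6.0-cdh5.4.5-src.tar.gz
# Assumption: the tarball sits directly under the archive directory
BASE_URL=http://archive.cloudera.com/cdh5/cdh/5

# Download the tarball unless it is already present
fetch_source() {
    [ -f "$TARBALL" ] || wget "$BASE_URL/$TARBALL"
}

# Extract the given tarball, then delete it only if extraction succeeded
unpack_source() {
    tar -xzf "$1" && rm -f "$1"
}

# Typical sequence:
#   fetch_source && unpack_source "$TARBALL" && cd hadoop-2.6.0-cdh5.4.5
```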

mvn package -Pdist,native -DskipTests -Dtar

The mvn build takes a long time; in my virtual machine it took 11 minutes.

Alternatively, you can try bundling snappy explicitly:

mvn package -Pdist,native -DskipTests -Dtar -Dbundle.snappy -Dsnappy.lib=/usr/local/lib

The general form for building a distribution is:

mvn package [-Pdist] [-Pdocs] [-Psrc] [-Pnative] [-Dtar]
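To keep the two variants used in this post straight, here is a small sketch that composes the command line. It only prints the command and does not run Maven; the helper name `hadoop_build_cmd` is made up for this example, and all flags are the ones already shown above.

```shell
# Compose the mvn command line used in this post.
# Usage: hadoop_build_cmd [snappy_lib_dir]
hadoop_build_cmd() {
    cmd="mvn package -Pdist,native -DskipTests -Dtar"
    # With a snappy directory, add the bundling flags
    if [ -n "${1:-}" ]; then
        cmd="$cmd -Dbundle.snappy -Dsnappy.lib=$1"
    fi
    echo "$cmd"
}

hadoop_build_cmd                  # plain native build
hadoop_build_cmd /usr/local/lib   # build that bundles snappy
```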

Run:

mvn package -Pdist,native -DskipTests -Dtar


[INFO] Apache Hadoop Extras ............................... SKIPPED

[INFO] Apache Hadoop Pipes ................................ SKIPPED

[INFO] Apache Hadoop OpenStack support .................... SKIPPED

[INFO] Apache Hadoop Amazon Web Services support .......... SKIPPED

[INFO] Apache Hadoop Client ............................... SKIPPED

[INFO] Apache Hadoop Mini-Cluster ......................... SKIPPED

[INFO] Apache Hadoop Scheduler Load Simulator ............. SKIPPED

[INFO] Apache Hadoop Tools Dist ........................... SKIPPED

[INFO] Apache Hadoop Tools ................................ SKIPPED

[INFO] Apache Hadoop Distribution ......................... SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 01:24 h

[INFO] Finished at: 2016-09-14T02:04:36+08:00

[INFO] Final Memory: 49M/120M

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-surefire-plugin:2.16:test (default-test) on project hadoop-annotations: Execution default-test of goal org.apache.maven.plugins:maven-surefire-plugin:2.16:test failed: Plugin org.apache.maven.plugins:maven-surefire-plugin:2.16 or one of its dependencies could not be resolved: Failed to collect dependencies at org.apache.maven.plugins:maven-surefire-plugin:jar:2.16 -> org.apache.maven.surefire:maven-surefire-common:jar:2.16 -> org.apache.maven.plugin-tools:maven-plugin-annotations:jar:3.2: Failed to read artifact descriptor for org.apache.maven.plugin-tools:maven-plugin-annotations:jar:3.2: Could not transfer artifact org.apache.maven.plugin-tools:maven-plugin-annotations:pom:3.2 from/to nexus-osc (http://nexus.rc.dataengine.com/nexus/content/groups/public): Access denied to: http://nexus.rc.dataengine.com/nexus/content/groups/public/org/apache/maven/plugin-tools/maven-plugin-annotations/3.2/maven-plugin-annotations-3.2.pom , ReasonPhrase:Forbidden. -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/PluginResolutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <goals> -rf :hadoop-annotations

[root@Compiler hadoop-2.6.0-src]#

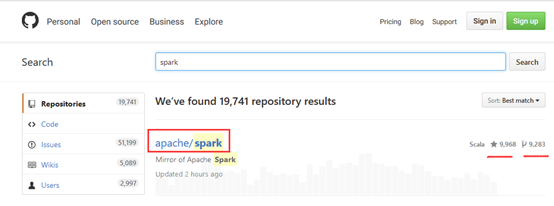
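The end of the log tells you where to resume once the problem is fixed. A minimal sketch for pulling that module name out of a saved log, assuming the build output was captured to a file such as build.log (for example with `tee`); both the file name and the helper name `resume_module` are assumptions.

```shell
# Print the module named in Maven's "resume with -rf" hint,
# e.g. ":hadoop-annotations". Usage: resume_module <log_file>
resume_module() {
    sed -n 's/^\[ERROR\] *mvn <goals> -rf \(:[^ ]*\).*$/\1/p' "$1" | tail -n 1
}

# Example, after fixing the cause of the failure:
#   mvn package -Pdist,native -DskipTests -Dtar -rf "$(resume_module build.log)"
```

Resuming with `-rf` skips the modules that already built, which matters when a full run takes as long as the one above.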

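As with the Spark source build, when you run into a problem, you solve it. See my blog post "Spark源码的编译过程详细解读(各版本)" (A Detailed Walkthrough of Compiling the Spark Source, All Versions).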

Now let's fix this problem.

Solution:

vim ./hadoop-common-project/hadoop-auth/pom.xml

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-annotations</artifactId>

<scope>compile</scope>

</dependency>

Change the scope of the hadoop-annotations dependency from provided to compile.
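If you prefer not to edit the file by hand, the same change can be sketched with sed. This assumes the pom lists `<scope>provided</scope>` on its own line inside the hadoop-annotations dependency block; the function name is made up for this example, and a `.bak` copy is kept in case the pattern does not match your file.

```shell
# Switch the hadoop-annotations dependency from provided to compile.
# Usage: set_annotations_scope_compile <pom_file>
set_annotations_scope_compile() {
    pom="$1"
    cp "$pom" "$pom.bak"
    # Only touch lines between the hadoop-annotations artifactId
    # and the closing tag of that same dependency
    sed '/<artifactId>hadoop-annotations<\/artifactId>/,/<\/dependency>/ s|<scope>provided</scope>|<scope>compile</scope>|' "$pom.bak" > "$pom"
}

# Example:
#   set_annotations_scope_compile ./hadoop-common-project/hadoop-auth/pom.xml
```

Restricting the substitution to that address range leaves other provided-scope dependencies in the same pom untouched.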