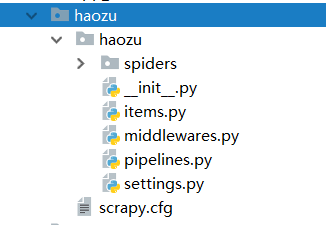

1. Create the project

In the console, create a project with scrapy startproject.

Here we run scrapy startproject haozu to create the crawler project.


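2. Create the spider file

In the console, go into the spiders folder and run scrapy genspider <spider name> <site domain>.

Here we run scrapy genspider haozu_xzl www.haozu.com to create the spider file.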

3. Write the code in the spider file haozu_xzl.py (Python version 3.6.0)

    # -*- coding: utf-8 -*-
    import requests
    import scrapy
    from lxml import etree

    from ..items import HaozuItem


    class HaozuXzlSpider(scrapy.Spider):
        # Run with: scrapy crawl haozu_xzl
        name = 'haozu_xzl'
        # allowed_domains = ['www.haozu.com']
        # start_urls must be a list; it is not used here because
        # start_requests() builds the URLs itself.
        start_urls = ["http://www.haozu.com/sz/zuxiezilou/"]
        # City codes that appear in the listing URLs
        province_list = ['bj', 'sh', 'gz', 'sz', 'cd', 'cq', 'cs', 'dl', 'fz', 'hz', 'hf', 'nj',
                         'jian', 'jn', 'km', 'nb', 'sy', 'su', 'sjz', 'tj', 'wh', 'wx', 'xa', 'zz']

        def start_requests(self):
            user_agent = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2'
            headers = {'User-Agent': user_agent}
            for s in self.province_list:
                start_url = "http://www.haozu.com/{}/zuxiezilou/".format(s)
                # A function that contains a yield statement is a generator: it produces
                # one value, is suspended, and produces the next value when resumed.
                yield scrapy.Request(url=start_url, headers=headers, method='GET', callback=self.parse,
                                     meta={"headers": headers, "city": s})

        def parse(self, response):
            lists = response.body.decode('utf-8')
            selector = etree.HTML(lists)
            elem_list = selector.xpath('/html/body/div[2]/div[2]/div/dl[1]/dd/div[2]/div[1]/a')
            print(elem_list, type(elem_list))
            # Skip the first and the last link in the district list
            for elem in elem_list[1:-1]:
                try:
                    # str(list)[1:-1] strips the brackets from the list's repr; this gives
                    # clean text only when the XPath matches exactly one node.
                    district = str(elem.xpath("text()"))[1:-1].replace("'", '')
                    print(district, type(district))
                    district_href = str(elem.xpath("@href"))[1:-1].replace("'", '')
                    print(district_href, type(district_href))
                    elem_url = "http://www.haozu.com{}".format(district_href)
                    print(elem_url)
                    yield scrapy.Request(url=elem_url, headers=response.meta["headers"], method='GET',
                                         callback=self.detail_url,
                                         meta={"district": district, "url": elem_url,
                                               "headers": response.meta["headers"],
                                               "city": response.meta["city"]})
                except Exception as e:
                    print(e)

        def detail_url(self, response):
            print("===================================================================")
            # Listing pages 1 to 49 of the district
            for i in range(1, 50):
                # Build the URL of page i
                re_url = "{}o{}/".format(response.meta["url"], i)
                print(re_url)
                try:
                    # Note: requests.get() is a blocking call that bypasses Scrapy's scheduler.
                    response_elem = requests.get(re_url, headers=response.meta["headers"])
                    seles = etree.HTML(response_elem.content)
                    sele_list = seles.xpath("/html/body/div[3]/div[1]/ul[1]/li")
                    for sele in sele_list:
                        href = str(sele.xpath("./div[2]/h1/a/@href"))[1:-1].replace("'", '')
                        print(href)
                        href_url = "http://www.haozu.com{}".format(href)
                        print(href_url)
                        yield scrapy.Request(url=href_url, headers=response.meta["headers"], method='GET',
                                             callback=self.final_url,
                                             meta={"district": response.meta["district"],
                                                   "city": response.meta["city"]})
                except Exception as e:
                    print(e)

        def final_url(self, response):
            try:
                body = response.body.decode('utf-8')
                sele_body = etree.HTML(body)
                # Extract the price, title, and address
                item = HaozuItem()
                item["city"] = response.meta["city"]
                item['district'] = response.meta["district"]
                item['addr'] = str(sele_body.xpath("/html/body/div[2]/div[2]/div/div/div[2]/span[1]/text()[2]"))[1:-1].replace("'", '')
                item['title'] = str(sele_body.xpath("/html/body/div[2]/div[2]/div/div/div[1]/h1/span/text()"))[1:-1].replace("'", '')
                price = str(sele_body.xpath("/html/body/div[2]/div[3]/div[2]/div[1]/span/text()"))[1:-1].replace("'", '')
                # price_danwei is the unit that follows the price
                price_danwei = str(sele_body.xpath("/html/body/div[2]/div[3]/div[2]/div[1]/div/div/i/text()"))[1:-1].replace("'", '')
                print(price + price_danwei)
                item['price'] = price + price_danwei
                yield item
            except Exception as e:
                print(e)
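The spider turns XPath results into strings with str(...)[1:-1].replace("'", ''), which depends on the printed form of a one-element list. A minimal sketch of a more direct alternative, assuming two hypothetical helpers, first_text and absolute_url, that are not part of the original code (the district name "Futian" is also just an illustrative value):

```python
from urllib.parse import urljoin

BASE_URL = "http://www.haozu.com"  # site root used throughout the spider


def first_text(values, default=""):
    """Return the first non-empty, stripped string from an XPath result list."""
    for value in values:
        text = str(value).strip()
        if text:
            return text
    return default


def absolute_url(href, base=BASE_URL):
    """Resolve a relative href against the site root."""
    return urljoin(base, href)


if __name__ == "__main__":
    # Plain lists stand in for what elem.xpath("text()") would return.
    print(first_text(["  Futian  "]))
    print(first_text([]))
    print(absolute_url("/sz/zuxiezilou/"))
```

With helpers like these, a line such as district = first_text(elem.xpath("text()")) would return an empty string instead of raising or producing stray quotes when the XPath matches nothing.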

4. Modify items.py

    # -*- coding: utf-8 -*-
    # Define here the models for your scraped items
    import scrapy


    class HaozuItem(scrapy.Item):
        # Fields collected for each office listing
        city = scrapy.Field()
        district = scrapy.Field()
        title = scrapy.Field()
        addr = scrapy.Field()
        price = scrapy.Field()

5. Modify settings.py

Uncomment the following setting to enable the pipeline:

    ITEM_PIPELINES = {
        'haozu.pipelines.HaozuPipeline': 300,
    }
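The number assigned to each pipeline sets its order: Scrapy runs pipelines from lower to higher values, conventionally within the 0-1000 range. A sketch of how this part of settings.py might look alongside two related settings; only ITEM_PIPELINES comes from the original, while the USER_AGENT and DOWNLOAD_DELAY lines are assumptions added for illustration:

```python
# settings.py (fragment)

# Enable the CSV pipeline; 300 is its position in the pipeline order.
ITEM_PIPELINES = {
    'haozu.pipelines.HaozuPipeline': 300,
}

# Assumption: a project-wide default User-Agent. Headers passed explicitly
# to scrapy.Request, as the spider does, still take precedence.
USER_AGENT = ('Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 '
              '(KHTML, like Gecko) Version/5.1.7 Safari/534.57.2')

# Assumption: wait one second between consecutive requests to the same site.
DOWNLOAD_DELAY = 1
```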

6. Modify pipelines.py. This is where you can customize the storage file format.

    # -*- coding: utf-8 -*-
    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    import csv


    class HaozuPipeline(object):
        def process_item(self, item, spider):
            # Append one row per item; the with block closes the file after each write.
            with open('./xiezilou2.csv', 'a+', encoding='utf-8', newline='') as f:
                write = csv.writer(f)
                write.writerow((item['city'], item['district'], item['addr'], item['title'], item['price']))
            print(item)
            return item
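Opening the file inside process_item means it is reopened for every scraped item. A sketch of a variant that opens the file once per crawl through Scrapy's optional open_spider and close_spider pipeline hooks; the class name CsvOncePipeline, the path argument, and the header row are assumptions, not part of the original project:

```python
import csv

FIELDS = ('city', 'district', 'addr', 'title', 'price')


class CsvOncePipeline(object):
    """Hypothetical variant of HaozuPipeline that keeps one file handle per crawl."""

    def __init__(self, path='./xiezilou2.csv'):
        self.path = path
        self.file = None
        self.writer = None

    def open_spider(self, spider):
        # Called by Scrapy once when the spider starts.
        # 'w' overwrites earlier results, unlike the 'a+' mode of the original.
        self.file = open(self.path, 'w', encoding='utf-8', newline='')
        self.writer = csv.writer(self.file)
        self.writer.writerow(FIELDS)  # header row, added here for readability

    def process_item(self, item, spider):
        # Write the fields in a fixed column order.
        self.writer.writerow([item[name] for name in FIELDS])
        return item

    def close_spider(self, spider):
        # Called by Scrapy once when the spider finishes.
        self.file.close()
```

To try it, the ITEM_PIPELINES entry in settings.py would need to point at this class instead of HaozuPipeline.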

7. Start the framework

In the console, run scrapy crawl haozu_xzl to start the program.
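The same command can also be launched from a small Python script, for example when the crawl is started by a scheduler. A sketch, assuming the script runs from the project root (the folder that contains scrapy.cfg); the helper names and the output file name are hypothetical, while -o is Scrapy's option for exporting scraped items to a file in addition to whatever the pipeline writes:

```python
import subprocess


def build_crawl_command(spider_name, output=None):
    """Build the argument list for `scrapy crawl`."""
    command = ["scrapy", "crawl", spider_name]
    if output:
        # Optional feed export, e.g. a CSV or JSON file
        command += ["-o", output]
    return command


def run_crawl(spider_name, output=None):
    """Run the crawl as a child process and return its exit code."""
    return subprocess.run(build_crawl_command(spider_name, output)).returncode


if __name__ == "__main__":
    # Only print the command here; call run_crawl("haozu_xzl") to start the crawl.
    print(" ".join(build_crawl_command("haozu_xzl")))
```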