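First, start a container on node1 (10.10.172.201) as shown below. After logging in to the container, you can see that it has been assigned an IP from the range configured for flannel above (each host is allocated its own subnet of the flannel network; in the output below these are /24 subnets such as 10.10.92.0/24).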

[root@node1 ~]

2dea24b1562f3d4dc89cde3fa00c707276edcfba1c8f3b0ab4c62e075e63de2f

[root@node1 ~]

root@2dea24b1562f:/

root@2dea24b1562f:/

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

7: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1472 qdisc noqueue state UP group default

link/ether 02:42:0a:0a:5c:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.92.2/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:fe0a:5c02/64 scope link

valid_lft forever preferred_lft forever

Next, start a container on node2 (10.10.172.202):

[root@node2 ~]

374417e9ce5bcd11222d8138e684707c00ca8a4b7a16243ac5cfb1a8bd19ee6e

[root@node2 ~]

root@374417e9ce5b:/

9.3

root@374417e9ce5b:/

root@374417e9ce5b:/

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

469: eth0@if470: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1472 qdisc noqueue state UP group default

link/ether 02:42:0a:0a:1d:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.29.2/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:fe0a:1d02/64 scope link

valid_lft forever preferred_lft forever

root@374417e9ce5b:/

PING 10.10.92.2 (10.10.92.2) 56(84) bytes of data.

64 bytes from 10.10.92.2: icmp_seq=1 ttl=60 time=0.657 ms

64 bytes from 10.10.92.2: icmp_seq=2 ttl=60 time=0.487 ms

.......

root@374417e9ce5b:/

PING www.a.shifen.com (115.239.210.27) 56(84) bytes of data.

64 bytes from 115.239.210.27 (115.239.210.27): icmp_seq=1 ttl=48 time=11.7 ms

64 bytes from 115.239.210.27 (115.239.210.27): icmp_seq=2 ttl=48 time=12.4 ms

.......

As shown, containers on the two hosts can ping each other's container IPs successfully, and they can also reach the external network directly (bridge mode).
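The addressing behind this result can be checked with a short sketch. It assumes the flannel overlay network is 10.10.0.0/16, which is inferred from the container addresses and masks in the output above rather than read from the flannel configuration; the names `overlay`, `containers`, and `describe` are illustrative only.

```python
import ipaddress

# Assumption: the overlay network is 10.10.0.0/16, inferred from the
# container addresses and /24 masks in the output above.
overlay = ipaddress.ip_network("10.10.0.0/16")

# Container addresses as reported by the interface listings on each node.
containers = {
    "node1": ipaddress.ip_interface("10.10.92.2/24"),
    "node2": ipaddress.ip_interface("10.10.29.2/24"),
}


def describe(containers, overlay):
    """Return {node: (subnet, inside_overlay)} for each container address."""
    return {
        node: (iface.network, iface.network.subnet_of(overlay))
        for node, iface in containers.items()
    }


result = describe(containers, overlay)
for node, (subnet, inside) in result.items():
    print(f"{node}: container subnet {subnet}, inside overlay: {inside}")

# The two containers sit in different /24 subnets, so traffic between them
# has to be routed across hosts instead of staying on one docker0 bridge.
same_subnet = containers["node1"].network == containers["node2"].network
print("containers share a subnet:", same_subnet)
```

Because the two containers are in different subnets, the successful ping indicates that packets are being carried between the hosts and not switched on a single local bridge.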

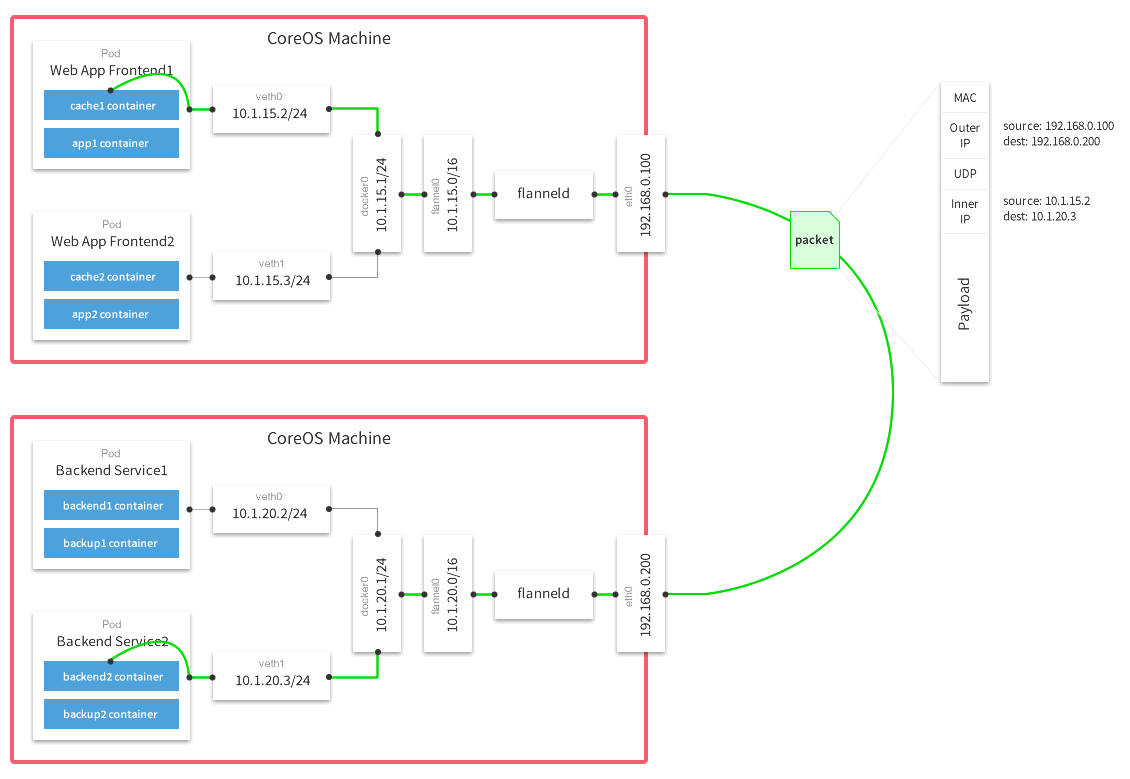

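Looking at the network interfaces on both hosts, the IP of the docker0 virtual interface (which acts as the gateway for the containers) has also changed to the range configured by flannel, and a new flannel0 virtual interface has appeared.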

[root@node1 ~]

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1472

inet 10.10.92.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::42:ebff:fe16:438d prefixlen 64 scopeid 0x20<link>

ether 02:42:eb:16:43:8d txqueuelen 0 (Ethernet)

RX packets 5944 bytes 337155 (329.2 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6854 bytes 12310552 (11.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eno16777984: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.10.172.201 netmask 255.255.255.0 broadcast 10.10.172.255

inet6 fe80::250:56ff:fe86:2135 prefixlen 64 scopeid 0x20<link>

ether 00:50:56:86:21:35 txqueuelen 1000 (Ethernet)

RX packets 98110 bytes 134147911 (127.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 60177 bytes 5038428 (4.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.10.92.0 netmask 255.255.0.0 destination 10.10.92.0

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 2 bytes 168 (168.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2 bytes 168 (168.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

.......
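The MTU of 1472 on docker0 and flannel0, compared with 1500 on the physical interface, is consistent with each container packet being wrapped in an outer IPv4 and UDP header. The following sketch shows the arithmetic; it assumes flannel is running its UDP backend (which the flannel0 device name suggests) and that the outer IPv4 header carries no options. The function name `overlay_mtu` is made up for illustration.

```python
# Sizes in bytes of the outer headers added around each container packet.
IPV4_HEADER = 20  # IPv4 header without options (assumption)
UDP_HEADER = 8


def overlay_mtu(physical_mtu: int) -> int:
    """MTU left for container traffic after the encapsulation overhead."""
    return physical_mtu - IPV4_HEADER - UDP_HEADER


# 1500 is the MTU reported for the physical interface on node1.
print(overlay_mtu(1500))  # 1472
```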

The following command shows the IP range that containers on this host are allocated from:

[root@node1 ~]

root 16589 0.3 1.3 734912 27840 ? Ssl 13:23 0:04 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt native.cgroupdriver=systemd --userland-proxy-path=/usr/libexec/docker/docker-proxy-current --selinux-enabled --log-driver=journald --signature-verification=false --bip=10.10.92.1/24 --ip-masq=true --mtu=1472

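The "--bip=10.10.92.1/24" parameter here restricts the range of IPs that containers on this node can obtain.

This IP range is assigned automatically by Flannel, which uses the records stored in the etcd service to ensure that the ranges of different nodes do not overlap.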
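To make the effect of the bip value concrete, here is a minimal sketch that derives the usable container range from it. The helper name `container_range` is hypothetical, and the sketch assumes that the bridge address itself is the only usable host address not handed out to containers.

```python
import ipaddress


def container_range(bip: str):
    """Return (gateway, subnet, first, last, count) for a --bip value."""
    iface = ipaddress.ip_interface(bip)
    # hosts() skips the network and broadcast addresses; the bridge
    # (gateway) address is excluded here as well.
    usable = [h for h in iface.network.hosts() if h != iface.ip]
    return iface.ip, iface.network, usable[0], usable[-1], len(usable)


gateway, subnet, first, last, count = container_range("10.10.92.1/24")
print(f"gateway {gateway}, subnet {subnet}")
print(f"containers: {first} to {last} ({count} addresses)")
```

For node1 this gives 10.10.92.2 as the first container address, which matches the address seen inside the first container started on that host.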

[root@node2 ~]

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1472

inet 10.10.29.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::42:42ff:fe21:d694 prefixlen 64 scopeid 0x20<link>

ether 02:42:42:21:d6:94 txqueuelen 0 (Ethernet)

RX packets 644697 bytes 41398904 (39.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 675608 bytes 1683878582 (1.5 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.10.172.202 netmask 255.255.255.0 broadcast 10.10.172.255

inet6 fe80::250:56ff:fe86:6833 prefixlen 64 scopeid 0x20<link>

ether 00:50:56:86:68:33 txqueuelen 1000 (Ethernet)

RX packets 2552698 bytes 2441204870 (2.2 GiB)

RX errors 0 dropped 104 overruns 0 frame 0

TX packets 1407162 bytes 3205869301 (2.9 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.10.29.0 netmask 255.255.0.0 destination 10.10.29.0

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 2 bytes 168 (168.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2 bytes 168 (168.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

.......
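The interface listing suggests how node2 decides where to send a packet: the most specific matching network wins. The sketch below models that choice with a longest-prefix match. The route list is an assumption built from the addresses and netmasks in the ifconfig output (the real routing table is not shown in this article), and the default route via eth0 is also assumed.

```python
import ipaddress

# Assumed routes for node2, derived from the ifconfig output above.
ROUTES = [
    ("docker0", ipaddress.ip_network("10.10.29.0/24")),
    ("flannel0", ipaddress.ip_network("10.10.0.0/16")),
    ("eth0", ipaddress.ip_network("10.10.172.0/24")),
    ("eth0 (default route, assumed)", ipaddress.ip_network("0.0.0.0/0")),
]


def pick_interface(dest: str) -> str:
    """Choose the outgoing interface by longest-prefix match."""
    addr = ipaddress.ip_address(dest)
    matches = [(net.prefixlen, name) for name, net in ROUTES if addr in net]
    return max(matches, key=lambda m: m[0])[1]


for dest in ("10.10.29.3", "10.10.92.2", "10.10.172.201", "115.239.210.27"):
    print(dest, "->", pick_interface(dest))
```

Under these assumptions, a container on the same host is reached through docker0, a container on node1 through flannel0, and an external address through the physical interface.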

[root@node2 ~]

root 31925 0.3 1.7 747404 35864 ? Ssl 13:21 0:05 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt native.cgroupdriver=systemd --userland-proxy-path=/usr/libexec/docker/docker-proxy-current --selinux-enabled --log-driver=journald --signature-verification=false --bip=10.10.29.1/24 --ip-masq=true --mtu=1472
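With the bip values of both nodes in hand, the claim that the per-node ranges do not collide can be verified directly. This is a small sketch with made-up names (`BIPS`, `find_overlaps`); it only compares the values printed above and does not query flannel or etcd.

```python
import ipaddress
from itertools import combinations

# --bip values taken from the dockerd command lines on each node.
BIPS = {"node1": "10.10.92.1/24", "node2": "10.10.29.1/24"}


def find_overlaps(bips):
    """Return the pairs of nodes whose container subnets overlap."""
    nets = {node: ipaddress.ip_interface(bip).network for node, bip in bips.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


print(find_overlaps(BIPS))  # [] means no two nodes share container addresses
```

An empty list means every node has its own container subnet, which is what allows a container IP to identify its host unambiguously.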