ubuntu安装spark2.1 hadoop2.7.3集群

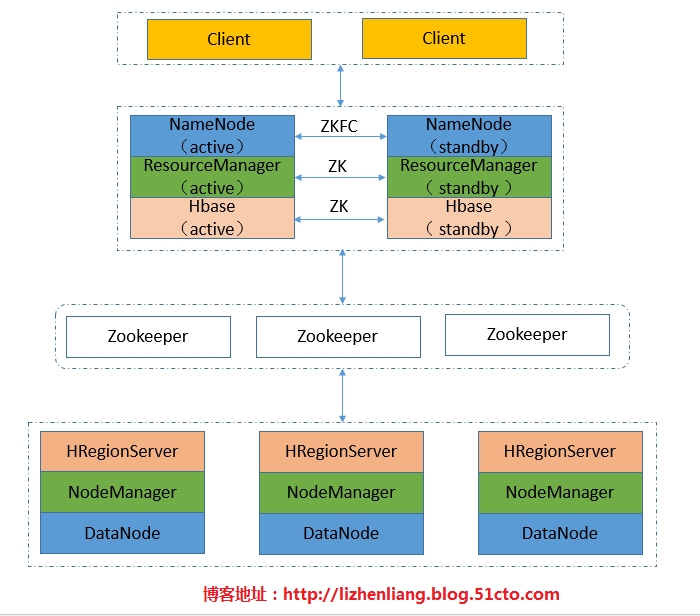

0: 设置系统登录相关 Master要执行 1 cat $HOME/. ssh /id_rsa .pub>>$HOME/. ssh /authorized_keys 如果用root用户 1 sed -ri 's/^(PermitRootLogin).*$/\1yes/' /etc/ssh/sshd_config 编辑/etc/hosts 1 2 3 4 5 6 7 8 9 10 11 127.0.0.1localhost #别把spark1放在这 192.168.100.25spark1 #spark1isMaster 192.168.100.26spark2 192.168.100.27spark3 127.0.1.1ubuntu #ThefollowinglinesaredesirableforIPv6capablehosts ::1localhostip6-localhostip6-loopback ff02::1ip6-allnodes ff02::2ip6-allrouters 如果把 spark1 放在/etc/hosts第一行, 会发现在slave 有下面的错误 1 org.apache.hadoop.ipc.Client:Retryingconnecttoserver:spark1 /192 .168.100.25:9000.Alreadytried0 time (s) 然后在spark1 运行 1 2 ss-lnt LISTEN0128localhost:9000 会发现监听的是本地. 删除 hosts中的相关文本重新启动hadoop,解决问题 1: 安装java 可以直接apt-get 1 2 3 4 apt-get install python-software-properties-y add-apt-repositoryppa:webupd8team /java apt-getupdate apt-get install oracle-java7-installer 或者下载 1 2 3 4 5 6 7 8 9 10 11 12 13 wgethttp: //download .oracle.com /otn-pub/java/jdk/7u80-b15/jdk-7u80-linux-x64 . tar .gz mkdir /usr/lib/jvm tar xvfjdk-7u80-linux-x64. tar .gz mv jdk1.7.0_80 /usr/lib/jvm #配置相关路径 update-alternatives-- install "/usr/bin/java" "java" "/usr/lib/jvm/jdk1.7.0_80/bin/java" 1 update-alternatives-- install "/usr/bin/javac" "javac" "/usr/lib/jvm/jdk1.7.0_80/bin/javac" 1 update-alternatives-- install "/usr/bin/javaws" "javaws" "/usr/lib/jvm/jdk1.7.0_80/bin/javaws" 1 update-alternatives--configjava #验证一下 java-version javac-version javaws-version 添加环境变量 1 2 3 4 5 6 cat >> /etc/profile <<EOF export JAVA_HOME= /usr/lib/jvm/jdk1 .7.0_80 export JRE_HOME= /usr/lib/jvm/jdk1 .7.0_80 /jre export CLASSPATH=.:$CLASSPATH:$JAVA_HOME /lib :$JRE_HOME /lib export PATH=$PATH:$JAVA_HOME /bin :$JRE_HOME /bin EOF 2: 安装 hadoop 1 2 3 4 tar xvfhadoop-2.7.3. tar .gz mv hadoop-2.7.3 /usr/local/hadoop cd /usr/local/hadoop mkdir -phdfs/{data,name,tmp} 添加环境变量 1 2 3 4 cat >> /etc/profile <<EOF export HADOOP_HOME= /usr/local/hadoop export PATH=$PATH:$HADOOP_HOME /bin EOF 编辑 hadoop-env.sh 文件 1 export JAVA_HOME= /usr/lib/jvm/jdk1 .7.0_80 #只改了这一行 编辑 core-site.xml 文件 1 2 3 4 5 6 7 8 9 10 <configuration> <property> <name>fs.defaultFS< /name > <value>hdfs: //spark1 :9000< /value > < /property > <property> <name>hadoop.tmp. dir < /name > <value> /usr/local/hadoop/hdfs/tmp < /value > < /property > < /configuration > 编辑hdfs-site.xml 文件 1 2 3 4 5 6 7 8 9 10 11 12 13 14 <configuration> <property> <name>dfs.namenode.name. dir < /name > <value> /usr/local/hadoop/hdfs/name < /value > < /property > <property> <name>dfs.datanode.data. dir < /name > <value> /usr/local/hadoop/hdfs/data < /value > < /property > <property> <name>dfs.replication< /name > <value>3< /value > < /property > < /configuration > 编辑mapred-site.xml 文件 1 2 3 4 5 6 <configuration> <property> <name>mapreduce.framework.name< /name > <value>yarn< /value > < /property > < /configuration > 编辑yarn-site.xml 文件 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 <configuration> <property> <name>yarn.nodemanager.aux-services< /name > <value>mapreduce_shuffle< /value > < /property > <property> <name>yarn.resourcemanager. hostname < /name > <value>spark1< /value > < /property > <!--property> 别添加这个属性,添加了可能出现下面的错误: Problembindingto[spark1:0]java.net.BindException:Cannotassignrequestedaddress <name>yarn.nodemanager. hostname < /name > <value>spark1< /value > < /property-- > < /configuration > 上面相关文件的具体属性及值在官网查询: https://hadoop.apache.org/docs/r2.7.3/ 编辑masters 文件 1 echo spark1>masters 编辑 slaves 文件 1 2 3 spark1 spark2 spark3 安装好后,使用rsync 把相关目录及/etc/profile同步过去即可 启动hadoop dfs 1 . /sbin/start-dfs .sh 初始化文件系统 1 hadoopnamenode- format 启动 yarn 1 . /sbin/start-yarn .sh 检查spark1相关进程 1 2 3 4 5 6 7 root@spark1: /usr/local/spark/conf #jps 1699NameNode 8856Jps 2023SecondaryNameNode 2344NodeManager 1828DataNode 2212ResourceManager spark2 spark3 也要类似下面的运程 1 2 3 4 root@spark2: /tmp #jps 3238Jps 1507DataNode 1645NodeManager 可以打开web页面查看 1 http: //192 .168.100.25:50070 测试hadoop 1 2 3 4 hadoopfs- mkdir /testin hadoopfs-put~ /str .txt /testin cd /usr/local/hadoop hadoopjar. /share/hadoop/mapreduce/hadoop-mapreduce-examples-2 .7.3.jarwordcount /testin/str .txttestout 结果如下: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 hadoopjar. /share/hadoop/mapreduce/hadoop-mapreduce-examples-2 .7.3.jarwordcount /testin/str .txttestout 17 /02/24 11:20:59INFOclient.RMProxy:ConnectingtoResourceManageratspark1 /192 .168.100.25:8032 17 /02/24 11:21:01INFOinput.FileInputFormat:Totalinputpathstoprocess:1 17 /02/24 11:21:01INFOmapreduce.JobSubmitter:numberofsplits:1 17 /02/24 11:21:02INFOmapreduce.JobSubmitter:Submittingtokens for job:job_1487839487040_0002 17 /02/24 11:21:06INFOimpl.YarnClientImpl:Submittedapplicationapplication_1487839487040_0002 17 /02/24 11:21:06INFOmapreduce.Job:Theurltotrackthejob:http: //spark1 :8088 /proxy/application_1487839487040_0002/ 17 /02/24 11:21:06INFOmapreduce.Job:Runningjob:job_1487839487040_0002 17 /02/24 11:21:28INFOmapreduce.Job:Jobjob_1487839487040_0002running in ubermode: false 17 /02/24 11:21:28INFOmapreduce.Job:map0%reduce0% 17 /02/24 11:22:00INFOmapreduce.Job:map100%reduce0% 17 /02/24 11:22:15INFOmapreduce.Job:map100%reduce100% 17 /02/24 11:22:17INFOmapreduce.Job:Jobjob_1487839487040_0002completedsuccessfully 17 /02/24 11:22:17INFOmapreduce.Job:Counters:49 FileSystemCounters FILE:Numberofbytes read =212115 FILE:Numberofbyteswritten=661449 FILE:Numberof read operations=0 FILE:Numberoflarge read operations=0 FILE:Numberofwriteoperations=0 HDFS:Numberofbytes read =377966 HDFS:Numberofbyteswritten=154893 HDFS:Numberof read operations=6 HDFS:Numberoflarge read operations=0 HDFS:Numberofwriteoperations=2 JobCounters Launchedmaptasks=1 Launchedreducetasks=1 Data- local maptasks=1 Total time spentbyallmaps in occupiedslots(ms)=23275 Total time spentbyallreduces in occupiedslots(ms)=11670 Total time spentbyallmaptasks(ms)=23275 Total time spentbyallreducetasks(ms)=11670 Totalvcore-millisecondstakenbyallmaptasks=23275 Totalvcore-millisecondstakenbyallreducetasks=11670 Totalmegabyte-millisecondstakenbyallmaptasks=23833600 Totalmegabyte-millisecondstakenbyallreducetasks=11950080 Map-ReduceFramework Mapinputrecords=1635 Mapoutputrecords=63958 Mapoutputbytes=633105 Mapoutputmaterializedbytes=212115 Input split bytes=98 Combineinputrecords=63958 Combineoutputrecords=14478 Reduceinput groups =14478 Reduceshufflebytes=212115 Reduceinputrecords=14478 Reduceoutputrecords=14478 SpilledRecords=28956 ShuffledMaps=1 FailedShuffles=0 MergedMapoutputs=1 GC time elapsed(ms)=429 CPU time spent(ms)=10770 Physicalmemory(bytes)snapshot=455565312 Virtualmemory(bytes)snapshot=1391718400 Totalcommittedheapusage(bytes)=277348352 ShuffleErrors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 FileInputFormatCounters BytesRead=377868 FileOutputFormatCounters BytesWritten=154893 3: 安装 scala 1 2 tar xvfscala-2.11.8.tgz mv scala-2.11.8 /usr/local/scala 添加环境变量 1 2 3 4 cat >> /etc/profile <<EOF export SCALA_HOME= /usr/local/scala export PATH=$PATH:$SCALA_HOME /bin EOF 测试 1 2 3 source /etc/profile scala-version Scalacoderunnerversion2.11.8--Copyright2002-2016,LAMP /EPFL 4: 安装 spark 1 2 tar xvfspark-2.1.0-bin-hadoop2.7.tgz mv spark-2.1.0-bin-hadoop2.7 /usr/local/spark 添加环境变量 1 2 3 4 5 cat >> /etc/profile <<EOF export SPARK_HOME= /usr/local/spark export PATH=$PATH:$SPARK_HOME /bin export LD_LIBRARY_PATH=$HADOOP_HOME /lib/native EOF 1 2 3 export LD_LIBRARY_PATH=$HADOOP_HOME /lib/native #这一条不添加的话在运行spark-shell时会出现下面的错误 NativeCodeLoader:Unabletoloadnative-hadooplibrary for yourplatform...using builtin -javaclasseswhereapplicable 编辑 spark-env.sh 1 2 SPARK_MASTER_HOST=spark1 HADOOP_CONF_DIR= /usr/locad/hadoop/etc/hadoop 编辑 slaves 1 2 3 spark1 spark2 spark3 启动 spark 1 . /sbin/start-all .sh 此时在spark1上运行jps应该如下, 多了 Master 和 Worker 1 2 3 4 5 6 7 8 9 root@spark1: /usr/local/spark/conf #jps 1699NameNode 8856Jps 7774Master 2023SecondaryNameNode 7871Worker 2344NodeManager 1828DataNode 2212ResourceManager spark2 和 spark3 则多了 Worker 1 2 3 4 5 root@spark2: /tmp #jps 3238Jps 1507DataNode 1645NodeManager 3123Worker 可以打开web页面查看 1 http: //192 .168.100.25:8080/ 运行 spark-shell 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 root@spark1: /usr/local/spark/conf #spark-shell UsingSpark'sdefaultlog4jprofile:org /apache/spark/log4j-defaults .properties Settingdefaultloglevelto "WARN" . Toadjustlogginglevelusesc.setLogLevel(newLevel).ForSparkR,usesetLogLevel(newLevel). 17 /02/24 11:55:46WARNSparkContext:Support for Java7isdeprecatedasofSpark2.0.0 17 /02/24 11:56:17WARNObjectStore:Failedtogetdatabaseglobal_temp,returningNoSuchObjectException SparkcontextWebUIavailableathttp: //192 .168.100.25:4040 Sparkcontextavailableas 'sc' (master= local [*],app id = local -1487908553475). Sparksessionavailableas 'spark' . Welcometo ______ /__ /__ ________/ /__ _\\/_\/_`/__/'_/ /___/ .__/\_,_ /_/ /_/ \_\version2.1.0 /_/ UsingScalaversion2.11.8(JavaHotSpot(TM)64-BitServerVM,Java1.7.0_80) Type in expressionstohavethemevaluated. Type:help for more information. scala>:help 此时可以打开spark 查看 1 http: //192 .168.100.25:4040 /environment/ spark 测试 1 2 3 run-exampleorg.apache.spark.examples.SparkPi 17 /02/28 11:17:20INFODAGScheduler:Job0finished:reduceatSparkPi.scala:38,took3.491241s Piisroughly3.1373756868784346 至此完成. 本文转自 nonono11 51CTO博客,原文链接:http://blog.51cto.com/abian/1900868,如需转载请自行联系原作者