Kubernetes (k8s) Basic Concepts


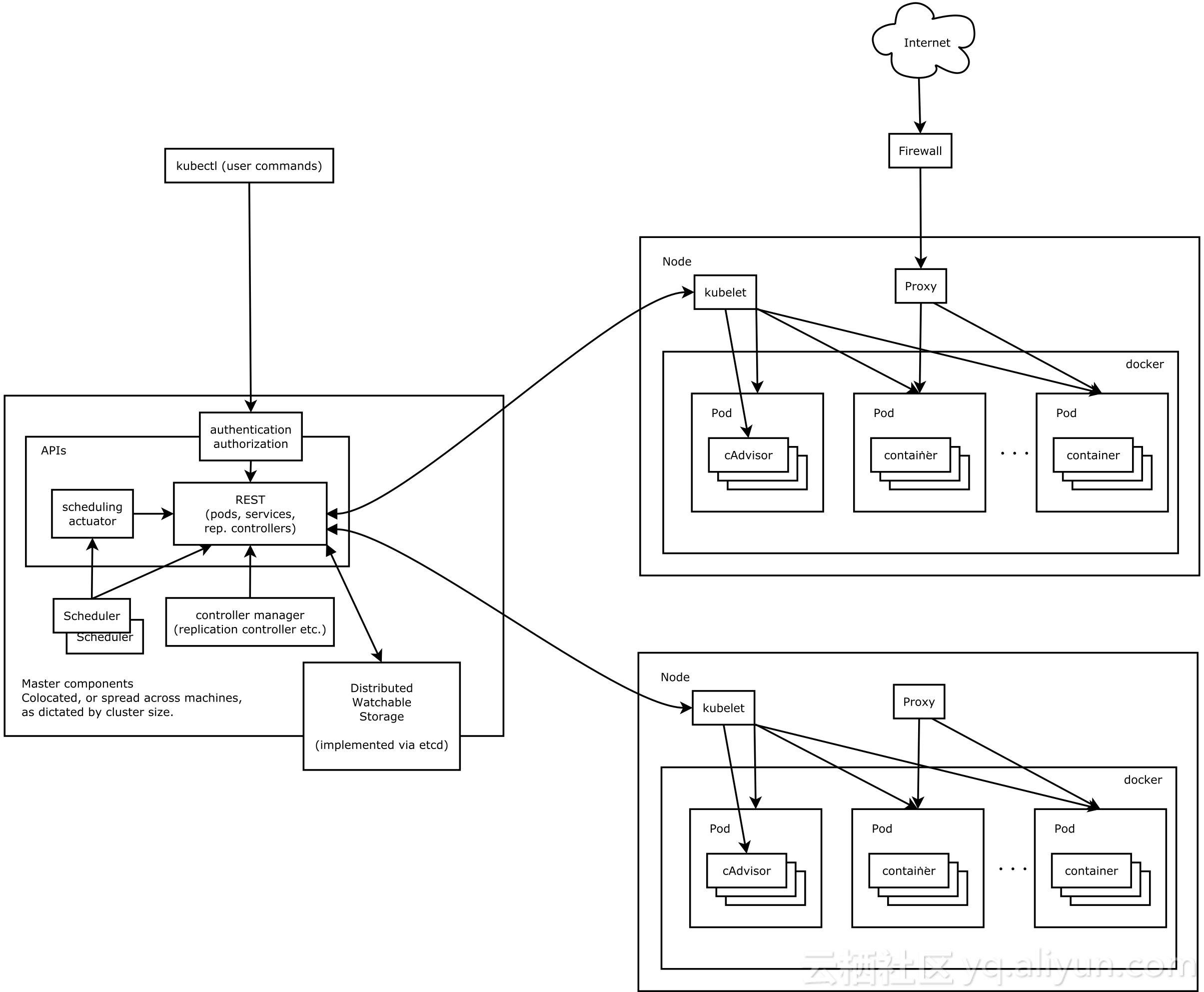

Master

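1. The management node of a k8s cluster. It manages the cluster and provides the access point to the cluster's resource data.

2. Hosts the etcd storage service (optional).

3. Runs the API Server, Controller Manager, and Scheduler processes, and is associated with the worker Nodes.

4. The Kubernetes API Server is the key service process that exposes the HTTP REST interface. It is the sole entry point for create, delete, update, and query operations on every Kubernetes resource, and it is also the entry process for cluster control.

5. The Kubernetes Controller Manager is the automated control center for all Kubernetes resource objects.

6. The Kubernetes Scheduler is the process responsible for resource scheduling (Pod scheduling).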

Node

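A Node is a worker machine in the Kubernetes cluster architecture that runs Pods (also called an agent or minion).

A Node is the unit of operation in a Kubernetes cluster. It carries the Pods assigned to it and is the host on which those Pods run.

Each Node is associated with the Master and has a name, an IP address, and system resource information.

It runs the Docker Engine service, the kubelet daemon, and the kube-proxy load balancer.

Every Node runs the following set of key processes:

kubelet: creates, starts, and stops the containers that belong to a Pod

kube-proxy: a key component that implements the communication and load-balancing mechanism of Kubernetes Services

Docker Engine (Docker): creates and manages containers on the local machine

Nodes can be added to a Kubernetes cluster dynamically at run time. By default the kubelet registers itself with the Master, which is also the Node management approach Kubernetes recommends. The kubelet then reports its status to the Master periodically, including the operating system, the Docker version, CPU and memory, and which Pods are running. This lets the Master know the resource usage of every Node and schedule resources efficiently and evenly.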

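A Node can also be seen as a logical concept in a k8s cluster that records information about a physical or virtual machine that has joined the cluster:

Node address: the host's IP address, or its node ID

Node phase: one of Pending, Running, or Terminated.

Node Condition: describes the condition of a Node in the Running phase. Currently there is only one, Ready, which means the Node is healthy and can accept Pod-creation instructions from the Master.

Node capacity: describes the system resources available on the Node, including CPU, maximum available memory, and the number of Pods that can be scheduled.

Other: additional information about the Node, such as the kernel version, Kubernetes version, Docker version, and operating system name.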

Pod

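A Pod runs on a Node and is a group of related containers.

The containers in a Pod run on the same host and share the same network namespace, IP address, and port space, so they can communicate through localhost.

The Pod is the smallest unit that Kubernetes creates, schedules, and manages. It provides a higher-level abstraction than a container, which makes deployment and management more flexible.

A Pod can contain one container or several related containers. Its application containers share a set of resources: the PID namespace, network namespace, IPC namespace, UTS namespace, and Volumes (shared storage volumes).

PID namespace: applications in the Pod can see the process IDs of the other applications

Network namespace: the containers in the Pod have access to the same IP address and port range.

IPC namespace: the containers in the Pod can communicate using System V IPC or POSIX message queues

UTS namespace: the containers in the Pod share one hostname

Volumes (shared storage volumes): every container in the Pod can access the Volumes defined at the Pod level

There are actually two kinds of Pod: regular Pods and static Pods. A static Pod is special: it is not stored in Kubernetes' etcd store but in a specific file on a specific Node, and it is started only on that Node. A regular Pod is written to etcd as soon as it is created. The Kubernetes Master then schedules it to a specific Node and binds it there, and the kubelet on that Node instantiates the Pod as a group of related Docker containers and starts them. By default, when a container in a Pod stops, Kubernetes detects the problem and restarts the Pod (that is, restarts all containers in the Pod). If the Node hosting the Pod goes down, all Pods on that Node are rescheduled to other nodes.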

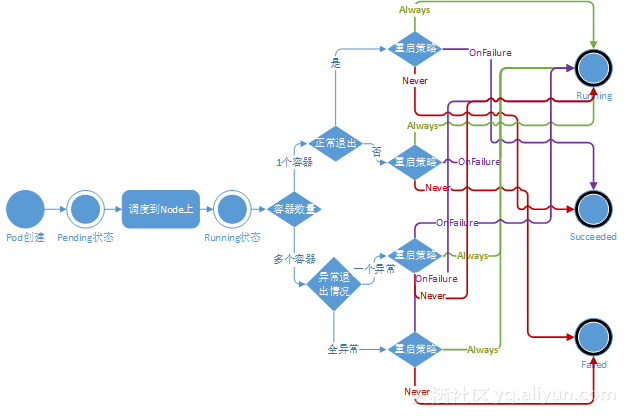

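Pod Lifecycle

The number and meaning of Pod phases are strictly defined. Do not assume that a Pod can have any other phase value.

The possible values of phase are:

Pending: the Pod has been accepted by the Kubernetes system, but one or more of its container images has not been created yet. This includes the time spent scheduling the Pod and the time spent downloading images over the network, which can take a while.

Running: the Pod has been bound to a node and all of its containers have been created. At least one container is running, or is starting or restarting.

Succeeded: all containers in the Pod have terminated successfully and will not be restarted.

Failed: all containers in the Pod have terminated, and at least one of them terminated in failure. That is, the container exited with a non-zero status or was terminated by the system.

Unknown: the state of the Pod could not be obtained for some reason, usually because communication with the Pod's host failed.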
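
The phase rules above can be read as a small decision procedure. The following Python sketch is illustrative only: the container state names (`not_created`, `running`, `restarting`, `exited_ok`, `exited_error`) and the `derive_phase` helper are hypothetical and not part of any Kubernetes API, and the sketch ignores restart policies.

```python
from enum import Enum
from typing import List


class PodPhase(str, Enum):
    PENDING = "Pending"
    RUNNING = "Running"
    SUCCEEDED = "Succeeded"
    FAILED = "Failed"
    UNKNOWN = "Unknown"


def derive_phase(container_states: List[str], node_reachable: bool = True) -> PodPhase:
    """Map simplified (hypothetical) container states to a Pod phase."""
    # The Pod's host cannot be contacted, so its state cannot be obtained.
    if not node_reachable:
        return PodPhase.UNKNOWN
    # At least one container has not been created yet (or none are known).
    if not container_states or "not_created" in container_states:
        return PodPhase.PENDING
    # All containers exist and at least one is running or (re)starting.
    if any(s in ("running", "restarting") for s in container_states):
        return PodPhase.RUNNING
    # Every container has terminated: one failure makes the whole Pod Failed.
    if "exited_error" in container_states:
        return PodPhase.FAILED
    return PodPhase.SUCCEEDED
```

For example, `derive_phase(["running", "exited_ok"])` yields `PodPhase.RUNNING`, because one container is still running even though another has already finished.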


Replication Controller

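On the Master, the Controller Manager uses the Replication Controller (RC) to start, stop, and monitor Pods.

A Replication Controller manages Pod replicas and guarantees that the specified number of replicas exists in the cluster. If the cluster holds more replicas than specified, the surplus Pods are stopped. If it holds fewer, enough new Pods are started to make up the difference, so the count stays constant.

The Replication Controller is the core mechanism behind elastic scaling, dynamic scale-out, and rolling upgrades.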
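
The replica-count rule above amounts to comparing a desired number with an observed number. The sketch below shows that comparison in Python. The `reconcile` function is a hypothetical name for illustration, not the controller's real implementation, which also has to select which Pods to stop.

```python
from typing import Tuple


def reconcile(desired: int, running: int) -> Tuple[int, int]:
    """Return (pods_to_start, pods_to_stop) so that running ends up equal to desired."""
    if desired < 0 or running < 0:
        raise ValueError("replica counts must be non-negative")
    diff = desired - running
    # A positive diff means Pods are missing; a negative diff means there is a surplus.
    return (max(diff, 0), max(-diff, 0))
```

With 2 Pods running and 4 desired, `reconcile(4, 2)` returns `(2, 0)`, which corresponds to what happens after a command such as `kubectl scale rc nginx-controller --replicas=4`.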

Service

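A Service defines a logical set of Pods and a policy for accessing that set. It is an abstraction of a real service. A Service provides a single access point together with service proxying and discovery, and it is associated with multiple Pods that carry the same Label. Users do not need to know how the backend Pods run.

To understand how external systems access a Service, first get clear about the three kinds of IP in Kubernetes:

Node IP: the IP address of a Node. It is the address of the node's physical network interface, and all servers on that network can communicate with each other directly over it. It follows that a machine outside the Kubernetes cluster must use the Node IP when it accesses a node or a TCP/IP service inside the cluster.

Pod IP: the IP address of a Pod. Docker Engine assigns it from the address range of the docker0 bridge, and it usually belongs to a virtual layer-2 network.

Cluster IP: the IP address of a Service. It is a virtual IP in Kubernetes, and it behaves more like a "fake" IP network, for the following reasons:

The Cluster IP applies only to Kubernetes Service objects, and Kubernetes manages and allocates the addresses.

The Cluster IP cannot be pinged, because there is no "physical network object" to respond.

The Cluster IP forms a concrete communication endpoint only in combination with a Service Port. A Cluster IP on its own cannot carry traffic, and these endpoints belong to the closed space of the Kubernetes cluster.

Inside a Kubernetes cluster, traffic between the Node IP network, the Pod IP network, and the Cluster IP network follows special routing rules that Kubernetes programs itself.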
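
The point that a Cluster IP is only meaningful together with a Service Port can be sketched as a lookup table keyed by the (Cluster IP, port) pair. This is a simplified model and not how kube-proxy is implemented: the `ServiceTable` class is hypothetical, the round-robin choice of backend is an assumption, and the addresses are example values.

```python
from itertools import cycle
from typing import Dict, Iterator, List, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class ServiceTable:
    """Toy forwarding table: (Cluster IP, Service Port) -> rotating Pod endpoints."""

    def __init__(self) -> None:
        self._backends: Dict[Endpoint, Iterator[Endpoint]] = {}

    def register(self, cluster_ip: str, port: int, pods: List[Endpoint]) -> None:
        if not pods:
            raise ValueError("a Service needs at least one Pod endpoint")
        # cycle() hands out the endpoints in round-robin order (an assumption).
        self._backends[(cluster_ip, port)] = cycle(list(pods))

    def resolve(self, cluster_ip: str, port: int) -> Endpoint:
        # Raises KeyError when the pair is unknown: the IP alone is not routable.
        return next(self._backends[(cluster_ip, port)])
```

Registering `("10.110.198.202", 80)` with two Pod endpoints and calling `resolve` repeatedly alternates between them, while resolving the same Cluster IP on an unregistered port raises `KeyError`.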

Label

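Any API object in Kubernetes can be identified through Labels. A Label is essentially a key/value pair whose key and value are chosen by the user. Labels can be attached to all kinds of resource objects, such as Nodes, Pods, Services, and RCs. A resource object can define any number of Labels, and the same Label can be added to any number of resource objects. Labels are the foundation on which Replication Controllers and Services operate: both use Labels to associate themselves with the Pods running on Nodes.

By attaching one or more different Labels to a resource object, we can group resources along several dimensions, which makes resource allocation, scheduling, configuration, and other management work flexible and convenient.

Some commonly used Labels:

Release labels: "release":"stable", "release":"canary" ...

Environment labels: "environment":"dev", "environment":"qa", "environment":"production"

Tier labels: "tier":"frontend", "tier":"backend", "tier":"middleware"

Partition labels: "partition":"customerA", "partition":"customerB"

Quality-control labels: "track":"daily", "track":"weekly"

A Label works like the tags we are all familiar with. Defining a Label on a resource object is like sticking a tag on it. Afterwards a Label Selector can query and filter the resource objects that carry certain Labels. In this way Kubernetes implements a simple, general object query mechanism similar to SQL.

Important uses of Label Selectors in Kubernetes:

The kube-controller-manager process uses the Label Selector defined on an RC to select the Pod replicas it monitors, which turns keeping the replica count at the expected value into a fully automatic control loop

The kube-proxy process uses a Service's Label Selector to select the matching Pods and automatically builds the request-forwarding table from each Service to its Pods, which is how Services achieve intelligent load balancing

By defining specific Labels on certain Nodes and using the nodeSelector scheduling policy in a Pod definition file, the kube-scheduler process can schedule Pods to designated Nodes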
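
The selection described above can be sketched as an equality-based match: an object is selected when it carries every key/value pair named in the selector. The Python below is a minimal illustration. The `matches` and `select` helpers and the Pod names are hypothetical, and set-based selector expressions are not covered.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """True when every key/value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: Labels, objects: Dict[str, Labels]) -> List[str]:
    """Return the sorted names of the objects whose labels satisfy the selector."""
    return sorted(name for name, labels in objects.items() if matches(selector, labels))


pods = {
    "web-1": {"tier": "frontend", "release": "stable"},
    "web-2": {"tier": "frontend", "release": "canary"},
    "db-1": {"tier": "backend", "release": "stable"},
}
frontend_stable = select({"tier": "frontend", "release": "stable"}, pods)
```

Note that in this sketch an empty selector matches every object, because there is no pair that could fail to match.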

Volume

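A Volume is a shared directory in a Pod that can be accessed by multiple containers. Kubernetes Volumes are similar to Docker Volumes, but not identical.

A Kubernetes Volume has the same lifecycle as its Pod and is independent of the lifecycle of any container. When a container terminates or restarts, the data in the Volume is not lost.

Kubernetes supports many types of Volume, and a Pod can use any number of Volumes at the same time.

1) EmptyDir: created when the Pod is assigned to a Node, with initially empty content. When the Pod is removed from the Node, the data in the EmptyDir is deleted as well.

2) HostPath: mounts a file or directory from the host into the Pod.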

3) GCE Persistent Disk

4) AWS Elastic Block Store

5) NFS

6) iSCSI

7) Flocker

8) GlusterFS

9) RBD

10)Git Repo

11)Secret

12)Persistent Volume Claim

13)Downward API

Namespace

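Namespace is another very important concept in Kubernetes. Assigning the objects in the system to different Namespaces forms logical groups (projects, teams, or user groups), so that the groups can share the resources of the whole cluster while still being managed separately.

After a Kubernetes cluster starts, it creates a Namespace named default. Pods, RCs, and Services that do not specify a namespace are placed in the default namespace.

Organizing Kubernetes objects by Namespace makes it possible to group users, that is, to implement multi-tenancy.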

kubectl get namespaces

kubectl get pods --namespace=xxx

Annotation

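Like a Label, an Annotation is defined as a key/value pair.

Whereas Labels follow strict rules, an Annotation is arbitrary "additional" information defined by the user so that it can be looked up later.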

Installing Kubernetes

CentOS Linux release 7.5.1804 (Core)

Docker version 18.06.1-ce, build e68fc7a

Install kubeadm version 1.12.1

# Set the hostname, disable SELinux, stop the firewall, and reboot

hostnamectl set-hostname master

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

systemctl stop firewalld

systemctl disable firewalld

reboot

# Configure local hostname resolution in /etc/hosts

echo "192.168.31.105 master

192.168.31.106 node01

192.168.31.107 node02" >> /etc/hosts

# Add the docker-ce yum repository

echo '[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://download.docker.com/linux/centos/7/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://download.docker.com/linux/centos/gpg'>/etc/yum.repos.d/docker-ce.repo

# Install docker-ce and enable it at boot

yum list docker-ce --showduplicates|grep "^doc"|sort -r

yum -y install docker-ce-18.06.1.ce-3.el7

systemctl start docker

systemctl enable docker

# Enable IP forwarding

echo "net.ipv4.ip_forward = 1">>/etc/sysctl.conf

sysctl -p

# Configure the Kubernetes yum repository

echo '[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg'>/etc/yum.repos.d/kubernetes.repo

# The images on k8s.gcr.io (Google) cannot be downloaded from here, so pull them from Aliyun and re-tag them with their k8s.gcr.io names

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.12.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.12.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.12.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.12.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.24

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.2

# Re-tag the images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.2 k8s.gcr.io/coredns:1.2.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.24 k8s.gcr.io/etcd:3.2.24

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.12.1 k8s.gcr.io/kube-scheduler:v1.12.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.12.1 k8s.gcr.io/kube-controller-manager:v1.12.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.12.1 k8s.gcr.io/kube-apiserver:v1.12.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.12.1 k8s.gcr.io/kube-proxy:v1.12.1

# Install kubelet, kubeadm, and kubectl, pinned to the 1.12.1 release used in this guide

yum install -y kubelet-1.12.1 kubeadm-1.12.1 kubectl-1.12.1 --disableexcludes=kubernetes

# Tell the kubelet to tolerate swap, then turn swap off

vi /etc/sysconfig/kubelet

#add args to KUBELET_EXTRA_ARGS

KUBELET_EXTRA_ARGS=--fail-swap-on=false

swapoff -a

free -h

# To keep swap disabled after a reboot, comment out the swap entries in /etc/fstab

sed -i '/\sswap\s/ s/^[^#]/#&/' /etc/fstab
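
The intent of the fstab edit above is to prefix every active swap entry with `#` and leave everything else alone. The Python sketch below expresses the same rule on a string so it can be checked without touching a real system. The `comment_out_swap` helper and the sample fstab content are hypothetical.

```python
def comment_out_swap(fstab_text: str) -> str:
    """Prefix '#' to every uncommented fstab line whose filesystem type is swap."""
    result = []
    for line in fstab_text.splitlines():
        fields = line.split()
        already_commented = line.lstrip().startswith("#")
        # fstab fields: device, mount point, filesystem type, options, dump, pass
        if not already_commented and len(fields) >= 3 and fields[2] == "swap":
            line = "#" + line
        result.append(line)
    trailing_newline = "\n" if fstab_text.endswith("\n") else ""
    return "\n".join(result) + trailing_newline


sample = (
    "/dev/mapper/centos-root / xfs defaults 0 0\n"
    "/dev/mapper/centos-swap swap swap defaults 0 0\n"
)
patched = comment_out_swap(sample)
```

Running the function a second time on its own output changes nothing, which is the property to look for in any command that edits `/etc/fstab` in place.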

# Initialize the cluster

kubeadm init --kubernetes-version=1.12.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.31.105

# If initialization fails, run kubeadm reset and then initialize again

# Output of a successful initialization

[init] using Kubernetes version: v1.12.1

[preflight] running pre-flight checks

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.31.105]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [master localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.31.105 127.0.0.1 ::1]

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[certificates] Generated sa key and public key.

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 19.003253 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node master as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node master as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "master" as an annotation

[bootstraptoken] using token: ayy3kb.npks65qpznhb39w7

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.31.105:6443 --token ayy3kb.npks65qpznhb39w7 --discovery-token-ca-cert-hash sha256:c3d0f476fc857b6ee05e840bb4dd95799405fd9086b095284cdffb43382a3370

# If the join command is lost, the following command creates a new token and prints the full join command

kubeadm token create --print-join-command

I1027 01:37:16.239107 19974 version.go:89] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I1027 01:37:16.239173 19974 version.go:94] falling back to the local client version: v1.12.1

kubeadm join 192.168.31.105:6443 --token xv6nlv.pqq3gss1uisty93k --discovery-token-ca-cert-hash sha256:c3d0f476fc857b6ee05e840bb4dd95799405fd9086b095284cdffb43382a3370
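
Before pasting a join command onto a worker node, it can help to check that it was copied completely. The Python sketch below is a hypothetical helper, not part of kubeadm. It assumes the bootstrap token has the form of 6 characters, a dot, and 16 characters (lowercase letters and digits) and that the CA hash is `sha256:` followed by 64 hexadecimal digits, which matches the commands shown above.

```python
import re
import shlex
from typing import Dict

TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")
HASH_RE = re.compile(r"^sha256:[0-9a-f]{64}$")


def parse_join(command: str) -> Dict[str, str]:
    """Split a 'kubeadm join' command into endpoint, token, and CA cert hash."""
    args = shlex.split(command)
    if len(args) < 3 or args[:2] != ["kubeadm", "join"]:
        raise ValueError("not a kubeadm join command")
    rest = args[3:]
    # Pair up '--flag value' arguments that follow the endpoint.
    flags = dict(zip(rest[0::2], rest[1::2]))
    token = flags.get("--token", "")
    ca_hash = flags.get("--discovery-token-ca-cert-hash", "")
    if not TOKEN_RE.match(token):
        raise ValueError("token is missing or malformed")
    if not HASH_RE.match(ca_hash):
        raise ValueError("CA cert hash is missing or malformed")
    return {"endpoint": args[2], "token": token, "ca_cert_hash": ca_hash}


parsed = parse_join(
    "kubeadm join 192.168.31.105:6443 --token xv6nlv.pqq3gss1uisty93k "
    "--discovery-token-ca-cert-hash "
    "sha256:c3d0f476fc857b6ee05e840bb4dd95799405fd9086b095284cdffb43382a3370"
)
```

A truncated hash or token raises `ValueError`, which catches the most common copy-and-paste mistake.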

# For a non-root user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# For the root user

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

kubeadm join 172.31.3.11:6443 --token jlidhg.sczmdc5wnvgi5pir --discovery-token-ca-cert-hash sha256:74f5e3a3b1163fdafe9a634ff5ca81ea314a6895af18e03a5b482635b4ca93e0

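One problem came up along the way: every node was in the NotReady state because no network plugin had been installed. Installing flannel and then restarting the Docker engine (systemctl restart docker) fixed it.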

Ready False Sat, 27 Oct 2018 11:17:06 +0800 Sat, 27 Oct 2018 01:10:19 +0800 KubeletNotReady runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

cat /var/lib/kubelet/kubeadm-flags.env

kubectl get pod -n kube-system -o wide

kubectl get nodes

# Install flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Let the master node run workloads by removing the master taint that kubeadm added

kubectl taint nodes master node-role.kubernetes.io/master-

Final cluster state

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 11h v1.12.1

node01 Ready <none> 10h v1.12.1

node02 Ready <none> 10h v1.12.1

[root@master ~]# kubectl get pods --all-namespaces -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

kube-system coredns-576cbf47c7-2tsdz 1/1 Running 0 11h 10.244.1.3 node01 <none>

kube-system coredns-576cbf47c7-87cvn 1/1 Running 0 11h 10.244.1.2 node01 <none>

kube-system etcd-master 1/1 Running 1 18m 192.168.31.105 master <none>

kube-system kube-apiserver-master 1/1 Running 1 18m 192.168.31.105 master <none>

kube-system kube-controller-manager-master 1/1 Running 1 18m 192.168.31.105 master <none>

kube-system kube-flannel-ds-amd64-76z5p 1/1 Running 0 20m 192.168.31.107 node02 <none>

kube-system kube-flannel-ds-amd64-7kw2n 1/1 Running 1 20m 192.168.31.105 master <none>

kube-system kube-flannel-ds-amd64-f45t2 1/1 Running 0 20m 192.168.31.106 node01 <none>

kube-system kube-proxy-mg62l 1/1 Running 0 10h 192.168.31.107 node02 <none>

kube-system kube-proxy-n7njk 1/1 Running 1 11h 192.168.31.105 master <none>

kube-system kube-proxy-ws6v2 1/1 Running 0 10h 192.168.31.106 node01 <none>

kube-system kube-scheduler-master 1/1 Running 2 18m 192.168.31.105 master <none>
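
The Pod IPs in the listing above (10.244.1.x on node01) come from the `--pod-network-cidr=10.244.0.0/16` range passed to `kubeadm init`. The Python sketch below shows how that range divides into per-node subnets, assuming each node receives one /24 (the `--node-cidr-mask-size=24` value that appears in the kube-controller-manager manifest). Which node gets which subnet is decided by the cluster, so the helper names and the ordering here are illustrative only.

```python
import ipaddress
from itertools import islice
from typing import List


def node_pod_subnets(cluster_cidr: str, node_mask: int, count: int) -> List[str]:
    """Return the first `count` per-node subnets carved out of the cluster CIDR."""
    network = ipaddress.ip_network(cluster_cidr)
    return [str(subnet) for subnet in islice(network.subnets(new_prefix=node_mask), count)]


def pod_subnet(pod_ip: str, cluster_cidr: str, node_mask: int) -> str:
    """Return the per-node subnet that a Pod IP falls into."""
    if ipaddress.ip_address(pod_ip) not in ipaddress.ip_network(cluster_cidr):
        raise ValueError("Pod IP is outside the cluster CIDR")
    # strict=False masks off the host bits of the Pod address.
    return str(ipaddress.ip_network(f"{pod_ip}/{node_mask}", strict=False))


first_three = node_pod_subnets("10.244.0.0/16", 24, 3)
coredns_subnet = pod_subnet("10.244.1.3", "10.244.0.0/16", 24)
```

A /16 split into /24 subnets gives 256 node ranges of 256 addresses each, which bounds both the number of nodes and the number of Pod addresses per node under these settings.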

wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

[root@master ~]# grep image kubernetes-dashboard.yaml

image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0 k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0

kubectl create -f kubernetes-dashboard.yaml

# Scale out an RC

kubectl scale rc nginx-controller --replicas=4

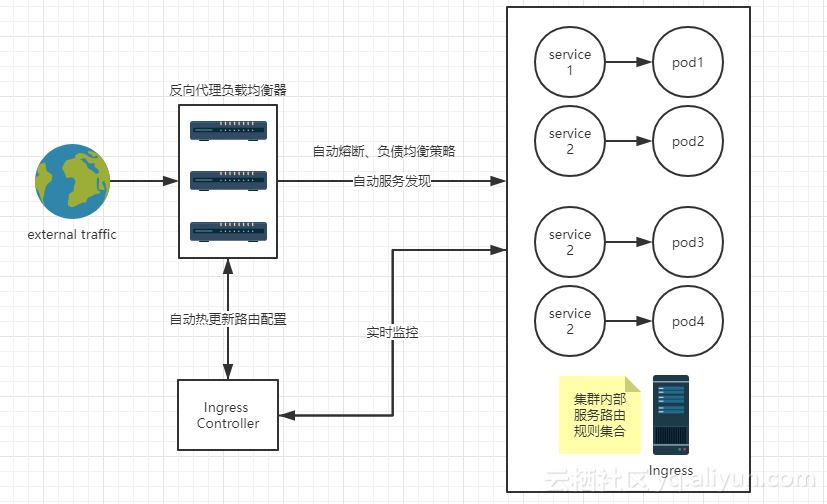

Ingress

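Before Kubernetes 1.2 there were two ways to expose a service externally:

- LoadBalancer Service

- NodePort Service

With NodePort, the number of port mappings grows as more services are added, and managing those ports becomes a real burden.

LoadBalancer usually integrates with cloud platforms such as GCE, Aliyun, or OpenStack. Because it is tightly coupled to the cloud platform, it can be used only on certain platforms, which makes later migration difficult.

To solve the exposure problem while avoiding both NodePort's port management and LoadBalancer's cloud coupling, Kubernetes added Ingress in version 1.2. With Ingress, users can expose services through an open-source reverse-proxy load balancer such as nginx.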

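1. What problems does Ingress solve?

- Dynamic service configuration

In the traditional approach, adding a new service may require adding a reverse proxy at the traffic entry point that points to the new k8s service. With Ingress, you only configure the service. When the service starts, it is registered with the Ingress automatically and no extra steps are needed.

- Fewer unnecessarily exposed ports

Anyone who has set up k8s knows that the first step is to turn off the firewall, mainly because many k8s services are mapped out through NodePort. That punches many holes in the host, which is neither secure nor elegant. Ingress avoids this: apart from the Ingress service itself, which may need to be mapped out, no other service needs NodePort. An Ingress is an API resource object in a k8s cluster that plays the role of an edge router. It can also be thought of as the cluster's firewall or gateway. Custom routing rules forward, manage, and expose services (groups of Pods), which is very flexible, and this approach is recommended for production. LoadBalancer can also expose services, but it requires requesting a load balancer from the cloud platform. Many cloud platforms support this today, but the approach is tightly coupled to the platform.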

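2. Using Ingress usually involves three components: a reverse-proxy load balancer, an Ingress Controller, and the Ingress itself

- Reverse-proxy load balancer

The reverse-proxy load balancer is simple: it is nginx, apache, or similar. It can be deployed freely in the cluster using a Replication Controller, Deployment, DaemonSet, and so on. I prefer a DaemonSet because it feels more convenient.

- Ingress Controller

An Ingress Controller is essentially a watcher. It talks to the Kubernetes API continuously and notices changes to backend Services and Pods in real time, such as Pods or Services being added or removed. When it receives such a change, it combines it with the Ingress described next to generate configuration, then updates the reverse-proxy load balancer and reloads its configuration, which achieves service discovery.

- Ingress

An Ingress is simply a rule definition. For example, a rule says that a certain domain name maps to a certain Service, so a request for that domain is forwarded to that Service. The Ingress Controller picks up the rule and writes it into the load balancer's configuration dynamically, which yields service discovery and load balancing as a whole.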
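
The rule idea described above, where a host name maps to a Service and anything else falls through to a default backend, can be sketched as a dictionary lookup. The Python below is a simplified model and not the way an Ingress Controller or nginx is implemented. The `route` helper is hypothetical, and the host and Service names are example values.

```python
from typing import Dict, Optional, Tuple

Backend = Tuple[str, int]  # (Service name, Service port)

DEFAULT_BACKEND: Backend = ("default-http-backend", 80)


def route(rules: Dict[str, Backend], host: Optional[str]) -> Backend:
    """Pick the backend Service for a request based on its Host header."""
    if host is None:
        return DEFAULT_BACKEND
    # Host names are case-insensitive, so normalise before the lookup.
    return rules.get(host.lower(), DEFAULT_BACKEND)


rules = {"feiutest.cn": ("test-ingress", 80)}
matched = route(rules, "feiutest.cn")
unmatched = route(rules, "unknown.example")
```

A request whose Host header matches a rule reaches the named Service, and every other request lands on the default backend, which typically answers with a 404.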


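Implementing Ingress with ingress-nginx

Aliyun Docker image registry search: https://dev.aliyun.com/search.html

# According to the ingress-nginx configuration file on GitHub, the images below have to be downloaded from k8s.gcr.io and quay.io. Pull them from the Aliyun Docker registry in advance and re-tag them locally with their original names. The configuration file is at https://github.com/kubernetes/ingress-nginx/blob/master/deploy/mandatory.yaml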

docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5

docker tag registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5 k8s.gcr.io/defaultbackend-amd64:1.5

docker image rm registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5

docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0

docker tag registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0 quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.20.0

docker image rm registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.20.0/deploy/mandatory.yaml

mv mandatory.yaml ingress-nginx-mandatory.yaml

kubectl apply -f ingress-nginx-mandatory.yaml

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/provider/baremetal/service-nodeport.yaml

kubectl apply -f service-nodeport.yaml

# At this point the nginx ingress controller and the ingress-nginx Service have been created, and ingress-nginx is exposed as follows (NodePort 10.111.38.64 <none> 80:30412/TCP,443:31488/TCP).

[root@master ~]# kubectl get deployment,svc,rc,pod,ing -o wide -n ingress-nginx

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.extensions/default-http-backend 1 1 1 1 14h default-http-backend k8s.gcr.io/defaultbackend-amd64:1.5 app.kubernetes.io/name=default-http-backend,app.kubernetes.io/part-of=ingress-nginx

deployment.extensions/nginx-ingress-controller 1 1 1 1 14h nginx-ingress-controller quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.20.0 app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/default-http-backend ClusterIP 10.108.66.33 <none> 80/TCP 14h app.kubernetes.io/name=default-http-backend,app.kubernetes.io/part-of=ingress-nginx

service/ingress-nginx NodePort 10.111.38.64 <none> 80:30412/TCP,443:31488/TCP 6m5s app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

pod/default-http-backend-85b8b595f9-pcdjb 1/1 Running 0 14h 10.244.1.10 node01 <none>

pod/nginx-ingress-controller-866568b999-sqvmk 1/1 Running 0 14h 10.244.2.17 node02 <none>

# Create the application service. The local file name is nginx.yaml

kubectl apply -f nginx.yaml

[root@master ~]# cat nginx.yaml

apiVersion: v1
kind: Service
metadata:
  name: test-ingress
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: test-ingress
---
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: test-ingress
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: test-ingress
    spec:
      containers:
      - image: nginx:latest
        imagePullPolicy: IfNotPresent
        name: test-nginx
        ports:
        - containerPort: 80

# As shown below, the locally created Service (ClusterIP 10.110.198.202 <none> 80/TCP) is not exposed externally.

[root@master ~]# kubectl get deployment,svc,rc,pod,ing -o wide -n default

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.extensions/test-ingress 1 1 1 1 73m test-nginx nginx:latest app=test-ingress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 26h <none>

service/test-ingress ClusterIP 10.110.198.202 <none> 80/TCP 73m app=test-ingress

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

pod/test-ingress-78fc77444f-5pvn4 1/1 Running 0 73m 10.244.1.11 node01 <none>

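# Next, create the Ingress object for this Service. The ingress-nginx controller (service/ingress-nginx in the ingress-nginx namespace) watches Ingress objects and updates the nginx configuration accordingly. The local file name is nginx-ingress.yaml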

[root@master ~]# cat nginx-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: feiutest.cn
    http:
      paths:
      - path:
        backend:
          serviceName: test-ingress
          servicePort: 80

# The final objects in the Kubernetes cluster

[root@master ~]# kubectl get deployment,svc,rc,pod,ing -o wide -n default

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.extensions/test-ingress 1 1 1 1 86m test-nginx nginx:latest app=test-ingress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 27h <none>

service/test-ingress ClusterIP 10.110.198.202 <none> 80/TCP 86m app=test-ingress

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

pod/test-ingress-78fc77444f-5pvn4 1/1 Running 0 86m 10.244.1.11 node01 <none>

# The Ingress object that was created

NAME HOSTS ADDRESS PORTS AGE

ingress.extensions/test-ingress feiutest.cn 80 85m

[root@master ~]# kubectl get deployment,svc,rc,pod,ing -o wide -n ingress-nginx

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.extensions/default-http-backend 1 1 1 1 14h default-http-backend k8s.gcr.io/defaultbackend-amd64:1.5 app.kubernetes.io/name=default-http-backend,app.kubernetes.io/part-of=ingress-nginx

deployment.extensions/nginx-ingress-controller 1 1 1 1 14h nginx-ingress-controller quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.20.0 app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/default-http-backend ClusterIP 10.108.66.33 <none> 80/TCP 14h app.kubernetes.io/name=default-http-backend,app.kubernetes.io/part-of=ingress-nginx

service/ingress-nginx NodePort 10.111.38.64 <none> 80:30412/TCP,443:31488/TCP 26m app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

pod/default-http-backend-85b8b595f9-pcdjb 1/1 Running 0 14h 10.244.1.10 node01 <none>

pod/nginx-ingress-controller-866568b999-sqvmk 1/1 Running 0 14h 10.244.2.17 node02 <none>

# Final result

[root@master ~]# curl -H "Host: feiutest.cn" http://127.0.0.1:30412

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# When no rule matches, the request is routed straight to the default backend

[root@master ~]# curl http://127.0.0.1:30412

default backend - 404[root@master ~]#

Create a TLS certificate for the application (not yet verified)

Step 1: create a self-signed certificate

[root@master demo]# openssl genrsa -out tls.key 2048

[root@master demo]# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=Guangdong/L=Guangzhou/O=devops/CN=feiutest.cn

This produces two files:

[root@master demo]# ls

tls.crt tls.key

[root@master demo]# kubectl create secret tls nginx-test --cert=tls.crt --key=tls.key

[root@master demo]# kubectl get secret

NAME TYPE DATA AGE

nginx-test kubernetes.io/tls 2 17s
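
The `DATA 2` column above reflects the two entries stored in a `kubernetes.io/tls` Secret, `tls.crt` and `tls.key`, both base64-encoded. The Python sketch below builds an equivalent manifest as a dictionary to make that structure visible. The `tls_secret` helper is hypothetical, and the byte strings stand in for real PEM file contents.

```python
import base64
from typing import Any, Dict


def tls_secret(name: str, cert_pem: bytes, key_pem: bytes,
               namespace: str = "default") -> Dict[str, Any]:
    """Build a kubernetes.io/tls Secret manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        # Secret data values are base64-encoded strings.
        "data": {
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


secret = tls_secret("nginx-test", b"placeholder certificate", b"placeholder key")
```

An Ingress refers to such a Secret by name through `secretName` in its `tls` section.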

# Add the tls section to the Service's Ingress file nginx-ingress.yaml. The final file:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: feiutest.cn
    http:
      paths:
      - path:
        backend:
          serviceName: test-ingress
          servicePort: 80
  tls:
  - hosts:
    - feiutest.cn
    secretName: nginx-test

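Deploying Ingress with traefik

Microservice architectures, Docker, and the Kubernetes orchestrator became popular only in recent years, so early reverse proxies such as nginx and apache had no built-in support for them. That is why the Ingress Controller exists: it bridges Kubernetes and a front-end load balancer such as nginx. The Ingress Controller can talk to Kubernetes, write nginx configuration, and reload nginx, which makes it a compromise solution.

The more recent traefik supports Kubernetes natively. It can talk to the Kubernetes API itself and notice backend changes, which gives traefik a natural advantage.

Searching Docker Hub for traefik shows v1.7.3 as the latest version

https://hub.docker.com/explore/

# Pull the image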

docker pull traefik:v1.7.3

[root@master ~]# docker image ls | grep traefik

traefik v1.7.3 99dafcf4b2eb 13 days ago 68.7MB

ca.crt

Issuer: CN=kubernetes

Validity

Not Before: Oct 26 17:10:07 2018 GMT

Not After : Oct 23 17:10:07 2028 GMT

Subject: CN=kubernetes

apiserver.crt

Issuer: CN=kubernetes

Validity

Not Before: Oct 26 17:10:07 2018 GMT

Not After : Oct 26 17:10:07 2019 GMT

Subject: CN=kube-apiserver

X509v3 Subject Alternative Name:

DNS:master, DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster.local, IP Address:10.96.0.1, IP Address:192.168.31.105

apiserver-etcd-client.crt

Issuer: CN=etcd-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 26 17:10:08 2019 GMT

Subject: O=system:masters, CN=kube-apiserver-etcd-client

apiserver-kubelet-client.crt

Issuer: CN=kubernetes

Validity

Not Before: Oct 26 17:10:07 2018 GMT

Not After : Oct 26 17:10:08 2019 GMT

Subject: O=system:masters, CN=kube-apiserver-kubelet-client

front-proxy-ca.crt

Issuer: CN=front-proxy-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 23 17:10:08 2028 GMT

Subject: CN=front-proxy-ca

front-proxy-client.crt

Issuer: CN=front-proxy-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 26 17:10:08 2019 GMT

Subject: CN=front-proxy-client

etcd/ca.crt

Issuer: CN=etcd-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 23 17:10:08 2028 GMT

Subject: CN=etcd-ca

etcd/healthcheck-client.crt

Issuer: CN=etcd-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 26 17:10:08 2019 GMT

Subject: O=system:masters, CN=kube-etcd-healthcheck-client

etcd/peer.crt

Issuer: CN=etcd-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 26 17:10:09 2019 GMT

Subject: CN=master

X509v3 Subject Alternative Name:

DNS:master, DNS:localhost, IP Address:192.168.31.105, IP Address:127.0.0.1, IP Address:0:0:0:0:0:0:0:1

etcd/server.crt

Issuer: CN=etcd-ca

Validity

Not Before: Oct 26 17:10:08 2018 GMT

Not After : Oct 26 17:10:09 2019 GMT

Subject: CN=master

X509v3 Subject Alternative Name:

DNS:master, DNS:localhost, IP Address:127.0.0.1, IP Address:0:0:0:0:0:0:0:1
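
The validity windows listed above follow a pattern: the CA certificates last about ten years and the other certificates last one year. The Python sketch below computes the window length from the `Not Before` and `Not After` strings in the format shown. The `validity_days` helper is hypothetical, and parsing the month abbreviation assumes an English locale.

```python
from datetime import datetime

# Matches strings such as "Oct 26 17:10:07 2018 GMT"
DATE_FORMAT = "%b %d %H:%M:%S %Y GMT"


def validity_days(not_before: str, not_after: str) -> int:
    """Return the number of whole days between two certificate timestamps."""
    start = datetime.strptime(not_before, DATE_FORMAT)
    end = datetime.strptime(not_after, DATE_FORMAT)
    return (end - start).days


ca_days = validity_days("Oct 26 17:10:07 2018 GMT", "Oct 23 17:10:07 2028 GMT")
apiserver_days = validity_days("Oct 26 17:10:07 2018 GMT", "Oct 26 17:10:07 2019 GMT")
```

Here `ca_days` is 3650 and `apiserver_days` is 365, so the non-CA certificates in this cluster need renewing within a year of the installation date.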

/etc/kubernetes/manifests/kube-apiserver.yaml

spec:
  containers:
  - command:
    - kube-apiserver
    - --anonymous-auth=false
    - --authorization-mode=Node,RBAC
    - --advertise-address=192.168.31.105
    - --allow-privileged=true
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --etcd-servers=https://127.0.0.1:2379
    - --insecure-port=0
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    image: k8s.gcr.io/kube-apiserver:v1.12.1
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 192.168.31.105
        path: /healthz
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-apiserver
    resources:
      requests:
        cpu: 250m
    volumeMounts:
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs

kube-controller-manager.yaml

spec:
  containers:
  - command:
    - kube-controller-manager
    - --address=127.0.0.1
    - --allocate-node-cidrs=true
    - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --cluster-cidr=10.244.0.0/16
    - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
    - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
    - --controllers=*,bootstrapsigner,tokencleaner
    - --kubeconfig=/etc/kubernetes/controller-manager.conf
    - --leader-elect=true
    - --node-cidr-mask-size=24
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --root-ca-file=/etc/kubernetes/pki/ca.crt
    - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
    - --use-service-account-credentials=true
    image: k8s.gcr.io/kube-controller-manager:v1.12.1
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10252
        scheme: HTTP
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-controller-manager
    resources:
      requests:
        cpu: 200m
    volumeMounts:
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/kubernetes/controller-manager.conf
      name: kubeconfig
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/kubernetes/controller-manager.conf
      type: FileOrCreate
    name: kubeconfig
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
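With `--allocate-node-cidrs=true`, the flags `--cluster-cidr=10.244.0.0/16` and `--node-cidr-mask-size=24` in the manifest above mean that the Pod network is split into one /24 block per node. The arithmetic can be sketched with Python's standard `ipaddress` module. The variable names are illustrative only, and the order in which blocks are actually handed to nodes is not shown in these notes.

```python
import ipaddress

# Values copied from the kube-controller-manager flags above.
cluster_cidr = ipaddress.ip_network("10.244.0.0/16")  # --cluster-cidr
node_mask_size = 24                                   # --node-cidr-mask-size

# Split the cluster CIDR into node-sized blocks.
node_blocks = list(cluster_cidr.subnets(new_prefix=node_mask_size))

print(len(node_blocks))                 # how many node blocks fit: 256
print(node_blocks[0], node_blocks[-1])  # 10.244.0.0/24 10.244.255.0/24
print(node_blocks[0].num_addresses)     # addresses in each block: 256
```

With these values the cluster CIDR holds at most 256 node blocks, which puts an upper bound on the number of nodes that can receive a Pod CIDR.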

kube-scheduler.yaml

spec:
  containers:
  - command:
    - kube-scheduler
    - --address=127.0.0.1
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    image: k8s.gcr.io/kube-scheduler:v1.12.1
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10251
        scheme: HTTP
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-scheduler
    resources:
      requests:
        cpu: 100m
    volumeMounts:
    - mountPath: /etc/kubernetes/scheduler.conf
      name: kubeconfig
      readOnly: true
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /etc/kubernetes/scheduler.conf
      type: FileOrCreate
    name: kubeconfig

etcd.yaml

spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=https://127.0.0.1:2379
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --client-cert-auth=true
    - --data-dir=/var/lib/etcd
    - --initial-advertise-peer-urls=https://127.0.0.1:2380
    - --initial-cluster=master=https://127.0.0.1:2380
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --listen-client-urls=https://127.0.0.1:2379
    - --listen-peer-urls=https://127.0.0.1:2380
    - --name=master
    - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
    - --peer-client-cert-auth=true
    - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
    - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
    - --snapshot-count=10000
    - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
    image: k8s.gcr.io/etcd:3.2.24
    imagePullPolicy: IfNotPresent
    livenessProbe:
      exec:
        command:
        - /bin/sh
        - -ec
        - ETCDCTL_API=3 etcdctl --endpoints=https://[127.0.0.1]:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt
          --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt --key=/etc/kubernetes/pki/etcd/healthcheck-client.key
          get foo
      failureThreshold: 8
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: etcd
    resources: {}
    volumeMounts:
    - mountPath: /var/lib/etcd
      name: etcd-data
    - mountPath: /etc/kubernetes/pki/etcd
      name: etcd-certs
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd-data
  - hostPath:
      path: /etc/kubernetes/pki/etcd
      type: DirectoryOrCreate
    name: etcd-certs

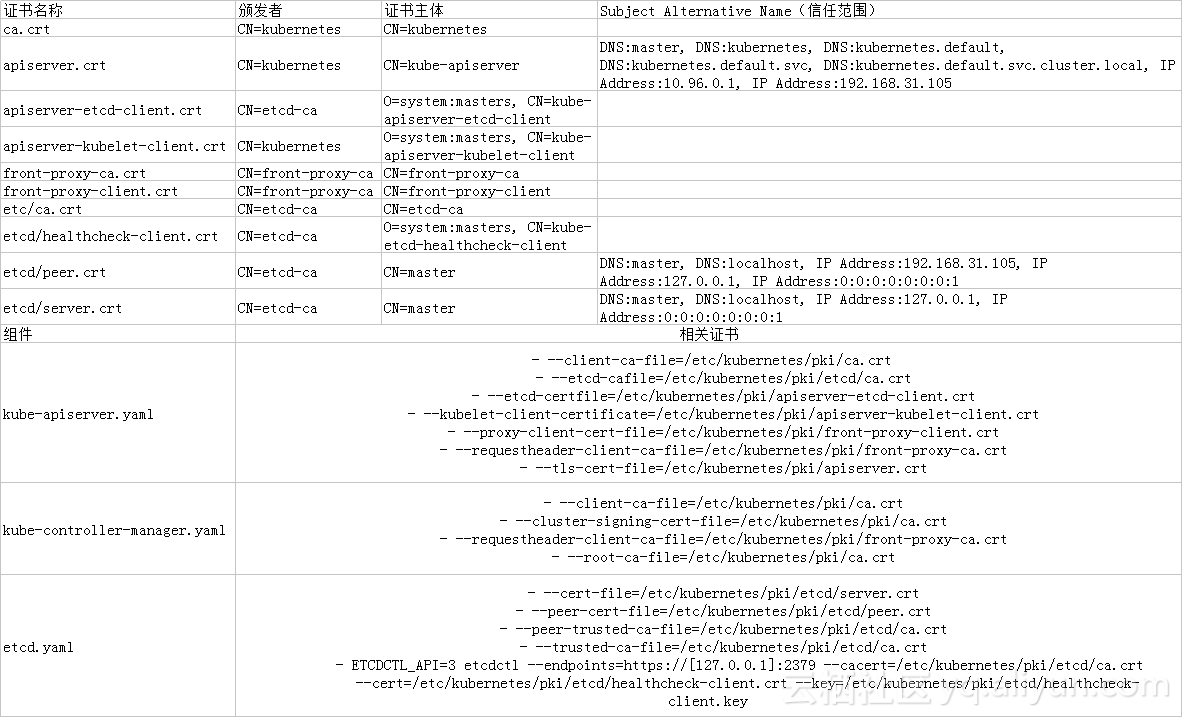

| Certificate | Issuer | Subject | Subject Alternative Name (trust scope) |
| --- | --- | --- | --- |
| ca.crt | CN=kubernetes | CN=kubernetes | |
| apiserver.crt | CN=kubernetes | CN=kube-apiserver | DNS:master, DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster.local, IP Address:10.96.0.1, IP Address:192.168.31.105 |
| apiserver-etcd-client.crt | CN=etcd-ca | O=system:masters, CN=kube-apiserver-etcd-client | |
| apiserver-kubelet-client.crt | CN=kubernetes | O=system:masters, CN=kube-apiserver-kubelet-client | |
| front-proxy-ca.crt | CN=front-proxy-ca | CN=front-proxy-ca | |
| front-proxy-client.crt | CN=front-proxy-ca | CN=front-proxy-client | |
| etcd/ca.crt | CN=etcd-ca | CN=etcd-ca | |
| etcd/healthcheck-client.crt | CN=etcd-ca | O=system:masters, CN=kube-etcd-healthcheck-client | |
| etcd/peer.crt | CN=etcd-ca | CN=master | DNS:master, DNS:localhost, IP Address:192.168.31.105, IP Address:127.0.0.1, IP Address:0:0:0:0:0:0:0:1 |
| etcd/server.crt | CN=etcd-ca | CN=master | DNS:master, DNS:localhost, IP Address:127.0.0.1, IP Address:0:0:0:0:0:0:0:1 |
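The SAN entry `IP Address:10.96.0.1` in apiserver.crt lines up with `--service-cluster-ip-range=10.96.0.0/12` in the kube-apiserver manifest: the built-in `kubernetes` Service is given the first usable address of that range, so the certificate has to cover it. A minimal sketch of that check, using only Python's standard `ipaddress` module; the SAN list is typed in by hand from the table above rather than read from the certificate file.

```python
import ipaddress

# --service-cluster-ip-range from the kube-apiserver flags.
service_range = ipaddress.ip_network("10.96.0.0/12")

# IP SANs of apiserver.crt, copied by hand from the table above.
san_ips = [
    ipaddress.ip_address("10.96.0.1"),
    ipaddress.ip_address("192.168.31.105"),
]

# Index 1 is the first host address after the network address.
first_service_ip = service_range[1]

print(first_service_ip)             # 10.96.0.1
print(first_service_ip in san_ips)  # True
```

The second SAN address, 192.168.31.105, is the host address that also appears in the kube-apiserver liveness probe.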

| Component | Related certificates |
| --- | --- |
| kube-apiserver.yaml | `--client-ca-file=/etc/kubernetes/pki/ca.crt`<br>`--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt`<br>`--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt`<br>`--kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt`<br>`--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt`<br>`--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt`<br>`--tls-cert-file=/etc/kubernetes/pki/apiserver.crt` |
| kube-controller-manager.yaml | `--client-ca-file=/etc/kubernetes/pki/ca.crt`<br>`--cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt`<br>`--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt`<br>`--root-ca-file=/etc/kubernetes/pki/ca.crt` |
| etcd.yaml | `--cert-file=/etc/kubernetes/pki/etcd/server.crt`<br>`--peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt`<br>`--peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt`<br>`--trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt`<br>`ETCDCTL_API=3 etcdctl --endpoints=https://[127.0.0.1]:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt --key=/etc/kubernetes/pki/etcd/healthcheck-client.key` |
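A component-to-certificate mapping like the one above can be rebuilt by scanning a manifest's `command` entries for flags whose value is a `.crt` path. The sketch below is one possible way to do that in plain Python. The function name `certificate_flags` is hypothetical, and the sample input is a hand-copied subset of the etcd flags, not the result of parsing the YAML file.

```python
def certificate_flags(command_lines):
    """Return {flag: path} for every '--flag=value' entry whose value ends in .crt."""
    flags = {}
    for line in command_lines:
        arg = line.strip()
        # Drop the YAML list marker if the line still carries it.
        if arg.startswith("- "):
            arg = arg[2:]
        # Only '--name=value' arguments are of interest.
        if arg.startswith("--") and "=" in arg:
            name, value = arg.split("=", 1)
            if value.endswith(".crt"):
                flags[name] = value
    return flags


# Hand-copied subset of the etcd.yaml command list (illustrative input).
sample = [
    "- etcd",
    "- --cert-file=/etc/kubernetes/pki/etcd/server.crt",
    "- --client-cert-auth=true",
    "- --key-file=/etc/kubernetes/pki/etcd/server.key",
    "- --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt",
]

for flag, path in certificate_flags(sample).items():
    print(flag, path)
```

Key files (`.key`) and boolean flags are skipped on purpose, because the table lists certificates only.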

