Note: I'll skip the background and go straight to deployment. [This guide deploys over HTTP.]

1. Disable SELinux and the firewall

2. Install Docker

```
Docker version 17.06.2-ce, build cec0b72
```

3. Install etcd

```
etcd Version: 3.2.9
Git SHA: f1d7dd8
Go Version: go1.8.4
Go OS/Arch: linux/amd64
etcdctl version: 3.2.9
API version: 2
```

4. Install Kubernetes

5. Install flanneld

6. Configure and enable etcd

A. Configure the systemd unit

```
[Unit]
Description=etcd
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos/etcd

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd
EnvironmentFile=-/etc/etcd/etcd.conf
ExecStart=/usr/bin/etcd --config-file /etc/etcd/etcd.conf
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

B. Configure the etcd.conf file on each node

```
name: '${ETCD_NAME}'
data-dir: "/var/lib/etcd/"
listen-peer-urls: http://${INTERNAL_IP}:2380
listen-client-urls: http://${INTERNAL_IP}:2379,http://127.0.0.1:2379
initial-advertise-peer-urls: http://${INTERNAL_IP}:2380
advertise-client-urls: http://${INTERNAL_IP}:2379
initial-cluster: "etcd=http://${INTERNAL_IP}:2380"
initial-cluster-token: 'etcd-cluster'
initial-cluster-state: 'new'
EOF
```

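Note: `initial-cluster-state` takes one of two values:

`new`: use this when bootstrapping a new cluster;

`existing`: use this when the member is joining an existing cluster.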
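The heredoc above can be wrapped in a reusable shell function. This is a minimal sketch that assumes you pass the member name and node IP yourself. `render_etcd_conf` is a hypothetical helper name and is not part of etcd. The function also accepts the `initial-cluster-state` value described in the note. It builds `initial-cluster` from the same name, so the two values cannot drift apart (the file above hard-codes `etcd=`).

```shell
# render_etcd_conf NAME IP [STATE]
# Print a single-node etcd.conf; STATE defaults to "new" (use "existing"
# when joining a member to a cluster that already runs).
render_etcd_conf() {
  local name="$1" ip="$2" state="${3:-new}"
  # Unquoted EOF: ${...} is expanded by the shell before printing.
  cat <<EOF
name: '${name}'
data-dir: "/var/lib/etcd/"
listen-peer-urls: http://${ip}:2380
listen-client-urls: http://${ip}:2379,http://127.0.0.1:2379
initial-advertise-peer-urls: http://${ip}:2380
advertise-client-urls: http://${ip}:2379
initial-cluster: "${name}=http://${ip}:2380"
initial-cluster-token: 'etcd-cluster'
initial-cluster-state: '${state}'
EOF
}
```

For example, `render_etcd_conf etcd 192.168.100.104 > /etc/etcd/etcd.conf` should reproduce the single-node file used in this post.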

C. Start etcd

##List the cluster members

```
b0f5befc15246c67: name=etcd peerURLs=http://192.168.100.104:2380 clientURLs=http://192.168.100.104:2379 isLeader=true
```

##Check cluster health

```
member b0f5befc15246c67 is healthy: got healthy result from http://192.168.100.104:2379
cluster is healthy
```
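Both checks above can be scripted. Here is a sketch that assumes the etcdctl v2 output format shown in this post; `etcd_cluster_ok` is a hypothetical helper. It reads `etcdctl cluster-health` output on stdin. It succeeds only when the expected number of members report healthy and the summary line reads `cluster is healthy`.

```shell
# etcd_cluster_ok EXPECTED_MEMBERS < cluster-health-output
etcd_cluster_ok() {
  local expected="$1" out healthy
  out="$(cat)"
  # Count "member <id> is healthy: ..." lines; "|| true" keeps a zero count
  # from aborting scripts that run with "set -e".
  healthy="$(printf '%s\n' "$out" | grep -c '^member .* is healthy:' || true)"
  [ "$healthy" -eq "$expected" ] &&
    printf '%s\n' "$out" | grep -qx 'cluster is healthy'
}
```

For this single-node cluster, you would run `etcdctl cluster-health | etcd_cluster_ok 1`.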

7. Configure and enable flanneld

A. Configure the systemd unit

```
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/sysconfig/flanneld
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/usr/bin/flanneld-start $FLANNEL_OPTIONS
ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service
```

The `/usr/bin/flanneld-start` wrapper that `ExecStart` calls:

```
exec /usr/bin/flanneld \
    -etcd-endpoints=${FLANNEL_ETCD_ENDPOINTS:-${FLANNEL_ETCD}} \
    -etcd-prefix=${FLANNEL_ETCD_PREFIX:-${FLANNEL_ETCD_KEY}} \
    "$@"
```

B. Configure the flannel config file (`/etc/sysconfig/flanneld`)

```
FLANNEL_ETCD_ENDPOINTS="http://192.168.100.104:2379"
FLANNEL_ETCD_PREFIX="/kube/network"
```

C. Start flanneld
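flanneld reads its network settings from the etcd key `<prefix>/config`. With `FLANNEL_ETCD_PREFIX="/kube/network"`, it therefore expects `/kube/network/config` to exist before it starts. This minimal sketch builds that key and a JSON value. It assumes the `10.254.0.0/16` overlay network that appears as `FLANNEL_NETWORK` in the next step. The helper names are hypothetical. The `etcdctl set` line stays a comment because it needs a live etcd.

```shell
# flannel_config_key PREFIX: the etcd key flanneld reads its config from.
flannel_config_key() {
  printf '%s/config\n' "${1%/}"   # tolerate a trailing "/" on the prefix
}

# flannel_config_json CIDR: a minimal config holding only the overlay network;
# no "Backend" is given, so flannel falls back to its default backend.
flannel_config_json() {
  printf '{"Network": "%s"}\n' "$1"
}

# Assumed usage on the etcd host (etcdctl v2 API, as reported in step 3):
#   etcdctl set "$(flannel_config_key /kube/network)" \
#       "$(flannel_config_json 10.254.0.0/16)"
```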

D. Check each node's subnet

```
FLANNEL_NETWORK=10.254.0.0/16
FLANNEL_SUBNET=10.254.26.1/24
FLANNEL_MTU=1472
FLANNEL_IPMASQ=false
```

E. Change Docker's subnet to the one flannel assigned

```
{
  "bip": "$FLANNEL_SUBNET"
}
EOF
```
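You can generate the same `daemon.json` from flannel's env file instead of typing the subnet by hand. This sketch assumes flannel wrote the `FLANNEL_*` variables shown in step D to an env file. That file is commonly `/run/flannel/subnet.env`, but check your packaging. `docker_daemon_json_from_flannel` is a hypothetical helper.

```shell
# docker_daemon_json_from_flannel ENV_FILE
# Print a daemon.json whose "bip" is the subnet flannel leased to this node.
docker_daemon_json_from_flannel() {
  local env_file="$1"
  (
    # Source in a subshell so the FLANNEL_* variables do not leak out.
    . "$env_file"
    if [ -z "${FLANNEL_SUBNET:-}" ]; then
      echo "FLANNEL_SUBNET is not set in $env_file" >&2
      exit 1
    fi
    printf '{\n  "bip": "%s"\n}\n' "$FLANNEL_SUBNET"
  )
}
```

Docker must be restarted after `/etc/docker/daemon.json` changes before the new `bip` takes effect.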

F. Verify that the subnet has been assigned

```
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.200.2   0.0.0.0         UG    100    0        0 ens33
10.254.0.0      0.0.0.0         255.255.0.0     U     0      0        0 flannel0
10.254.26.0     0.0.0.0         255.255.255.0   U     0      0        0 docker0
192.168.100.0   0.0.0.0         255.255.255.0   U     100    0        0 ens33
```
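In the table above, the `docker0` route (`10.254.26.0/24`) sits inside the `flannel0` route (`10.254.0.0/16`). A working setup should show exactly that nesting. Here is a sketch of the same containment check in shell, assuming shell arithmetic and IPv4 CIDRs. `ip_to_int` and `cidr_contains` are hypothetical helpers.

```shell
# ip_to_int A.B.C.D: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1   # split on "." into four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_contains NETWORK/LEN ADDR[/LEN]: succeed if ADDR lies inside NETWORK/LEN.
cidr_contains() {
  local net="${1%/*}" len="${1#*/}" addr="${2%/*}" mask
  # Build a 32-bit netmask from the prefix length (LEN 0 means "match all").
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$addr") & mask )) ]
}
```

For example, after sourcing flannel's env file, `cidr_contains "$FLANNEL_NETWORK" "$FLANNEL_SUBNET"` should succeed.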

G. Use etcdctl to view flannel's data

```
/kube/network/subnets/10.254.26.0-24
Key: /kube/network/subnets/10.254.26.0-24
Created-Index: 6
Modified-Index: 6
TTL: 85638
Index: 6

{"PublicIP":"192.168.100.104"}
```
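flannel stores each lease under `<prefix>/subnets/`, with the `/` of the CIDR replaced by `-`, as in `10.254.26.0-24` above. This small sketch turns such keys back into CIDR notation. `subnet_key_to_cidr` is a hypothetical helper.

```shell
# subnet_key_to_cidr KEY: "/kube/network/subnets/10.254.26.0-24" -> "10.254.26.0/24"
subnet_key_to_cidr() {
  local leaf="${1##*/}"                          # drop everything up to the last "/"
  printf '%s/%s\n' "${leaf%-*}" "${leaf##*-}"    # "10.254.26.0" and "24"
}
```

You can combine it with the v2 `ls` command to list every node's lease, e.g. `etcdctl ls /kube/network/subnets | while read -r key; do subnet_key_to_cidr "$key"; done`.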

H. Test that the network works

8. Configure and enable the Kubernetes Master node

The Kubernetes Master node runs the following components:

kube-apiserver

kube-scheduler

kube-controller-manager

A. Configure the config file

```
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow-privileged=false"
KUBE_MASTER="--master=http://192.168.100.104:8080"
```

B. Configure the kube-apiserver systemd unit

```
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target
After=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/apiserver
ExecStart=/usr/bin/kube-apiserver \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_ETCD_SERVERS \
    $KUBE_API_ADDRESS \
    $KUBE_API_PORT \
    $KUBELET_PORT \
    $KUBE_ALLOW_PRIV \
    $KUBE_SERVICE_ADDRESSES \
    $KUBE_ADMISSION_CONTROL \
    $KUBE_API_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

C. Configure the apiserver config file

```
KUBE_API_ADDRESS="--advertise-address=192.168.100.104 --bind-address=192.168.100.104 --insecure-bind-address=0.0.0.0"
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.100.104:2379"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
KUBE_API_ARGS="--enable-swagger-ui=true --apiserver-count=3 --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --audit-log-path=/var/log/apiserver.log"
```

Note: the main difference between the HTTP and HTTPS setups is the `ServiceAccount` plugin in `--admission-control`, which is left out here.
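The unit passes each setting through an unquoted `$VAR`, so systemd splits the values on whitespace into separate flags. One rough way to preview the resulting command line is to load the same two files in a shell and expand the same variables. This sketch assumes the files contain only simple `KEY="value"` lines, since shell sourcing only approximates systemd's `EnvironmentFile=` parsing. `apiserver_cmdline` is a hypothetical helper.

```shell
# apiserver_cmdline CONFIG_FILE APISERVER_FILE
# Print the kube-apiserver command the unit would run (a dry-run preview).
apiserver_cmdline() {
  local config="$1" apiserver="$2"
  (
    # Missing files are skipped, like the "-" prefix in EnvironmentFile=-.
    [ -r "$config" ] && . "$config"
    [ -r "$apiserver" ] && . "$apiserver"
    # Unquoted on purpose: each variable splits into separate flags,
    # and unset ones disappear, as with $VAR in ExecStart=.
    echo /usr/bin/kube-apiserver $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL \
      $KUBE_ETCD_SERVERS $KUBE_API_ADDRESS $KUBE_API_PORT $KUBELET_PORT \
      $KUBE_ALLOW_PRIV $KUBE_SERVICE_ADDRESSES $KUBE_ADMISSION_CONTROL \
      $KUBE_API_ARGS
  )
}
```

For example, `apiserver_cmdline /etc/kubernetes/config /etc/kubernetes/apiserver` prints the flags in the same order as the `ExecStart=` line.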

D. Start kube-apiserver

E. Configure the kube-controller-manager systemd unit

```
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/controller-manager
ExecStart=/usr/bin/kube-controller-manager \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_MASTER \
    $KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

F. Configure the kube-controller-manager config file

```
KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 --service-cluster-ip-range=10.254.0.0/16 --cluster-name=kubernetes"
```

G. Start kube-controller-manager

H. Configure the kube-scheduler systemd unit

```
[Unit]
Description=Kubernetes Scheduler Plugin
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/scheduler
ExecStart=/usr/bin/kube-scheduler \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_MASTER \
    $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

I. Configure the kube-scheduler config file

```
KUBE_SCHEDULER_ARGS="--address=127.0.0.1"
```

J. Start kube-scheduler

K. Verify the Master node

```
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}
```
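To check this table from a script, here is a sketch that assumes the column layout shown above, with NAME first and STATUS second. `all_components_healthy` is a hypothetical helper. It reads `kubectl get componentstatuses` output on stdin. It succeeds only if at least one component is listed and every component row has STATUS `Healthy`.

```shell
# all_components_healthy < componentstatuses-output
all_components_healthy() {
  awk 'NR == 1 { next }                 # skip the NAME/STATUS header
       NF == 0 { next }                 # ignore blank lines
       { rows++; if ($2 != "Healthy") bad++ }
       END { if (rows > 0 && bad == 0) exit 0; exit 1 }'
}
```

Usage: `kubectl get componentstatuses | all_components_healthy && echo "master OK"`.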

9. Configure and enable the Kubernetes Node

A Kubernetes Node runs the following components:

kubelet

kube-proxy

A. Configure the kubelet systemd unit

```
[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/bin/kubelet \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBELET_ADDRESS \
    $KUBELET_PORT \
    $KUBELET_HOSTNAME \
    $KUBE_ALLOW_PRIV \
    $KUBELET_POD_INFRA_CONTAINER \
    $KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

B. Configure the kubelet config files

```
apiVersion: v1
kind: Config
clusters:
  - cluster:
      server: http://${MASTER_ADDRESS}:8080/
    name: local
contexts:
  - context:
      cluster: local
    name: local
current-context: local
EOF
```

```
KUBELET_ADDRESS="--address=192.168.100.104"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=master"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=hub.c.163.com/k8s163/pause-amd64:3.0"
KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubelet.kubeconfig --fail-swap-on=false --cluster-dns=10.254.0.2 --cluster-domain=cluster.local. --serialize-image-pulls=false"
```
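The `kubelet.kubeconfig` heredoc can also be wrapped in a function, so each node only has to supply `MASTER_ADDRESS`. This minimal sketch assumes the insecure port 8080 used throughout this post. `render_kubelet_kubeconfig` is a hypothetical helper.

```shell
# render_kubelet_kubeconfig MASTER_ADDRESS
# Print a kubeconfig that points the kubelet at the apiserver's HTTP port.
render_kubelet_kubeconfig() {
  local master="$1"
  cat <<EOF
apiVersion: v1
kind: Config
clusters:
  - cluster:
      server: http://${master}:8080/
    name: local
contexts:
  - context:
      cluster: local
    name: local
current-context: local
EOF
}
```

For example, `render_kubelet_kubeconfig 192.168.100.104 > /etc/kubernetes/kubelet.kubeconfig` writes to the path that `--kubeconfig` in `KUBELET_ARGS` expects.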

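C. Start kubelet

Notes:

--fail-swap-on ##When true (the default), the kubelet refuses to start if swap is enabled on the node; it is set to false here. [This flag only exists from version 1.8 onward.]

--cluster-dns=10.254.0.2

--cluster-domain=cluster.local.

##These must match the parameters configured for the KubeDNS Pod

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig

##Newer versions no longer support the --api-servers flag; the apiserver address now comes from the kubeconfig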
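`--cluster-dns` must equal the KubeDNS Service's `clusterIP` (`10.254.0.2` in the diff of step 10), so it can be worth checking the two values against each other. This sketch assumes the flags are written as `--name=value` with no spaces inside values, as in `KUBELET_ARGS` above. `flag_value` and `check_cluster_dns` are hypothetical helpers.

```shell
# flag_value NAME "FLAGS": print VALUE from --NAME=VALUE inside a flag string.
flag_value() {
  local name="$1" arg
  # Word-split the flag string deliberately; values here contain no spaces.
  for arg in $2; do
    case "$arg" in
      "--$name="*) printf '%s\n' "${arg#--"$name"=}"; return 0 ;;
    esac
  done
  return 1   # flag not present
}

# check_cluster_dns "KUBELET_ARGS" DNS_SERVICE_IP: succeed if they agree.
check_cluster_dns() {
  local got
  got="$(flag_value cluster-dns "$1")" || return 1
  [ "$got" = "$2" ]
}
```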

D. Configure the kube-proxy systemd unit

```
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/bin/kube-proxy \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_MASTER \
    $KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

E. Configure the kube-proxy config file

```
KUBE_PROXY_ARGS="--bind-address=192.168.100.104 --hostname-override=192.168.100.104 --cluster-cidr=10.254.0.0/16"
```

F. Start kube-proxy

G. View Node information

```
NAME      STATUS    ROLES     AGE       VERSION
master    Ready     <none>    5h        v1.8.1

NAME      STATUS    ROLES     AGE       VERSION   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION              CONTAINER-RUNTIME
master    Ready     <none>    5h        v1.8.1    <none>        CentOS Linux 7 (Core)   3.10.0-693.2.2.el7.x86_64   docker://Unknown

NAME      STATUS    ROLES     AGE       VERSION   LABELS
master    Ready     <none>    5h        v1.8.1    beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=master

Client Version: v1.8.1
Server Version: v1.8.1
```
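To check a single node from a script, this sketch reads `kubectl get nodes` output on stdin and prints the STATUS of the named node. `node_status` is a hypothetical helper. It assumes the default column order shown above, with NAME first and STATUS second.

```shell
# node_status NODE_NAME < "kubectl get nodes" output
# Print the node's STATUS; fail if the node is not listed.
node_status() {
  awk -v node="$1" 'NR > 1 && $1 == node { print $2; found = 1 }
                    END { if (!found) exit 1 }'
}
```

Here, `kubectl get nodes | node_status master` should print `Ready`, because the kubelet registers itself as `master` via `--hostname-override`.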

H. View cluster information

```
Kubernetes master is running at http://localhost:8080
```

10. Deploy the KubeDNS add-on

The official yaml files live in the kubernetes/cluster/addons/dns directory:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns

##Download the Kube-DNS yaml files

##Change the file extension

### Replace all images

####Make the following replacements

######Diff

```
33c33
< clusterIP: 10.254.0.2
---
> clusterIP: __PILLAR__DNS__SERVER__
97c97
< image: 192.168.100.100/k8s/k8s-dns-kube-dns-amd64:1.14.5
---
> image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.6
127,128c127
< - --domain=cluster.local.
< - --kube-master-url=http://192.168.100.104:8080
---
> - --domain=__PILLAR__DNS__DOMAIN__.
149c148
< image: 192.168.100.100/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.5
---
> image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.6
169c168
< - --server=/cluster.local/127.0.0.1
---
> - --server=/__PILLAR__DNS__DOMAIN__/127.0.0.1
188c187
< image: 192.168.100.100/k8s/k8s-dns-sidecar-amd64:1.14.5
---
> image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.6
201,202c200,201
< - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A
< - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A
---
> - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.__PILLAR__DNS__DOMAIN__,5,SRV
> - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.__PILLAR__DNS__DOMAIN__,5,SRV
```

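Note 1: I pull these images from a registry I set up myself. When I wrote this post, kube-dns had been updated to 1.14.6. However, an important political meeting was under way and the Great Firewall had become much stricter, which made pulling difficult, so I went back to version 1.14.5. Adjust this to your own environment. If you can get past the firewall and pull the latest version, so much the better.

Note 2: As the diff shows, the `--probe` values also differ: version 1.14.6 ends them with `SRV`, while version 1.14.5 ends them with `A`. Change this as well, or the Pod will fail with a CrashLoopBackOff error.

Note 3: The `--kube-master-url` flag is required here to point kube-dns at the apiserver; without it, the Pod also fails with CrashLoopBackOff.

Note 4: I have pushed the images to NetEase Hive (网易蜂巢) at: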

hub.c.163.com/zhijiansd/k8s-dns-kube-dns-amd64:1.14.5

hub.c.163.com/zhijiansd/k8s-dns-dnsmasq-nanny-amd64:1.14.5

hub.c.163.com/zhijiansd/k8s-dns-sidecar-amd64:1.14.5
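The placeholder part of the diff is mechanical and can be done with `sed`. This sketch assumes the upstream template's `__PILLAR__DNS__SERVER__` and `__PILLAR__DNS__DOMAIN__` placeholders shown in the diff. `render_kube_dns` is a hypothetical helper. The image and `--kube-master-url` changes are site-specific, so they are left for manual editing.

```shell
# render_kube_dns DNS_IP DOMAIN < template > manifest
# DOMAIN is given without the trailing dot (e.g. cluster.local).
render_kube_dns() {
  local dns_ip="$1" domain="$2"
  # The last expression switches the sidecar probes from SRV to A,
  # which Note 2 says is needed for kube-dns 1.14.5.
  sed -e "s/__PILLAR__DNS__SERVER__/${dns_ip}/g" \
      -e "s/__PILLAR__DNS__DOMAIN__/${domain}/g" \
      -e '/--probe=/s/,SRV$/,A/'
}
```

The file names are hypothetical. With the template saved as `kube-dns.yaml.sed`, `render_kube_dns 10.254.0.2 cluster.local < kube-dns.yaml.sed > kube-dns.yaml` would produce values matching `--cluster-dns` and `--cluster-domain` in `KUBELET_ARGS`.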

### Apply the file

```
service "kube-dns" created
serviceaccount "kube-dns" created
configmap "kube-dns" created
deployment "kube-dns" created
```

### Check the KubeDNS service

```
NAME                        READY     STATUS    RESTARTS   AGE
kube-dns-84f48d556b-qprmw   3/3       Running   3          5h
```

###View cluster information

```
kube-dns   ClusterIP   10.254.0.2   <none>   53/UDP,53/TCP   5h

Kubernetes master is running at http://localhost:8080
KubeDNS is running at http://localhost:8080/api/v1/namespaces/kube-system/services/kube-dns/proxy
```

####View the KubeDNS daemon logs

11. Deploy the Heapster component

###Download heapster

```
grafana.yaml  heapster-rbac.yaml  heapster.yaml  influxdb.yaml
```

###Replace all images

Note: I have also pushed these images to NetEase Hive; change them as needed:

hub.c.163.com/zhijiansd/heapster-grafana-amd64:v4.4.3

hub.c.163.com/zhijiansd/heapster-amd64:v1.4.0

hub.c.163.com/zhijiansd/heapster-influxdb-amd64:v1.3.3

###Modify heapster.yaml

```
- --source=kubernetes:http://192.168.100.104:8080?inClusterConfig=false
```

Note: by default heapster connects to the apiserver over HTTPS; here it is changed to connect over HTTP.
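The same edit can be done with `sed`. This sketch assumes the upstream `heapster.yaml` carries a single `--source=kubernetes:...` line that should be replaced wholesale. `use_http_source` is a hypothetical helper.

```shell
# use_http_source MASTER_URL < heapster.yaml
# Rewrite the --source flag to use the apiserver's insecure HTTP port.
use_http_source() {
  local master="$1"
  # "#" is the sed delimiter because the URL itself contains "/".
  sed -e "s#--source=kubernetes:.*#--source=kubernetes:${master}?inClusterConfig=false#"
}
```

For example, `use_http_source http://192.168.100.104:8080 < heapster.yaml > heapster-http.yaml` produces the line shown above and leaves the other lines unchanged.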

###Apply all files in the influxdb directory

```
deployment "monitoring-grafana" created
service "monitoring-grafana" created
clusterrolebinding "heapster" created
serviceaccount "heapster" created
deployment "heapster" created
service "heapster" created
deployment "monitoring-influxdb" created
service "monitoring-influxdb" created
```

###Check the results

```
heapster              1         1         1            1           6h
monitoring-grafana    1         1         1            1           6h
monitoring-influxdb   1         1         1            1           6h
```

###Check the Pods

```
heapster-6c96ccd7c4-xbmlc              1/1       Running   1          6h
monitoring-grafana-98d44cd67-z5m99     1/1       Running   1          6h
monitoring-influxdb-6b6d749d9c-schdp   1/1       Running   1          6h

heapster              ClusterIP   10.254.201.85   <none>   80/TCP     6h
monitoring-grafana    ClusterIP   10.254.138.73   <none>   80/TCP     6h
monitoring-influxdb   ClusterIP   10.254.45.121   <none>   8086/TCP   6h
```

###View cluster information

```
Kubernetes master is running at http://localhost:8080
Heapster is running at http://localhost:8080/api/v1/namespaces/kube-system/services/heapster/proxy
KubeDNS is running at http://localhost:8080/api/v1/namespaces/kube-system/services/kube-dns/proxy
monitoring-grafana is running at http://localhost:8080/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
monitoring-influxdb is running at http://localhost:8080/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy
```

12. Deploy the Kubernetes Dashboard

Here we use the version that does not require certificates.

### Replace the images

Note: change the image address as needed:

hub.c.163.com/zhijiansd/kubernetes-dashboard-amd64:v1.7.1

###Add the apiserver address

```
- --apiserver-host=http://192.168.100.104:8080
```

###Apply the file

```
serviceaccount "kubernetes-dashboard" configured
role "kubernetes-dashboard-minimal" configured
rolebinding "kubernetes-dashboard-minimal" configured
deployment "kubernetes-dashboard" configured
service "kubernetes-dashboard" configured
```

###Check the kubernetes-dashboard service

```
kubernetes-dashboard-7648996855-54x6l   1/1       Running   1          7h
```

Note: with version 1.7, `kubectl cluster-info` does not show the kubernetes-dashboard address; version 1.6.3 does.

Version 1.7.0 must be accessed at http://localhost:8080/api/v1/namespaces/kube-system/services/http:kubernetes-dashboard:/proxy/. Version 1.7.1 can be accessed at the same URL or at http://localhost:8080/ui, which redirects there automatically.
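Both URLs follow the apiserver's service-proxy pattern `/api/v1/namespaces/<namespace>/services/[<scheme>:]<service>[:<port>]/proxy/`. In `http:kubernetes-dashboard:`, the scheme is `http` and the port name is left empty. Here is a sketch of building such URLs. `svc_proxy_url` is a hypothetical helper.

```shell
# svc_proxy_url BASE NAMESPACE SERVICE [SCHEME [PORT]]
svc_proxy_url() {
  local base="${1%/}" ns="$2" svc="$3" scheme="${4:-}" port="${5:-}"
  local name="$svc"
  if [ -n "$scheme" ]; then
    name="${scheme}:${svc}:${port}"   # e.g. "http:kubernetes-dashboard:"
  elif [ -n "$port" ]; then
    name="${svc}:${port}"
  fi
  printf '%s/api/v1/namespaces/%s/services/%s/proxy/\n' "$base" "$ns" "$name"
}
```

For example, `svc_proxy_url http://localhost:8080 kube-system kubernetes-dashboard http` yields the 1.7.0 dashboard URL above. Without a scheme, it yields the Grafana-style URL used in step 14, plus a trailing slash.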

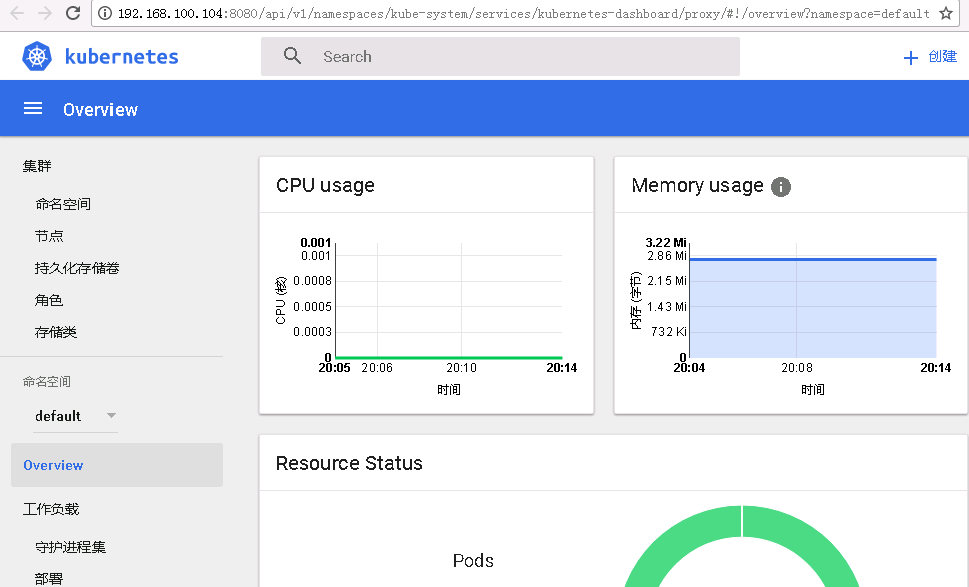

13. View the Kubernetes Dashboard

Access it at http://localhost:8080/ui


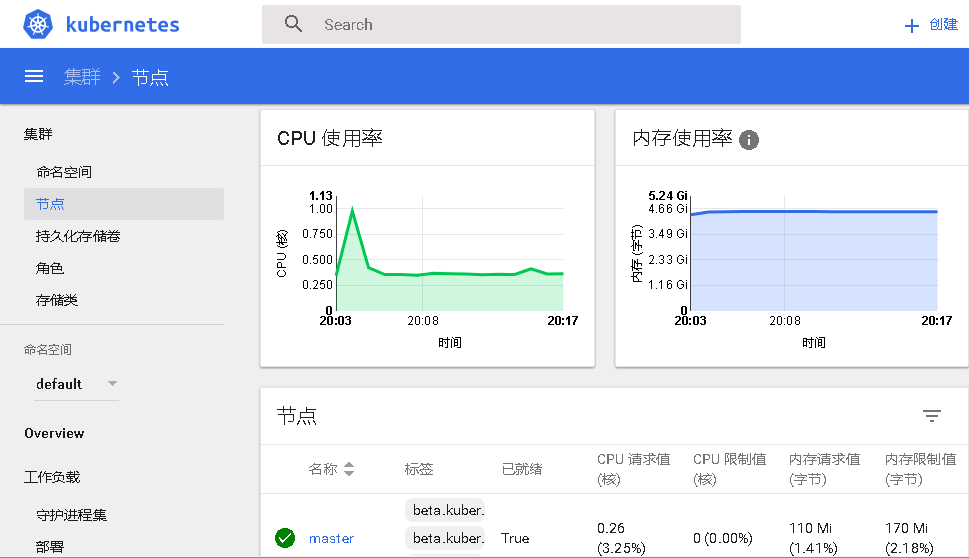

Nodes view


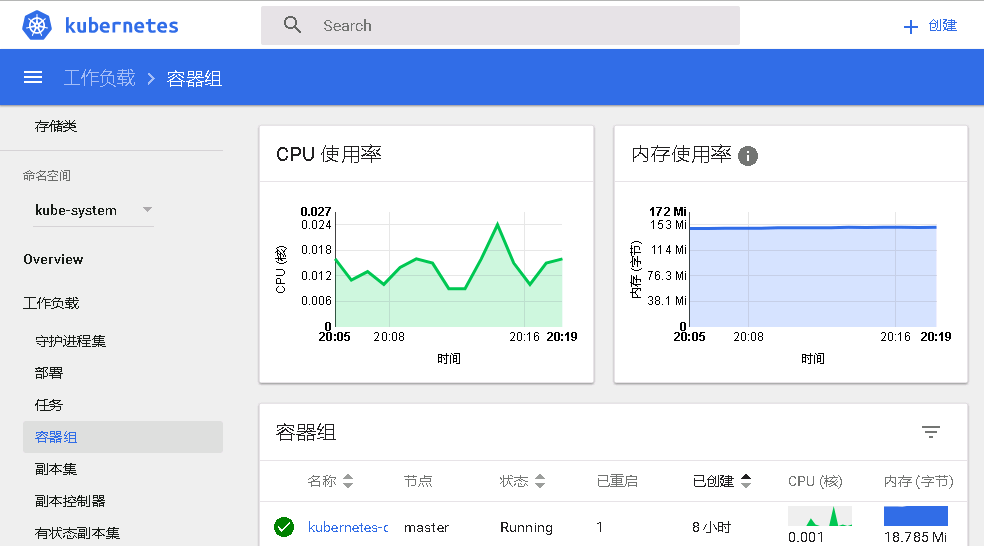

Pods view


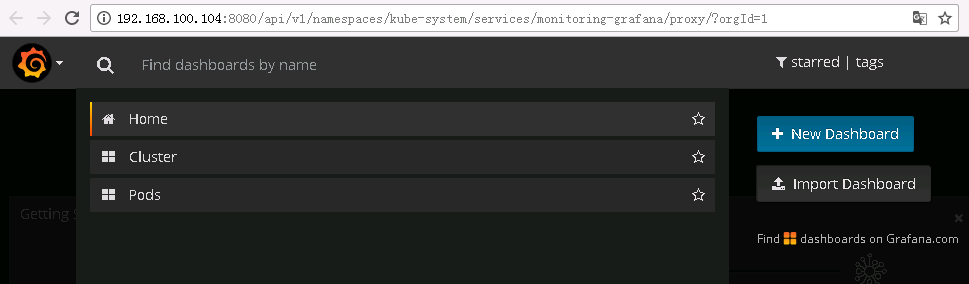

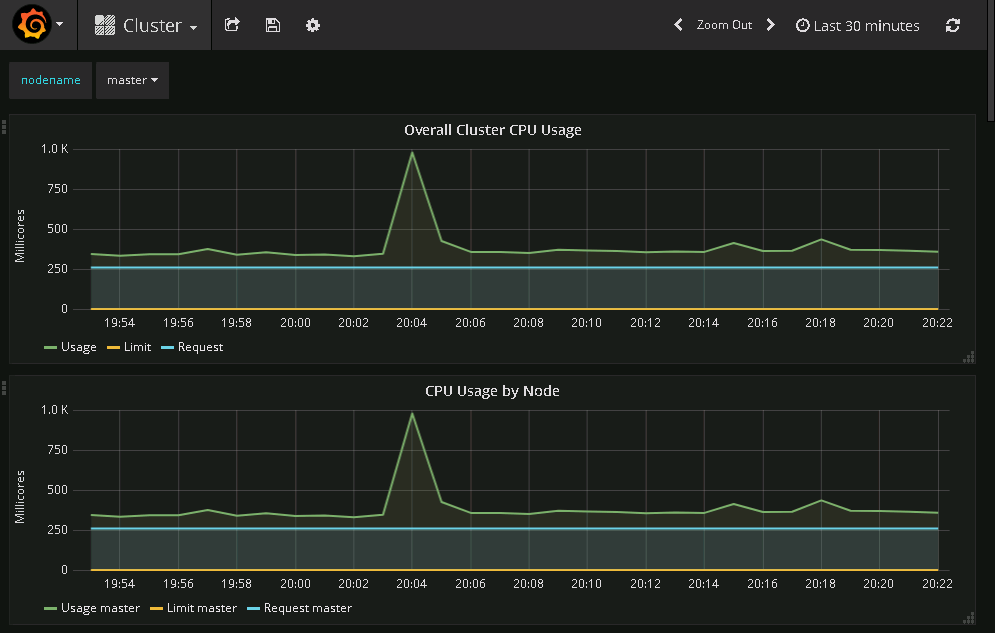

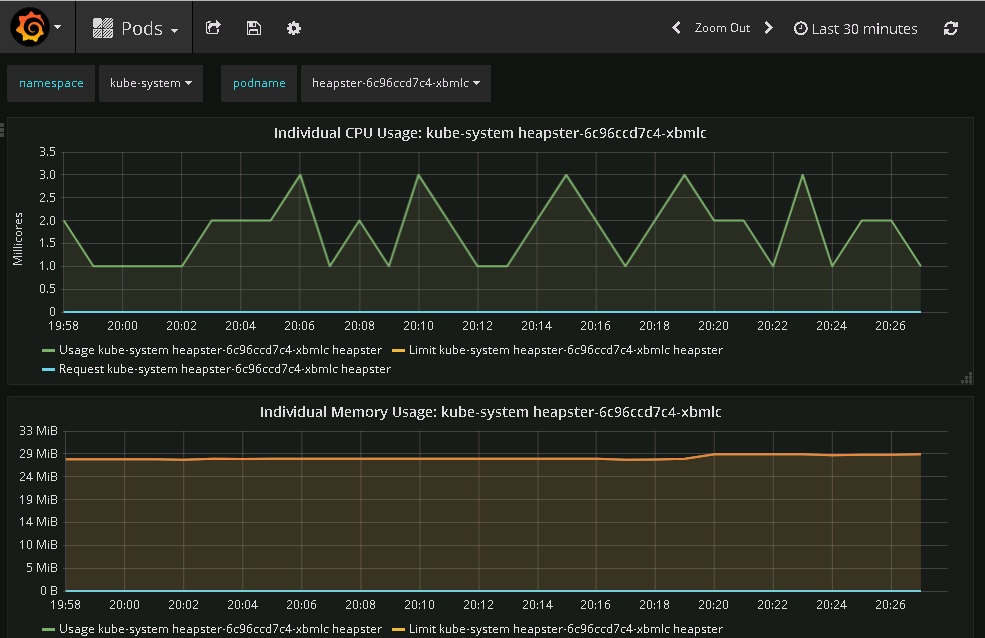

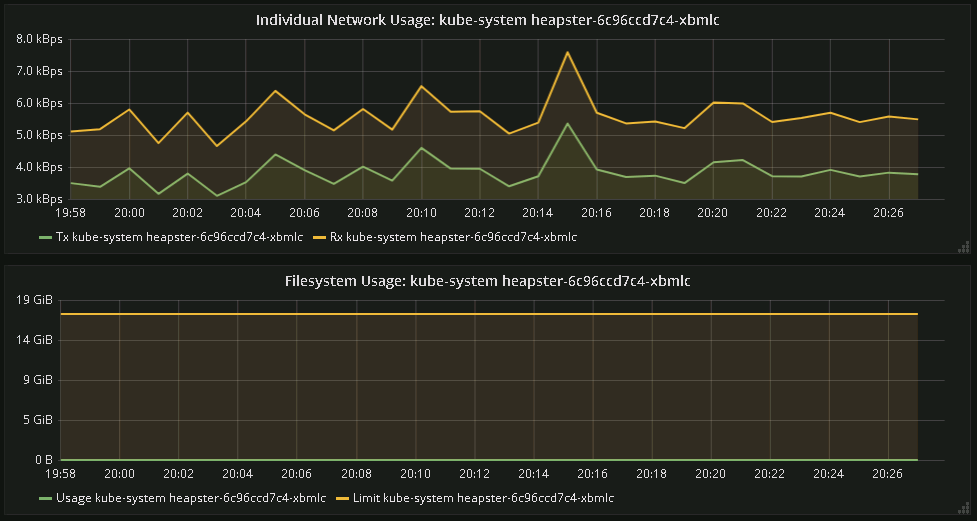

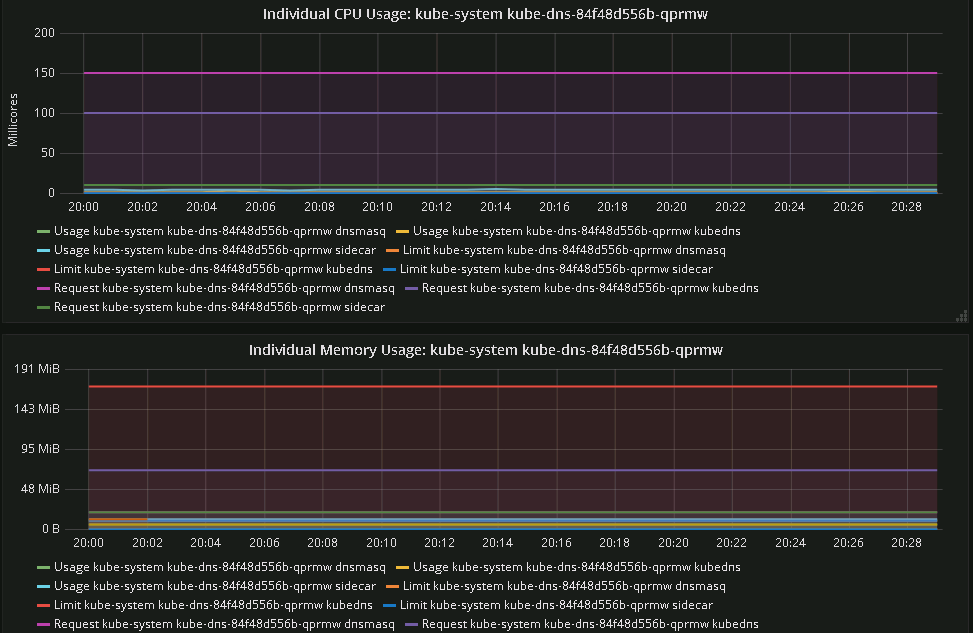

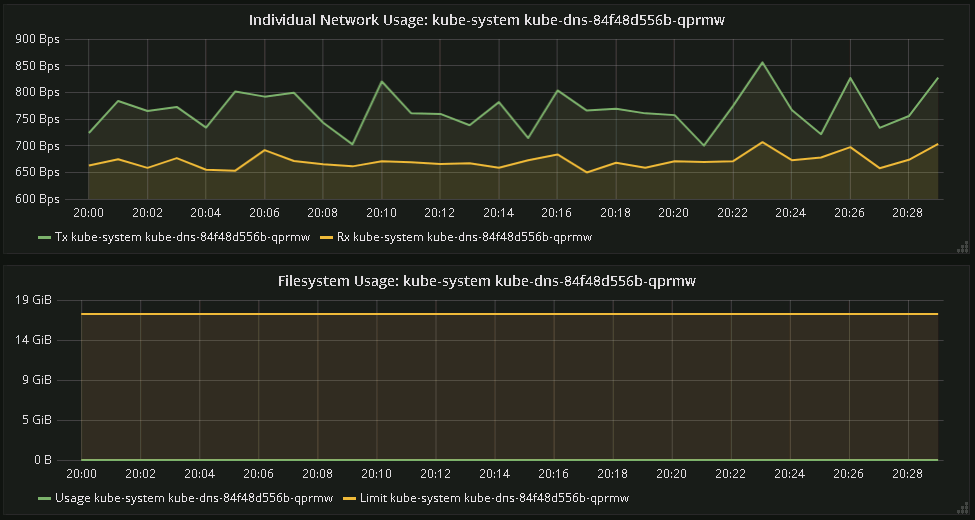

14. View Grafana

Access it at http://localhost:8080/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy


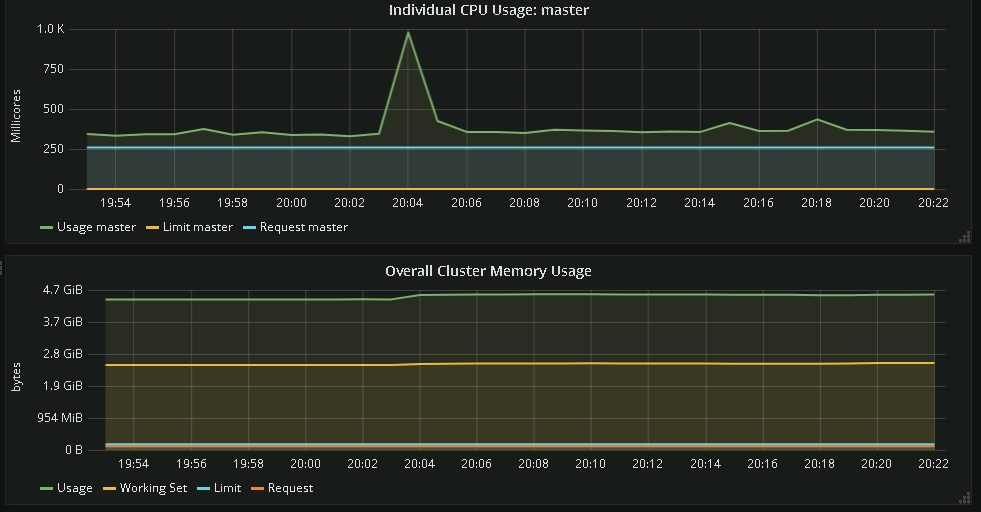

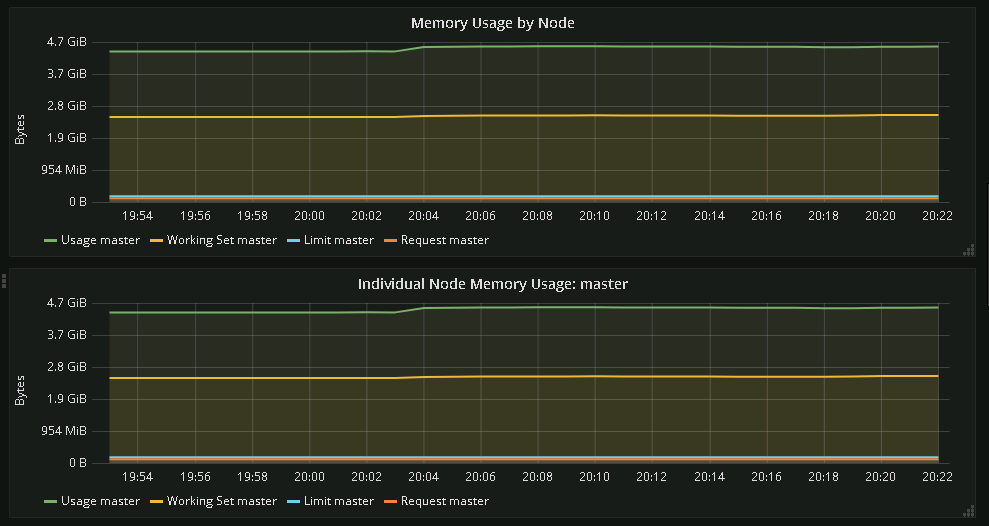

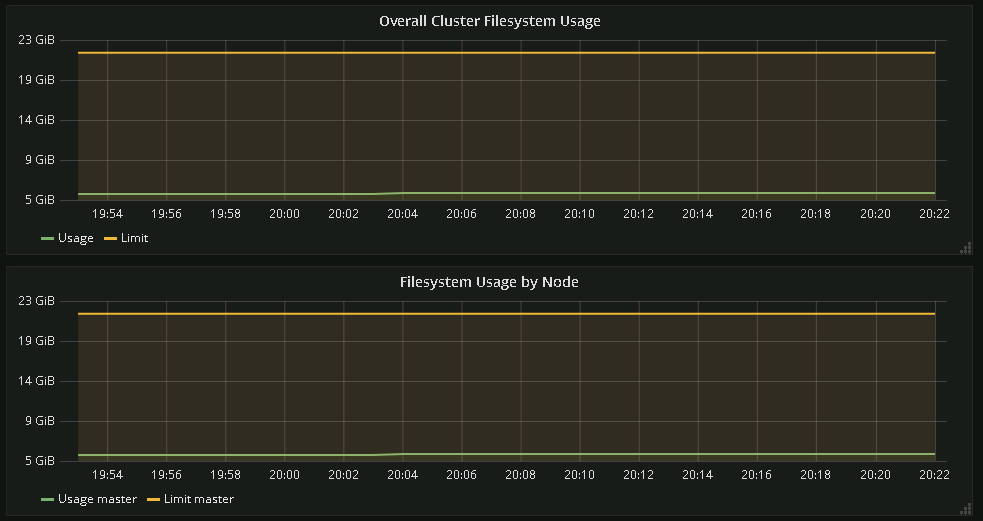

Cluster view


Pod view


Reposted from the 51CTO blog of 结束的伤感; original link: http://blog.51cto.com/wangzhijian/1975356