在使用Apache DolphinScheduler调度执行复杂的HiveSQL时,HQL包含多种海豚无法正确识别的符号,怎么办?本文提供了可行的思路和方法,供用户参考。

一、目的

在Hive中完成复杂JSON,既有对象还有数组而且数组中包含数组的解析后,原本以为没啥问题了,结果在DolphinScheduler中调度又出现了大问题,搞了一天。试了很多种方法,死了无数脑细胞,才解决了这个问题!

二、HiveSQL

insert overwrite table hurys_dc_dwd.dwd_json_statistics partition(day)

select

t1.device_no,

source_device_type,

sn,

model,

create_time,

cycle,

get_json_object(coil_list,'$.laneNo') lane_no,

get_json_object(coil_list,'$.laneType') lane_type,

section_no,

get_json_object(coil_list,'$.coilNo') coil_no,

get_json_object(coil_list,'$.volumeSum') volume_sum,

get_json_object(coil_list,'$.volumePerson') volume_person,

get_json_object(coil_list,'$.volumeCarNon') volume_car_non,

get_json_object(coil_list,'$.volumeCarSmall') volume_car_small,

get_json_object(coil_list,'$.volumeCarMiddle') volume_car_middle,

get_json_object(coil_list,'$.volumeCarBig') volume_car_big,

get_json_object(coil_list,'$.speedAvg') speed_avg,

get_json_object(coil_list,'$.speed85') speed_85,

get_json_object(coil_list,'$.timeOccupancy') time_occupancy,

get_json_object(coil_list,'$.averageHeadway') average_headway,

get_json_object(coil_list,'$.averageGap') average_gap,

substr(create_time,1,10) day

from (select

get_json_object(statistics_json,'$.deviceNo') device_no,

get_json_object(statistics_json,'$.sourceDeviceType') source_device_type,

get_json_object(statistics_json,'$.sn') sn,

get_json_object(statistics_json,'$.model') model,

get_json_object(statistics_json,'$.createTime') create_time ,

get_json_object(statistics_json,'$.data.cycle') cycle,

get_json_object(replace(replace(section_list,':{',':[{'),'}}','}]}'),'$.sectionNo') section_no,

section_list

from hurys_dc_ods.ods_statistics

lateral view explode(split(replace(replace(replace(get_json_object(statistics_json,'$.data.sectionList'),

'[',''),']',''),'},{"sectionNo"','}|{"sectionNo"'),"\\|")) tf as section_list

where day='2024-07-18' -- date_sub(current_date(), 1) -- '2024-07-18' --

) as t1

lateral view explode(split(replace(replace(replace(get_json_object(replace(replace(section_list,

':{',':[{'),'}}','}]}'),'$.coilList'),'[',''),']',''),'},','}|'),"\\|")) tf1 as coil_list

where substr(create_time,1,10) = '2024-07-18' --date_sub(current_date(), 1) --'2024-07-17'

;

三、原先海豚的任务调度方式

在shell脚本里添加HiveSQL语句

#! /bin/bash

source /etc/profile

nowdate=`date --date='0 days ago' "+%Y%m%d"`

yesdate=`date -d yesterday +%Y-%m-%d`

hive -e "

use hurys_dc_dwd;

set hive.vectorized.execution.enabled=false;

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.exec.max.dynamic.partitions.pernode=1000;

set hive.exec.max.dynamic.partitions=1500;

with t1 as(

select

get_json_object(statistics_json,'$.deviceNo') device_no,

get_json_object(statistics_json,'$.sourceDeviceType') source_device_type,

get_json_object(statistics_json,'$.sn') sn,

get_json_object(statistics_json,'$.model') model,

get_json_object(statistics_json,'$.createTime') create_time ,

get_json_object(statistics_json,'$.data.cycle') cycle,

get_json_object(replace(replace(section_list,':{',':[{'),'}}','}]}'),'$.sectionNo') section_no,

section_list

from hurys_dc_ods.ods_statistics

lateral view explode(split(replace(replace(replace(get_json_object(statistics_json,'$.data.sectionList'),'[',''),']',''),'},{"sectionNo"','}|{"sectionNo"'),"\\\\|")) tf as section_list

where day='$yesdate'

)

insert overwrite table hurys_dc_dwd.dwd_json_statistics partition(day)

select

t1.device_no,

source_device_type,

sn,

model,

substr(create_time,1,19) create_time ,

cycle,

get_json_object(coil_list,'$.laneNo') lane_no,

get_json_object(coil_list,'$.laneType') lane_type,

section_no,

get_json_object(coil_list,'$.coilNo') coil_no,

get_json_object(coil_list,'$.volumeSum') volume_sum,

get_json_object(coil_list,'$.volumePerson') volume_person,

get_json_object(coil_list,'$.volumeCarNon') volume_car_non,

get_json_object(coil_list,'$.volumeCarSmall') volume_car_small,

get_json_object(coil_list,'$.volumeCarMiddle') volume_car_middle,

get_json_object(coil_list,'$.volumeCarBig') volume_car_big,

get_json_object(coil_list,'$.speedAvg') speed_avg,

get_json_object(coil_list,'$.speed85') speed_85,

get_json_object(coil_list,'$.timeOccupancy') time_occupancy,

get_json_object(coil_list,'$.averageHeadway') average_headway,

get_json_object(coil_list,'$.averageGap') average_gap,

substr(create_time,1,10) day

from t1

lateral view explode(split(replace(replace(replace(get_json_object(replace(replace(section_list,':{',':[{'),'}}','}]}'),'$.coilList'),'[',''),']',''),'},','}|'),"\\\\|")) tf1 as coil_list

where substr(create_time,1,10) ='$yesdate'

"

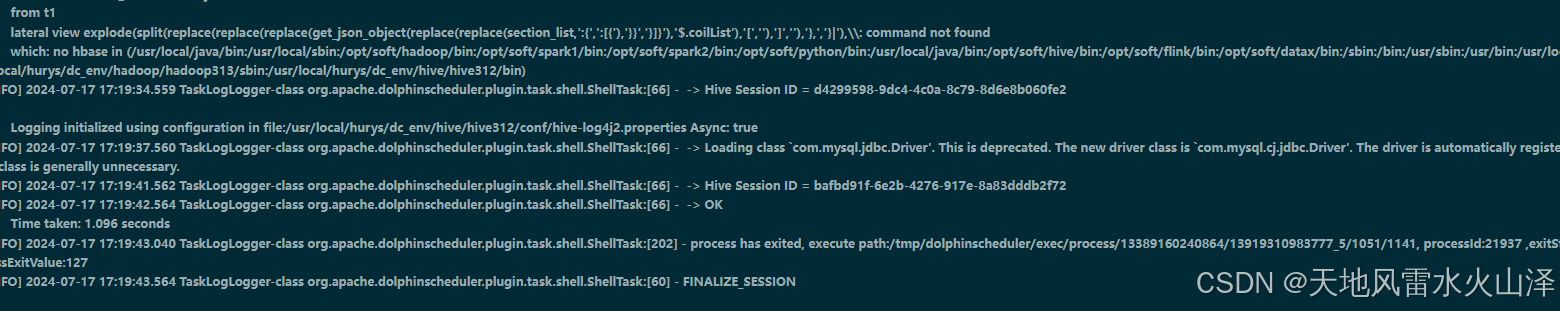

四、原先方式报错日志

![file]()

DolphinScheduler无法正确识别HiveSQL里解析复杂JSON的多种符号。

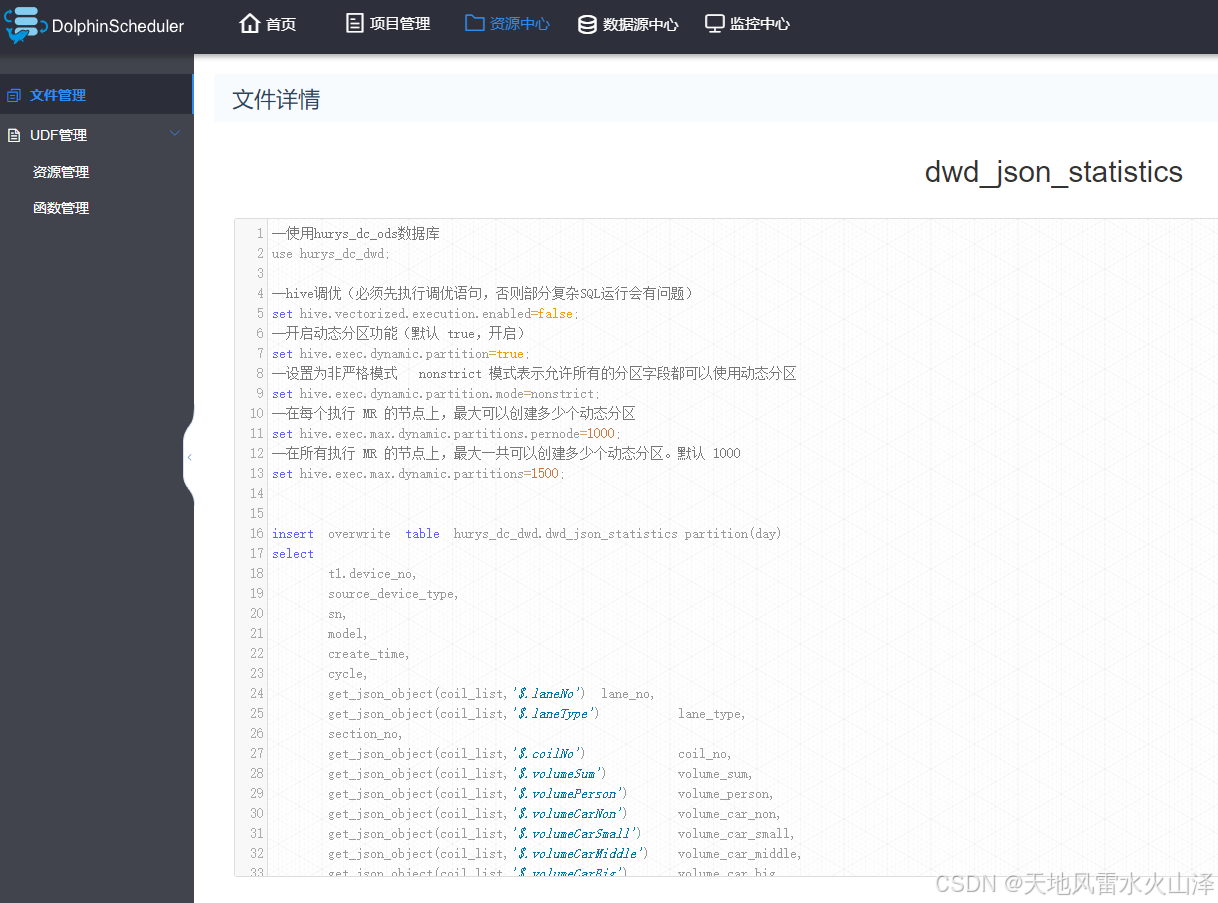

五、解决方式

把HiveSQL放在一个SQL文件里,然后在脚本里是执行Hive的sourceSQL文件。

1 SQL文件

![file]()

--使用hurys_dc_ods数据库 use hurys_dc_dwd;

--hive调优(必须先执行调优语句,否则部分复杂SQL运行会有问题) set hive.vectorized.execution.enabled=false; --开启动态分区功能(默认 true,开启) set hive.exec.dynamic.partition=true; --设置为非严格模式 nonstrict 模式表示允许所有的分区字段都可以使用动态分区 set hive.exec.dynamic.partition.mode=nonstrict; --在每个执行 MR 的节点上,最大可以创建多少个动态分区 set hive.exec.max.dynamic.partitions.pernode=1000; --在所有执行 MR 的节点上,最大一共可以创建多少个动态分区。默认 1000 set hive.exec.max.dynamic.partitions=1500;

insert overwrite table hurys_dc_dwd.dwd_json_statistics partition(day)

select

t1.device_no,

source_device_type,

sn,

model,

create_time,

cycle,

get_json_object(coil_list,'$.laneNo') lane_no,

get_json_object(coil_list,'$.laneType') lane_type,

section_no,

get_json_object(coil_list,'$.coilNo') coil_no,

get_json_object(coil_list,'$.volumeSum') volume_sum,

get_json_object(coil_list,'$.volumePerson') volume_person,

get_json_object(coil_list,'$.volumeCarNon') volume_car_non,

get_json_object(coil_list,'$.volumeCarSmall') volume_car_small,

get_json_object(coil_list,'$.volumeCarMiddle') volume_car_middle,

get_json_object(coil_list,'$.volumeCarBig') volume_car_big,

get_json_object(coil_list,'$.speedAvg') speed_avg,

get_json_object(coil_list,'$.speed85') speed_85,

get_json_object(coil_list,'$.timeOccupancy') time_occupancy,

get_json_object(coil_list,'$.averageHeadway') average_headway,

get_json_object(coil_list,'$.averageGap') average_gap,

substr(create_time,1,10) day

from (select

get_json_object(statistics_json,'$.deviceNo') device_no,

get_json_object(statistics_json,'$.sourceDeviceType') source_device_type,

get_json_object(statistics_json,'$.sn') sn,

get_json_object(statistics_json,'$.model') model,

get_json_object(statistics_json,'$.createTime') create_time ,

get_json_object(statistics_json,'$.data.cycle') cycle,

get_json_object(replace(replace(section_list,':{',':[{'),'}}','}]}'),'$.sectionNo') section_no,

section_list

from hurys_dc_ods.ods_statistics

lateral view explode(split(replace(replace(replace(get_json_object(statistics_json,'$.data.sectionList'),'[',''),']',''),'},{"sectionNo"','}|{"sectionNo"'),"\\|")) tf as section_list

where day= date_sub(current_date(), 1)

) as t1

lateral view explode(split(replace(replace(replace(get_json_object(replace(replace(section_list,':{',':[{'),'}}','}]}'),'$.coilList'),'[',''),']',''),'},','}|'),"\\|")) tf1 as coil_list

where substr(create_time,1,10) = date_sub(current_date(), 1)

;

2 海豚任务执行脚本

![file]()

#! /bin/bash

source /etc/profile

nowdate=`date --date='0 days ago' "+%Y-%m-%d"`

yesdate=`date -d yesterday +%Y-%m-%d`

hive -e "

source dwd_json_statistics.sql

"

3 执行任务,验证结果

![file]()

终于解决了!以后碰到类似调度器识别不了SQL里符号的问题,可以用这个方法,把SQL放在SQL文件里,然后在脚本里执行这个SQL文件,这样就能规避这类问题了。

转载自天地风雷水火山泽 原文链接:https://blog.csdn.net/tiantang2renjian/article/details/140605840

本文由 白鲸开源科技 提供发布支持!