(1) Deploy the Agent

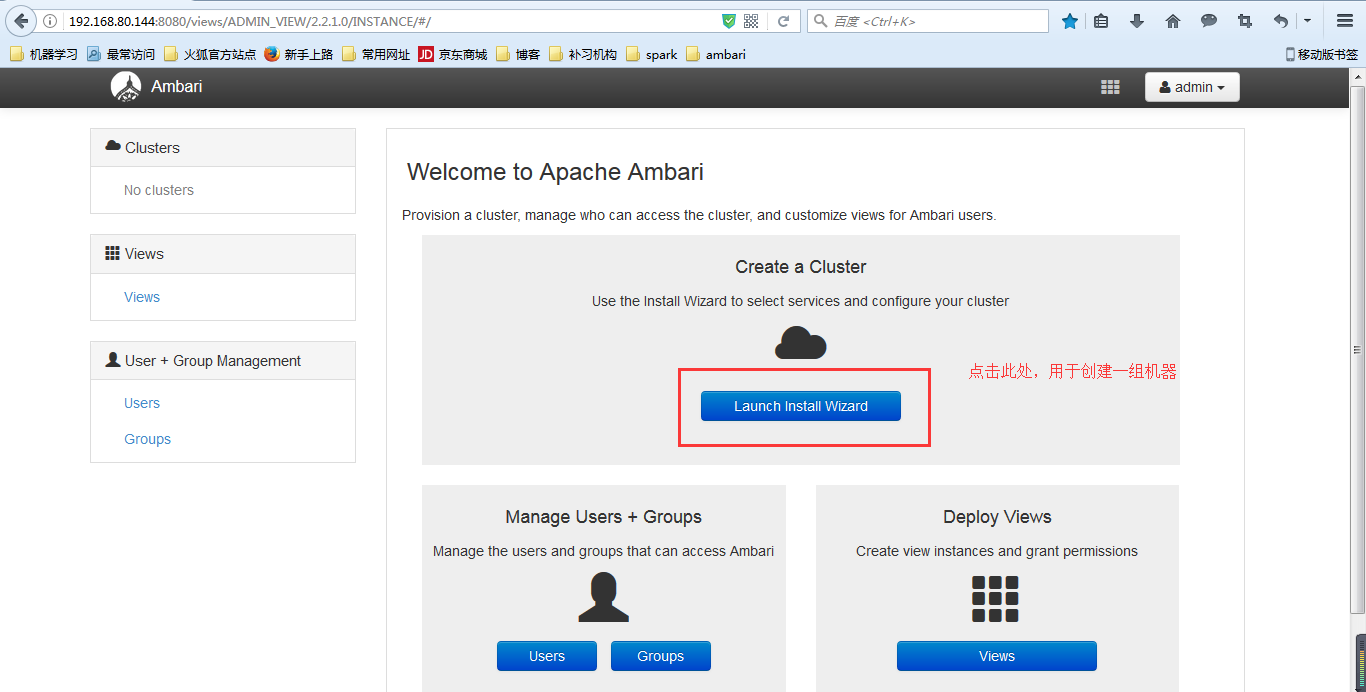

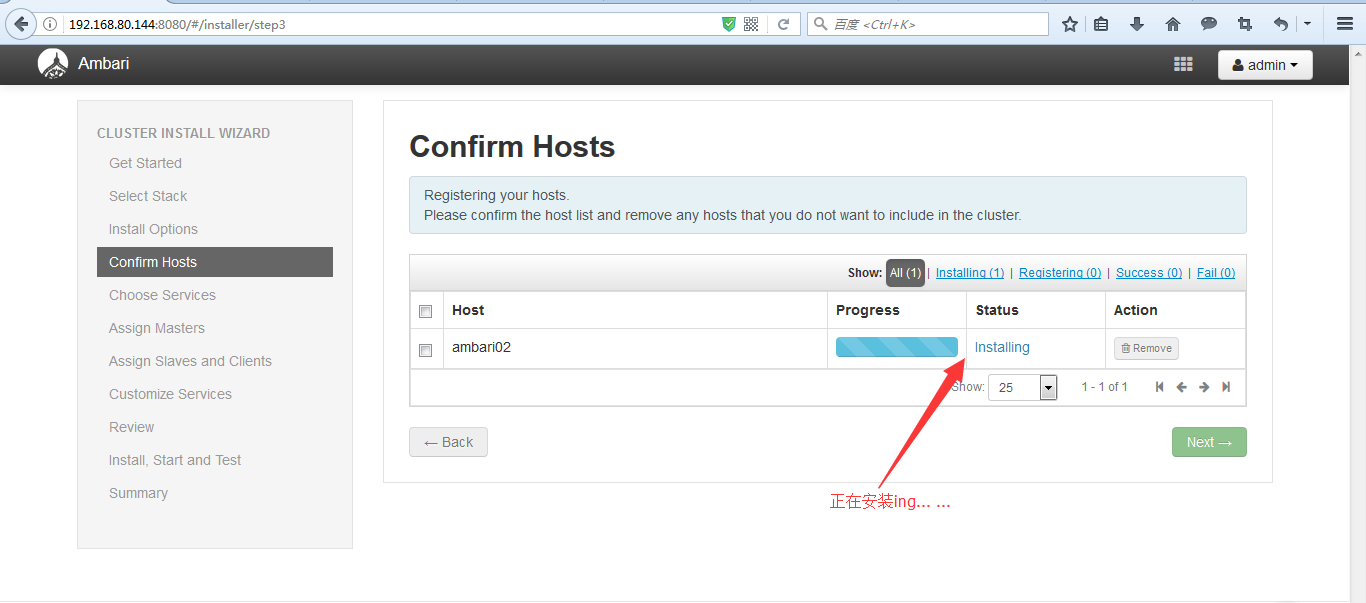

1) Register and install the agent

http://192.168.80.144:8080/views/ADMIN_VIEW/2.2.1.0/INSTANCE/#/

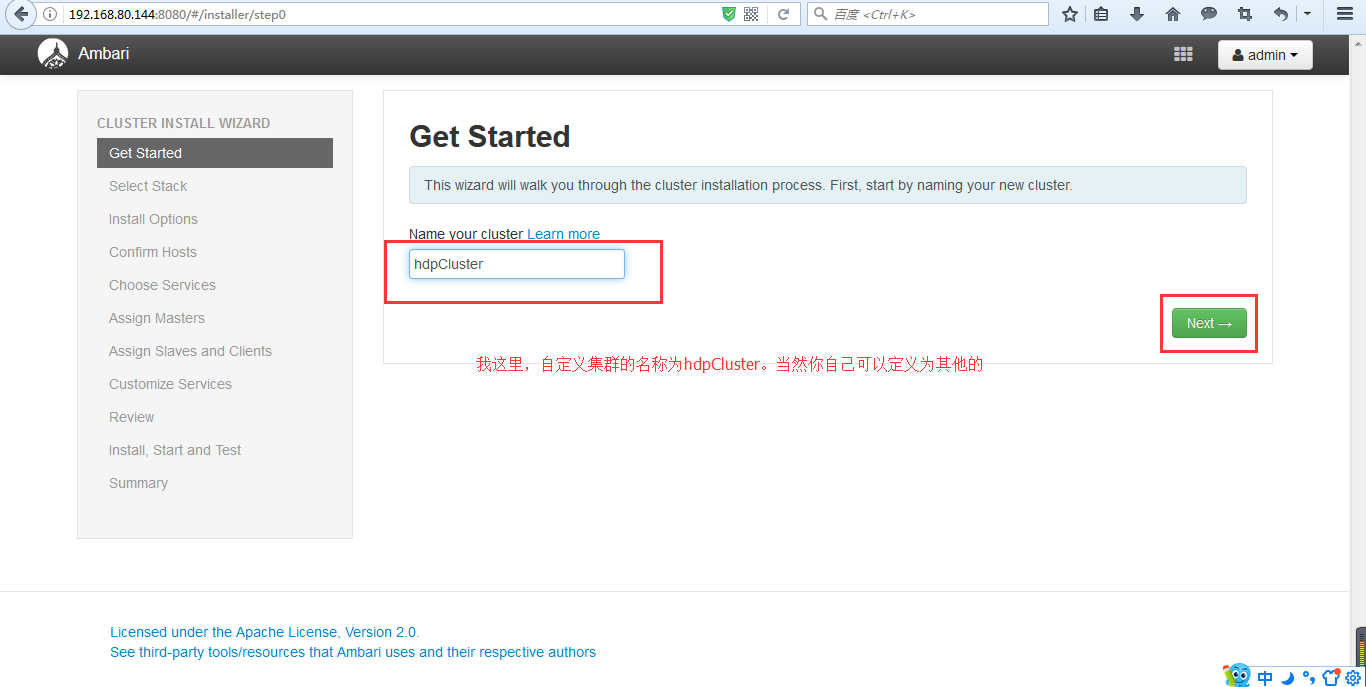

2) Give the cluster a name. I simply named it hdpCluster here. Then click Next.

http://192.168.80.144:8080/#/installer/step0

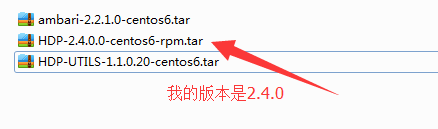

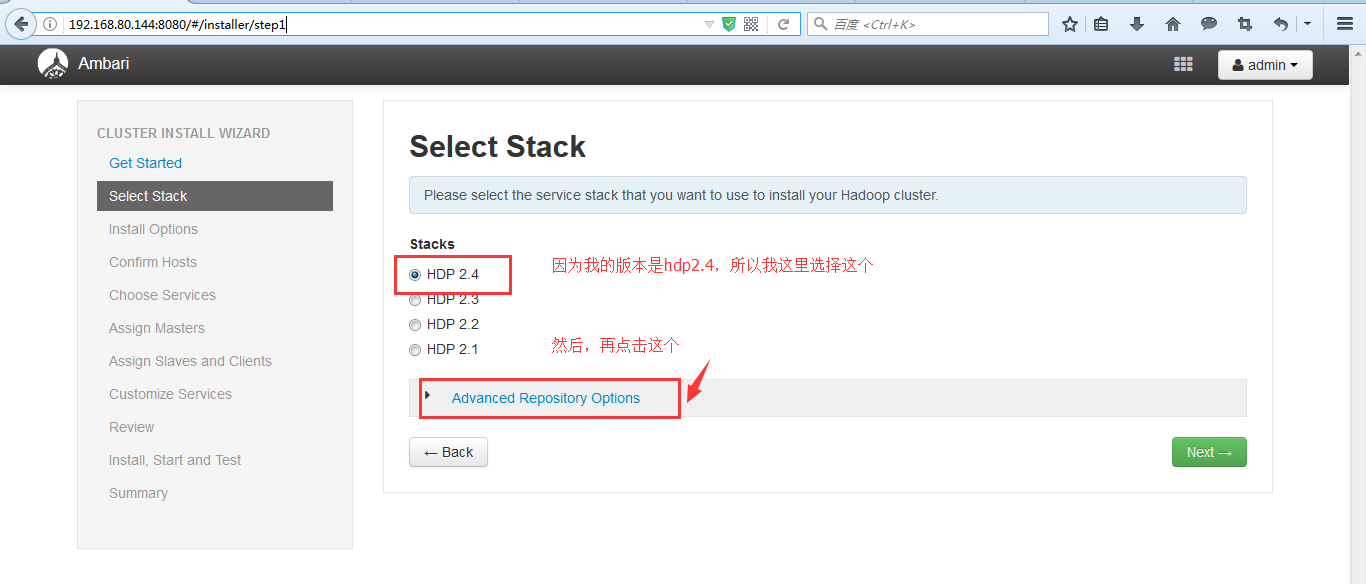

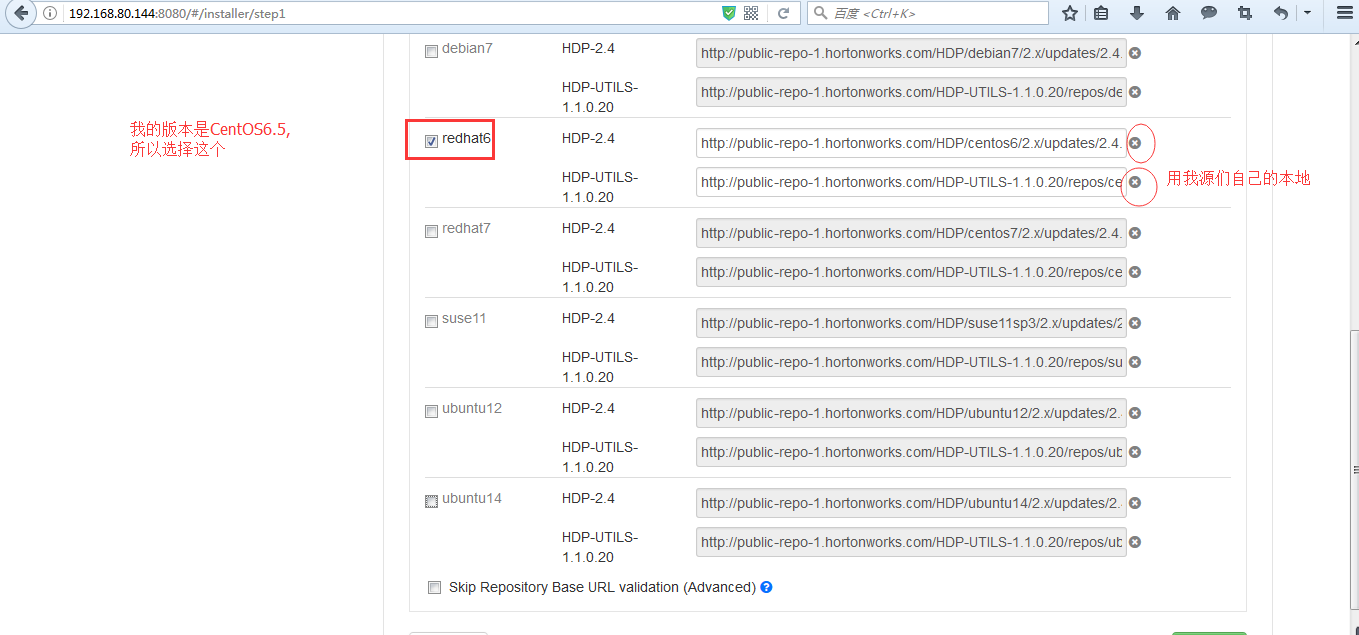

3) Select the HDP 2.4 version and open the advanced repository options. Make sure the version matches the one you downloaded and installed.

http://192.168.80.144:8080/#/installer/step1

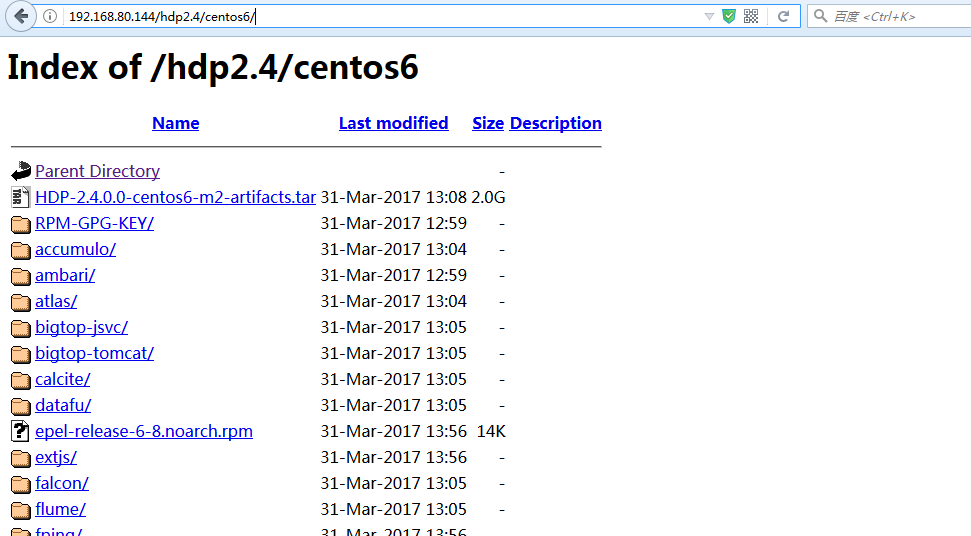

The base URL needs to be changed to the following address:

http://192.168.80.144/hdp2.4/centos6/
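Before clicking Next, it can help to confirm that this address really serves a yum repository. The sketch below only builds the metadata URL that yum would request. It assumes the base URL points directly at a directory containing `repodata/`, which may not match your layout; the helper name `repomd_url` is made up for illustration.

```shell
#!/usr/bin/env bash
# Build the metadata URL yum would fetch for a given base URL.
# Assumption: the base URL points directly at a directory that contains repodata/.
repomd_url() {
  local base="${1%/}"          # strip one trailing slash, if present
  printf '%s/repodata/repomd.xml\n' "$base"
}

# Print the URL to probe for the local HDP repo used in this guide.
repomd_url "http://192.168.80.144/hdp2.4/centos6/"

# To probe it by hand (needs network access to the repo host):
#   curl -fsI "$(repomd_url "http://192.168.80.144/hdp2.4/centos6/")"
```

If the `curl` probe returns an HTTP 200 header, the wizard should be able to read the repository from the same address.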

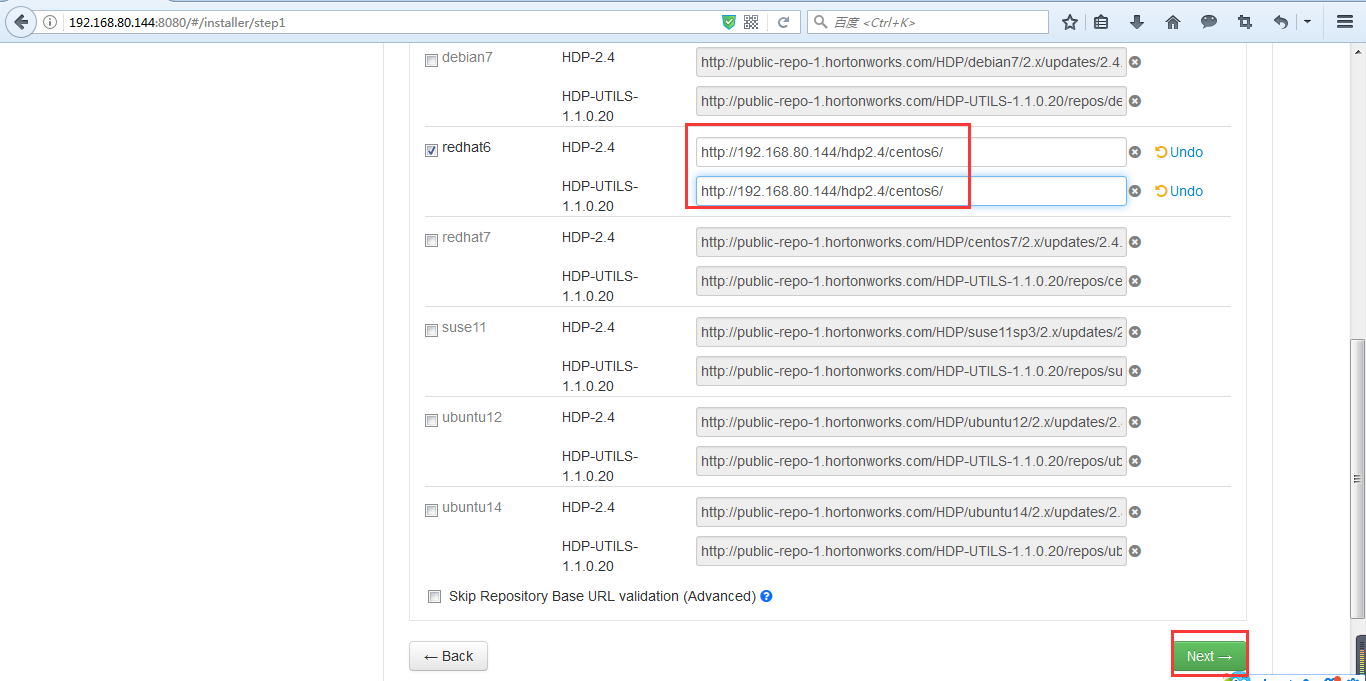

4) Configure the local repository address and click Next.

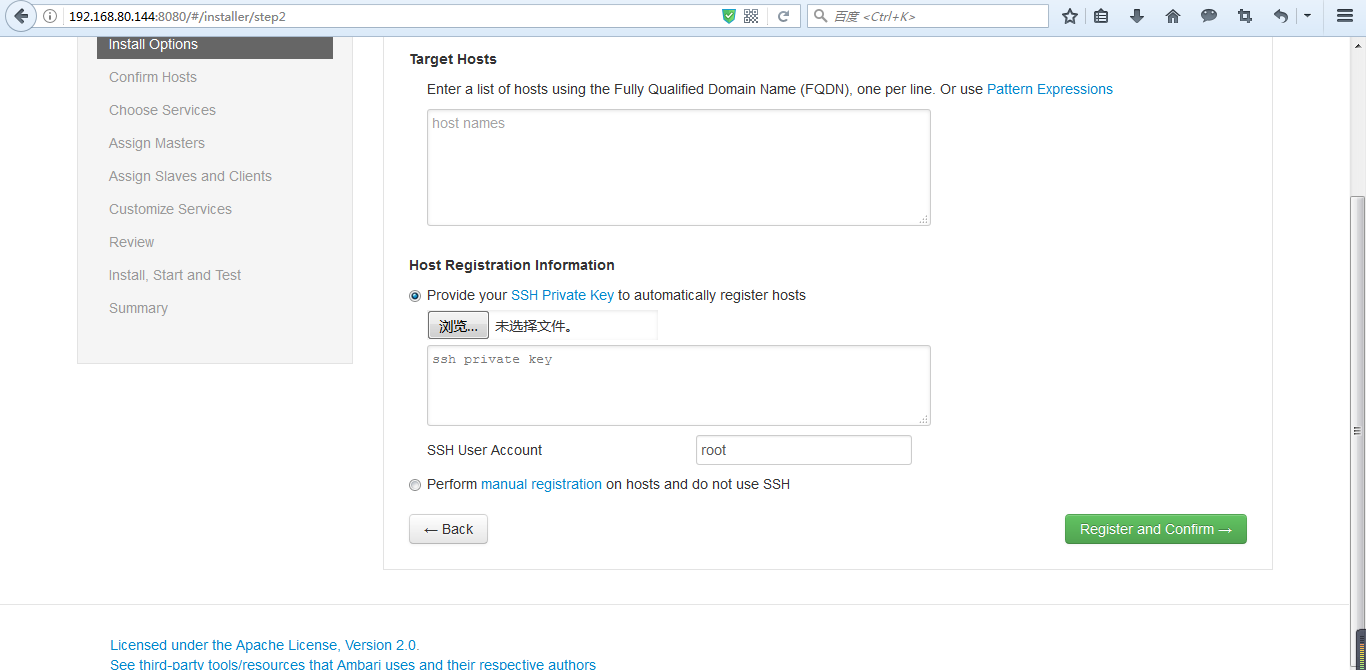

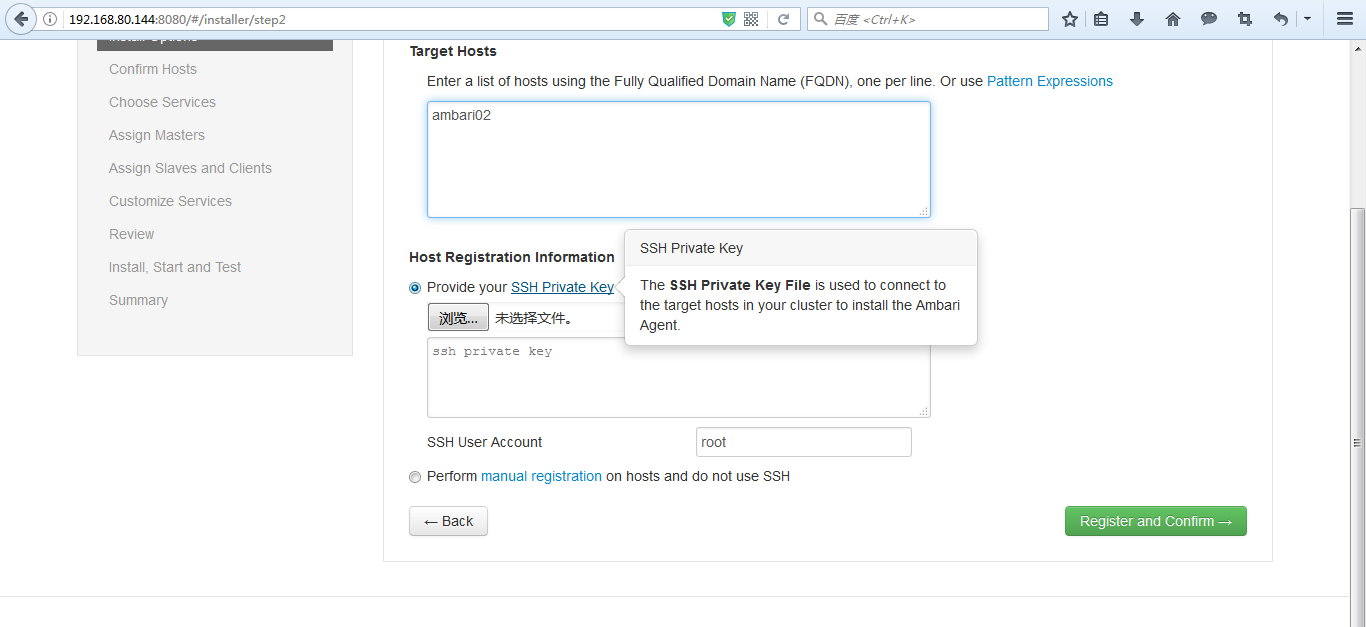

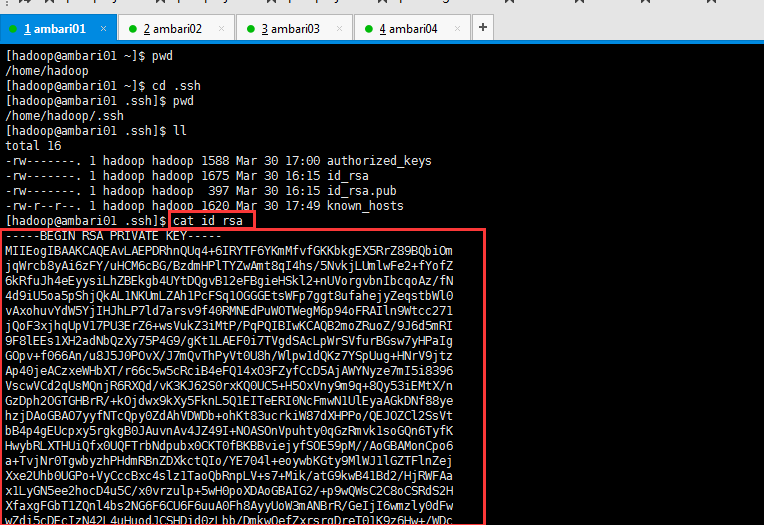

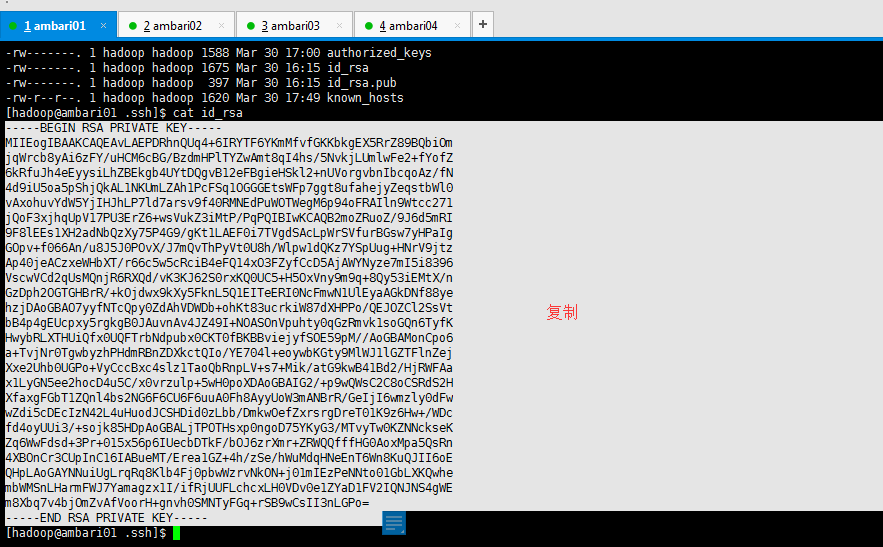

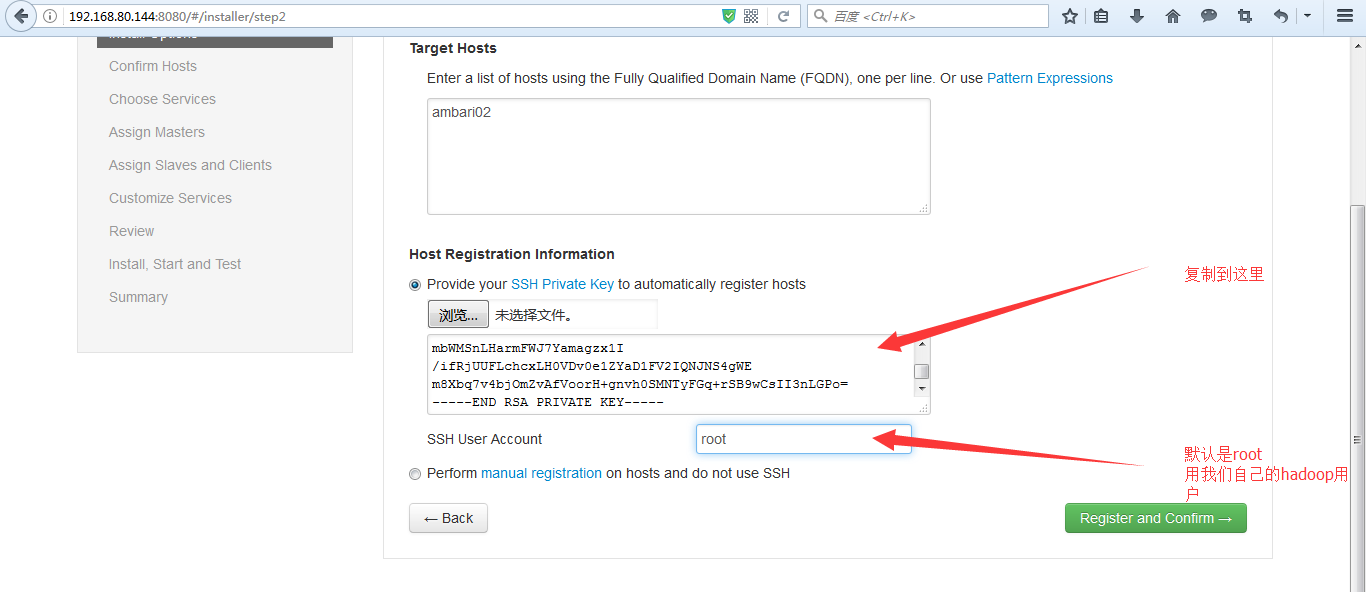

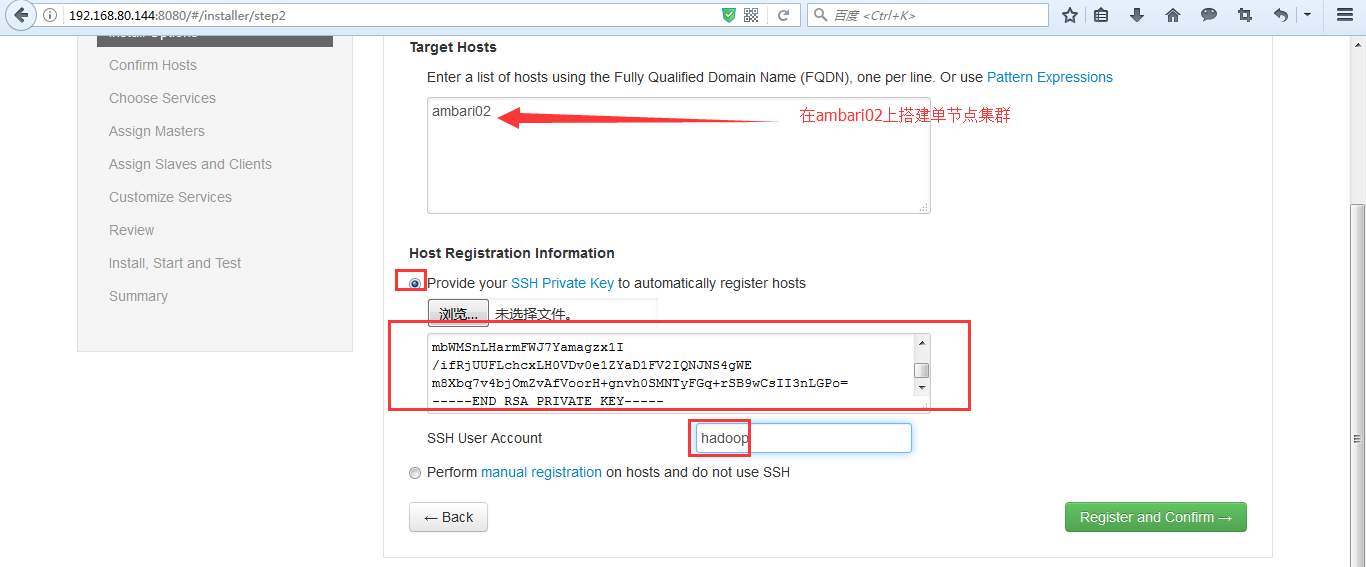

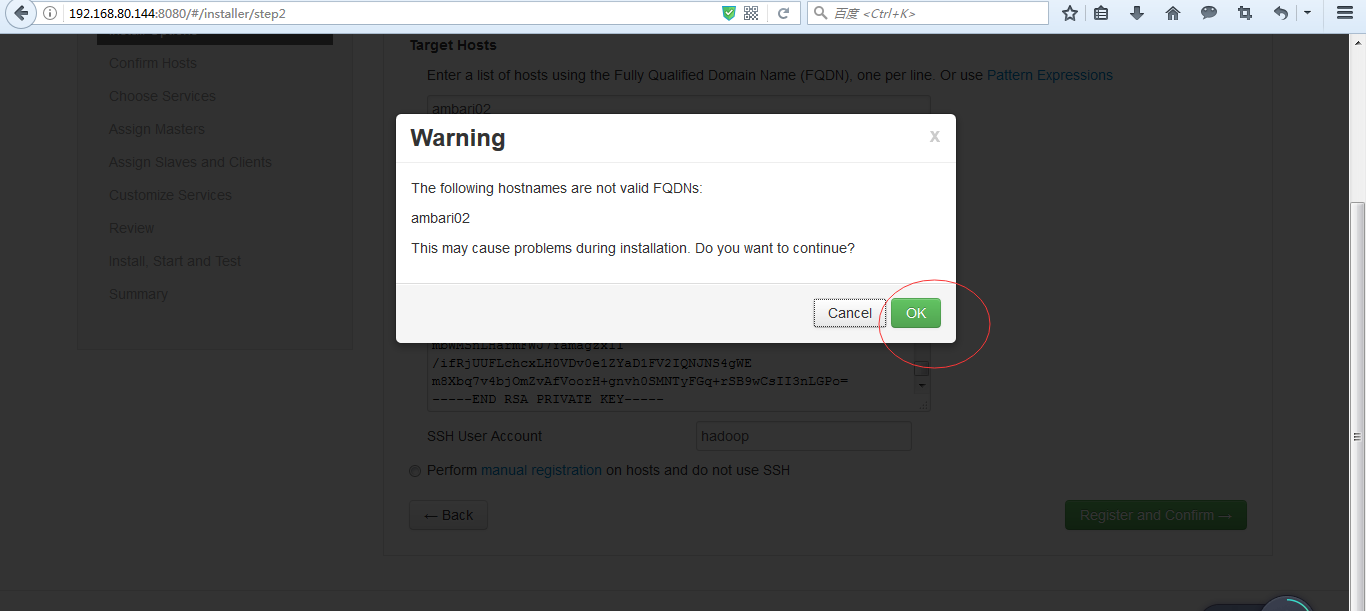

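5) Add the host names of the target hosts and paste in the private key of the ambari-server machine, so that ambari-server can reach the other nodes over SSH without a password. Select the matching hadoop user (because we configured passwordless SSH login under the hadoop user), then click Register.

http://192.168.80.144:8080/#/installer/step2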

[hadoop@ambari01 ~]$ pwd

/home/hadoop

[hadoop@ambari01 ~]$ cd .ssh

[hadoop@ambari01 .ssh]$ pwd

/home/hadoop/.ssh

[hadoop@ambari01 .ssh]$ ll

total 16

-rw-------. 1 hadoop hadoop 1588 Mar 30 17:00 authorized_keys

-rw-------. 1 hadoop hadoop 1675 Mar 30 16:15 id_rsa

-rw-------. 1 hadoop hadoop 397 Mar 30 16:15 id_rsa.pub

-rw-r--r--. 1 hadoop hadoop 1620 Mar 30 17:49 known_hosts

[hadoop@ambari01 .ssh]$ cat id_rsa

-----BEGIN RSA PRIVATE KEY-----

MIIEogIBAAKCAQEAvLAEPDRhnQUq4+6IRYTF6YKmMfvfGKKbkgEX5RrZ89BQbiOm

jqWrcb8yAi6zFY/uHCM6cBG/BzdmHPlTYZwAmt8qI4hs/5NvkjLUmlwFe2+fYofZ

6kRfuJh4eEyysiLhZBEkgb4UYtDQgvB12eFBgieHSkl2+nUVorgvbnIbcqoAz/fN

4d9iU5oa5pShjQkAL1NKUmLZAh1PcFSq1OGGGEtsWFp7ggt8ufahejyZeqstbWl0

vAxohuvYdW5YjIHJhLP7ld7arsv9f40RMNEdPuWOTWegM6p94oFRAIln9Wtcc271

jQoF3xjhqUpV17PU3ErZ6+wsVukZ3iMtP/PqPQIBIwKCAQB2moZRuoZ/9J6d5mRI

9F8lEEs1XH2adNbQzXy75P4G9/gKt1LAEF0i7TVgdSAcLpWrSVfurBGsw7yHPaIg

GOpv+f066An/u8J5J0POvX/J7mQvThPyVt0U8h/Wlpw1dQKz7YSpUug+HNrV9jtz

Ap40jeACzxeWHbXT/r66c5w5cRciB4eFQ14xO3FZyfCcD5AjAWYNyze7mI5i8396

VscwVCd2qUsMQnjR6RXQd/vK3KJ62S0rxKQ0UC5+H5OxVny9m9q+8Qy53iEMtX/n

GzDph2OGTGHBrR/+kOjdwx9kXy5FknL5Q1EITeERI0NcFmwN1UlEyaAGkDNf88ye

hzjDAoGBAO7yyfNTcQpy0ZdAhVDWDb+ohKt83ucrkiW87dXHPPo/QEJOZCl2SsVt

bB4p4gEUcpxy5rgkgB0JAuvnAv4JZ49I+NOASOnVpuhty0qGzRmvk1soGQn6TyfK

HwybRLXTHUiQfx0UQFTrbNdpubx0CKT0fBKBBviejyfSOE59pM//AoGBAMonCpo6

a+TvjNr0TgwbyzhPHdmRBnZDXkctQIo/YE704l+eoywbKGty9MlWJ1lGZTFlnZej

Xxe2Uhb0UGPo+VyCccBxc4slz1TaoQbRnpLV+s7+Mik/atG9kwB41Bd2/HjRWFAa

x1LyGN5ee2hocD4u5C/x0vrzulp+5wH0poXDAoGBAIG2/+p9wQWsC2C8oCSRdS2H

XfaxgFGbT1ZQnl4bs2NG6F6CU6F6uuA0Fh8AyyUoW3mANBrR/GeIjI6wmzly0dFw

wZdi5cDEcIzN42L4uHuodJCSHDid0zLbb/DmkwOefZxrsrgDreT01K9z6Hw+/WDc

fd4oyUUi3/+sojk85HDpAoGBALjTPOTHsxp0ngoD75YKyG3/MTvyTw0KZNNckseK

Zq6WwFdsd+3Pr+015x56p6IUecbDTkF/bOJ6zrXmr+ZRWQQfffHG0AoxMpa5QsRn

4XBOnCr3CUpInC16IABueMT/Erea1GZ+4h/zSe/hWuMdqHNeEnT6Wn8KuQJII6oE

QHpLAoGAYNNuiUgLrqRq8Klb4Fj0pbwWzrvNkON+j01mIEzPeNNto01GbLXKQwhe

mbWMSnLHarmFWJ7Yamagzx1I/ifRjUUFLchcxLH0VDv0e1ZYaD1FV2IQNJNS4gWE

m8Xbq7v4bjOmZvAfVoorH+gnvh0SMNTyFGq+rSB9wCsII3nLGPo=

-----END RSA PRIVATE KEY-----
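The listing above shows id_rsa with mode -rw------- (600). The SSH client refuses private keys that other users can read, so it is worth checking the mode before pasting the key into the wizard. A minimal sketch, assuming GNU `stat` (as shipped on CentOS); `check_key_mode` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Print "ok" if the file's mode is 600, otherwise report the actual mode.
# Relies on GNU stat's -c option.
check_key_mode() {
  local mode
  mode="$(stat -c '%a' "$1")" || return 1
  if [ "$mode" = "600" ]; then
    echo "ok"
  else
    echo "mode is $mode, expected 600"
  fi
}

# Example (run as the hadoop user on the Ambari server):
#   check_key_mode /home/hadoop/.ssh/id_rsa
```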

After a while, the result is as follows:

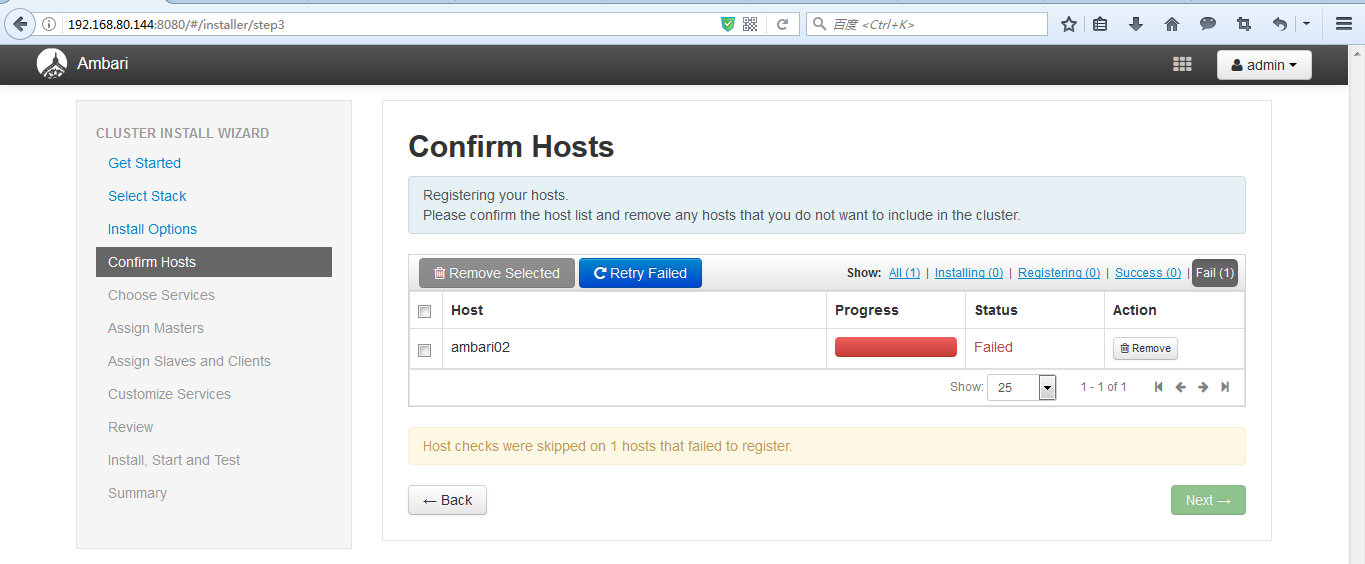

The reason is as follows:

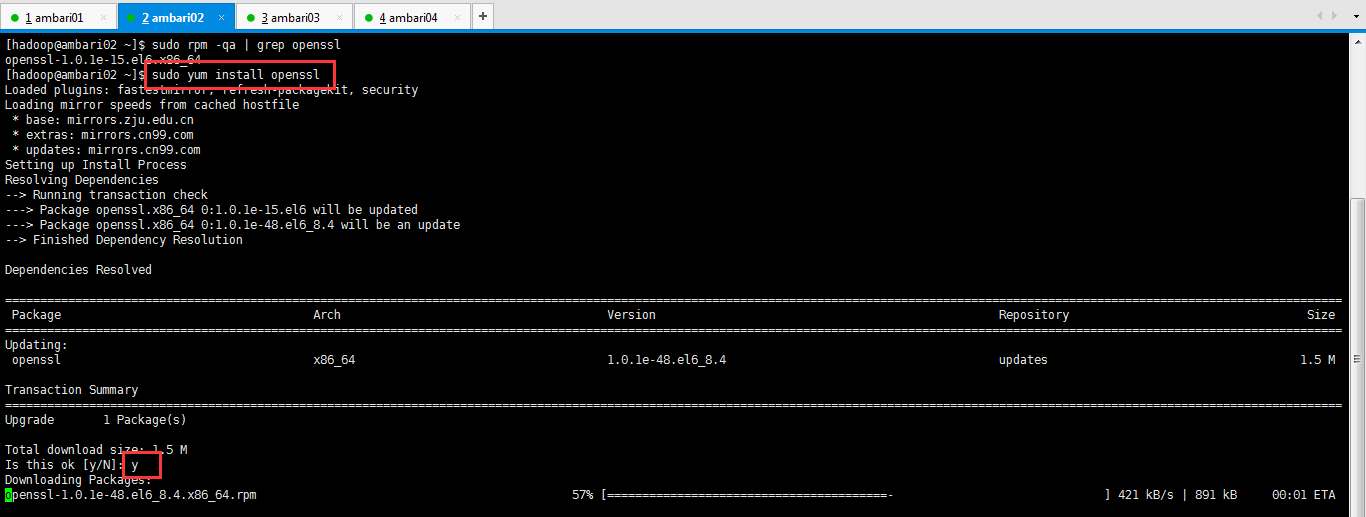

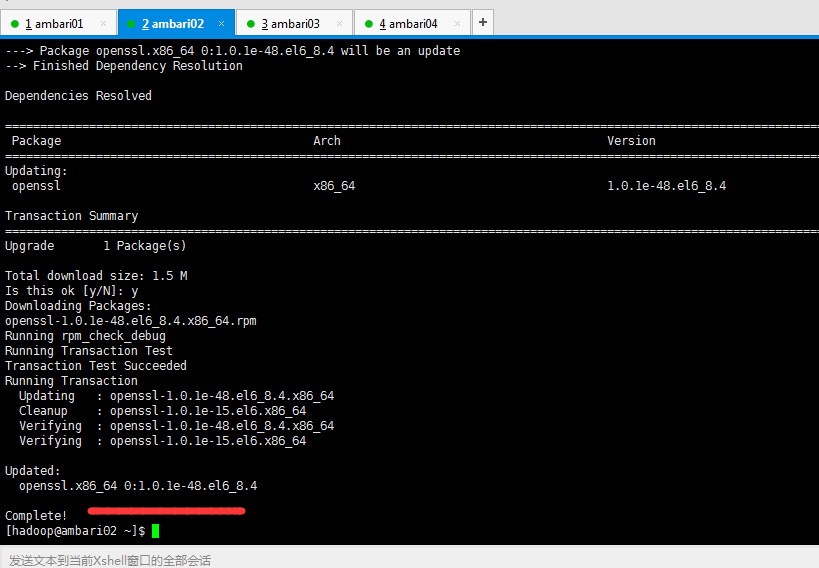

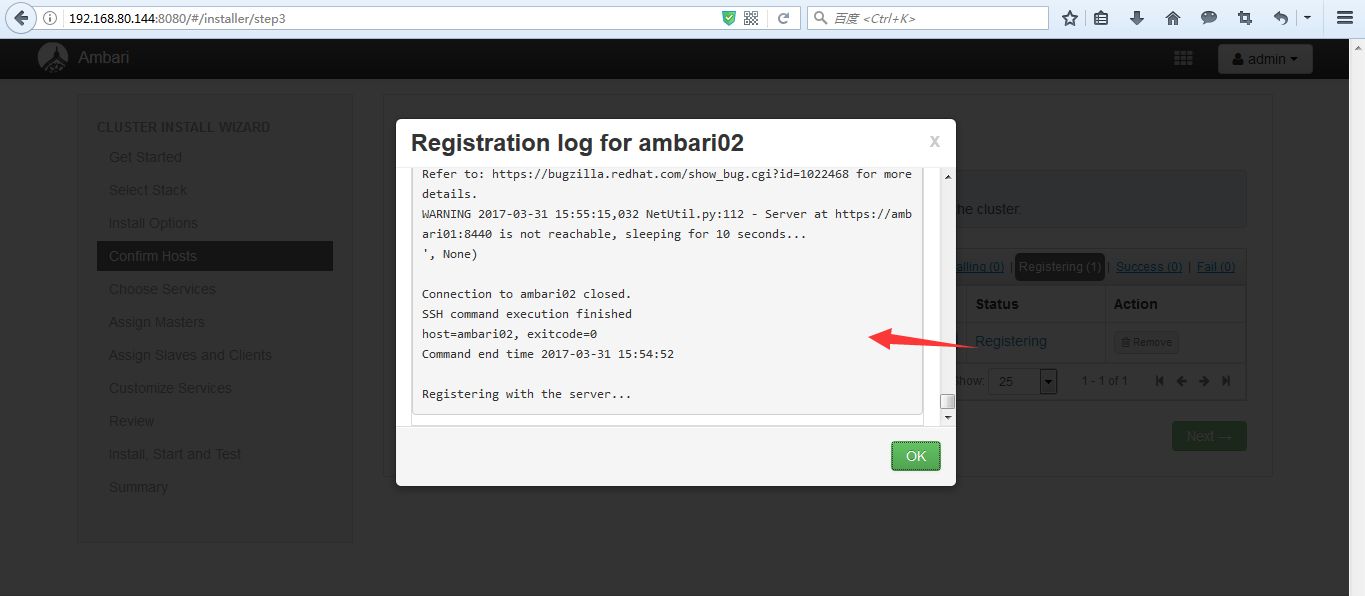

6) Registration may run into problems, for example an OpenSSL version issue. In that case, just update OpenSSL on the affected node and then register again.

[hadoop@ambari02 .ssh]$ sudo rpm -qa | grep openssl

openssl-1.0.1e-15.el6.x86_64

[hadoop@ambari01 .ssh]$ sudo yum install openssl

Loaded plugins: fastestmirror, refresh-packagekit, security

Setting up Install Process

Loading mirror speeds from cached hostfile

* base: mirrors.zju.edu.cn

* extras: mirrors.zju.edu.cn

* updates: mirrors.zju.edu.cn

Resolving Dependencies

--> Running transaction check

---> Package openssl.x86_64 0:1.0.1e-15.el6 will be updated

---> Package openssl.x86_64 0:1.0.1e-48.el6_8.4 will be an update

--> Finished Dependency Resolution

Dependencies Resolved

===============================================================================================================================================================================================

Package Arch Version Repository Size

===============================================================================================================================================================================================

Updating:

openssl x86_64 1.0.1e-48.el6_8.4 updates 1.5 M

Transaction Summary

===============================================================================================================================================================================================

Upgrade 1 Package(s)

Total download size: 1.5 M

Is this ok [y/N]: y

Downloading Packages:

openssl-1.0.1e-48.el6_8.4.x86_64.rpm | 1.5 MB 00:01

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Updating : openssl-1.0.1e-48.el6_8.4.x86_64 1/2

Cleanup : openssl-1.0.1e-15.el6.x86_64 2/2

Verifying : openssl-1.0.1e-48.el6_8.4.x86_64 1/2

Verifying : openssl-1.0.1e-15.el6.x86_64 2/2

Updated:

openssl.x86_64 0:1.0.1e-48.el6_8.4

Complete!

[hadoop@ambari02 .ssh]$
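To decide up front which nodes need this update, the release number can be pulled out of the `rpm -qa` output shown above. The sketch below is only an illustration: the helper names are hypothetical, and the minimum release of 16 is an assumption based on the commonly cited Ambari requirement (OpenSSL 1.0.1, build 16 or later), so check it against the documentation for your Ambari version.

```shell
#!/usr/bin/env bash
# Extract the release number from a package string such as
# openssl-1.0.1e-15.el6.x86_64  ->  15
openssl_release() {
  local pkg="$1" rel
  rel="${pkg##*-}"      # drop everything up to the last hyphen -> 15.el6.x86_64
  echo "${rel%%.*}"     # keep the part before the first dot    -> 15
}

# Succeeds when the package release is below the given minimum.
# $1 = package string, $2 = minimum acceptable release (an assumption, see above)
needs_update() {
  [ "$(openssl_release "$1")" -lt "$2" ]
}

# Check the version that was installed on ambari02 before the update.
if needs_update "openssl-1.0.1e-15.el6.x86_64" 16; then
  echo "update needed"
fi
```

On a real node the package string would come from `rpm -q openssl` instead of a literal.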

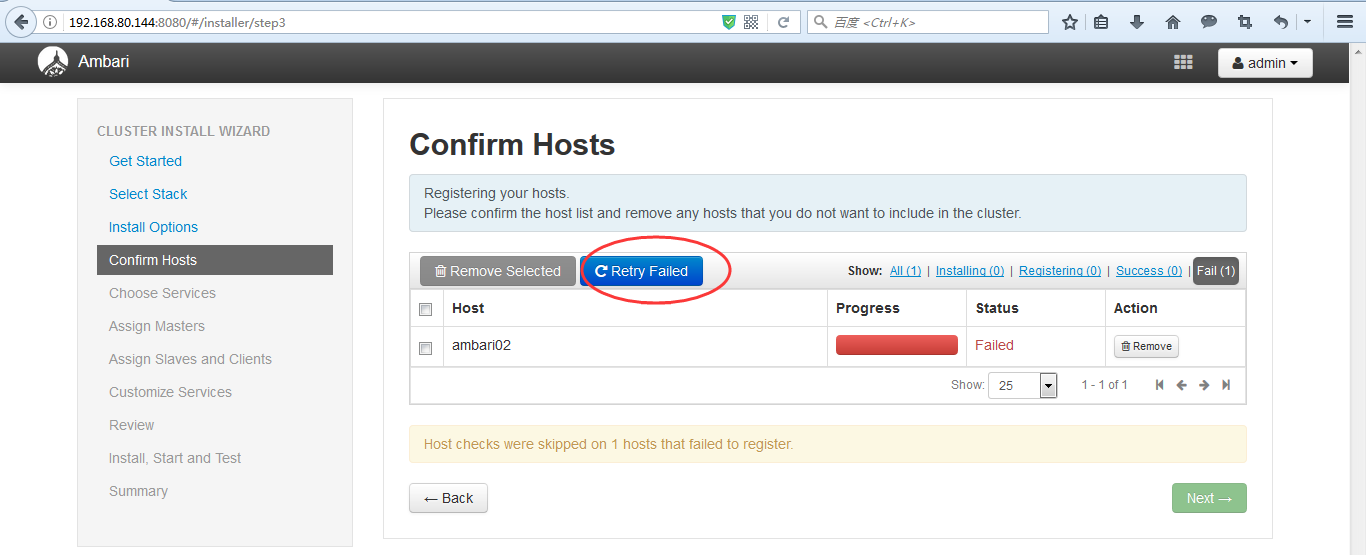

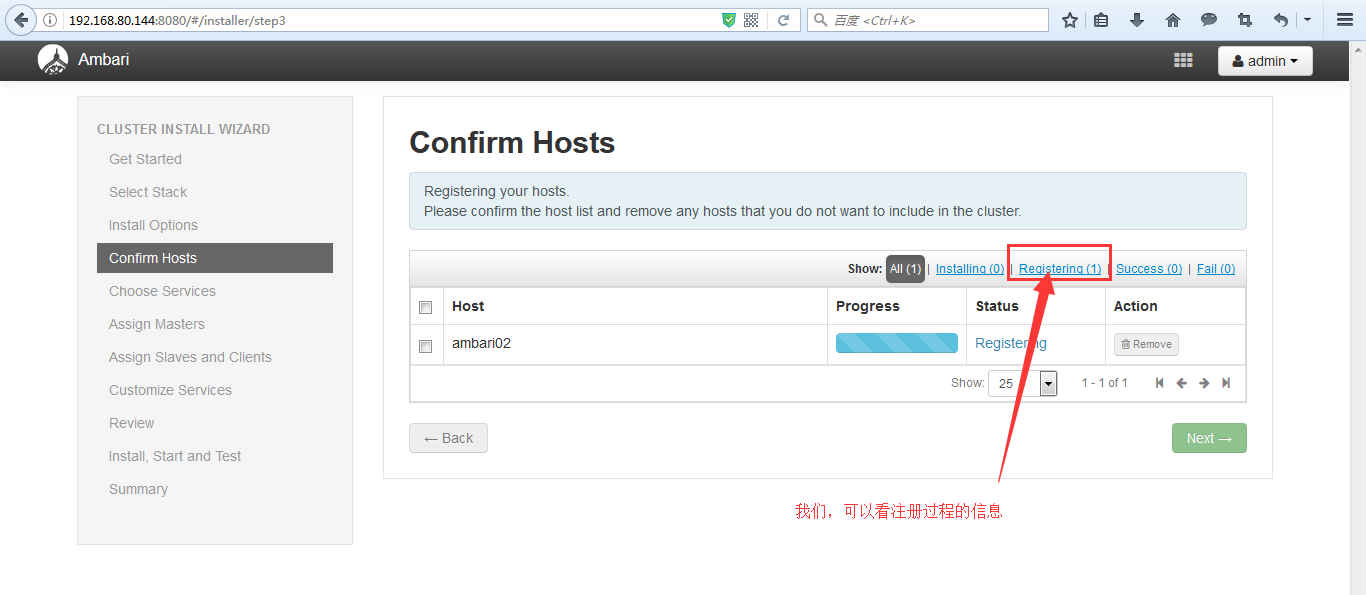

Then register again.

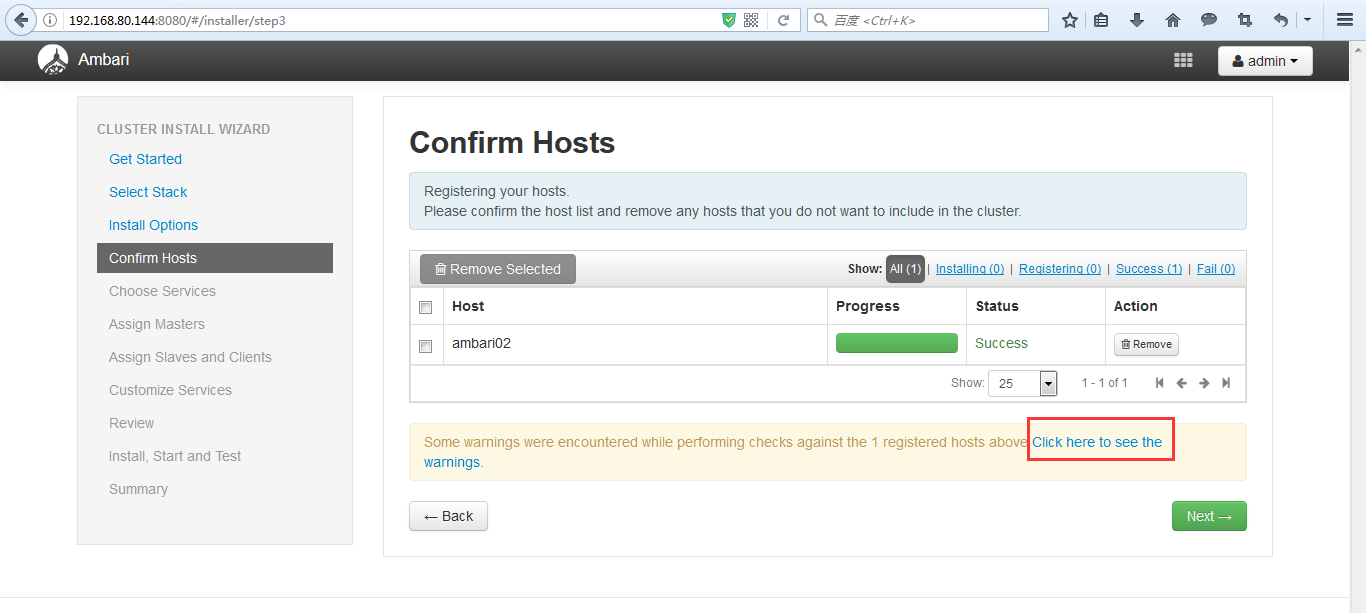

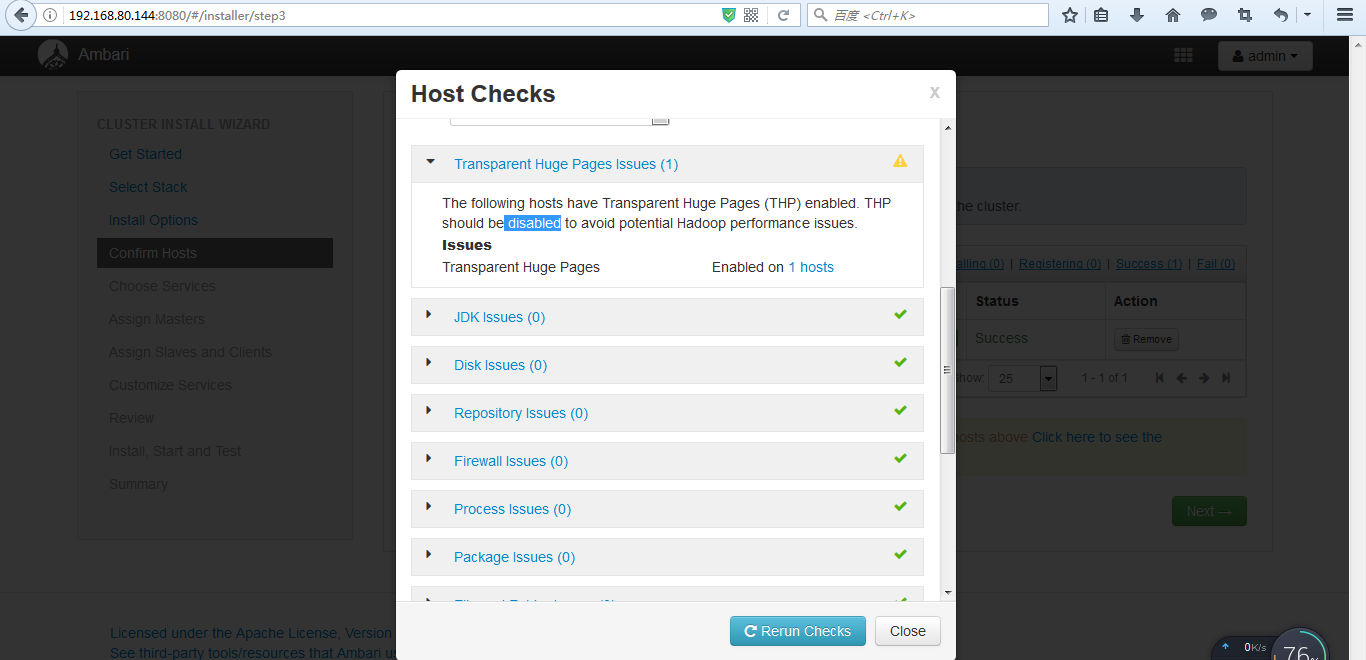

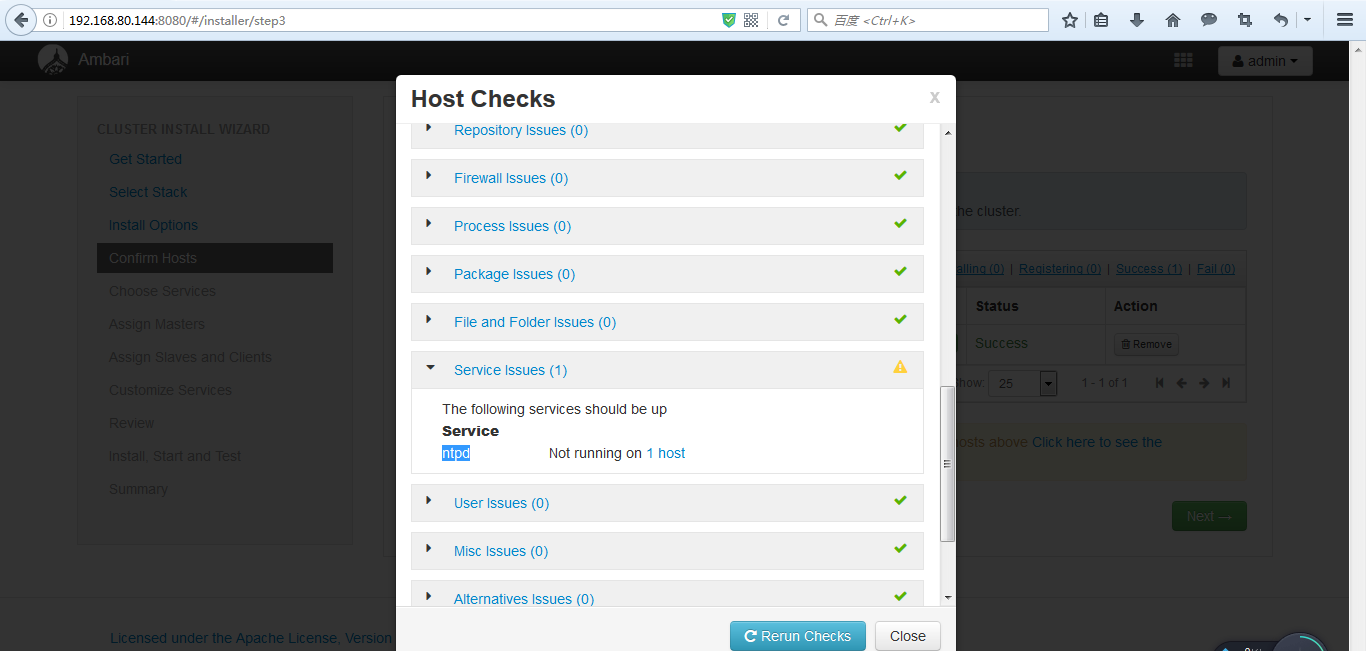

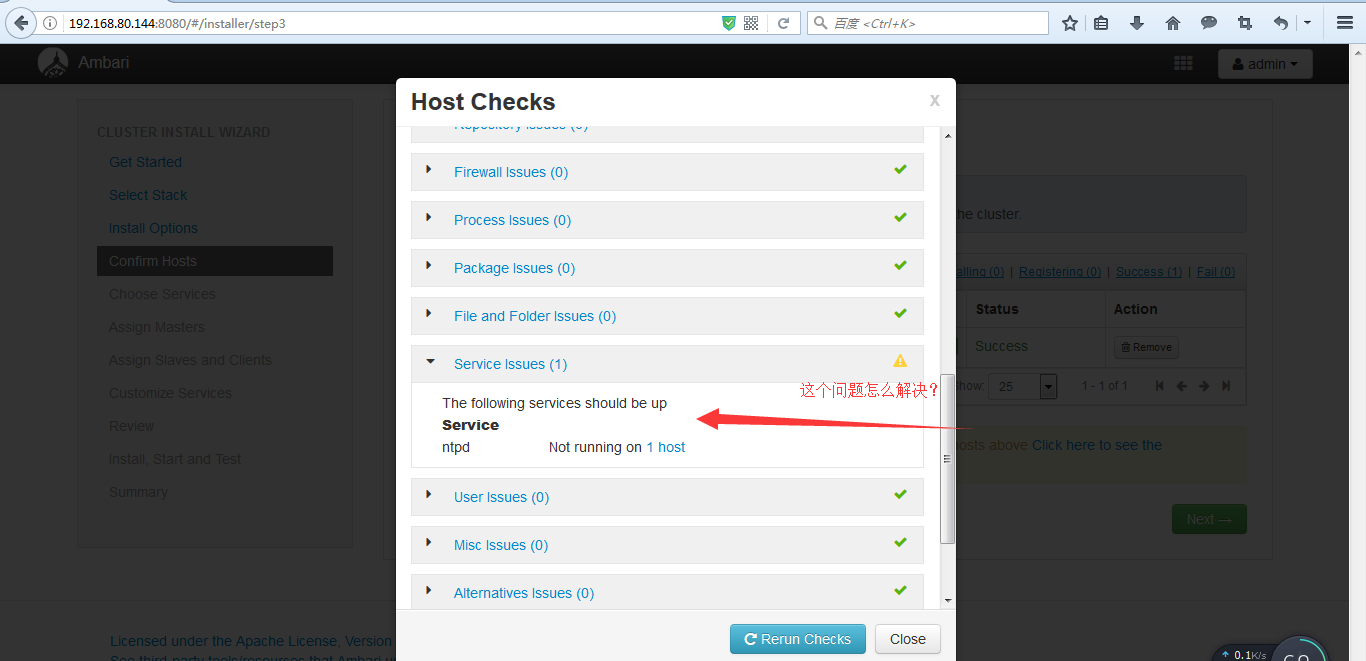

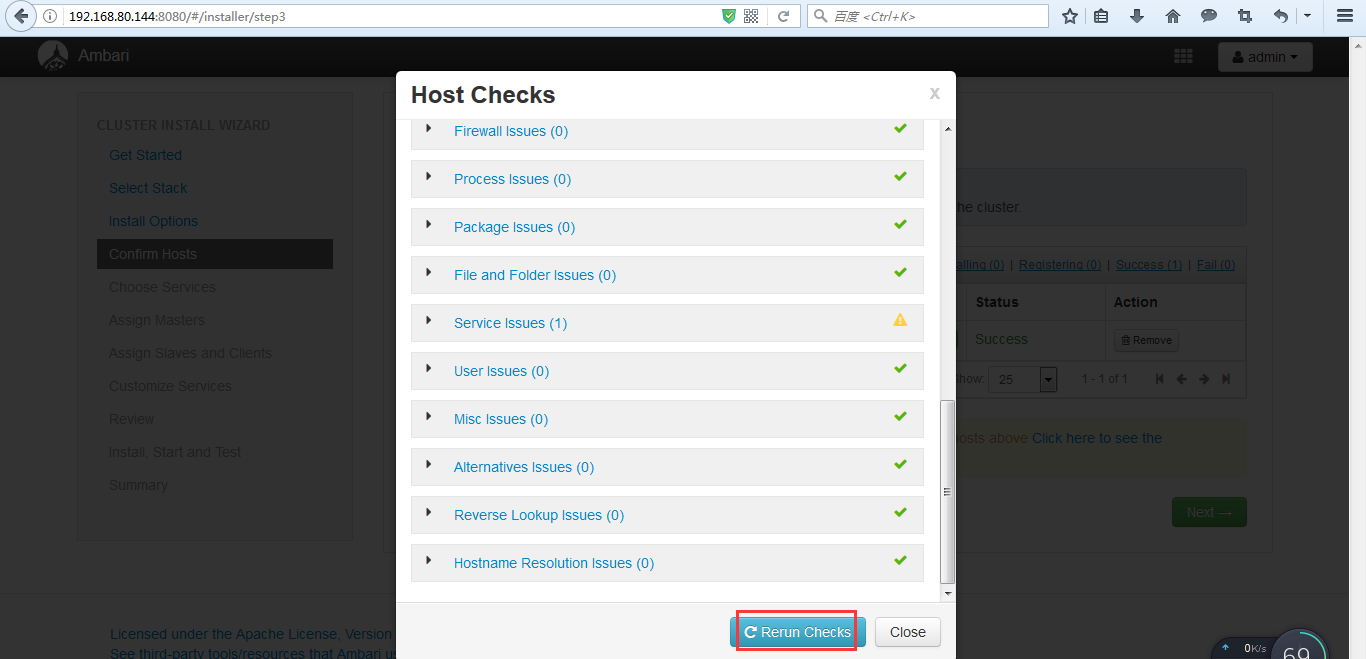

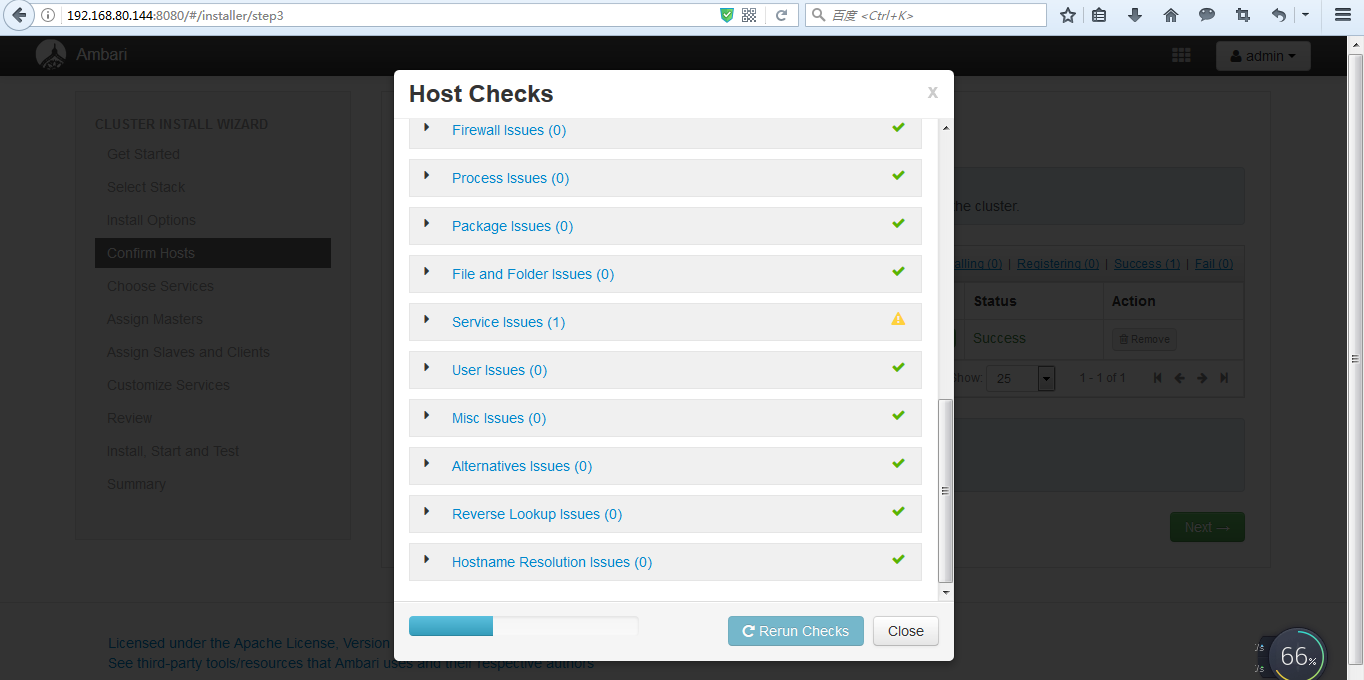

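7) After registration succeeds, check the warnings as well. All warnings must be cleared before deploying the Hadoop components.

As the warnings show, the following fixes are needed.

8) For example, the clock synchronization problem can be fixed as follows: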

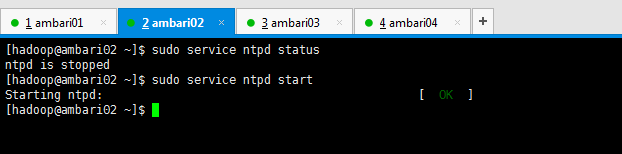

[hadoop@ambari02 ~]$ sudo service ntpd status

ntpd is stopped

[hadoop@ambari02 ~]$ sudo service ntpd start

Starting ntpd: [ OK ]

[hadoop@ambari02 ~]$
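Starting ntpd this way only lasts until the next reboot. On CentOS 6 (SysV init) the service can also be enabled at boot with `chkconfig`. A small sketch, with a dry-run switch so the commands can be previewed first; the `run` and `enable_ntpd` helpers and the `DRY_RUN` variable are made up for this example:

```shell
#!/usr/bin/env bash
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

enable_ntpd() {
  run sudo service ntpd start     # start the daemon now
  run sudo chkconfig ntpd on      # start it on every boot (SysV init, CentOS 6)
}

# Example: preview the commands without touching the system
#   DRY_RUN=1 enable_ntpd
```

Run it on every node that shows the clock synchronization warning.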

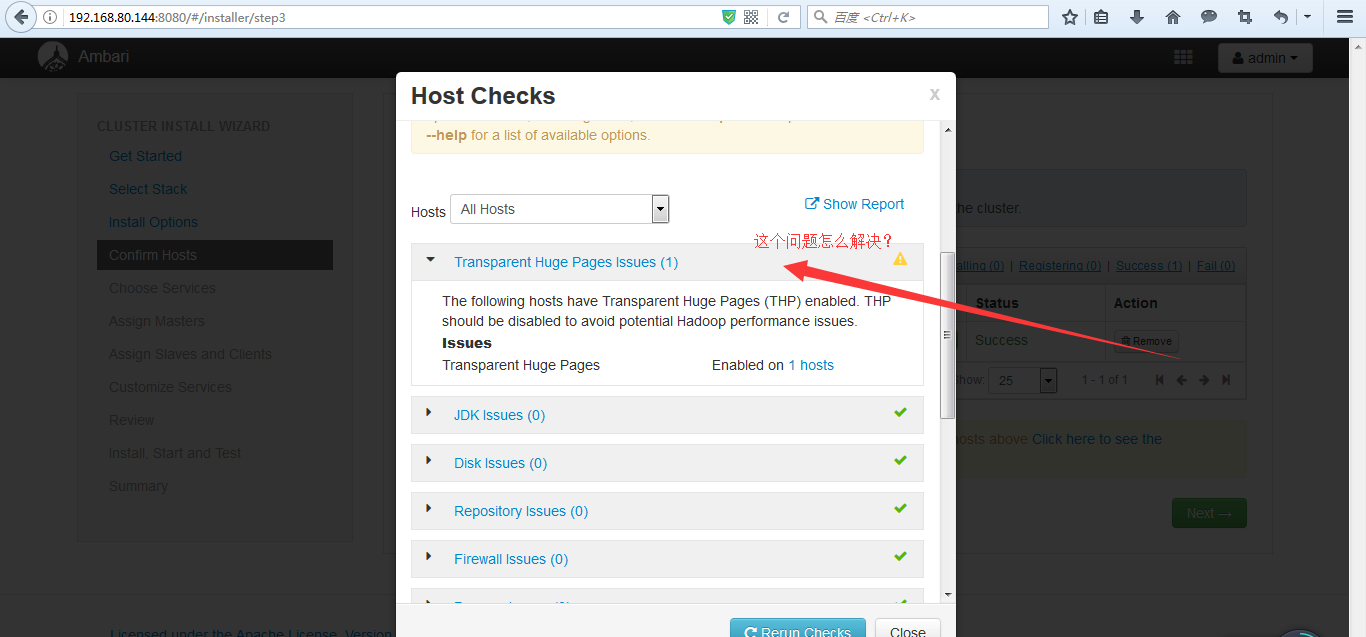

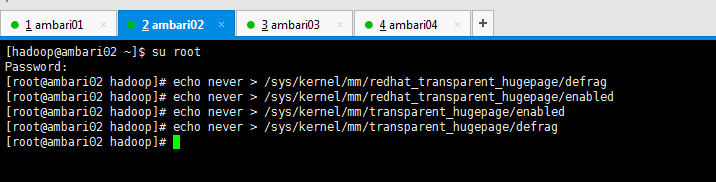

9) How to fix the following warning: The following hosts have Transparent Huge Pages (THP) enabled. THP should be disabled to avoid potential Hadoop performance issues.

To disable Transparent Huge Pages, run the following as the root user:

echo never > /sys/kernel/mm/redhat_transparent_hugepage/defrag

echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled

echo never > /sys/kernel/mm/transparent_hugepage/enabled

echo never > /sys/kernel/mm/transparent_hugepage/defrag

[hadoop@ambari02 ~]$ su root

Password:

[root@ambari02 hadoop]# echo never > /sys/kernel/mm/redhat_transparent_hugepage/defrag

[root@ambari02 hadoop]# echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled

[root@ambari02 hadoop]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@ambari02 hadoop]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@ambari02 hadoop]#
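Values written under /sys take effect immediately but do not survive a reboot, so the same echo lines usually also go into a boot script such as /etc/rc.local. To confirm the current setting, read the file back: the kernel lists all options and puts the active one in brackets. The helper below (`thp_state`, a name invented here) extracts that bracketed value:

```shell
#!/usr/bin/env bash
# Print the active THP setting from a sysfs file whose content looks like
#   always madvise [never]
thp_state() {
  sed -n 's/.*\[\(.*\)\].*/\1/p' "$1"
}

# Example (whichever of these paths exists on your kernel):
#   thp_state /sys/kernel/mm/redhat_transparent_hugepage/enabled
#   thp_state /sys/kernel/mm/transparent_hugepage/enabled
```

A result of `never` for both `enabled` and `defrag` means the warning should disappear on the next host check.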

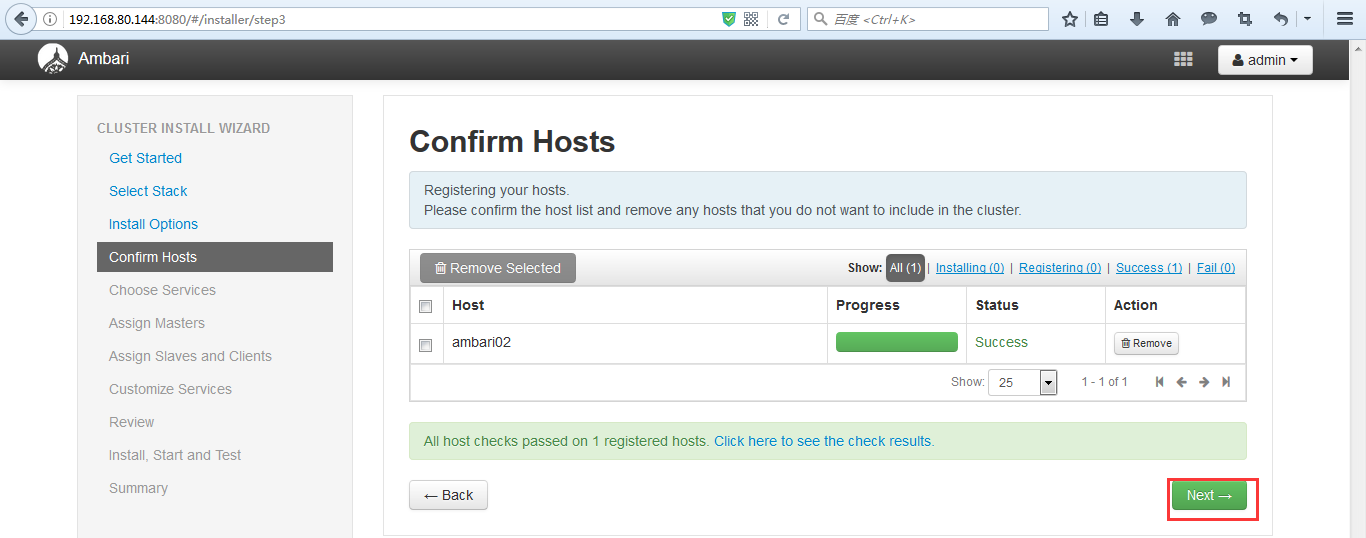

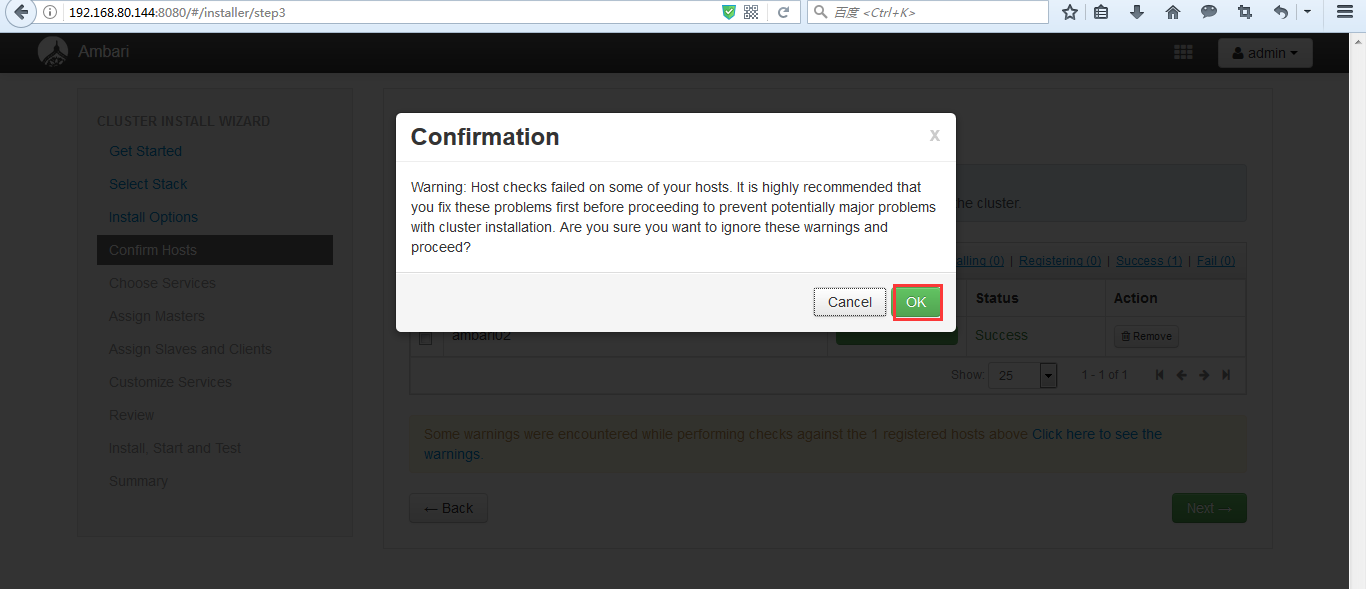

10) Then rerun the checks. Once no warnings remain, click Next.

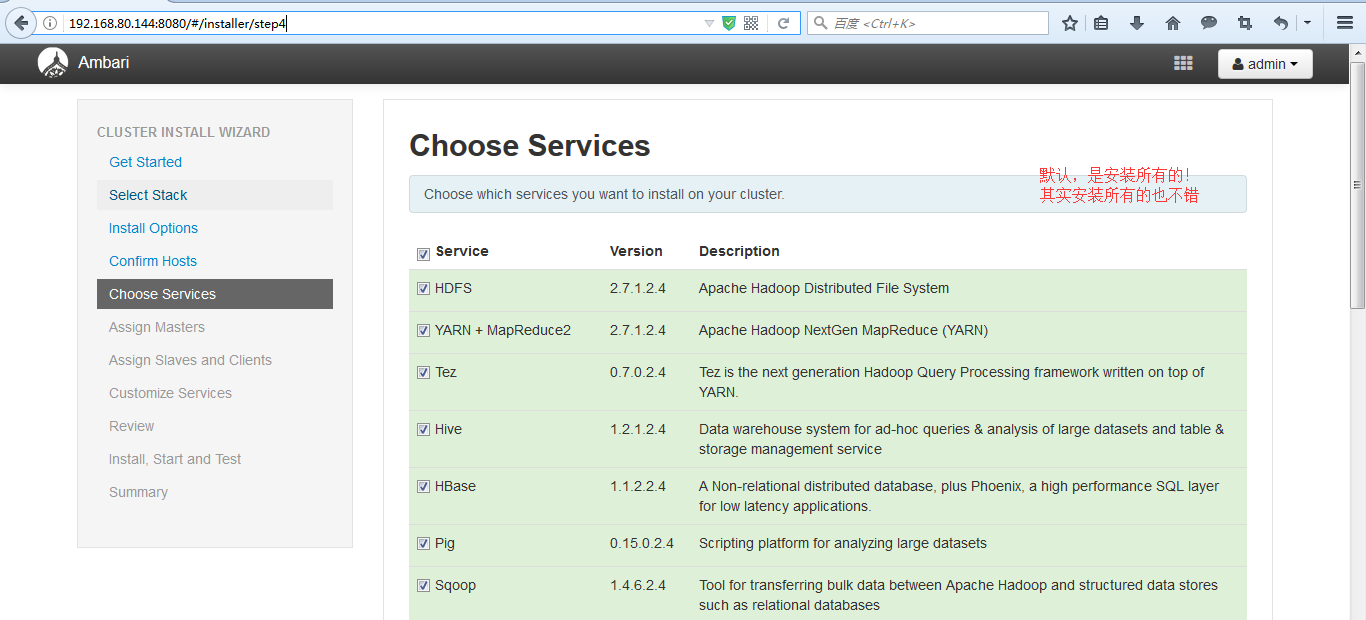

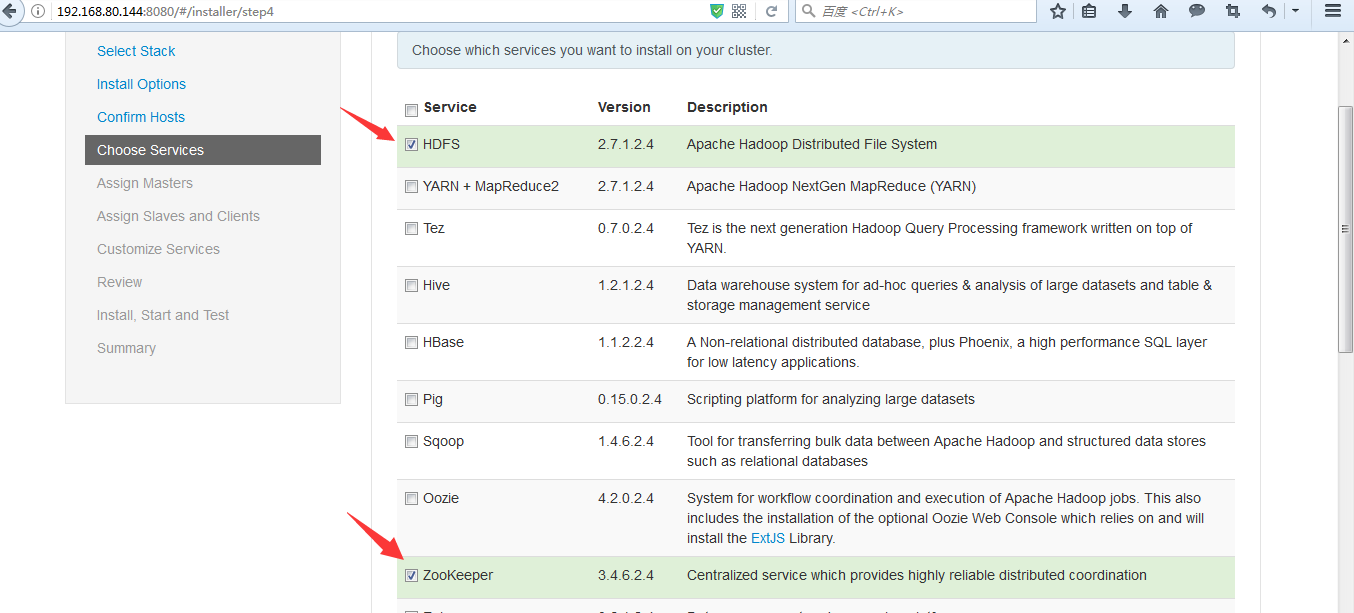

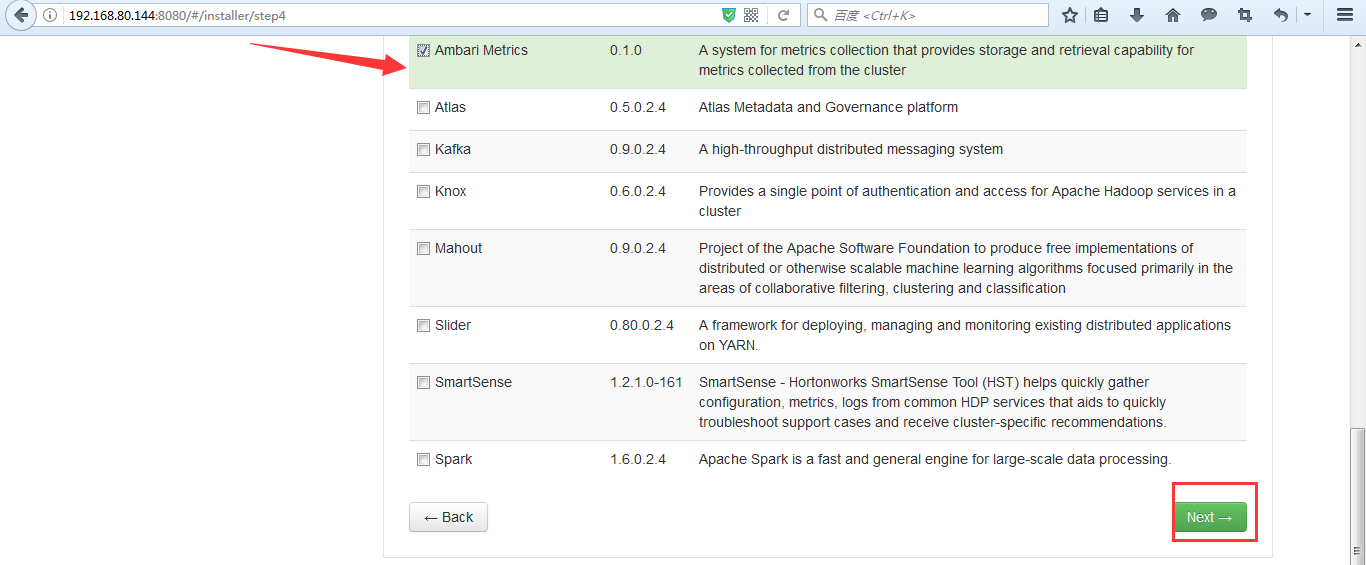

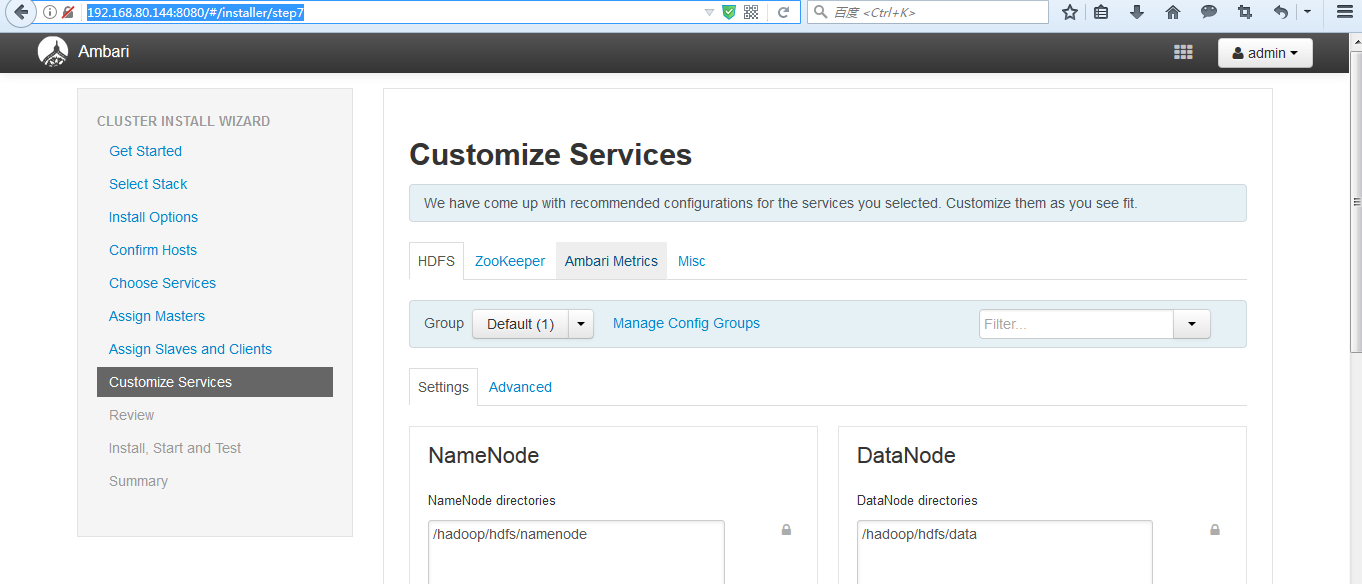

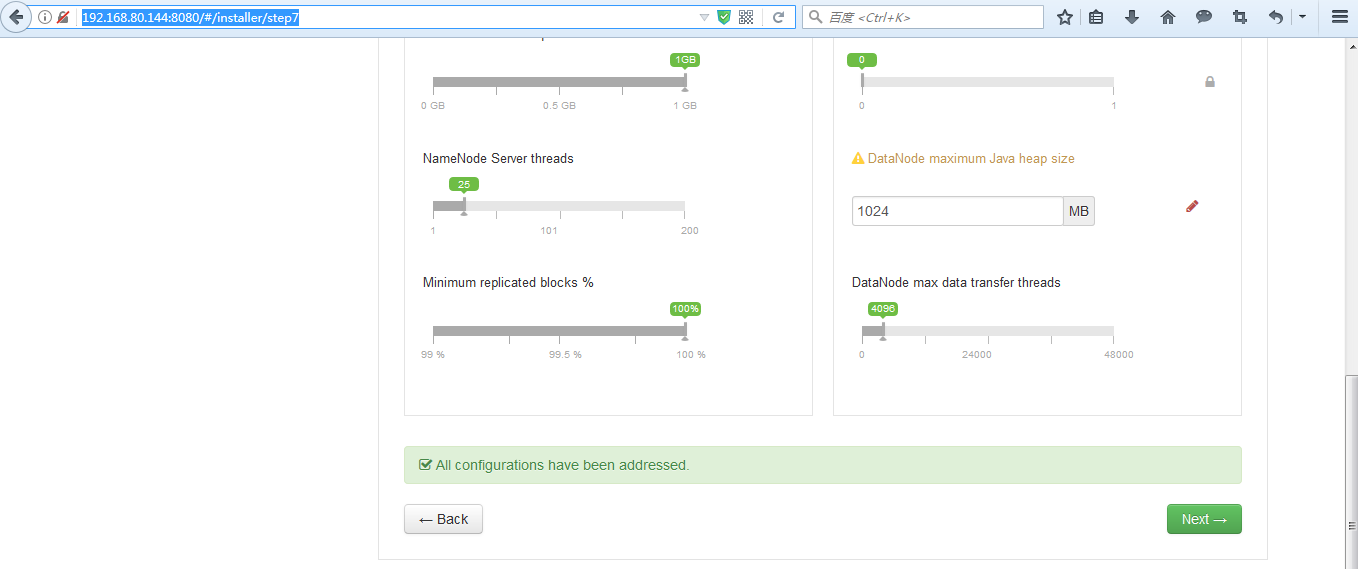

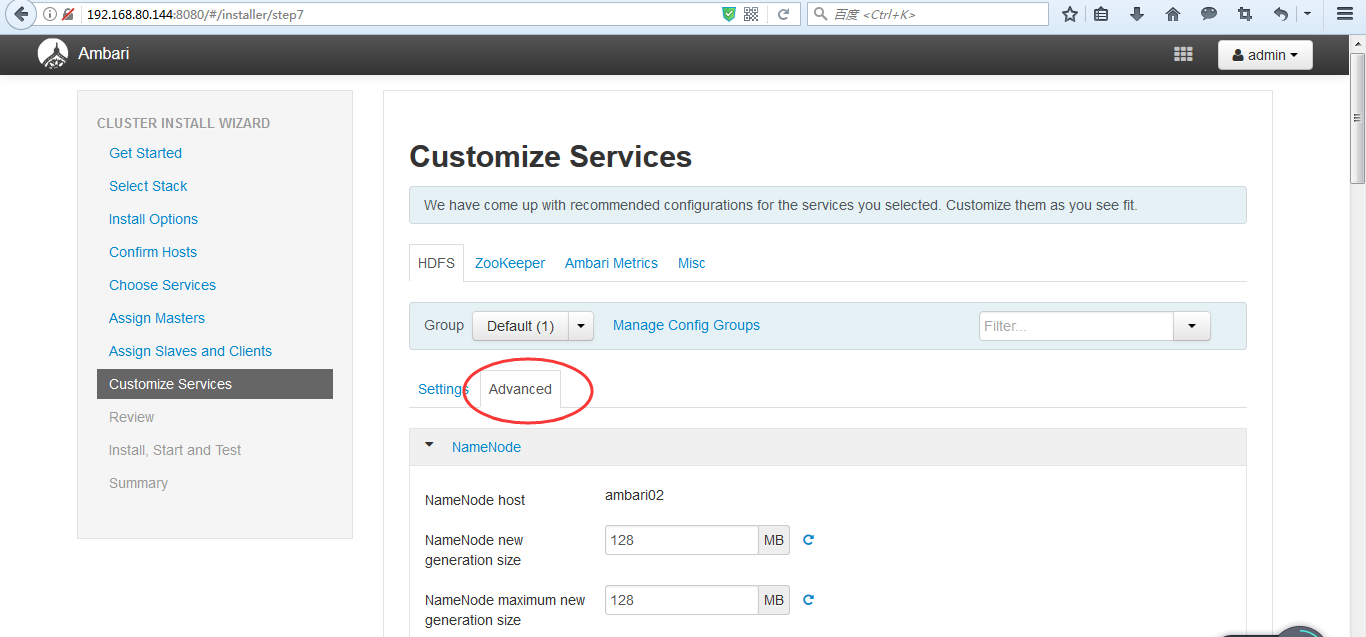

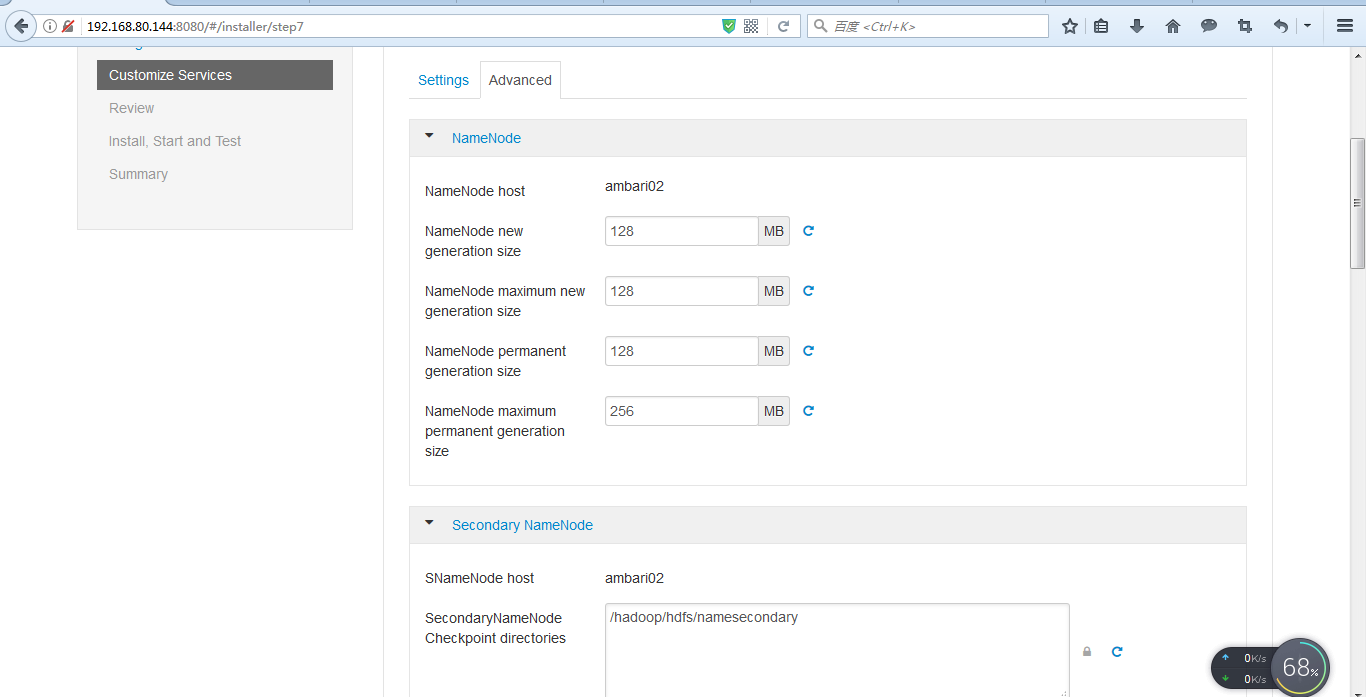

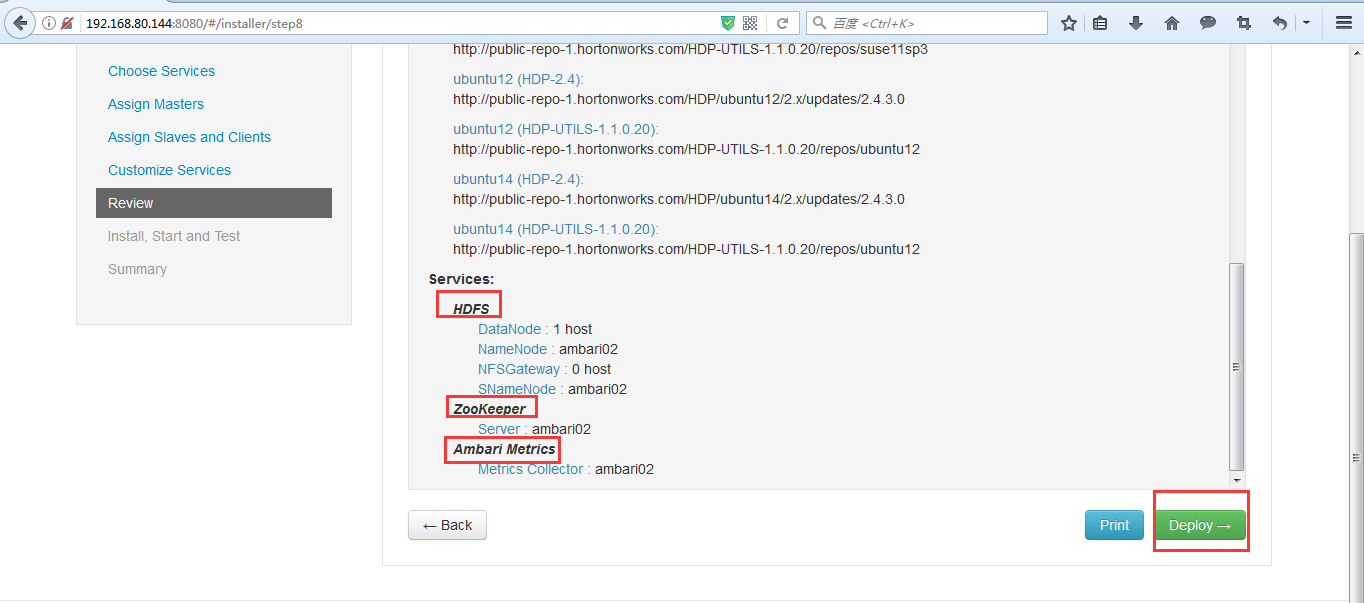

(2) Deploy HDFS

1) Select the components to install, then click Next.

http://192.168.80.144:8080/#/installer/step4

To demonstrate later how to add a new service, I select only HDFS + ZooKeeper + Ambari Metrics here.

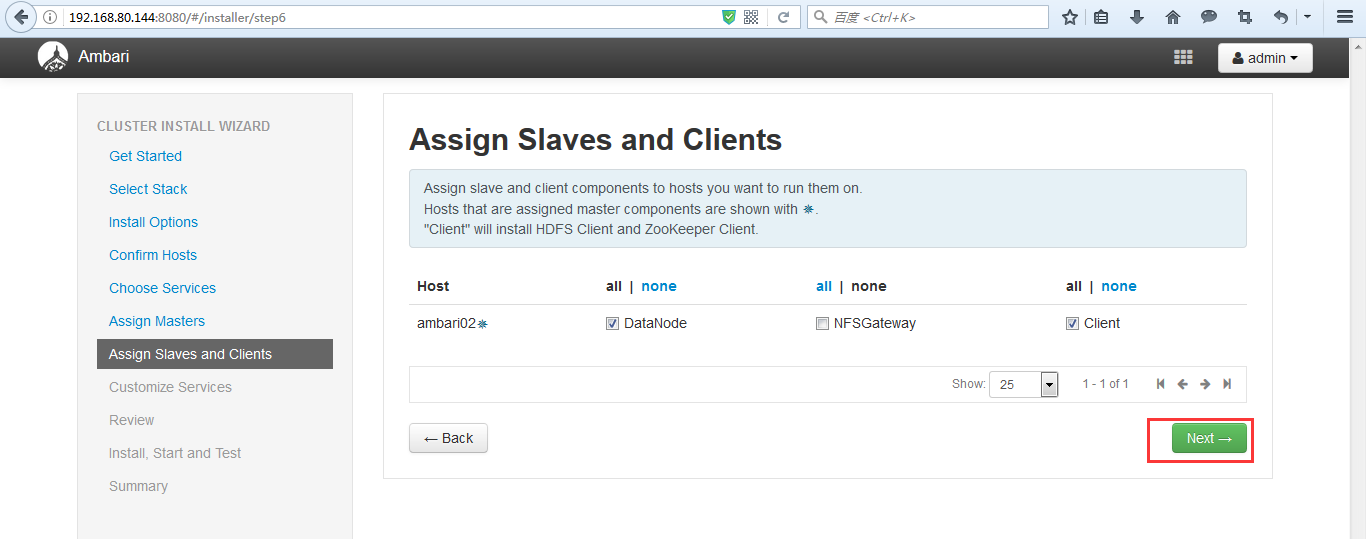

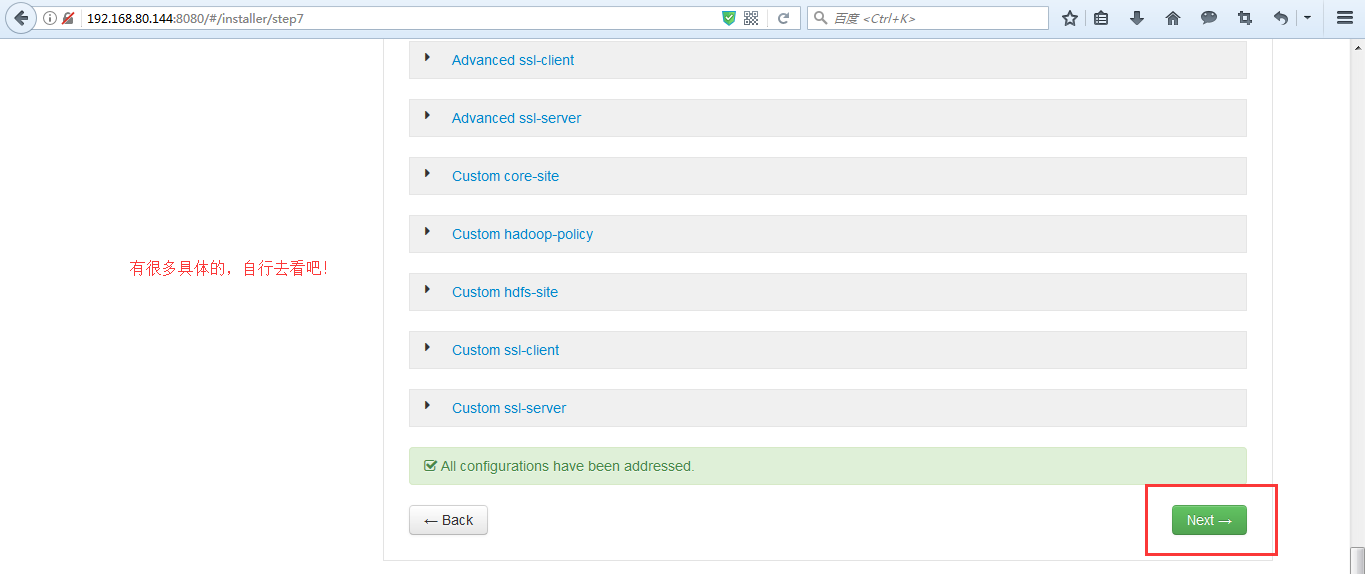

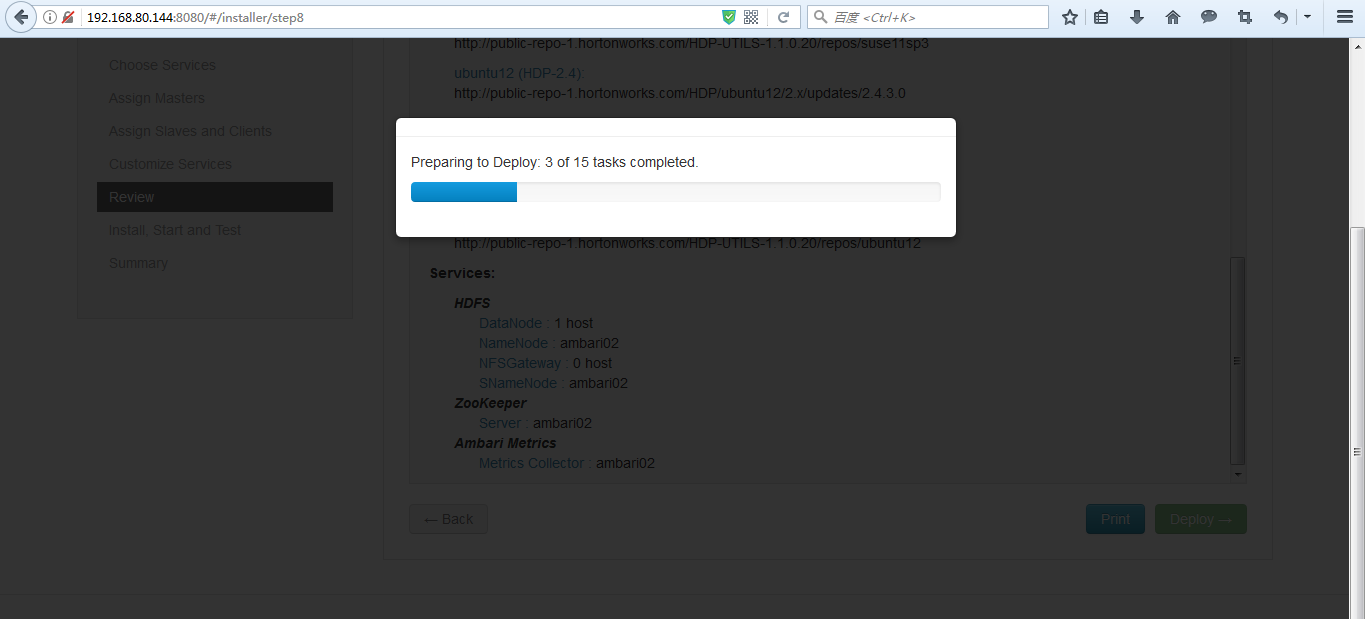

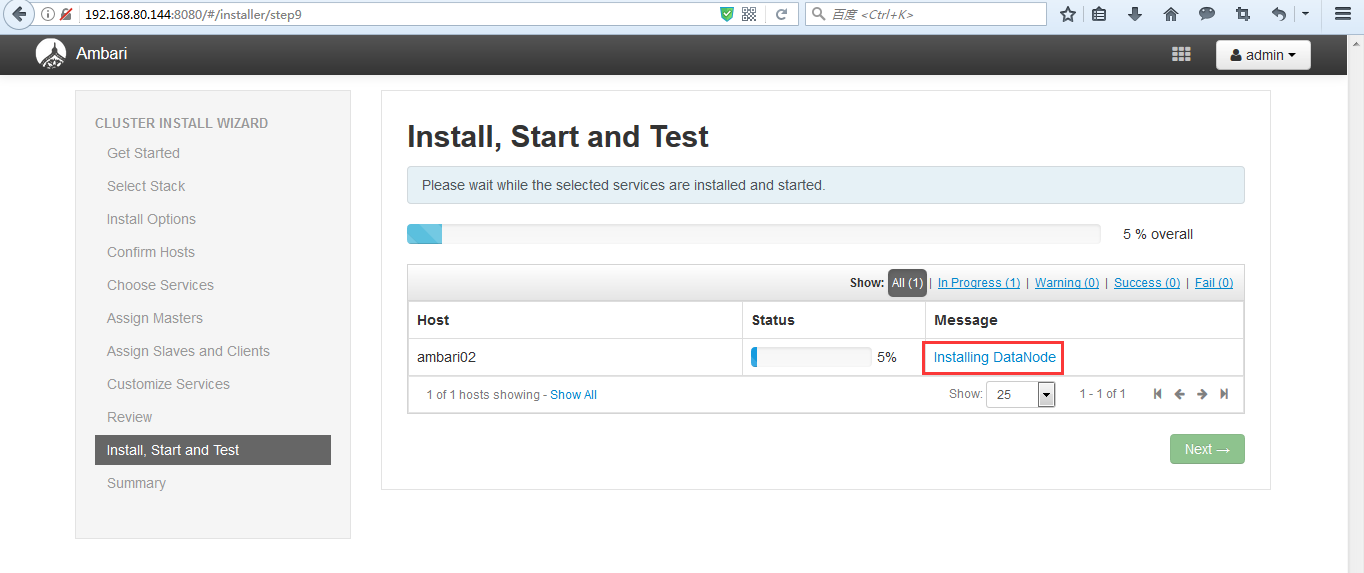

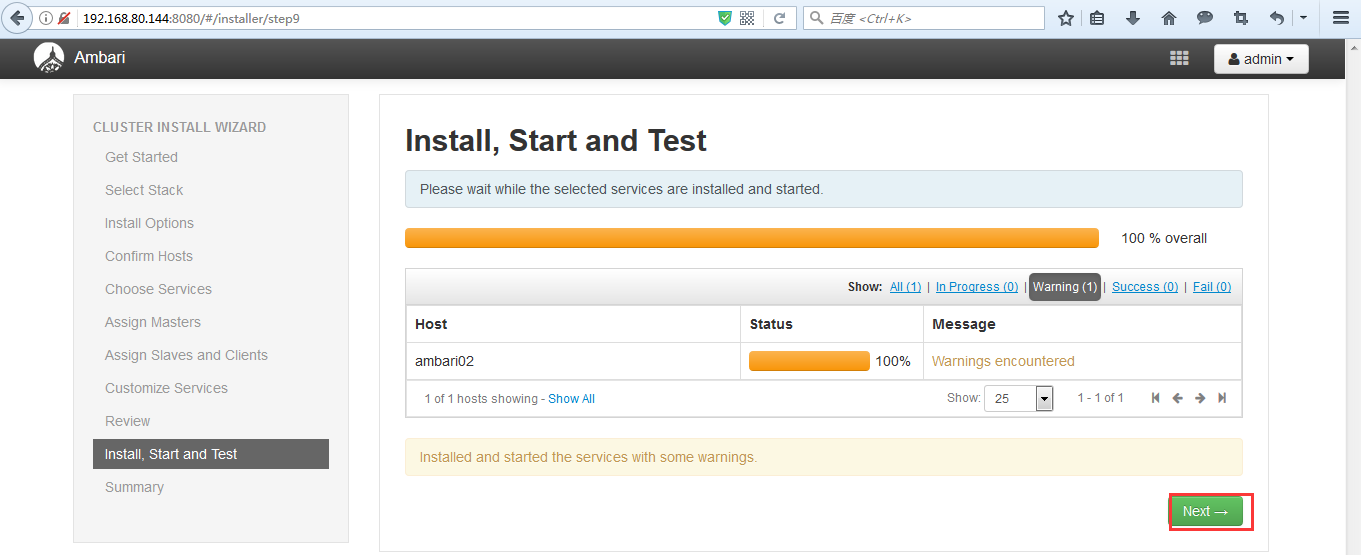

2) If nothing else needs changing, keep clicking Next, and Ambari will start the automatic installation and deployment.

http://192.168.80.144:8080/#/installer/step6

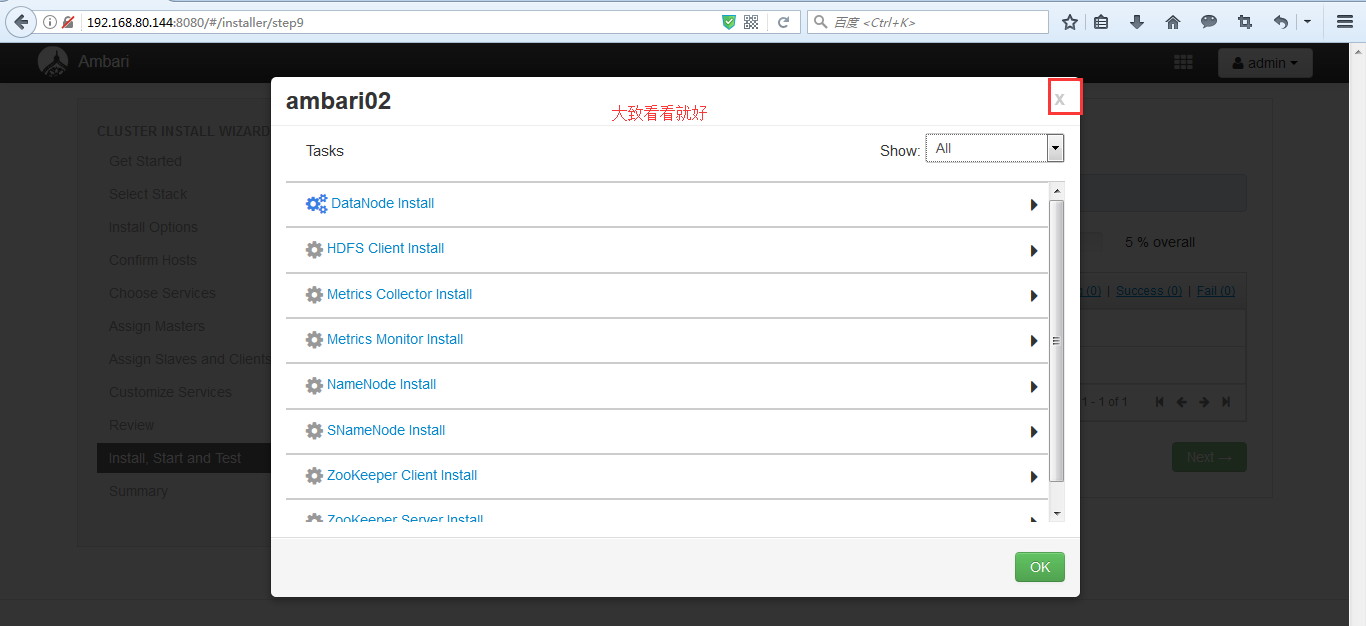

Let's look through the information along the way.

Of course, anything that involves advanced tuning or other customization can be changed later, after the cluster has been set up.

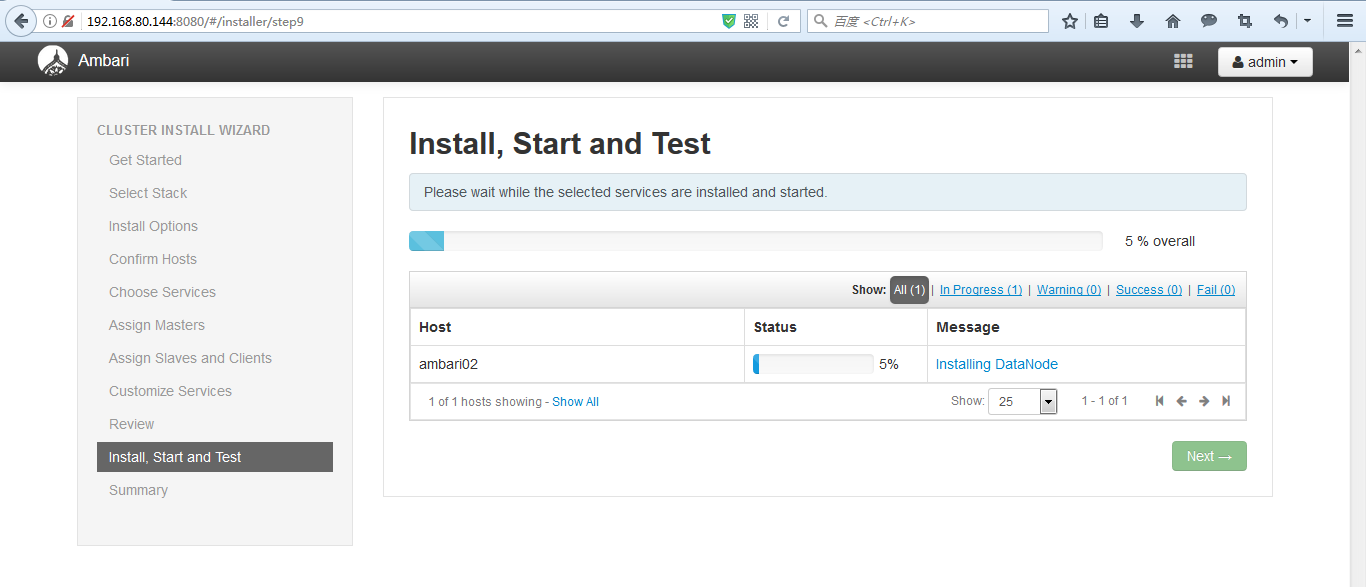

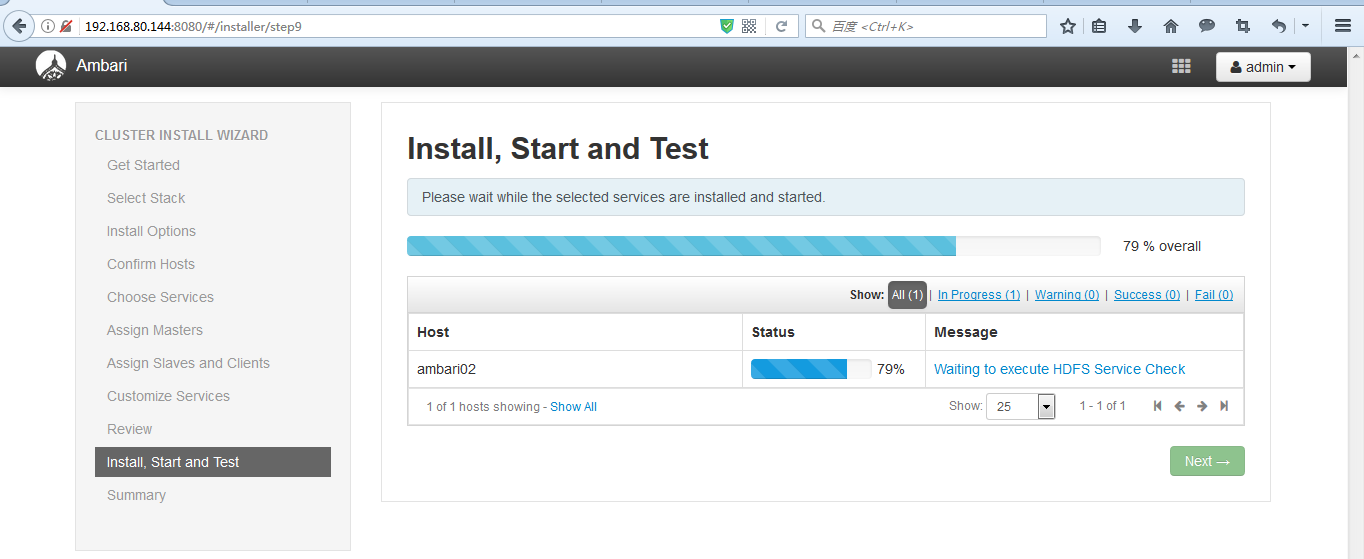

Wait a while.

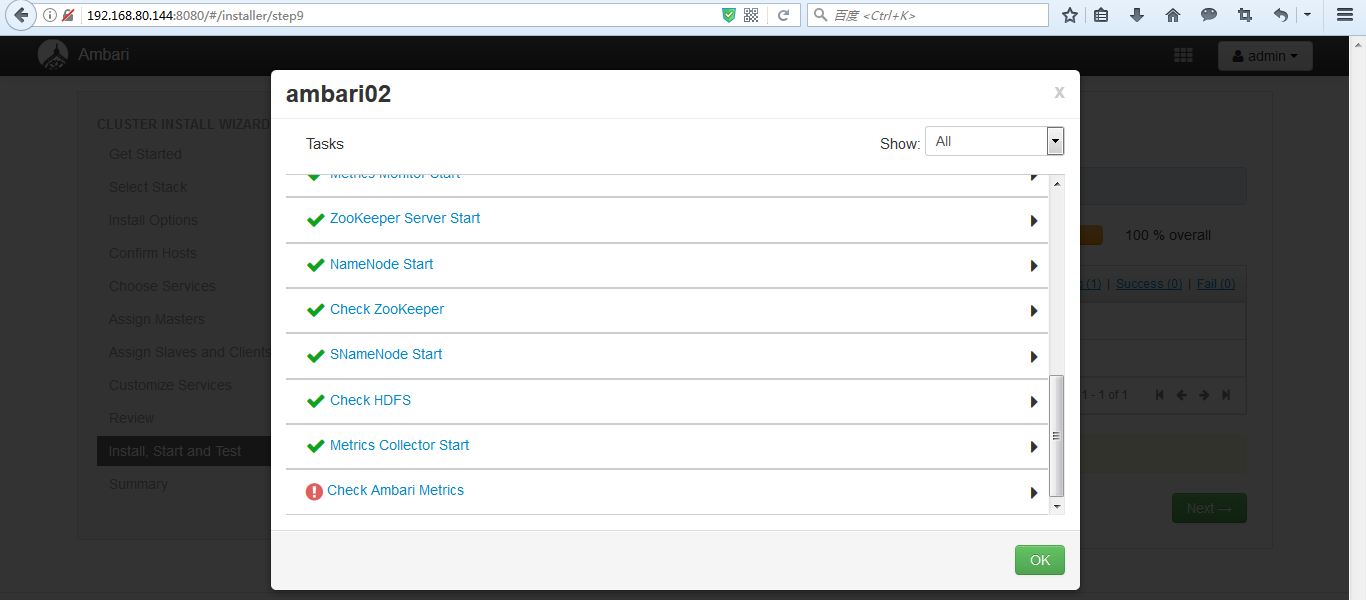

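3) Some problems may come up along the way. Just deal with each one specifically, for example the problem below.

Here the problem is detected automatically. We click Next and handle it manually afterwards, on the ambari02 machine.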

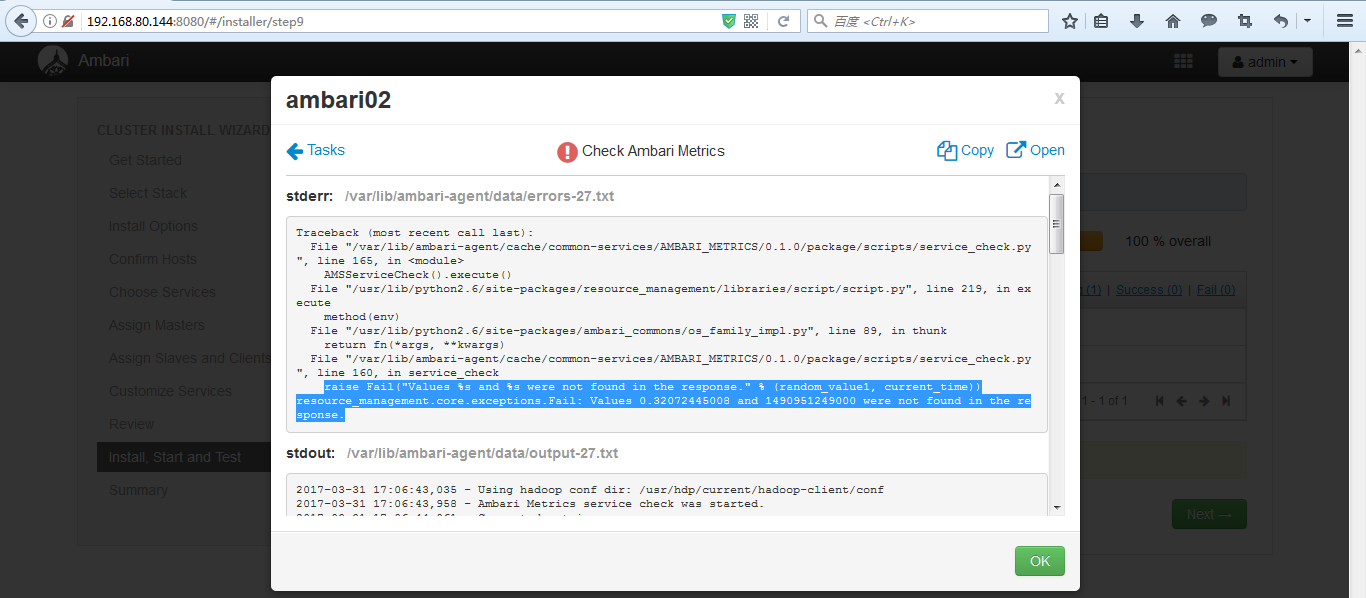

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/AMBARI_METRICS/0.1.0/package/scripts/service_check.py", line 165, in <module>

AMSServiceCheck().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 219, in execute

method(env)

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/AMBARI_METRICS/0.1.0/package/scripts/service_check.py", line 160, in service_check

raise Fail("Values %s and %s were not found in the response." % (random_value1, current_time))

resource_management.core.exceptions.Fail: Values 0.32072445008 and 1490951249000 were not found in the response.

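In fact, the errors at this step differ from one installation to another. Search online for the specific error that is reported.

See also the post "Collected Ambari and Hadoop problems encountered while installing Ambari".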

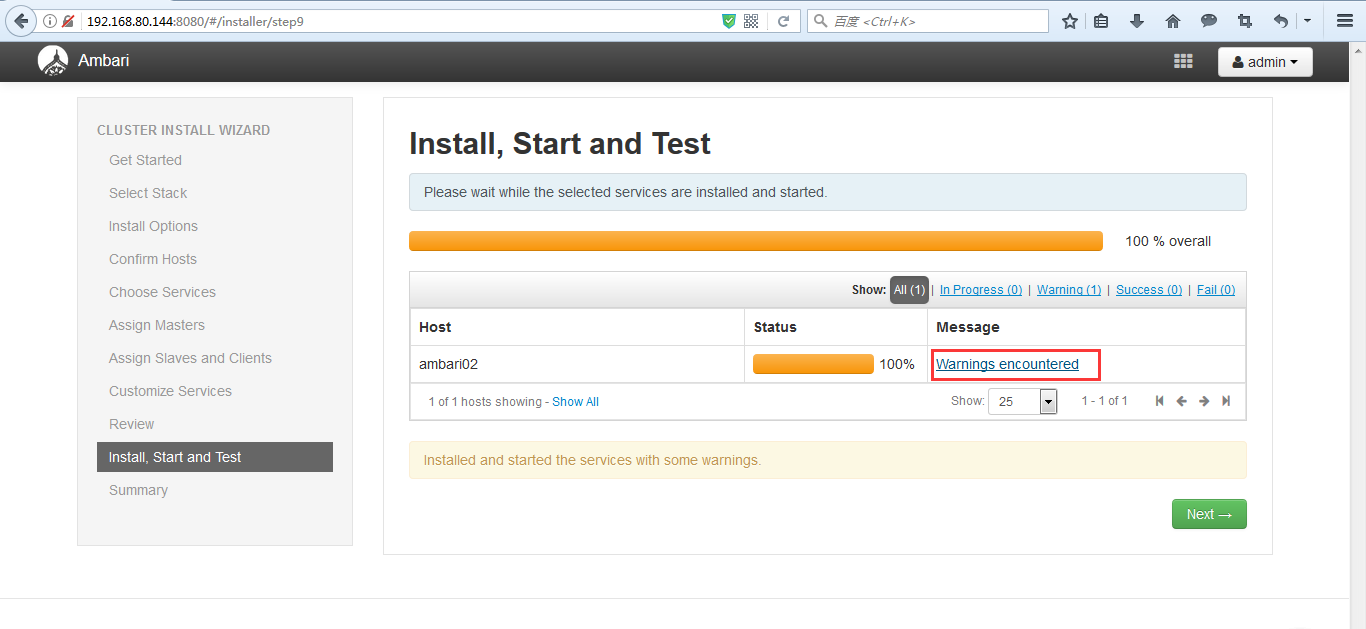

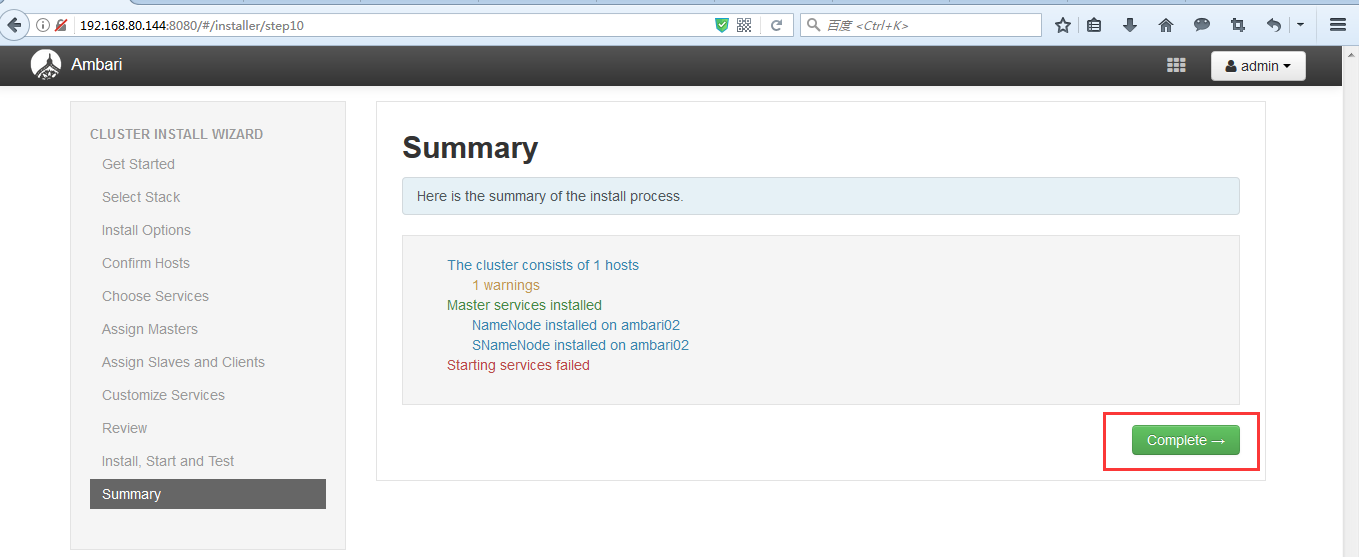

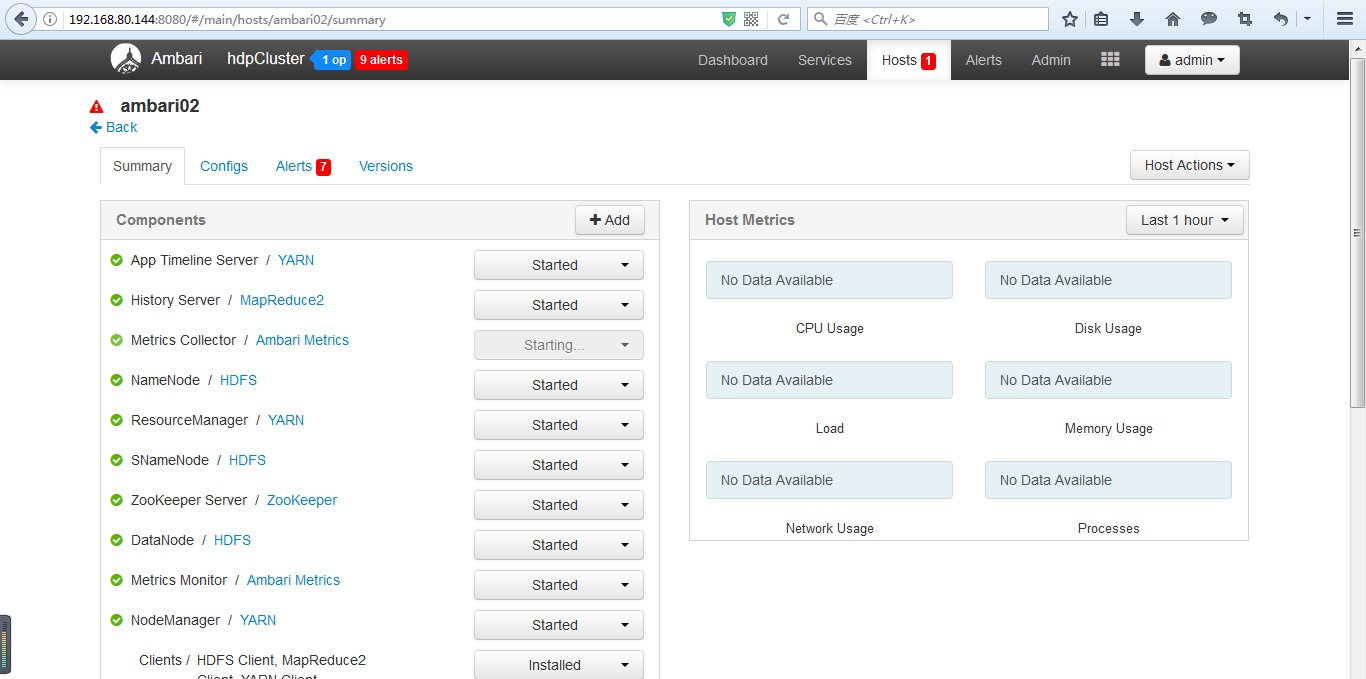

OK, at this point all the components we just installed start successfully.

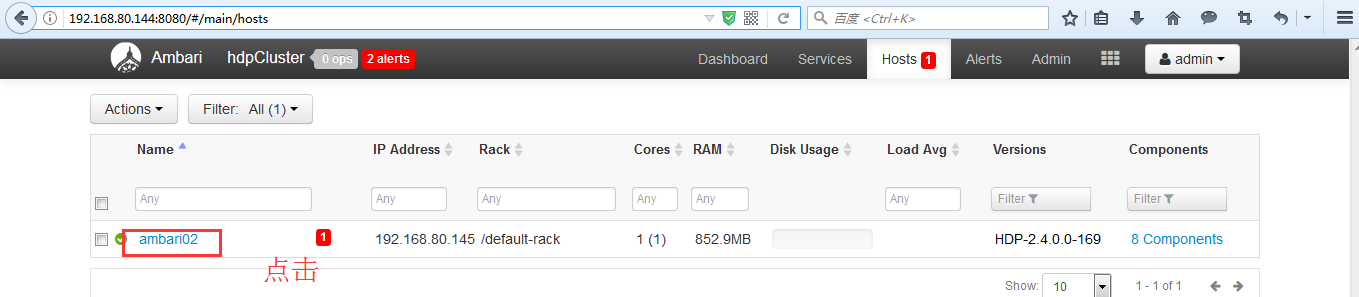

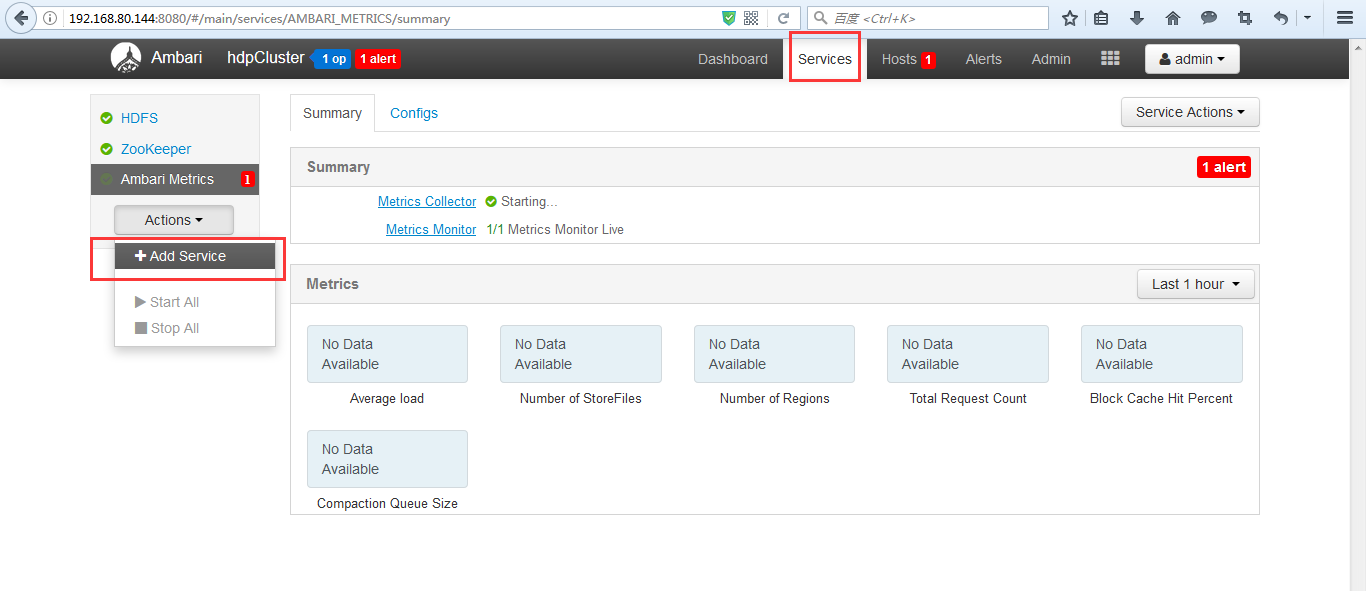

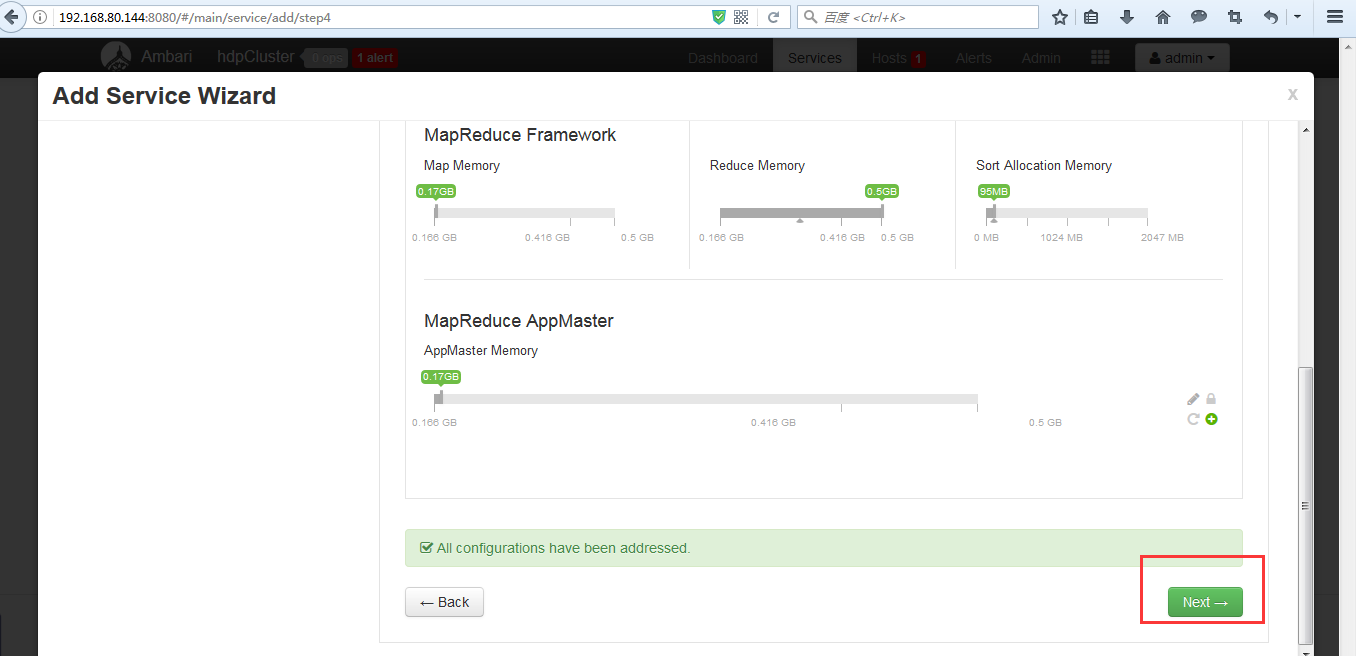

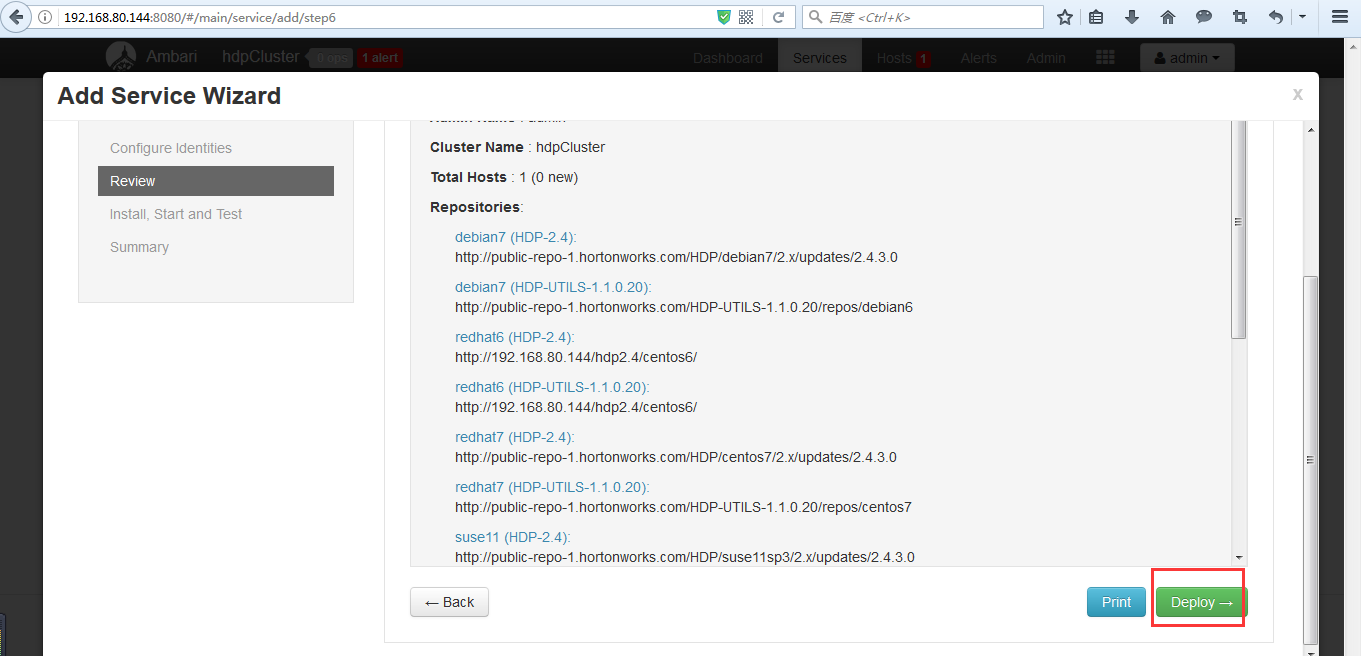

(3) Deploy MapReduce and YARN

1) Next, let's demonstrate how to add a new service.

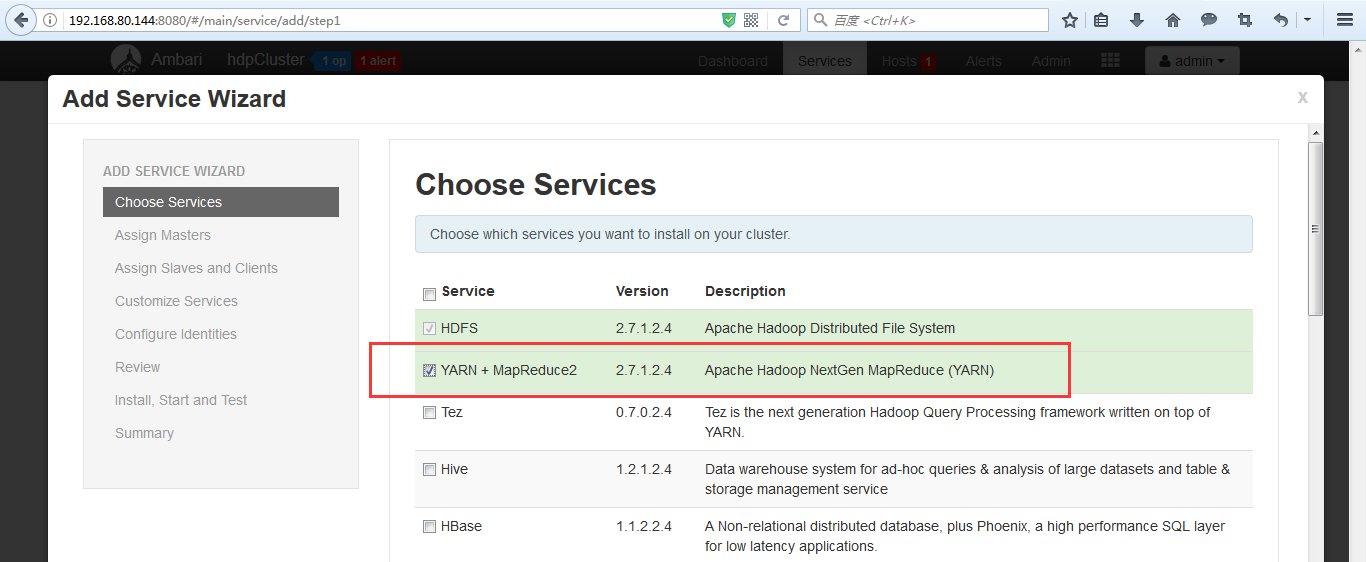

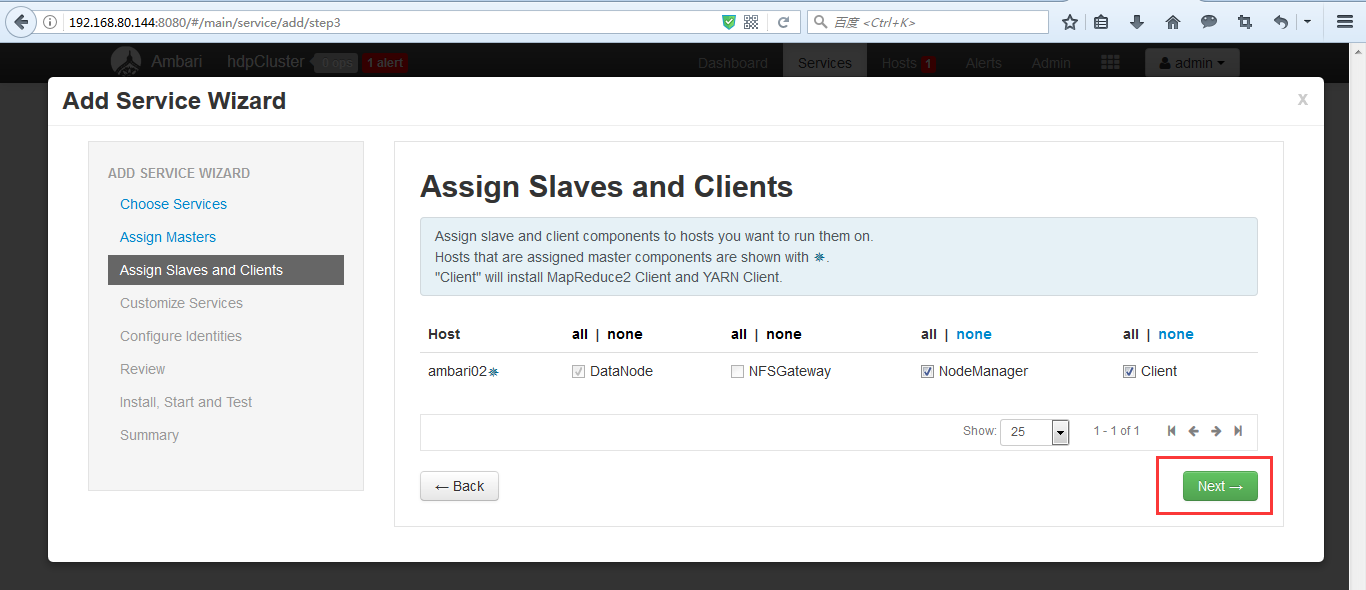

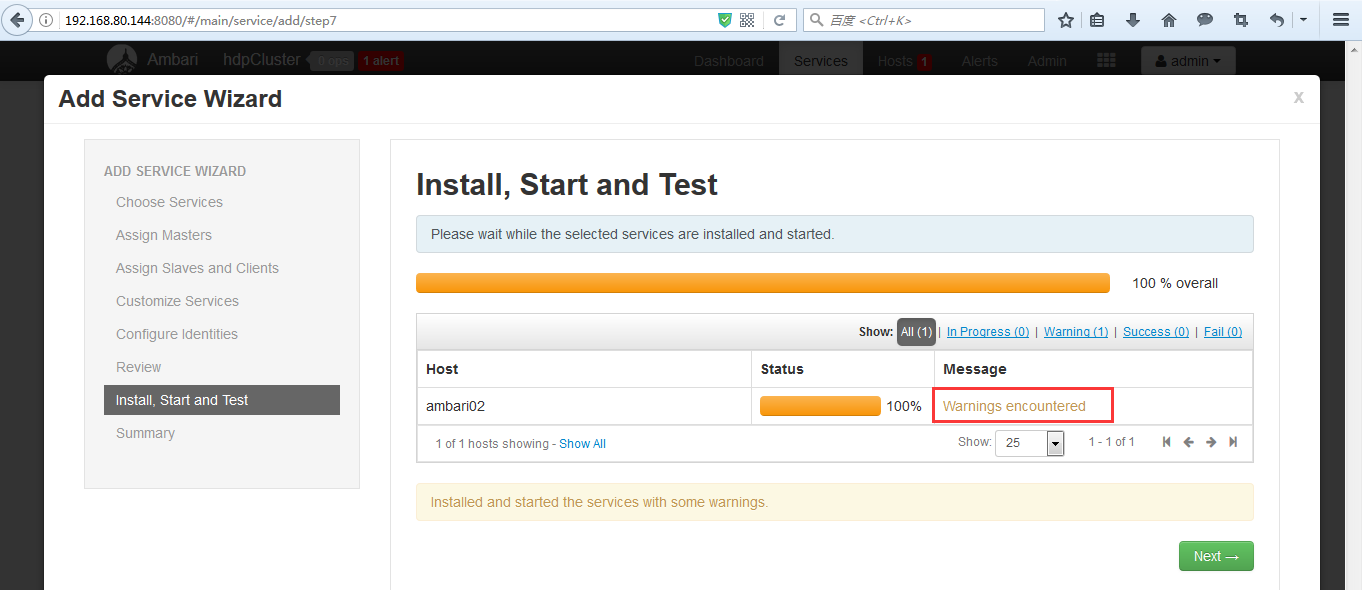

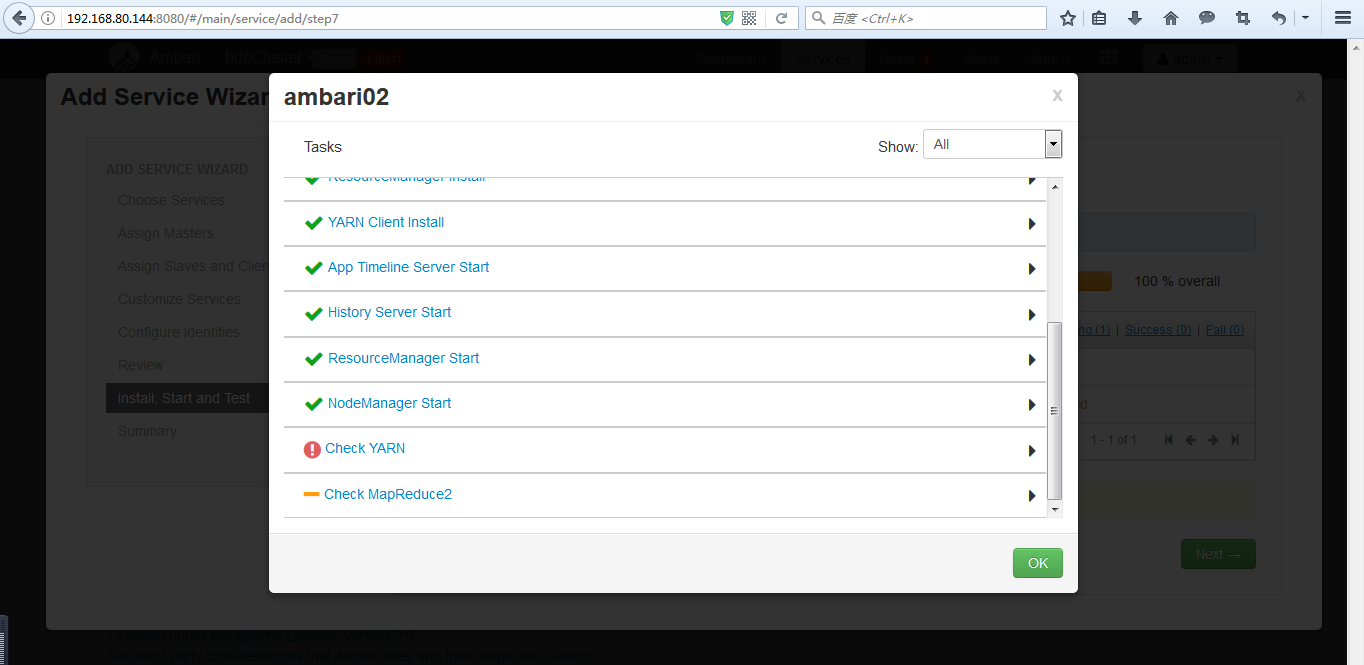

2) Select the services to add and click Next as prompted. Ambari will install and start them automatically.

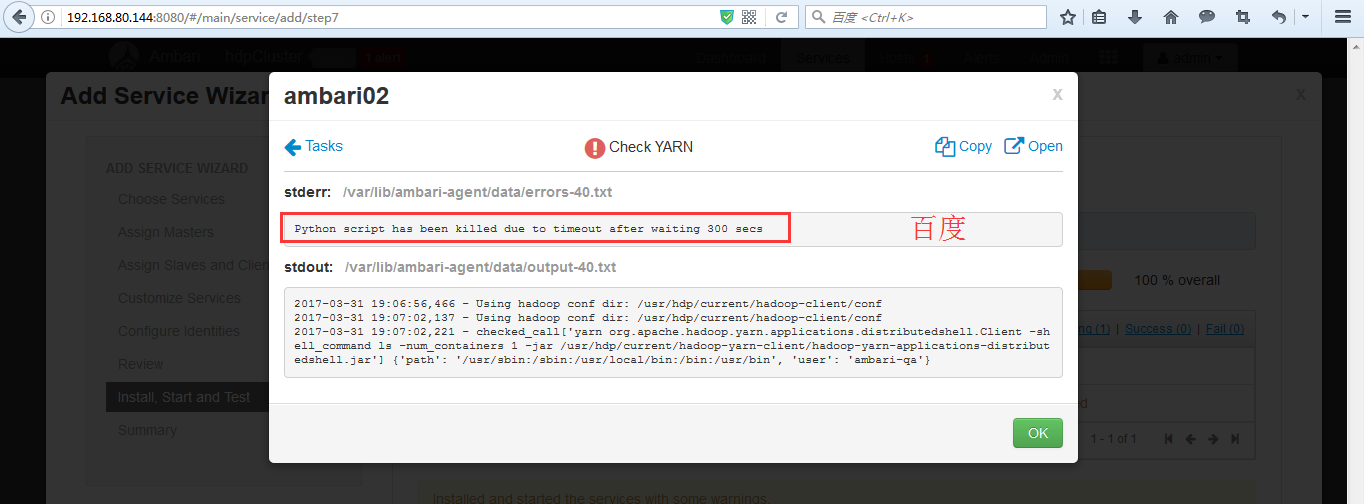

Python script has been killed due to timeout after waiting 300 secs

For details, see the post "Python script has been killed due to timeout after waiting 1800 secs".

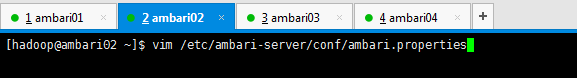

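vim /etc/ambari-server/conf/ambari.properties (this error means the installation timed out while ambari-server was connected to the ambari-agent over SSH)

Change agent.package.install.task.timeout=1800 to 9600 (the value can be adjusted further depending on your network).

In short, it comes down to your network speed.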
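The same change can be scripted instead of made by hand in vim. The sketch below is a generic key=value updater, not an Ambari tool; `set_property` is a hypothetical helper, and it assumes GNU `sed` (for `-i`) and a properties file with one `key=value` per line.

```shell
#!/usr/bin/env bash
# usage: set_property FILE KEY VALUE
# Replaces an existing KEY=... line, or appends one if the key is missing.
# Note: dots in KEY are treated as regex wildcards, which is harmless here.
set_property() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    echo "${key}=${value}" >> "$file"
  fi
}

# Example (as root on the Ambari server):
#   set_property /etc/ambari-server/conf/ambari.properties \
#       agent.package.install.task.timeout 9600
#   ambari-server restart    # the server reads ambari.properties at startup
```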

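Or:

The installation fails partway through and shows: Python script has been killed due to timeout after waiting 1800 secs

Solution:

Edit /etc/yum.conf with vim and set installonly_limit to 600. (Note that installonly_limit controls how many install-only packages, such as kernels, yum keeps. yum's network timeout is a separate option, timeout, so this change may not be what actually helped.)

Timeout failures still occurred afterwards, so I could only retry, and in the end it succeeded!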

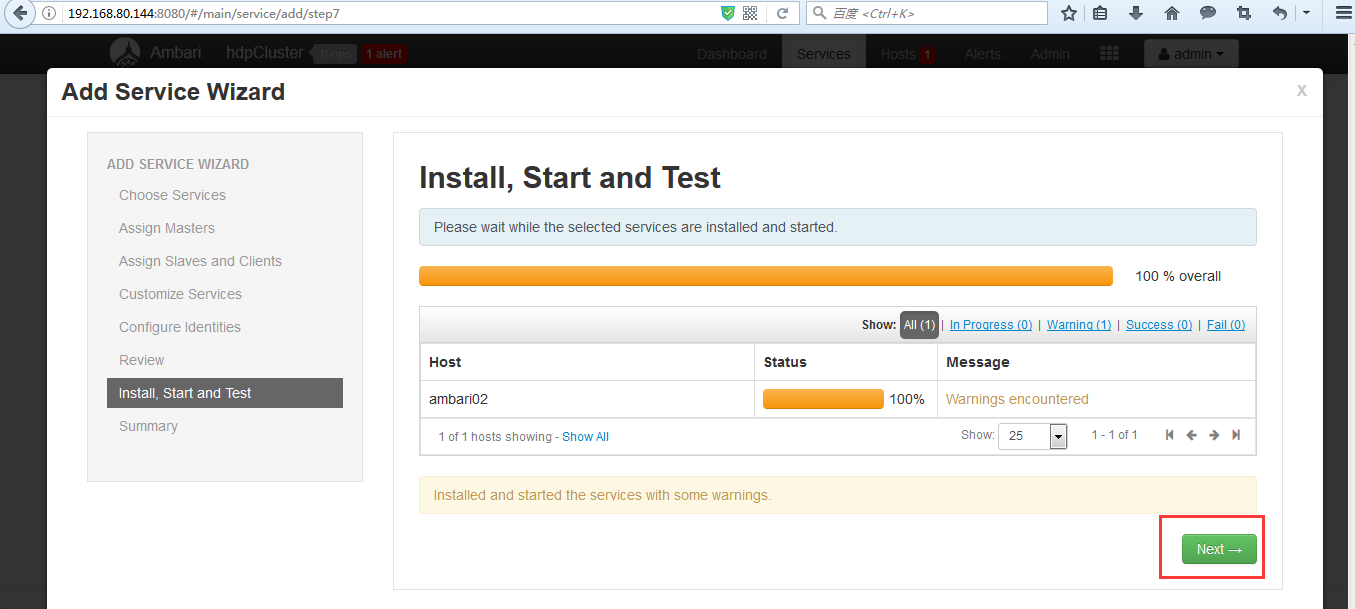

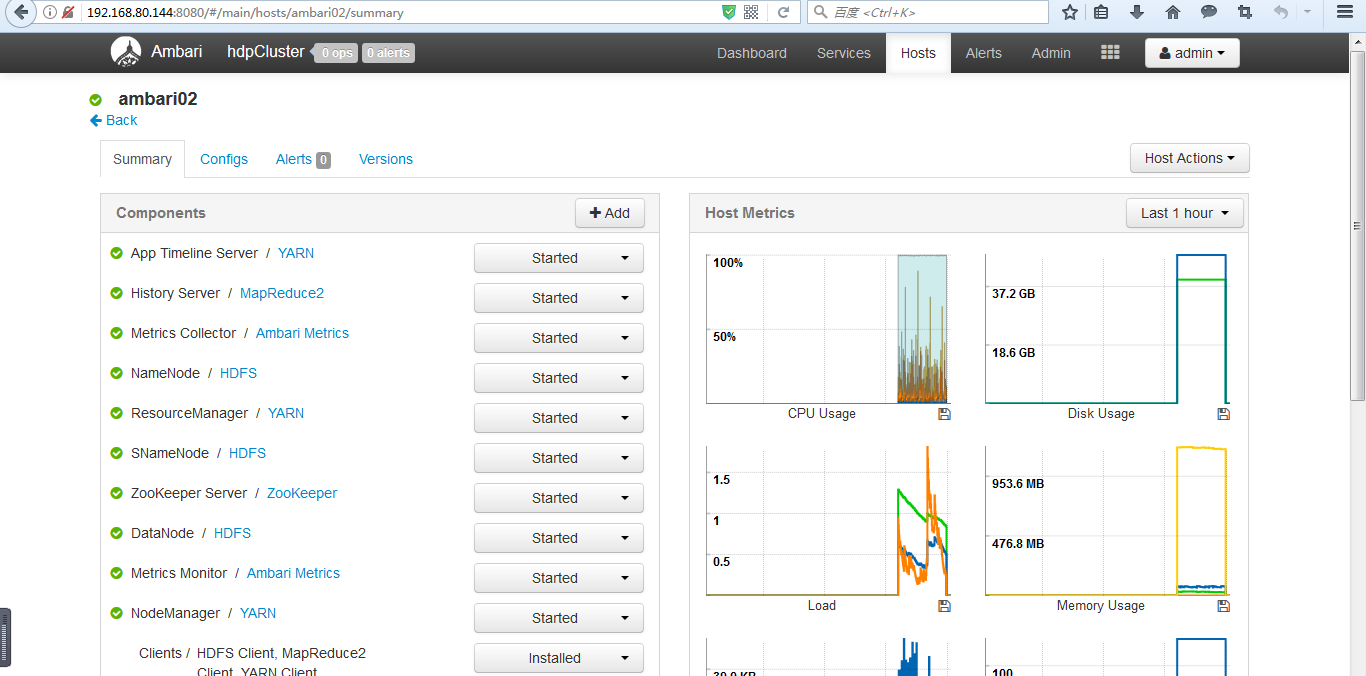

3) After a while, all the services are up and running.

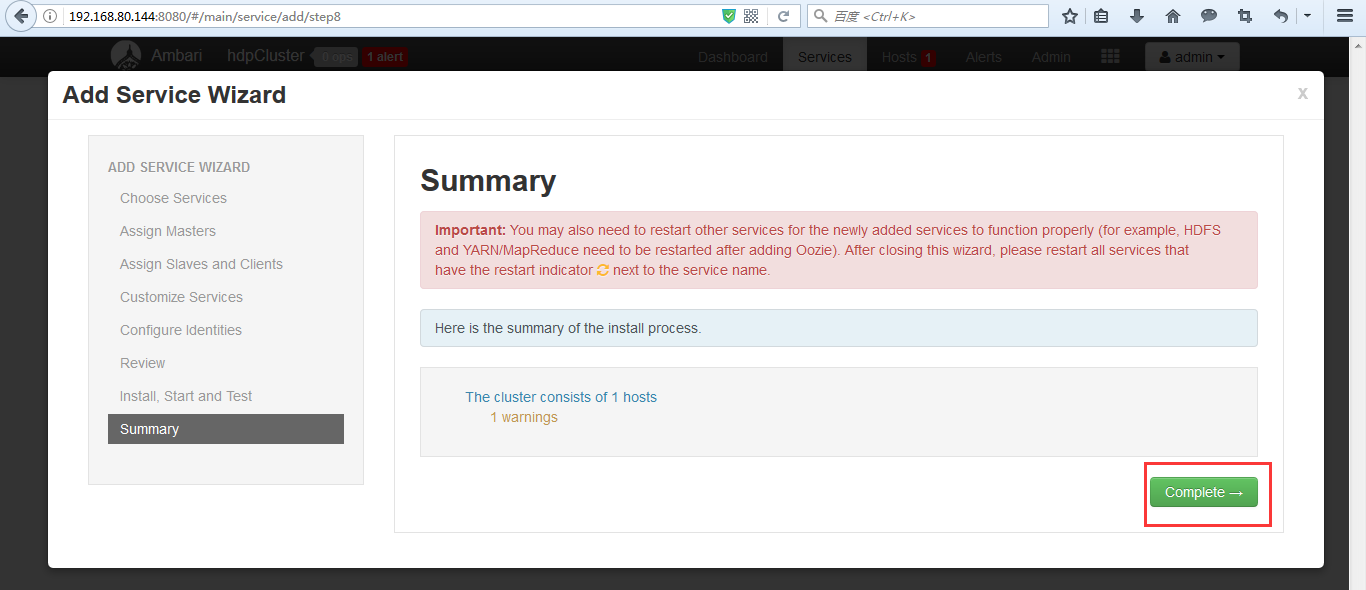

See also the post "Ambari installation: the (Metrics Collector and Metrics Monitor) Install Pending ... problem".

After that, the problem was solved, as shown below.

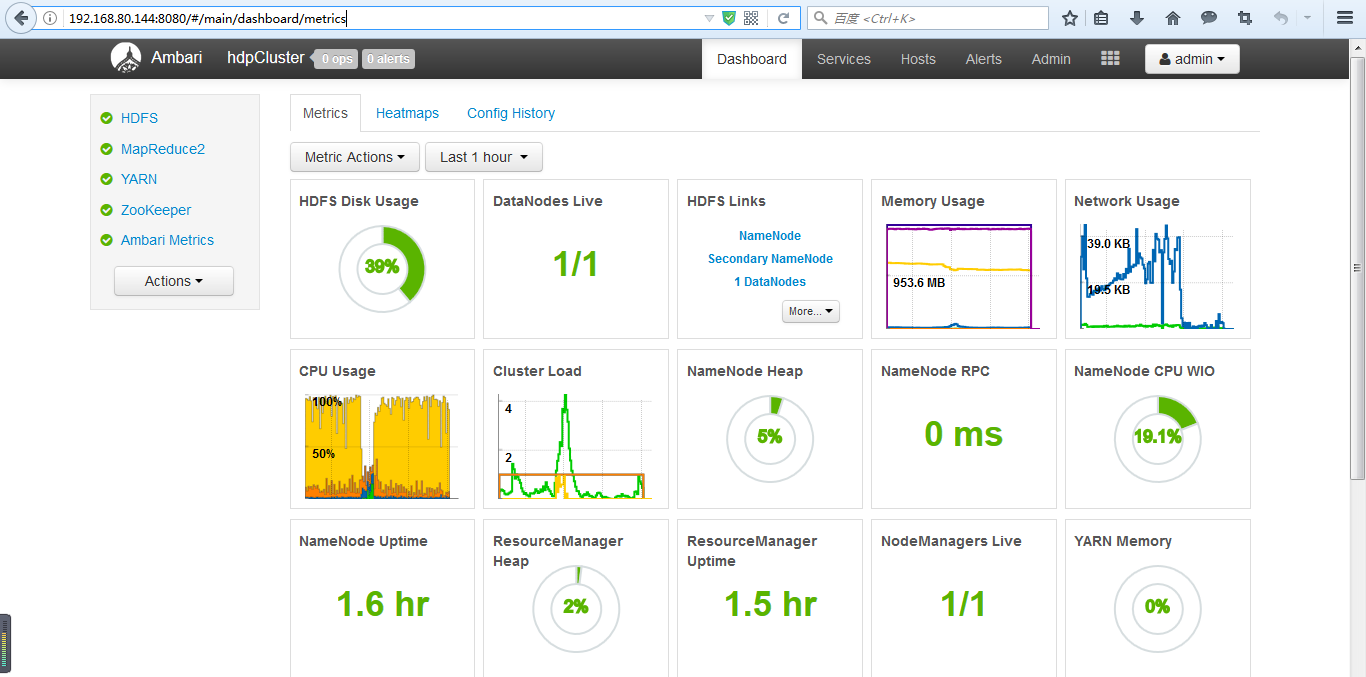

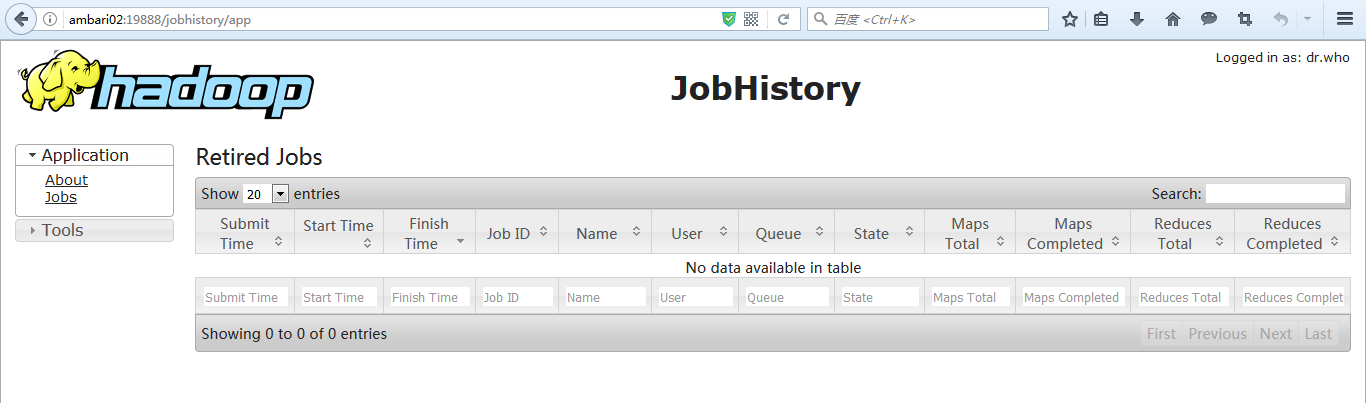

(4) Run a MapReduce program

In fact, during the MapReduce service check, the system already ran a MapReduce job as a test.
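To run a job by hand as well, the bundled examples jar is a convenient choice. The sketch below submits the `pi` example; the jar path is an assumption based on the usual HDP layout and is not taken from this installation, so adjust it if your layout differs. The `run` and `run_pi_example` helpers and the `DRY_RUN` switch are made up for illustration.

```shell
#!/usr/bin/env bash
# Set DRY_RUN=1 to print the command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run_pi_example() {
  # Assumed default jar location for an HDP install; pass another path as $1 if needed.
  local jar="${1:-/usr/hdp/current/hadoop-mapreduce-client/hadoop-mapreduce-examples.jar}"
  run hadoop jar "$jar" pi 2 10   # 2 map tasks, 10 samples per map
}

# Example: preview the command first
#   DRY_RUN=1 run_pi_example
```

The job should then show up in the YARN ResourceManager UI while it runs.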

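OK, at this point our single-node cluster has been deployed successfully.

This article is reposted from the cnblogs blog 大数据躺过的坑. Original link: http://www.cnblogs.com/zlslch/p/6629249.html. To repost it, please contact the original author.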