Why use storm-1.0.2 when I have already used storm-0.9.6?

A Storm cluster, too, is made up of master nodes and slave (worker) nodes.

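How Storm versions have evolved:

Storm 0.9.x

Storm 0.10.x

Storm 1.x

In these earlier versions, Storm's core source code is written in a mix of Java and Clojure.

Storm 2.x

This later line was rewritten entirely in Java.

(Alibaba rewrote Storm early on and released it as JStorm. JStorm was later contributed to Apache Storm, and that effort took on the job of rewriting Storm in Java, which is where Storm 2.x comes from.)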

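Note:

In Storm 0.9.x a cluster supports only one nimbus node, so the master node is a single point of failure.

In versions after Storm 0.10.x (that is, from 1.0 on), a cluster can run several nimbus nodes: one is elected leader and does the actual work, while the others stay on standby.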

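Master node (control node, master) [there can be one or more master nodes]

Responsibilities: distributes code and monitors its execution.

nimbus: the master daemon that carries out these duties.

ui: shows cluster information and the running state of each topology.

logviewer: since there can be several master nodes, you sometimes need to check their logs as well.

Slave node (worker node) [there can be one or more slave nodes]

Responsibilities: launches worker processes that execute tasks.

supervisor: the slave-node daemon that starts and watches those worker processes.

logviewer: lets you view a topology's run logs through the web UI.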

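Storm local mode

Local mode simulates all the functions of a Storm cluster inside a single process, which is very convenient for development and testing. Running a topology in local mode is similar to running it on a cluster.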

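To create an in-process "cluster", just use a LocalCluster object:

```java
// Storm 1.x package; in 0.9.x/0.10.x this was backtype.storm.LocalCluster
import org.apache.storm.LocalCluster;

LocalCluster cluster = new LocalCluster();
```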

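You can then submit topologies with the LocalCluster object's submitTopology method, which behaves the same as the corresponding method on StormSubmitter. submitTopology takes three arguments: the topology name, the topology configuration, and the topology object itself. You can stop a topology with killTopology, which takes the topology name as its argument.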

To shut down a local cluster, simply call `cluster.shutdown();`.

Storm distributed-mode installation (the subject of this post)

Official installation guide:

http://storm.apache.org/releases/current/Setting-up-a-Storm-cluster.html

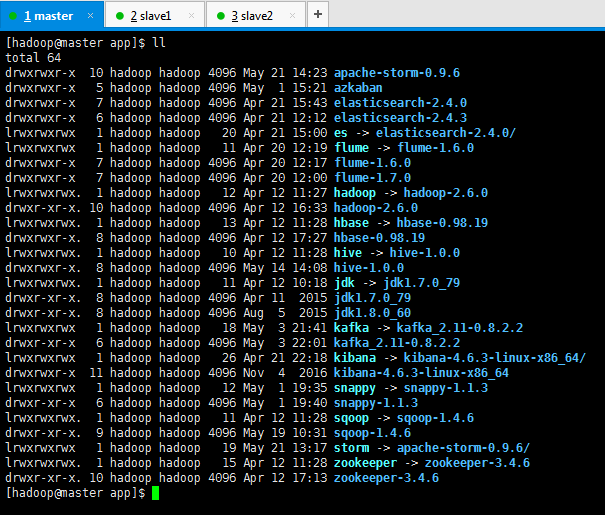

Machines: download the Storm package into /home/hadoop/app on each of master, slave1 and slave2.


Layout used in this post:

master: nimbus

slave1: nimbus, supervisor

slave2: supervisor

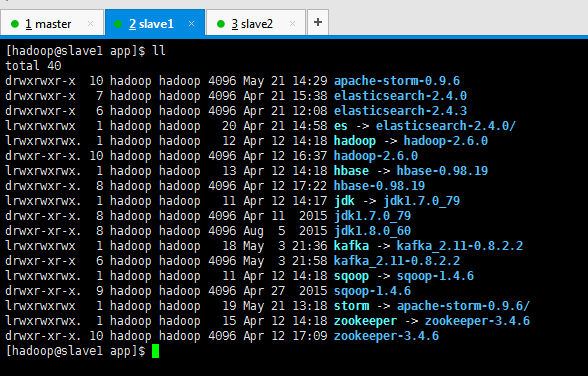

1. Download apache-storm-1.0.2.tar.gz

http://archive.apache.org/dist/storm/apache-storm-1.0.2/


Alternatively, download it directly into the installation directory:

```shell
wget http://apache.fayea.com/storm/apache-storm-1.0.2/apache-storm-1.0.2.tar.gz
```

I chose to download it first and then upload it to the machines.

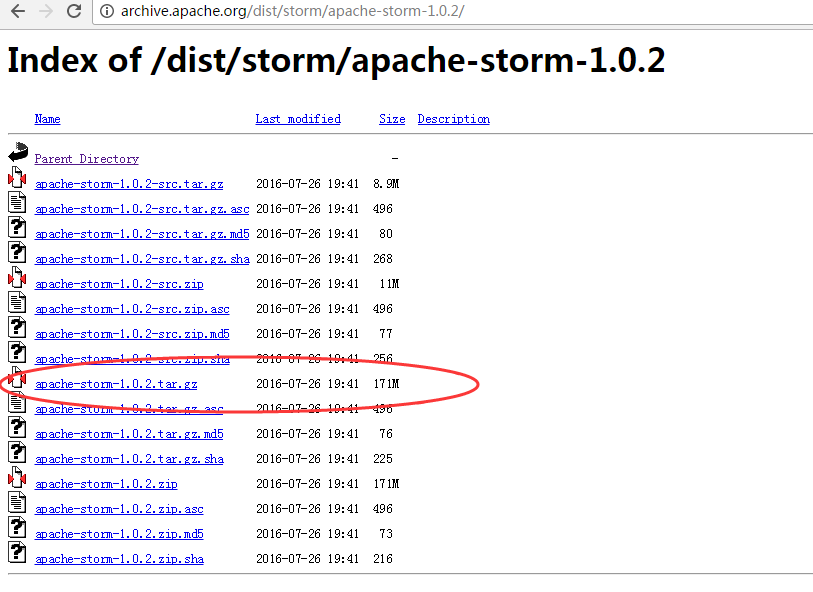

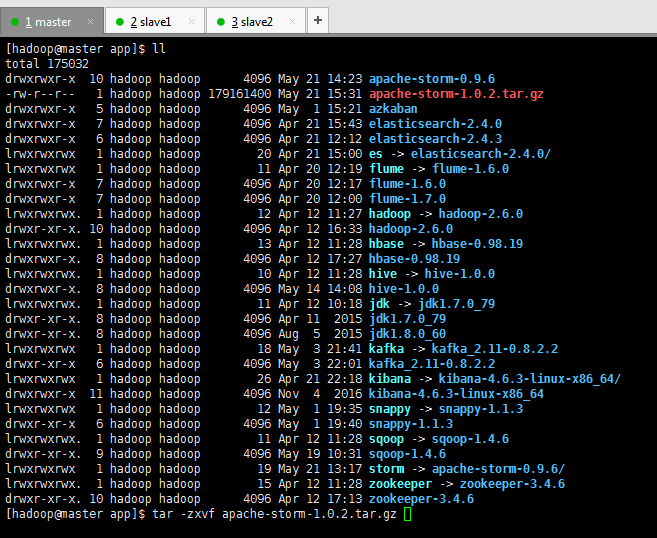

2. Upload the archive


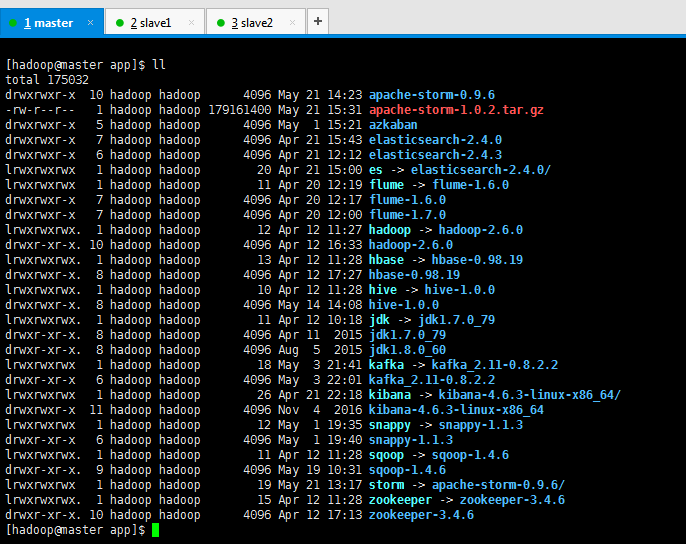

```
[hadoop@master app]$ ll
total 64
drwxrwxr-x 10 hadoop hadoop 4096 May 21 14:23 apache-storm-0.9.6
drwxrwxr-x 5 hadoop hadoop 4096 May 1 15:21 azkaban
drwxrwxr-x 7 hadoop hadoop 4096 Apr 21 15:43 elasticsearch-2.4.0
drwxrwxr-x 6 hadoop hadoop 4096 Apr 21 12:12 elasticsearch-2.4.3
lrwxrwxrwx 1 hadoop hadoop 20 Apr 21 15:00 es -> elasticsearch-2.4.0/
lrwxrwxrwx 1 hadoop hadoop 11 Apr 20 12:19 flume -> flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:17 flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:00 flume-1.7.0
lrwxrwxrwx. 1 hadoop hadoop 12 Apr 12 11:27 hadoop -> hadoop-2.6.0
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 16:33 hadoop-2.6.0
lrwxrwxrwx. 1 hadoop hadoop 13 Apr 12 11:28 hbase -> hbase-0.98.19
drwxrwxr-x. 8 hadoop hadoop 4096 Apr 12 17:27 hbase-0.98.19
lrwxrwxrwx. 1 hadoop hadoop 10 Apr 12 11:28 hive -> hive-1.0.0
drwxrwxr-x. 8 hadoop hadoop 4096 May 14 14:08 hive-1.0.0
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 10:18 jdk -> jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60
lrwxrwxrwx 1 hadoop hadoop 18 May 3 21:41 kafka -> kafka_2.11-0.8.2.2
drwxr-xr-x 6 hadoop hadoop 4096 May 3 22:01 kafka_2.11-0.8.2.2
lrwxrwxrwx 1 hadoop hadoop 26 Apr 21 22:18 kibana -> kibana-4.6.3-linux-x86_64/
drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64
lrwxrwxrwx 1 hadoop hadoop 12 May 1 19:35 snappy -> snappy-1.1.3
drwxr-xr-x 6 hadoop hadoop 4096 May 1 19:40 snappy-1.1.3
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 11:28 sqoop -> sqoop-1.4.6
drwxr-xr-x. 9 hadoop hadoop 4096 May 19 10:31 sqoop-1.4.6
lrwxrwxrwx 1 hadoop hadoop 19 May 21 13:17 storm -> apache-storm-0.9.6/
lrwxrwxrwx. 1 hadoop hadoop 15 Apr 12 11:28 zookeeper -> zookeeper-3.4.6
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 17:13 zookeeper-3.4.6
[hadoop@master app]$ rz
[hadoop@master app]$ ll
total 175032
drwxrwxr-x 10 hadoop hadoop 4096 May 21 14:23 apache-storm-0.9.6
-rw-r--r-- 1 hadoop hadoop 179161400 May 21 15:31 apache-storm-1.0.2.tar.gz
drwxrwxr-x 5 hadoop hadoop 4096 May 1 15:21 azkaban
drwxrwxr-x 7 hadoop hadoop 4096 Apr 21 15:43 elasticsearch-2.4.0
drwxrwxr-x 6 hadoop hadoop 4096 Apr 21 12:12 elasticsearch-2.4.3
lrwxrwxrwx 1 hadoop hadoop 20 Apr 21 15:00 es -> elasticsearch-2.4.0/
lrwxrwxrwx 1 hadoop hadoop 11 Apr 20 12:19 flume -> flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:17 flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:00 flume-1.7.0
lrwxrwxrwx. 1 hadoop hadoop 12 Apr 12 11:27 hadoop -> hadoop-2.6.0
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 16:33 hadoop-2.6.0
lrwxrwxrwx. 1 hadoop hadoop 13 Apr 12 11:28 hbase -> hbase-0.98.19
drwxrwxr-x. 8 hadoop hadoop 4096 Apr 12 17:27 hbase-0.98.19
lrwxrwxrwx. 1 hadoop hadoop 10 Apr 12 11:28 hive -> hive-1.0.0
drwxrwxr-x. 8 hadoop hadoop 4096 May 14 14:08 hive-1.0.0
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 10:18 jdk -> jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60
lrwxrwxrwx 1 hadoop hadoop 18 May 3 21:41 kafka -> kafka_2.11-0.8.2.2
drwxr-xr-x 6 hadoop hadoop 4096 May 3 22:01 kafka_2.11-0.8.2.2
lrwxrwxrwx 1 hadoop hadoop 26 Apr 21 22:18 kibana -> kibana-4.6.3-linux-x86_64/
drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64
lrwxrwxrwx 1 hadoop hadoop 12 May 1 19:35 snappy -> snappy-1.1.3
drwxr-xr-x 6 hadoop hadoop 4096 May 1 19:40 snappy-1.1.3
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 11:28 sqoop -> sqoop-1.4.6
drwxr-xr-x. 9 hadoop hadoop 4096 May 19 10:31 sqoop-1.4.6
lrwxrwxrwx 1 hadoop hadoop 19 May 21 13:17 storm -> apache-storm-0.9.6/
lrwxrwxrwx. 1 hadoop hadoop 15 Apr 12 11:28 zookeeper -> zookeeper-3.4.6
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 17:13 zookeeper-3.4.6
[hadoop@master app]$
```


Do the same on slave1 and slave2; I won't repeat it here.
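Uploading with rz has to be repeated on every machine. As an alternative, here is a minimal sketch that pushes the tarball from master to the other nodes with scp. It assumes passwordless SSH for the hadoop user; the function name `copy_to_hosts` and the `SCP` override (for a dry run) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a file from master to the same directory on other hosts.
# Assumes passwordless SSH for the hadoop user.
# Set SCP=echo to print the scp commands instead of running them.
copy_to_hosts() {
  local file=$1 dest_dir=$2
  shift 2
  local host
  for host in "$@"; do
    ${SCP:-scp} "$file" "hadoop@${host}:${dest_dir}/" || return 1
  done
}

# Usage on master:
#   SCP=echo copy_to_hosts apache-storm-1.0.2.tar.gz /home/hadoop/app slave1 slave2   # dry run
#   copy_to_hosts apache-storm-1.0.2.tar.gz /home/hadoop/app slave1 slave2
```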

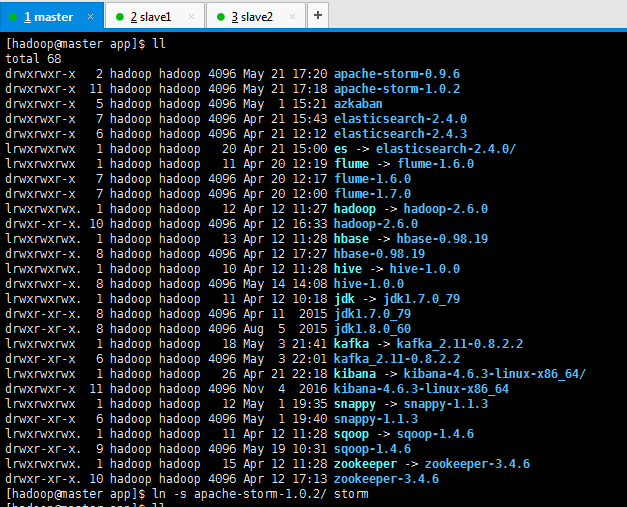

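3. Extract the archive and set the owning user and group (since I extract it as the hadoop user, the files already belong to hadoop:hadoop)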

```
[hadoop@master app]$ tar -zxvf apache-storm-1.0.2.tar.gz
```

Do the same on slave1 and slave2; I won't repeat it here.
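The ownership part of this step only matters if the archive is unpacked as root. A minimal sketch that does both in one call; `extract_and_own` is a hypothetical name. Run it as root with owner `hadoop:hadoop`, or simply run tar as the hadoop user as I did above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a tarball into dest and give the result to owner (user:group).
# Prints the path of the extracted top-level directory.
extract_and_own() {
  local tarball=$1 dest=$2 owner=$3
  mkdir -p "$dest" || return 1
  tar -zxf "$tarball" -C "$dest" || return 1
  # Top-level directory = first path component of the first archive entry
  # (e.g. apache-storm-1.0.2 for the Storm release tarball).
  local top
  top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  chown -R "$owner" "$dest/$top" || return 1
  printf '%s\n' "$dest/$top"
}

# Usage (as root, on each node):
#   extract_and_own /home/hadoop/app/apache-storm-1.0.2.tar.gz /home/hadoop/app hadoop:hadoop
```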

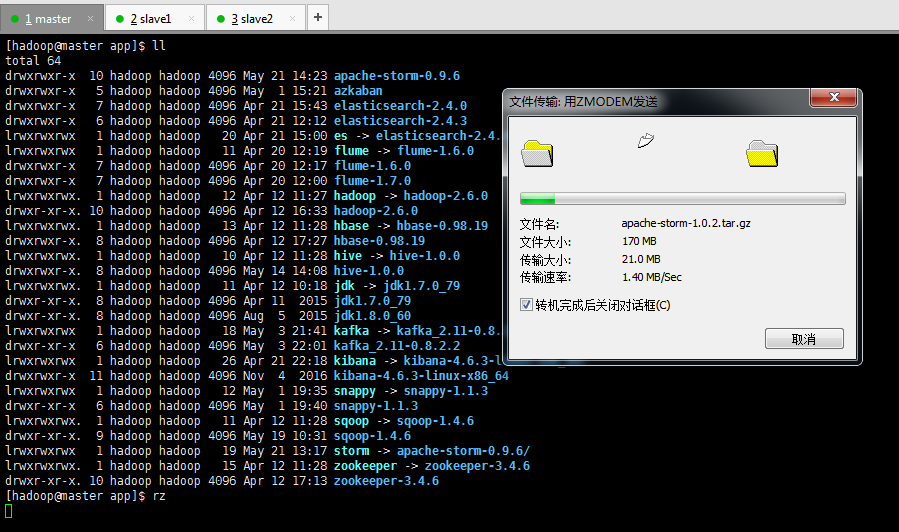

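4. Delete the archive and, to make it easier to keep several versions side by side, create a symlink (the first listing below was taken after the archive and the old storm -> apache-storm-0.9.6/ link had already been removed)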


```
[hadoop@master app]$ ll
total 68
drwxrwxr-x 2 hadoop hadoop 4096 May 21 17:20 apache-storm-0.9.6
drwxrwxr-x 11 hadoop hadoop 4096 May 21 17:18 apache-storm-1.0.2
drwxrwxr-x 5 hadoop hadoop 4096 May 1 15:21 azkaban
drwxrwxr-x 7 hadoop hadoop 4096 Apr 21 15:43 elasticsearch-2.4.0
drwxrwxr-x 6 hadoop hadoop 4096 Apr 21 12:12 elasticsearch-2.4.3
lrwxrwxrwx 1 hadoop hadoop 20 Apr 21 15:00 es -> elasticsearch-2.4.0/
lrwxrwxrwx 1 hadoop hadoop 11 Apr 20 12:19 flume -> flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:17 flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:00 flume-1.7.0
lrwxrwxrwx. 1 hadoop hadoop 12 Apr 12 11:27 hadoop -> hadoop-2.6.0
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 16:33 hadoop-2.6.0
lrwxrwxrwx. 1 hadoop hadoop 13 Apr 12 11:28 hbase -> hbase-0.98.19
drwxrwxr-x. 8 hadoop hadoop 4096 Apr 12 17:27 hbase-0.98.19
lrwxrwxrwx. 1 hadoop hadoop 10 Apr 12 11:28 hive -> hive-1.0.0
drwxrwxr-x. 8 hadoop hadoop 4096 May 14 14:08 hive-1.0.0
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 10:18 jdk -> jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60
lrwxrwxrwx 1 hadoop hadoop 18 May 3 21:41 kafka -> kafka_2.11-0.8.2.2
drwxr-xr-x 6 hadoop hadoop 4096 May 3 22:01 kafka_2.11-0.8.2.2
lrwxrwxrwx 1 hadoop hadoop 26 Apr 21 22:18 kibana -> kibana-4.6.3-linux-x86_64/
drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64
lrwxrwxrwx 1 hadoop hadoop 12 May 1 19:35 snappy -> snappy-1.1.3
drwxr-xr-x 6 hadoop hadoop 4096 May 1 19:40 snappy-1.1.3
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 11:28 sqoop -> sqoop-1.4.6
drwxr-xr-x. 9 hadoop hadoop 4096 May 19 10:31 sqoop-1.4.6
lrwxrwxrwx. 1 hadoop hadoop 15 Apr 12 11:28 zookeeper -> zookeeper-3.4.6
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 17:13 zookeeper-3.4.6
[hadoop@master app]$ ln -s apache-storm-1.0.2/ storm
[hadoop@master app]$ ll
total 68
drwxrwxr-x 2 hadoop hadoop 4096 May 21 17:20 apache-storm-0.9.6
drwxrwxr-x 11 hadoop hadoop 4096 May 21 17:18 apache-storm-1.0.2
drwxrwxr-x 5 hadoop hadoop 4096 May 1 15:21 azkaban
drwxrwxr-x 7 hadoop hadoop 4096 Apr 21 15:43 elasticsearch-2.4.0
drwxrwxr-x 6 hadoop hadoop 4096 Apr 21 12:12 elasticsearch-2.4.3
lrwxrwxrwx 1 hadoop hadoop 20 Apr 21 15:00 es -> elasticsearch-2.4.0/
lrwxrwxrwx 1 hadoop hadoop 11 Apr 20 12:19 flume -> flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:17 flume-1.6.0
drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:00 flume-1.7.0
lrwxrwxrwx. 1 hadoop hadoop 12 Apr 12 11:27 hadoop -> hadoop-2.6.0
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 16:33 hadoop-2.6.0
lrwxrwxrwx. 1 hadoop hadoop 13 Apr 12 11:28 hbase -> hbase-0.98.19
drwxrwxr-x. 8 hadoop hadoop 4096 Apr 12 17:27 hbase-0.98.19
lrwxrwxrwx. 1 hadoop hadoop 10 Apr 12 11:28 hive -> hive-1.0.0
drwxrwxr-x. 8 hadoop hadoop 4096 May 14 14:08 hive-1.0.0
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 10:18 jdk -> jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60
lrwxrwxrwx 1 hadoop hadoop 18 May 3 21:41 kafka -> kafka_2.11-0.8.2.2
drwxr-xr-x 6 hadoop hadoop 4096 May 3 22:01 kafka_2.11-0.8.2.2
lrwxrwxrwx 1 hadoop hadoop 26 Apr 21 22:18 kibana -> kibana-4.6.3-linux-x86_64/
drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64
lrwxrwxrwx 1 hadoop hadoop 12 May 1 19:35 snappy -> snappy-1.1.3
drwxr-xr-x 6 hadoop hadoop 4096 May 1 19:40 snappy-1.1.3
lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 11:28 sqoop -> sqoop-1.4.6
drwxr-xr-x. 9 hadoop hadoop 4096 May 19 10:31 sqoop-1.4.6
lrwxrwxrwx 1 hadoop hadoop 19 May 21 17:21 storm -> apache-storm-1.0.2/
lrwxrwxrwx. 1 hadoop hadoop 15 Apr 12 11:28 zookeeper -> zookeeper-3.4.6
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 17:13 zookeeper-3.4.6
[hadoop@master app]$
```


Do the same on slave1 and slave2; I won't repeat it here.
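If the old `storm -> apache-storm-0.9.6/` link is still present, a plain `ln -s apache-storm-1.0.2/ storm` does not replace it; it creates a new link inside apache-storm-0.9.6/ instead. A minimal sketch of switching the link in one step with `ln -sfn`, which also makes rolling back to 0.9.6 a one-liner; `switch_storm_link` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the version-neutral "storm" link in app_dir at a release dir.
# -f replaces an existing link; -n stops ln from following an existing link that
# points to a directory (which would create the new link inside that directory).
switch_storm_link() {
  local app_dir=$1 release=$2
  [ -d "$app_dir/$release" ] || { echo "missing $app_dir/$release" >&2; return 1; }
  ln -sfn "$release" "$app_dir/storm"
}

# Usage:
#   rm /home/hadoop/app/apache-storm-1.0.2.tar.gz
#   switch_storm_link /home/hadoop/app apache-storm-1.0.2
#   switch_storm_link /home/hadoop/app apache-storm-0.9.6   # roll back
```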

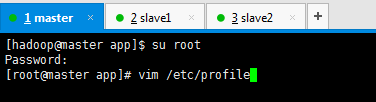

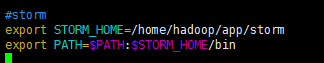

5. Configure the environment variables


```
[hadoop@master app]$ su root
Password:
[root@master app]# vim /etc/profile
```

Do the same on slave1 and slave2.


Append the following to /etc/profile:

```
#storm
export STORM_HOME=/home/hadoop/app/storm
export PATH=$PATH:$STORM_HOME/bin
```

Do the same on slave1 and slave2.

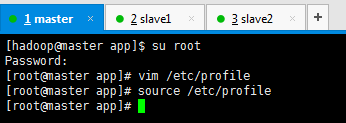


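```
[hadoop@master app]$ su root
Password:
[root@master app]# vim /etc/profile
[root@master app]# source /etc/profile
[root@master app]#
```

Note that `source` only affects the current (root) shell. After switching back to the hadoop user, run `source /etc/profile` again (or log in again) so that STORM_HOME and PATH are set there too.

Do the same on slave1 and slave2.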
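Since the same lines go into /etc/profile on every node, a minimal sketch that appends them only when STORM_HOME is not set in the file yet, so re-running it is harmless. `add_storm_env` is a hypothetical name; the paths are this post's.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Storm variables to a profile file, once.
add_storm_env() {
  local profile=$1 storm_home=$2
  if grep -q '^export STORM_HOME=' "$profile" 2>/dev/null; then
    return 0   # already configured; leave the file untouched
  fi
  # $storm_home is expanded now; \$PATH and \$STORM_HOME stay literal in the file.
  cat >> "$profile" <<EOF
#storm
export STORM_HOME=$storm_home
export PATH=\$PATH:\$STORM_HOME/bin
EOF
}

# Usage (as root on each node), then reload in the hadoop user's shell as well:
#   add_storm_env /etc/profile /home/hadoop/app/storm
#   source /etc/profile
```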

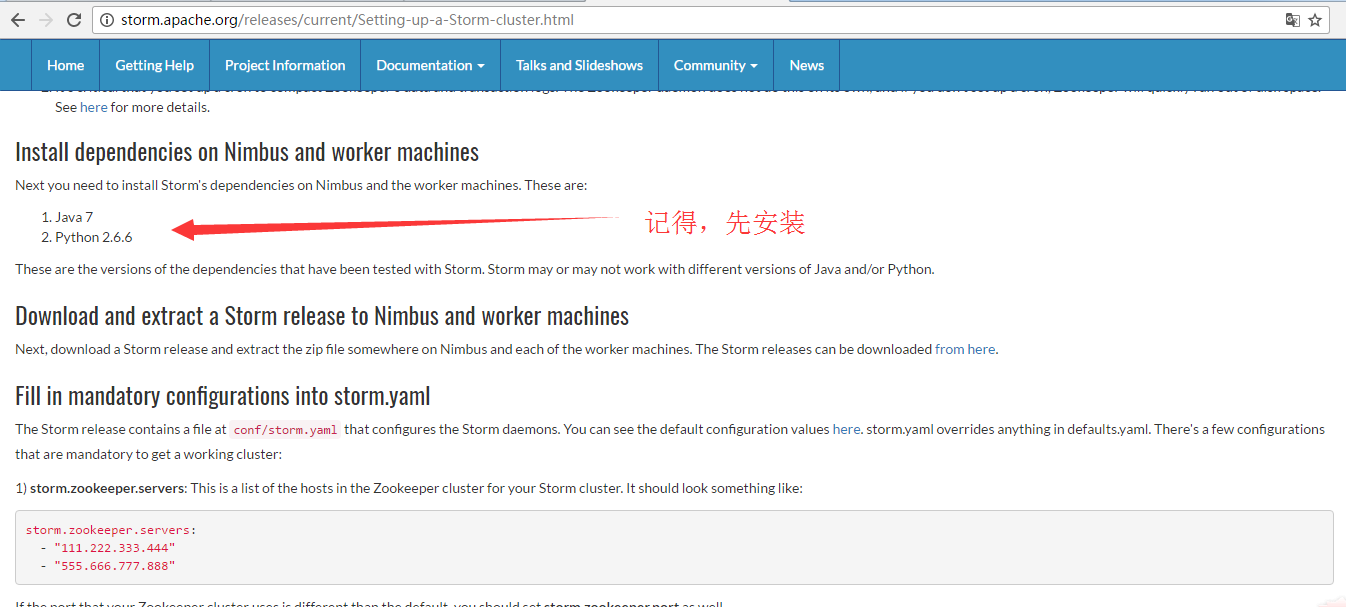

6. Install the other software a Storm cluster needs


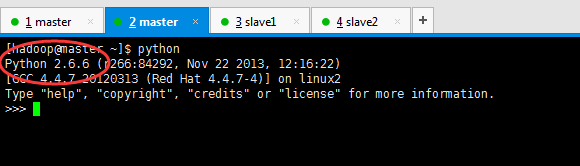

My machines run CentOS 6.5, which already ships with Python:


```
[hadoop@master ~]$ python
Python 2.6.6 (r266:84292, Nov 22 2013, 12:16:22)
[GCC 4.4.7 20120313 (Red Hat 4.4.7-4)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>>
```
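Before starting Storm it is worth confirming the Python and Java versions on each node. A minimal sketch that compares dotted version strings with GNU `sort -V`; `version_ge` is a hypothetical helper, and the minimums used in the usage lines (Python 2.6.6, Java 1.7) are assumed from the official setup guide for this release.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when version $1 >= version $2 (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on each node (Python 2 and Java both print their version on stderr):
#   py=$(python -V 2>&1 | awk '{print $2}')                  # e.g. 2.6.6
#   java_v=$(java -version 2>&1 | head -n 1 | awk -F'"' '{print $2}')   # e.g. 1.7.0_79
#   version_ge "$py" 2.6.6 && echo "python ok: $py"
#   version_ge "$java_v" 1.7 && echo "java ok: $java_v"
```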

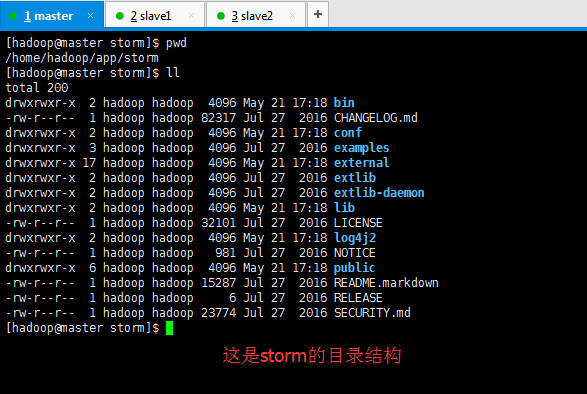

7. Edit the Storm configuration file


```
[hadoop@master storm]$ pwd
/home/hadoop/app/storm
[hadoop@master storm]$ ll
total 200
drwxrwxr-x 2 hadoop hadoop 4096 May 21 17:18 bin
-rw-r--r-- 1 hadoop hadoop 82317 Jul 27 2016 CHANGELOG.md
drwxrwxr-x 2 hadoop hadoop 4096 May 21 17:18 conf
drwxrwxr-x 3 hadoop hadoop 4096 Jul 27 2016 examples
drwxrwxr-x 17 hadoop hadoop 4096 May 21 17:18 external
drwxrwxr-x 2 hadoop hadoop 4096 Jul 27 2016 extlib
drwxrwxr-x 2 hadoop hadoop 4096 Jul 27 2016 extlib-daemon
drwxrwxr-x 2 hadoop hadoop 4096 May 21 17:18 lib
-rw-r--r-- 1 hadoop hadoop 32101 Jul 27 2016 LICENSE
drwxrwxr-x 2 hadoop hadoop 4096 May 21 17:18 log4j2
-rw-r--r-- 1 hadoop hadoop 981 Jul 27 2016 NOTICE
drwxrwxr-x 6 hadoop hadoop 4096 May 21 17:18 public
-rw-r--r-- 1 hadoop hadoop 15287 Jul 27 2016 README.markdown
-rw-r--r-- 1 hadoop hadoop 6 Jul 27 2016 RELEASE
-rw-r--r-- 1 hadoop hadoop 23774 Jul 27 2016 SECURITY.md
[hadoop@master storm]$
```

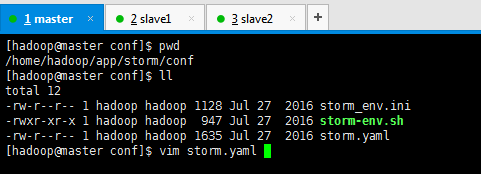

Go into Storm's conf directory and edit storm.yaml:


```
[hadoop@master conf]$ pwd
/home/hadoop/app/storm/conf
[hadoop@master conf]$ ll
total 12
-rw-r--r-- 1 hadoop hadoop 1128 Jul 27 2016 storm_env.ini
-rwxr-xr-x 1 hadoop hadoop 947 Jul 27 2016 storm-env.sh
-rw-r--r-- 1 hadoop hadoop 1635 Jul 27 2016 storm.yaml
[hadoop@master conf]$ vim storm.yaml
```

Do the same on slave1 and slave2.

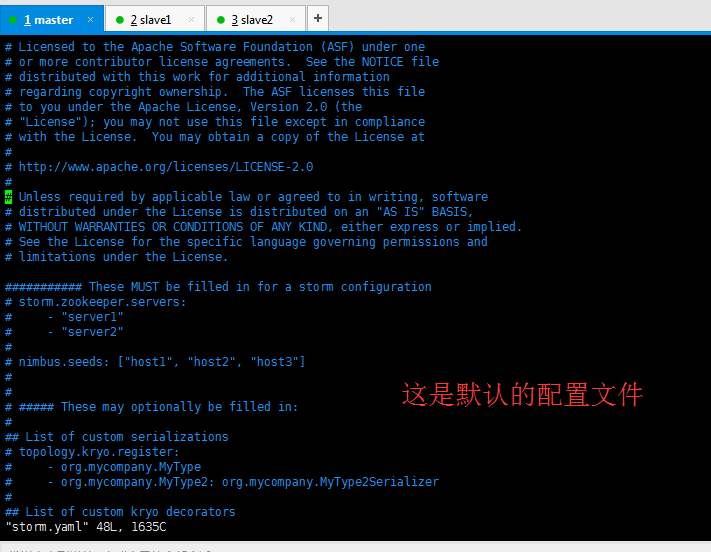

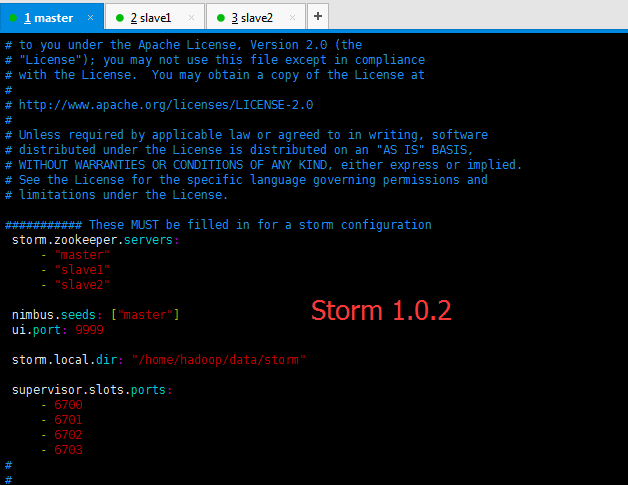


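Here is a very handy tip.

Note that the first column needs a space: as in the commented-out template, where deleting the leading `#` leaves exactly one space, every top-level key starts with one space. Keep that indentation identical for all keys, since YAML is indentation-sensitive.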

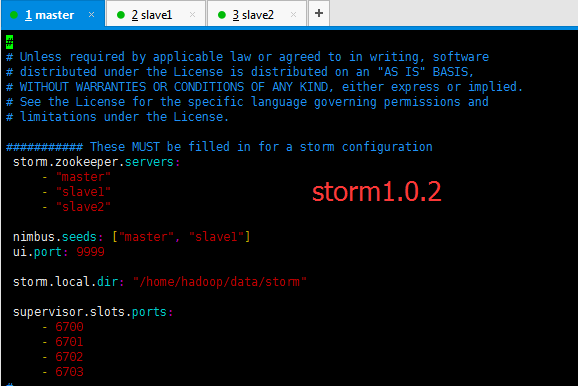


HA configuration (again, note the space in the first column):

```yaml
 storm.zookeeper.servers:
     - "master"
     - "slave1"
     - "slave2"

 nimbus.seeds: ["master", "slave1"]

 ui.port: 9999

 storm.local.dir: "/home/hadoop/data/storm"

 supervisor.slots.ports:
     - 6700
     - 6701
     - 6702
     - 6703
```

Note: I set ui.port to the custom value 9999 to avoid a clash on port 8080, which both Storm and Spark use by default.

Do the same on slave1 and slave2.

Non-HA configuration (again, note the space in the first column):

```yaml
 storm.zookeeper.servers:
     - "master"
     - "slave1"
     - "slave2"

 nimbus.seeds: ["master"]

 ui.port: 9999

 storm.local.dir: "/home/hadoop/data/storm"

 supervisor.slots.ports:
     - 6700
     - 6701
     - 6702
     - 6703
```

Note: as above, ui.port is set to 9999 to avoid clashing with the 8080 default that Storm and Spark share.

Do the same on slave1 and slave2.
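The two variants differ only in nimbus.seeds. A minimal sketch that writes the file from a script, so master, slave1 and slave2 get identical copies; `write_storm_yaml` is a hypothetical name, and the hostnames, UI port and paths are simply this post's values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write this post's storm.yaml into conf_dir.
# seeds is the inside of the nimbus.seeds list, e.g. '"master", "slave1"' (HA) or '"master"'.
write_storm_yaml() {
  local conf_dir=$1 seeds=$2
  # Every top-level key keeps one leading space, matching the config above;
  # list items are indented further under their key.
  cat > "$conf_dir/storm.yaml" <<EOF
 storm.zookeeper.servers:
     - "master"
     - "slave1"
     - "slave2"
 nimbus.seeds: [$seeds]
 ui.port: 9999
 storm.local.dir: "/home/hadoop/data/storm"
 supervisor.slots.ports:
     - 6700
     - 6701
     - 6702
     - 6703
EOF
}

# Usage (HA) on master, then copy the file to the other nodes:
#   write_storm_yaml /home/hadoop/app/storm/conf '"master", "slave1"'
#   scp /home/hadoop/app/storm/conf/storm.yaml hadoop@slave1:/home/hadoop/app/storm/conf/
```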

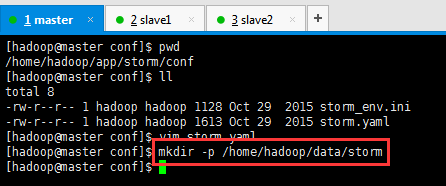

8. Create the Storm data directory (the storm.local.dir set above)


```
[hadoop@master conf]$ mkdir -p /home/hadoop/data/storm
```

Do the same on slave1 and slave2.
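Steps like this one are repeated verbatim on every node. A minimal sketch of running one command on all of them from master, assuming passwordless SSH between the nodes; `run_on_all` and the `SSH` override are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one shell command on each listed host over SSH.
# Set SSH=echo to print "host command" instead of running it.
run_on_all() {
  local cmd=$1
  shift
  local host
  for host in "$@"; do
    ${SSH:-ssh} "$host" "$cmd" || { echo "failed on $host" >&2; return 1; }
  done
}

# Usage for step 8:
#   run_on_all 'mkdir -p /home/hadoop/data/storm' master slave1 slave2
```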

9. Start the Storm cluster (HA)

Layout used in this post:

master (master node): nimbus

slave1 (master and worker node): nimbus, supervisor

slave2 (worker node): supervisor

1. First, start nimbus on master (from the Storm installation directory):

```shell
nohup bin/storm nimbus >/dev/null 2>&1 &
```


```
[hadoop@master storm]$ jps
2374 QuorumPeerMain
7862 Jps
3343 AzkabanWebServer
2813 ResourceManager
3401 AzkabanExecutorServer
2515 NameNode
2671 SecondaryNameNode
[hadoop@master storm]$ nohup bin/storm nimbus >/dev/null 2>&1 &
[1] 7876
[hadoop@master storm]$ jps
2374 QuorumPeerMain
7905 Jps
7910 config_value
3343 AzkabanWebServer
2813 ResourceManager
3401 AzkabanExecutorServer
2515 NameNode
2671 SecondaryNameNode
[hadoop@master storm]$
```
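Right after the nohup command, jps may list only a `config_value` process (the storm launcher runs it briefly to read the configuration); the `nimbus` process should show up a few seconds later. A minimal sketch for waiting on a daemon instead of re-running jps by hand; `wait_for_proc` and the `JPS` override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll jps until a process with the given name appears.
# Daemon names as jps shows them: nimbus, supervisor, logviewer; the UI appears as "core".
# Set JPS to another command (e.g. a stub function) for testing.
wait_for_proc() {
  local name=$1 timeout=${2:-30} waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # jps prints "<pid> <name>"; awk exits 0 only if column 2 matches exactly.
    if ${JPS:-jps} | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$name not running after ${timeout}s" >&2
  return 1
}

# Usage on master:
#   nohup bin/storm nimbus >/dev/null 2>&1 &
#   wait_for_proc nimbus 60
```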

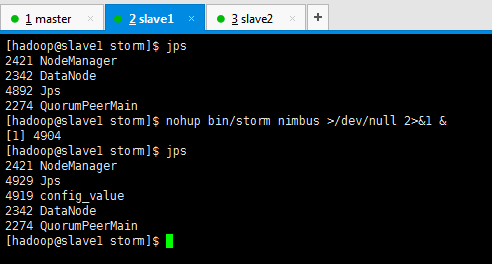

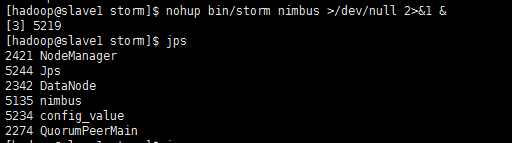

2. Then start nimbus on slave1:

```shell
nohup bin/storm nimbus >/dev/null 2>&1 &
```


```
[hadoop@slave1 storm]$ jps
2421 NodeManager
2342 DataNode
4892 Jps
2274 QuorumPeerMain
[hadoop@slave1 storm]$ nohup bin/storm nimbus >/dev/null 2>&1 &
[1] 4904
[hadoop@slave1 storm]$ jps
2421 NodeManager
5244 Jps
2342 DataNode
5135 nimbus
5234 config_value
2274 QuorumPeerMain
```

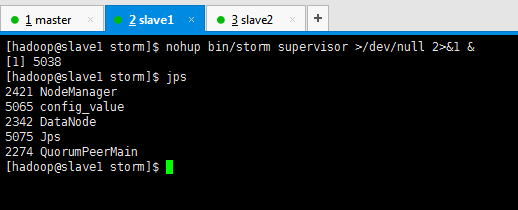

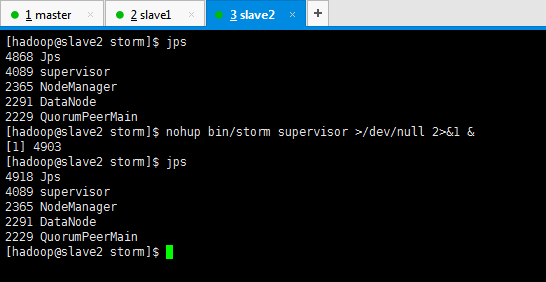

3. Then start supervisor on slave1 and slave2:

```shell
nohup bin/storm supervisor >/dev/null 2>&1 &
```


```
[hadoop@slave2 storm]$ jps
4868 Jps
4089 supervisor
2365 NodeManager
2291 DataNode
2229 QuorumPeerMain
[hadoop@slave2 storm]$ nohup bin/storm supervisor >/dev/null 2>&1 &
[1] 4903
[hadoop@slave2 storm]$ jps
4918 Jps
4089 supervisor
2365 NodeManager
2291 DataNode
2229 QuorumPeerMain
[hadoop@slave2 storm]$
```

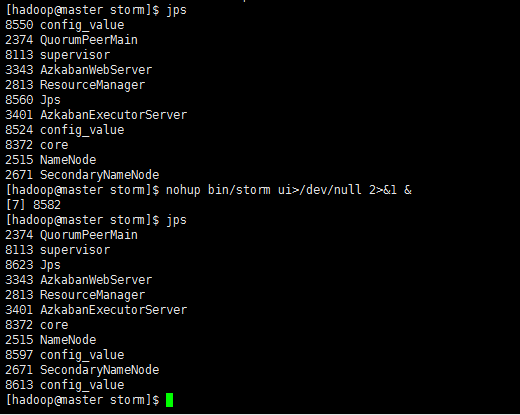

4. Start the UI on master:

```shell
nohup bin/storm ui >/dev/null 2>&1 &
```


```
[hadoop@master storm]$ jps
8550 config_value
2374 QuorumPeerMain
8113 supervisor
3343 AzkabanWebServer
2813 ResourceManager
8560 Jps
3401 AzkabanExecutorServer
8524 config_value
8372 core
2515 NameNode
2671 SecondaryNameNode
[hadoop@master storm]$ nohup bin/storm ui>/dev/null 2>&1 &
[7] 8582
[hadoop@master storm]$ jps
2374 QuorumPeerMain
8113 supervisor
8623 Jps
3343 AzkabanWebServer
2813 ResourceManager
3401 AzkabanExecutorServer
8372 core
2515 NameNode
8597 config_value
2671 SecondaryNameNode
8613 config_value
[hadoop@master storm]$
```

5. Start the logviewer on master, slave1 and slave2:

```shell
nohup bin/storm logviewer >/dev/null 2>&1 &
```
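For reference, the HA start order above can be scripted from master. This is a sketch under assumptions: passwordless SSH, Storm installed at /home/hadoop/app/storm on every node, and ZooKeeper already running (QuorumPeerMain in the transcripts). `start_daemon`, `start_ha_cluster` and the `SSH` override are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical start script for this post's HA layout, run from master:
#   master: nimbus, ui, logviewer
#   slave1: nimbus, supervisor, logviewer
#   slave2: supervisor, logviewer
# Set SSH=echo to print the commands instead of running them.
STORM_HOME=${STORM_HOME:-/home/hadoop/app/storm}

# Start one Storm daemon in the background on a host; all three standard streams
# are redirected so the SSH call returns right away.
start_daemon() {
  local host=$1 daemon=$2
  ${SSH:-ssh} "$host" "cd $STORM_HOME && nohup bin/storm $daemon >/dev/null 2>&1 </dev/null &"
}

start_ha_cluster() {
  start_daemon master nimbus      # first nimbus
  start_daemon slave1 nimbus      # second nimbus (standby)
  start_daemon slave1 supervisor
  start_daemon slave2 supervisor
  start_daemon master ui
  local host
  for host in master slave1 slave2; do
    start_daemon "$host" logviewer
  done
}

# Usage:
#   SSH=echo start_ha_cluster   # dry run
#   start_ha_cluster
```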

9. Start the Storm cluster (non-HA)

Layout used in this post:

master (master node): nimbus

slave1 (worker node): supervisor

slave2 (worker node): supervisor

1. First, start nimbus on master:

```shell
nohup bin/storm nimbus >/dev/null 2>&1 &
```


[hadoop@master storm]$ jps

2374 QuorumPeerMain

7862 Jps

3343 AzkabanWebServer

2813 ResourceManager

3401 AzkabanExecutorServer 2515 NameNode 2671 SecondaryNameNode [hadoop@master storm]$ nohup bin/storm nimbus >/dev/null 2>&1 & [1] 7876 [hadoop@master storm]$ jps 2374 QuorumPeerMain 7905 Jps 7910 config_value 3343 AzkabanWebServer 2813 ResourceManager 3401 AzkabanExecutorServer 2515 NameNode 2671 SecondaryNameNode

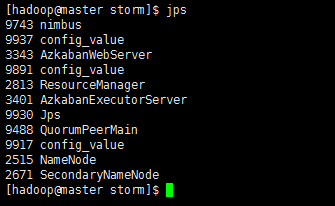

9743 nimbus [hadoop@master storm]$

2. Then start supervisor on slave1 and slave2:

```shell
nohup bin/storm supervisor >/dev/null 2>&1 &
```


```
[hadoop@slave2 storm]$ jps
4868 Jps
4089 supervisor
2365 NodeManager
2291 DataNode
2229 QuorumPeerMain
[hadoop@slave2 storm]$ nohup bin/storm supervisor >/dev/null 2>&1 &
[1] 4903
[hadoop@slave2 storm]$ jps
4918 Jps
4089 supervisor
2365 NodeManager
2291 DataNode
2229 QuorumPeerMain
[hadoop@slave2 storm]$
```

3. Start the UI on master:

```shell
nohup bin/storm ui >/dev/null 2>&1 &
```


```
[hadoop@master storm]$ jps
8550 config_value
2374 QuorumPeerMain
8113 supervisor
3343 AzkabanWebServer
2813 ResourceManager
8560 Jps
3401 AzkabanExecutorServer
8524 config_value
8372 core
2515 NameNode
2671 SecondaryNameNode
[hadoop@master storm]$ nohup bin/storm ui>/dev/null 2>&1 &
[7] 8582
[hadoop@master storm]$ jps
2374 QuorumPeerMain
8113 supervisor
8623 Jps
3343 AzkabanWebServer
2813 ResourceManager
3401 AzkabanExecutorServer
8372 core
2515 NameNode
8597 config_value
2671 SecondaryNameNode
8613 config_value
[hadoop@master storm]$
```

4. Start the logviewer on master, slave1 and slave2:

```shell
nohup bin/storm logviewer >/dev/null 2>&1 &
```


This article is reposted from the cnblogs blog 大数据躺过的坑. Original link: http://www.cnblogs.com/zlslch/p/6885145.html. For reprints, please contact the original author.