[root@localhost config]

Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties

[2017-06-20T22:55:23,801][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.180.23:9200/]}}

[2017-06-20T22:55:23,805][INFO ][logstash.outputs.elasticsearch] Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://192.168.180.23:9200/, :path=>"/"}
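The health check above is an HTTP request against the root path (`:path=>"/"`) of the Elasticsearch node at `http://192.168.180.23:9200/`. To check the same node by hand, a rough Python equivalent might look like the sketch below. `healthcheck_url` and `es_is_up` are hypothetical helper names, and sending a HEAD request and treating any 2xx status as healthy are assumptions for illustration, not a description of what the output plugin does internally.

```python
import urllib.error
import urllib.request
from urllib.parse import urljoin


def healthcheck_url(base_url: str, path: str = "/") -> str:
    """Combine the node URL and the health-check path (hypothetical helper)."""
    return urljoin(base_url, path)


def es_is_up(base_url: str, path: str = "/", timeout: float = 2.0) -> bool:
    """Return True if the node answers the request with a 2xx status.

    Assumption: a HEAD request is enough to prove the node is reachable.
    """
    req = urllib.request.Request(healthcheck_url(base_url, path), method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or a 4xx/5xx (HTTPError)
        return False
```

For example, `es_is_up("http://192.168.180.23:9200/")` returns `True` only when that node is reachable from the machine running the script.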

[2017-06-20T22:55:23,901][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>

[2017-06-20T22:55:23,909][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2017-06-20T22:55:23,947][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword"}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false},

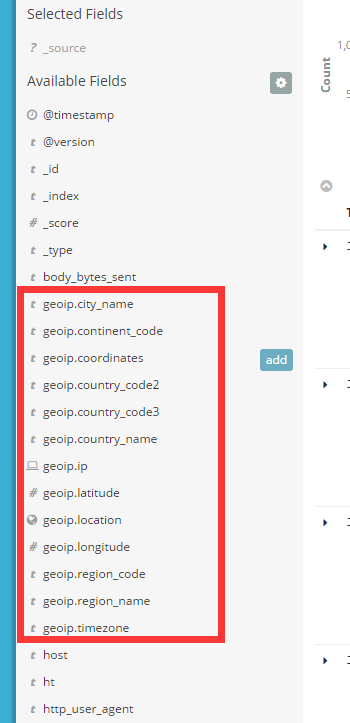

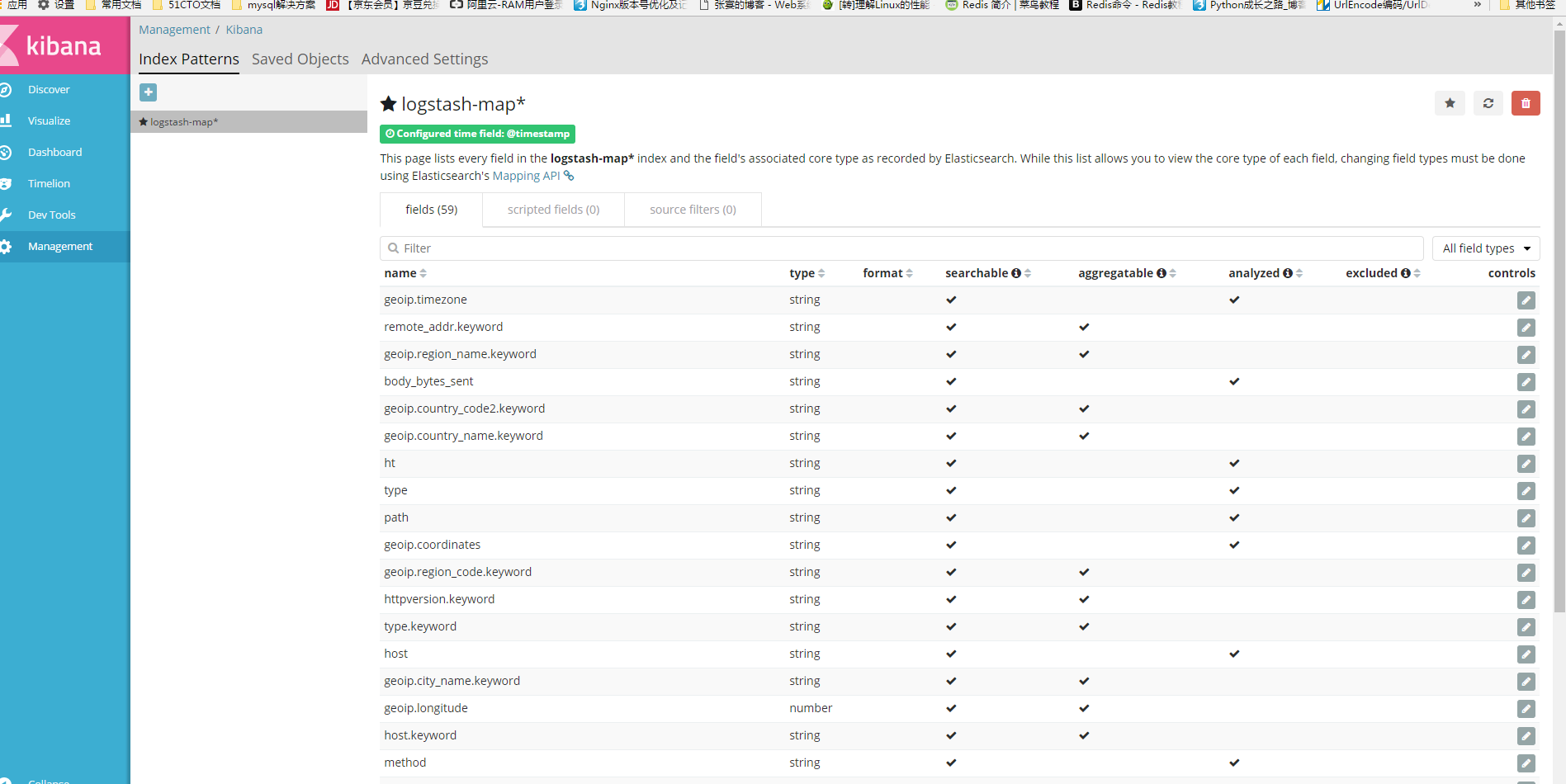

"geoip"

=>{

"dynamic"

=>

true

,

"properties"

=>{

"ip"

=>{

"type"

=>

"ip"

},

"location"

=>{

"type"

=>

"geo_point"

},

"latitude"

=>{

"type"

=>

"half_float"

},

"longitude"

=>{

"type"

=>

"half_float"

}}}}}}}}
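The `dynamic_templates` in the installed template decide how new string fields are mapped. Elasticsearch checks them in order and applies the first one that matches, so `message` becomes a plain `text` field, while every other string field becomes `text` with a `.keyword` sub-field. The sketch below models that first-match rule in Python. `resolve_dynamic_mapping` is a hypothetical helper, and `fnmatchcase` stands in for Elasticsearch's simple `*` wildcard (it also understands `?` and `[...]`, which Elasticsearch's `match` does not).

```python
from fnmatch import fnmatchcase

# The "dynamic_templates" array from the log above, rewritten in Python syntax.
DYNAMIC_TEMPLATES = [
    {"message_field": {"path_match": "message", "match_mapping_type": "string",
                       "mapping": {"type": "text", "norms": False}}},
    {"string_fields": {"match": "*", "match_mapping_type": "string",
                       "mapping": {"type": "text", "norms": False,
                                   "fields": {"keyword": {"type": "keyword"}}}}},
]


def resolve_dynamic_mapping(path, mapping_type):
    """Return (template_name, mapping) for a new field, or None if nothing matches."""
    leaf = path.rsplit(".", 1)[-1]  # "match" tests the field name, "path_match" the full path
    for entry in DYNAMIC_TEMPLATES:
        (name, tpl), = entry.items()  # each entry holds exactly one named template
        if tpl.get("match_mapping_type", "*") not in ("*", mapping_type):
            continue
        if "path_match" in tpl and not fnmatchcase(path, tpl["path_match"]):
            continue
        if "match" in tpl and not fnmatchcase(leaf, tpl["match"]):
            continue
        return name, tpl["mapping"]  # first matching template wins
    return None


print(resolve_dynamic_mapping("message", "string")[0])      # message_field
print(resolve_dynamic_mapping("host", "string")[1]["fields"])  # {'keyword': {'type': 'keyword'}}
```

Ordering matters here: because `message_field` comes first, `message` never picks up the `.keyword` sub-field that the catch-all `string_fields` template would otherwise add.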

[2017-06-20T22:55:23,955][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[

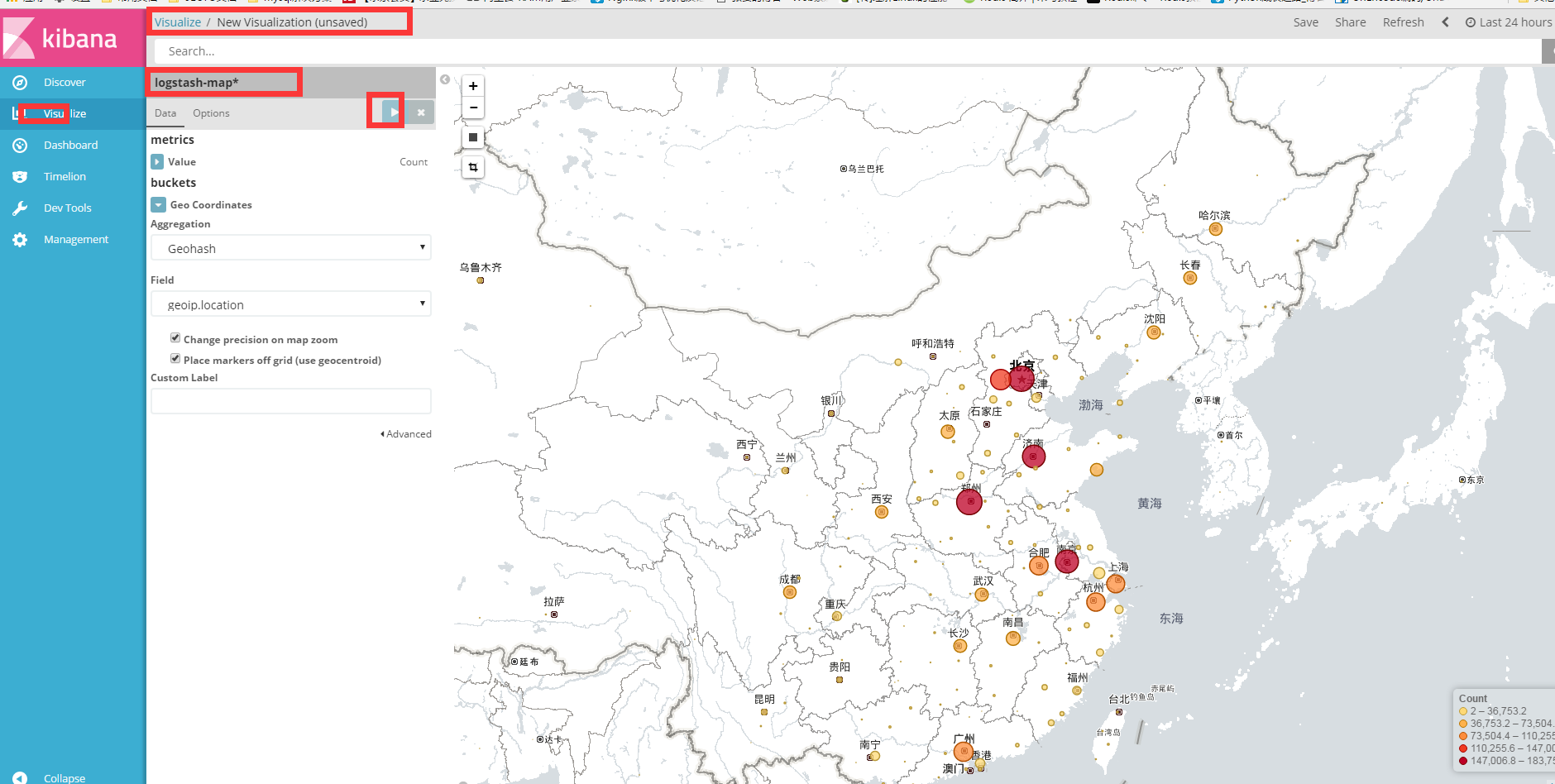

[2017-06-20T22:55:24,065][INFO ][logstash.filters.geoip ] Using geoip database {:path=>"/usr/local/logstash/config/GeoLite2-City.mmdb"}

[2017-06-20T22:55:24,094][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}
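The numbers in the "Starting pipeline" line are related: `pipeline.max_inflight` is `pipeline.workers × pipeline.batch.size`, so 4 workers each holding a batch of up to 125 events gives at most 4 × 125 = 500 events in memory at once. The helper below is a hypothetical illustration of that arithmetic. Its defaults (batch size 125, batch delay 5 ms, one worker per CPU core) are assumed to be the Logstash 5.x defaults, which would also explain the 4 workers if this host has 4 cores.

```python
import os


def pipeline_settings(workers=None, batch_size=125, batch_delay_ms=5):
    """Rebuild the values logged in the 'Starting pipeline' line (hypothetical helper)."""
    if workers is None:
        # Assumed default: one pipeline worker per CPU core
        workers = os.cpu_count() or 1
    return {
        "pipeline.workers": workers,
        "pipeline.batch.size": batch_size,
        "pipeline.batch.delay": batch_delay_ms,
        # Worst case: every worker holds a full batch at the same time
        "pipeline.max_inflight": workers * batch_size,
    }


print(pipeline_settings(workers=4)["pipeline.max_inflight"])  # 500
```

Raising either `pipeline.workers` or `pipeline.batch.size` therefore raises the in-flight ceiling, and with it the heap Logstash needs.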

[2017-06-20T22:55:24,275][INFO ][logstash.pipeline ] Pipeline main started

[2017-06-20T22:55:24,369][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
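Every entry above (except the initial "Sending Logstash's logs to ..." banner) follows the same shape: `[timestamp][LEVEL ][logger ] message`. When scanning a long startup log for `WARN` lines like the "Restored connection" entry, a small parser helps. The sketch below assumes that line shape, inferred only from the output shown here; `parse_log_line` is a hypothetical name.

```python
import re
from datetime import datetime

# Assumed shape: [2017-06-20T22:55:24,369][INFO ][logstash.agent ] message...
LOG_LINE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\[(?P<level>[A-Z]+)\s*\]\[(?P<logger>[^\]]+?)\s*\]\s*(?P<msg>.*)$"
)


def parse_log_line(line):
    """Split one Logstash log line into its parts; return None if it has no prefix."""
    m = LOG_LINE.match(line)
    if m is None:
        return None  # e.g. the "Sending Logstash's logs to ..." banner
    # The timestamp uses a comma before the milliseconds
    ts = datetime.strptime(m["ts"].replace(",", "."), "%Y-%m-%dT%H:%M:%S.%f")
    return {"time": ts, "level": m["level"], "logger": m["logger"], "message": m["msg"]}


entry = parse_log_line(
    "[2017-06-20T22:55:24,275][INFO ][logstash.pipeline ] Pipeline main started"
)
print(entry["logger"], "|", entry["message"])  # logstash.pipeline | Pipeline main started
```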