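I. Background:

The company's CDH5 cluster was already deployed, and I needed to add the Hive component through the web UI. Adding Hive this way typically produces two errors.

The first involves the driver package for Hive's metastore database, /usr/share/java/mysql-connector-java.jar: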

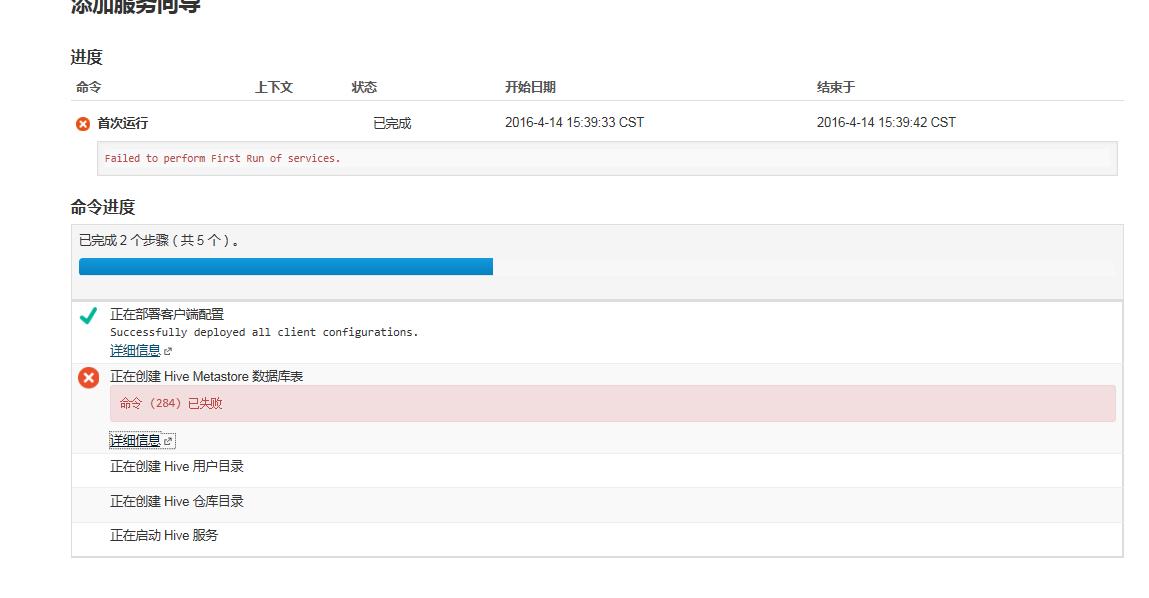
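A common way to clear the driver error is to place a MySQL JDBC driver at the path named above. The following is only a minimal sketch: the function name install_mysql_connector and the versioned source file name in the usage comment are hypothetical, and only the destination path /usr/share/java/mysql-connector-java.jar comes from the text.

```shell
# install_mysql_connector SRC_JAR [DEST_DIR]
# Copies a MySQL JDBC driver jar to DEST_DIR under the version-less name
# mysql-connector-java.jar. DEST_DIR defaults to /usr/share/java.
install_mysql_connector() {
    local src="$1"
    local dest_dir="${2:-/usr/share/java}"

    if [ ! -f "$src" ]; then
        echo "driver jar not found: $src" >&2
        return 1
    fi

    mkdir -p "$dest_dir" || return 1
    cp -f "$src" "$dest_dir/mysql-connector-java.jar"
}

# Usage (hypothetical source file name, run as root on the Hive metastore host):
#   install_mysql_connector /tmp/mysql-connector-java-5.1.x-bin.jar
```

Copying under a version-less name means the path stays the same when the driver is upgraded later.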

The second error is the one detailed in the next section.


II. Error details:

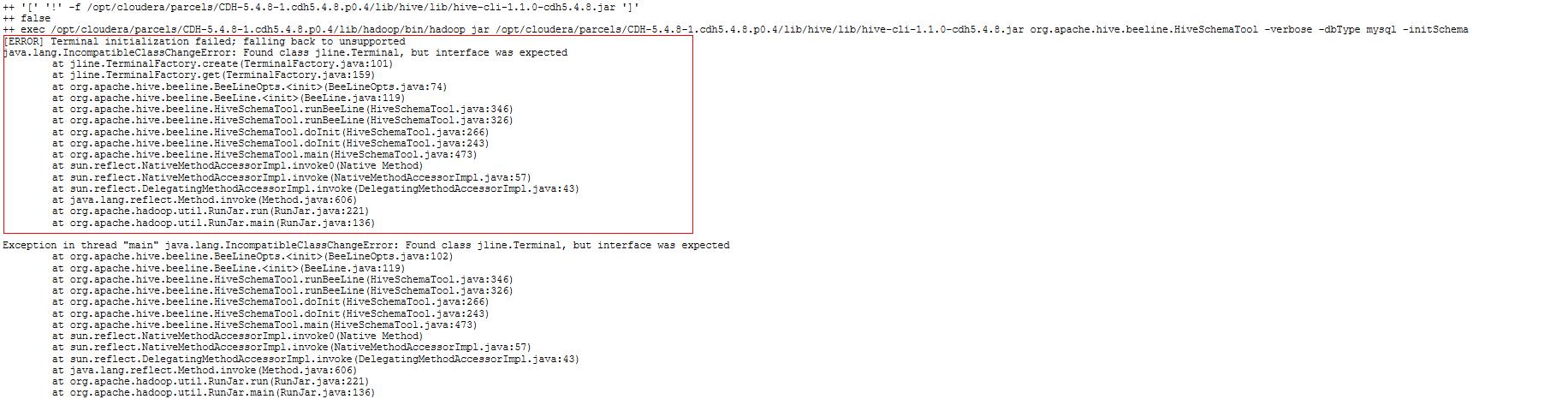

++ exec /opt/cloudera/parcels/CDH/lib/hadoop/bin/hadoop jar /opt/cloudera/parcels/CDH/lib/hive/lib/hive-cli-1.1.0-cdh5.4.8.jar org.apache.hive.beeline.HiveSchemaTool -verbose -dbType mysql -initSchema

[ERROR] Terminal initialization failed; falling back to unsupported

java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

at jline.TerminalFactory.create(TerminalFactory.java:101)

at jline.TerminalFactory.get(TerminalFactory.java:159)

at org.apache.hive.beeline.BeeLineOpts.<init>(BeeLineOpts.java:74)

at org.apache.hive.beeline.BeeLine.<init>(BeeLine.java:119)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:346)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:326)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:266)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:243)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

III. Error analysis:

1. ++ exec /opt/cloudera/parcels/CDH/lib/hadoop/bin/hadoop jar /opt/cloudera/parcels/CDH/lib/hive/lib/hive-cli-1.1.0-cdh5.4.8.jar org.apache.hive.beeline.HiveSchemaTool -verbose -dbType mysql -initSchema

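This line shows that the Hive metastore database is configured as MySQL and that Hive's schema (the tables that store the metadata, and so on) is being initialized. It is only the command being executed, so it does not help locate the problem.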

2.[ERROR] Terminal initialization failed; falling back to unsupported

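This line says that terminal initialization failed and that jline fell back to an unsupported terminal. It does not point to a clear cause either, so look at the third line.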

3.java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

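The third line says that jline.Terminal was found as a class where an interface was expected. In jline 2.x, jline.Terminal is an interface, while in the old 0.9.x line it is a class, so an old jline jar is most likely being loaded ahead of the version Hive was built against. The initial suspicion is therefore that a jline jar on the classpath is too old.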
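One way to check this suspicion is to list every jline jar that the Hadoop classpath resolves to. The sketch below assumes the classpath is passed in as a colon-separated string, for example the output of the hadoop classpath command; the function name jline_on_classpath is made up for illustration.

```shell
# jline_on_classpath CLASSPATH
# Prints every existing jline jar that a colon-separated Java classpath
# resolves to, in classpath-entry order.
jline_on_classpath() {
    local old_ifs="$IFS" entry jar

    # Split the classpath on ':' without letting the shell expand "dir/*".
    IFS=':'
    set -f
    set -- $1
    set +f
    IFS="$old_ifs"

    for entry in "$@"; do
        case "$entry" in
            */\*)
                # Java expands a "dir/*" entry to the .jar files in dir.
                for jar in "${entry%\*}"jline*.jar; do
                    [ -e "$jar" ] && echo "$jar"
                done
                ;;
            */jline*.jar|jline*.jar)
                [ -e "$entry" ] && echo "$entry"
                ;;
        esac
    done
    return 0
}

# Usage on a cluster node:
#   jline_on_classpath "$(hadoop classpath)"
```

If the output contains more than one jline version, the JVM may load jline.Terminal from the older jar, which would match the error above.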

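IV. Solution:

The hadoop-hdfs directory contains an old version of jline; copy the newer jline JAR that Hive uses into hadoop-hdfs.

1. Go to the hive/lib directory and check the jline version and where the file is located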

[root@alish1-xxx-01 ~]# cd /opt/cloudera/parcels/CDH-5.4.8-1.cdh5.4.8.p0.4/lib/hive/lib

[root@alish1-xxx-01 lib]# ll jline*

lrwxrwxrwx 1 root root 28 Apr 9 17:05 jline-2.12.jar -> ../../../jars/jline-2.12.jar

2. Go to the CDH cluster's jars directory and list the jline jars. There are four versions, and the newest is 2.12

[root@alish1-xxx-01 lib]# cd ../../../jars/

[root@alish1-xxx-01 jars]# ll jline*

-rwxr-xr-x 1 root root 87249 Oct 16 01:36 jline-0.9.94.jar

-rw-r--r-- 1 root root 164623 Oct 16 01:36 jline-2.10.4.jar

-rw-r--r-- 1 root root 206202 Oct 16 01:33 jline-2.11.jar

-rw-r--r-- 1 root root 213854 Oct 16 01:32 jline-2.12.jar

3. Use find to see which components ship a jline jar

[root@alish1-xxx-01 ~]# find / -name 'jline*'

/opt/cloudera/parcels/CDH/lib/crunch/lib/jline-2.10.4.jar

/opt/cloudera/parcels/CDH/lib/llama/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/flume-ng/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/libtools/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-mr1/lib/pig/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-mr1/lib/hive/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-mr1/lib/hive2/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-mr1/lib/sqoop/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-mr1/lib/hcatalog/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/libserver/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-yarn/lib/pig/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-yarn/lib/hive/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-yarn/lib/hive2/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-yarn/lib/sqoop/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/oozie/oozie-sharelib-yarn/lib/hcatalog/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/hadoop-0.20-mapreduce/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/impala/lib/jline-2.12.jar

/opt/cloudera/parcels/CDH/lib/sentry/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/hive/lib/jline-2.12.jar

/opt/cloudera/parcels/CDH/lib/hadoop-httpfs/webapps/webhdfs/WEB-INF/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/whirr/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/hadoop-hdfs/lib/jline-0.9.94.jar

/opt/cloudera/parcels/CDH/lib/sqoop2/client-lib/jline-0.9.94.jar

/opt/cloudera/parcels/CDH/lib/hadoop-kms/webapps/kms/WEB-INF/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/zookeeper/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib/jline-2.11.jar

/opt/cloudera/parcels/CDH/jars/jline-2.12.jar

/opt/cloudera/parcels/CDH/jars/jline-2.11.jar

/opt/cloudera/parcels/CDH/jars/jline-2.10.4.jar

/opt/cloudera/parcels/CDH/jars/jline-0.9.94.jar
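A long listing like this is easier to read when grouped by version. The following sketch reads jar paths on standard input and prints one line per jline version with the number of copies found; the function name summarize_jline_versions is hypothetical, and the version is taken from the file name only.

```shell
# summarize_jline_versions
# Reads jar paths on stdin and prints "<version> <count>" for each
# jline-<version>.jar file name seen. Other lines are ignored.
summarize_jline_versions() {
    sed -n 's|.*/jline-\([0-9][0-9.]*\)\.jar$|\1|p' \
        | sort \
        | uniq -c \
        | awk '{print $2, $1}'
}

# Usage:
#   find / -name 'jline*.jar' 2>/dev/null | summarize_jline_versions
```

Any version that appears well below the others is a candidate for the conflict.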

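###Looking through the list, /opt/cloudera/parcels/CDH/lib/hadoop-hdfs/lib/jline-0.9.94.jar stands out: this jar is only version 0.9.94, which is too old, so it needs to be replaced with the newest version, 2.12

(The data for the tables that Hive creates ends up on the HDFS file system, and the schema tool is launched through hadoop jar, so the jars under hadoop-hdfs/lib are presumably on its classpath. Replacing the jar there should be enough.)

###I have not installed the sqoop2 component, so its copy does not need to be replaced.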

4. Copy the newest jline jar into place and back up the old one

[root@alish1-xxx-01 ~]# cp /opt/cloudera/parcels/CDH/jars/jline-2.12.jar /opt/cloudera/parcels/CDH/lib/hadoop-hdfs/lib/

[root@alish1-xxx-01 ~]# cp /opt/cloudera/parcels/CDH/lib/hadoop-hdfs/lib/jline-0.9.94.jar /opt/cloudera/parcels/CDH/lib/hadoop-hdfs/lib/jline-0.9.94.jar.bak

[root@alish1-xxx-01 ~]#

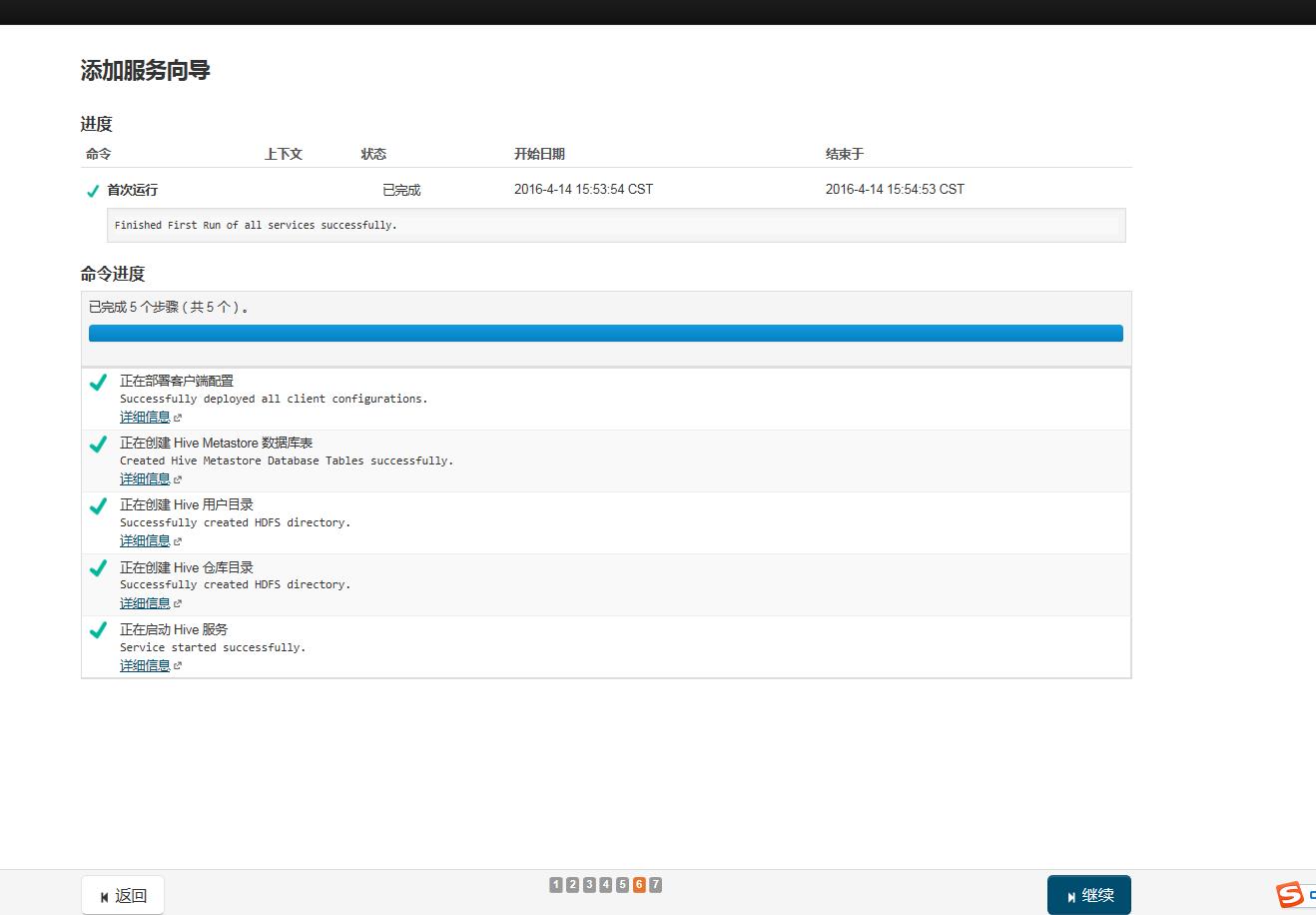
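Note that backing up with cp leaves the original jline-0.9.94.jar in the directory next to the new jar. A variant of this step, sketched below, renames the old jar instead so that only the new version keeps a .jar extension; Java's wildcard classpath entries pick up only files ending in .jar. This is not the exact procedure used above, and the function name swap_jline is hypothetical.

```shell
# swap_jline LIB_DIR NEW_JAR
# Renames every other jline-*.jar in LIB_DIR to <name>.bak, then copies
# NEW_JAR into LIB_DIR unless a jar with that name is already there.
swap_jline() {
    local lib_dir="$1" new_jar="$2" old new_name

    [ -d "$lib_dir" ] && [ -f "$new_jar" ] || return 1
    new_name=$(basename "$new_jar")

    for old in "$lib_dir"/jline-*.jar; do
        [ -e "$old" ] || continue
        # Keep the target version if it is already in place.
        [ "$(basename "$old")" = "$new_name" ] && continue
        mv "$old" "$old.bak" || return 1
    done

    [ -e "$lib_dir/$new_name" ] || cp "$new_jar" "$lib_dir/"
}

# Usage with the paths from this article:
#   swap_jline /opt/cloudera/parcels/CDH/lib/hadoop-hdfs/lib \
#              /opt/cloudera/parcels/CDH/jars/jline-2.12.jar
```

Keeping the .bak file makes it easy to restore the original jar if another component turns out to depend on it.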

V. Verification:

Click the "Retry" button in the web installation wizard, and the step now completes successfully.
