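In operations work, we often run into the following situations:

① Developers cannot log in to production servers to view detailed logs

② Every system keeps its own logs, so log data is scattered and hard to find

③ Log volume is large, so queries are slow or the data is not close enough to real time

④ A single call touches several systems, making it hard to locate the relevant entries across their logs quickly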

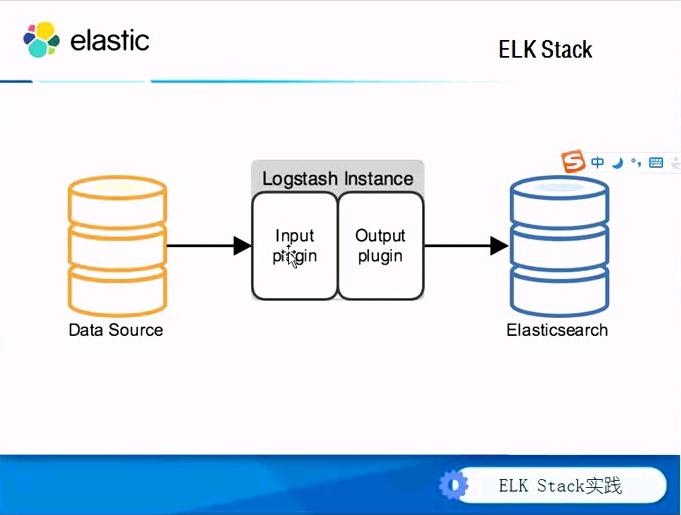

We can use the popular ELK Stack to meet these needs.

Components of the ELK Stack:

![4a8833b7bab9d46d04ae8847d58a8be1.png wKiom1c9pvCQIqpsAAFRZMM2Yr4163.png]()

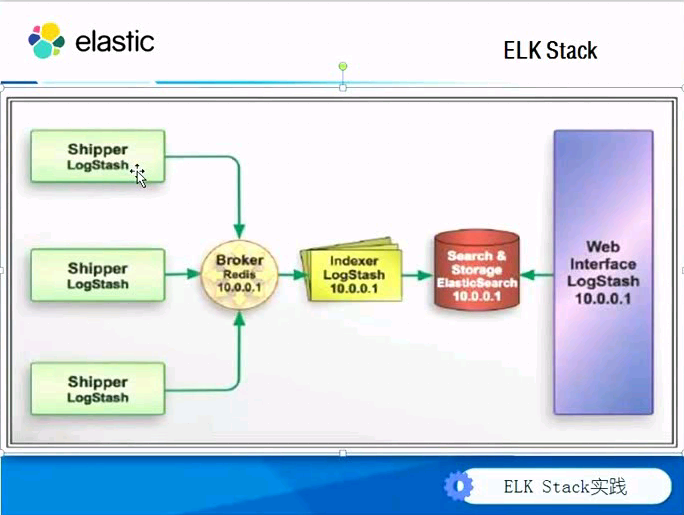

The overall flow is shown below:

![07f7e52cd140e795de51e8734756e1e4.png wKiom1c9pyHQw78qAAIfEXuRMnA381.png]()

System environment:

```
[root@node01 ~]
```

| IP address | Programs | Role | Notes |
|---|---|---|---|
| 10.0.0.41 | elasticsearch | node01 | |
| 10.0.0.42 | elasticsearch | node02 | |
| 10.0.0.43 | elasticsearch | node03 | |
| 10.0.0.44 | redis, kibana | kibana | |
| 10.0.0.9 | nginx, logstash | web01 | |
| 10.0.0.10 | logstash | logstash | |

Hands-on:

① Download the installation packages, install the Java environment, and configure the environment variables:

```
[root@node01 tools]
```

Install the JDK (do the same on every server in this lab; node01 is shown as the example):

```
[root@node01 tools]
```

② Install and configure elasticsearch (do the same on node01, node02, and node03):

```
[root@node01 tools]
```

Edit the configuration file as follows:

```
[root@node01 config]
```

Create the corresponding directories:

```
[root@node01 elasticsearch]
```

③ Start the elasticsearch service:

```
[root@node01 elasticsearch]
```

To run elasticsearch in the background, just add -d:

```
[root@node01 elasticsearch]
```

After startup, check the listening ports:

```
[root@node01 elasticsearch]
```
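You can run the same check from a script. The minimal Python sketch below (standard library only) tests whether TCP ports accept connections. It assumes the Elasticsearch defaults listed in the configuration notes at the end of this article: 9200 for HTTP and 9300 for node-to-node transport. The function names are hypothetical.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_es_ports(host: str) -> dict:
    """Check the default Elasticsearch ports: 9200 (HTTP) and 9300 (transport)."""
    return {port: port_is_open(host, port) for port in (9200, 9300)}
```

For example, `check_es_ports("10.0.0.41")` should report both ports as open once node01 has started.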

Query the node to view its status information:

```
[root@node01 elasticsearch]
```
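You can also read the status programmatically. This minimal sketch assumes node01's address from the environment table and calls the cluster health endpoint `GET /_cluster/health`, whose response includes `cluster_name`, `status` (green, yellow, or red) and `number_of_nodes`. The helper names are hypothetical, and only the summary function can be exercised without a running cluster.

```python
import json
import urllib.request


def cluster_health(base_url: str = "http://10.0.0.41:9200", timeout: float = 5.0) -> dict:
    """GET <base_url>/_cluster/health and return the decoded JSON body."""
    with urllib.request.urlopen(base_url + "/_cluster/health", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize(health: dict) -> str:
    """One-line summary of a _cluster/health response."""
    return (f"cluster={health['cluster_name']} "
            f"status={health['status']} "
            f"nodes={health['number_of_nodes']}")
```

Against a live node: `print(summarize(cluster_health()))`.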

④ Script for managing the elasticsearch service (optional)

```
[root@node01 elasticsearch]
```

As prompted, install it as a system startup service:

```
[root@node01 elasticsearch]
```

Start elasticsearch with the service command:

```
[root@node01 logs]
```

If startup fails, the output looks like this:

```
[root@node01 service]
```

Troubleshoot using the startup log:

```
[root@node01 logs]
```

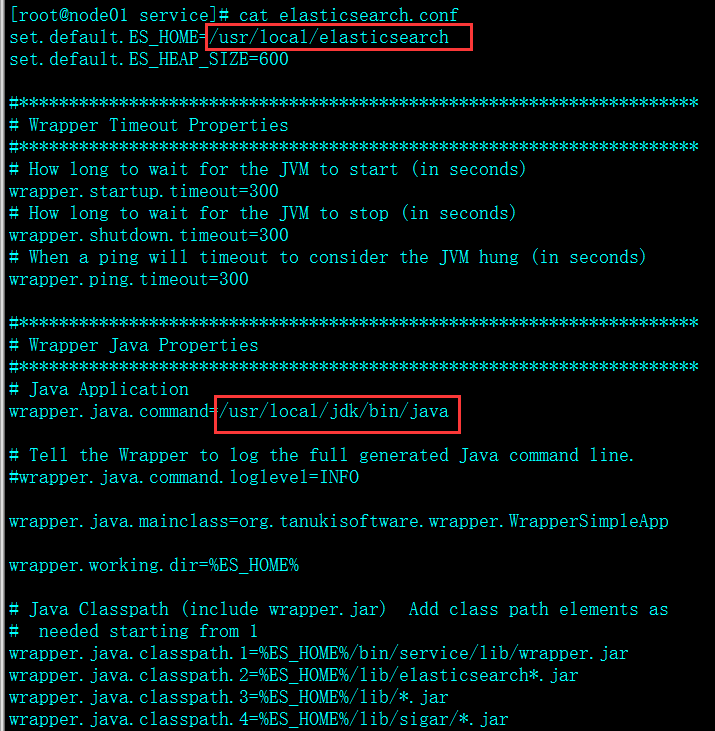

Following the hints above, change the corresponding parameters to match your environment (the parts marked in red), then restart:

```
[root@node01 service]
```

![e056fe395846791196a80b16bdebcb15.png wKioL1c9qEnQDz6NAADA3kZaySI001.png]()

```
[root@node01 logs]
```

Check the startup status:

```
[root@node01 service]
```

⑤ Java API: node client and transport client

⑥ RESTful API

⑦ Clients for JavaScript, .NET, PHP, Perl, Python, and Ruby

Query example:

```
[root@node01 service]
```

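Plugins extend elasticsearch with additional functionality. There are three types of plugins:

1. Java plugins

These contain only JAR files. They must be installed on every node in the cluster, and a restart is required for the plugin to take effect.

2. Site plugins

These contain static web content such as JS, CSS, and HTML, which elasticsearch serves directly; the head plugin is an example. They only need to be installed on one node and do not require a restart. They are accessed through a URL such as http://node-ip:9200/_plugin/plugin_name

3. Mixed plugins

As the name suggests, these contain both of the above.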

Install the elasticsearch plugin Marvel:

```
[root@node01 service]
```

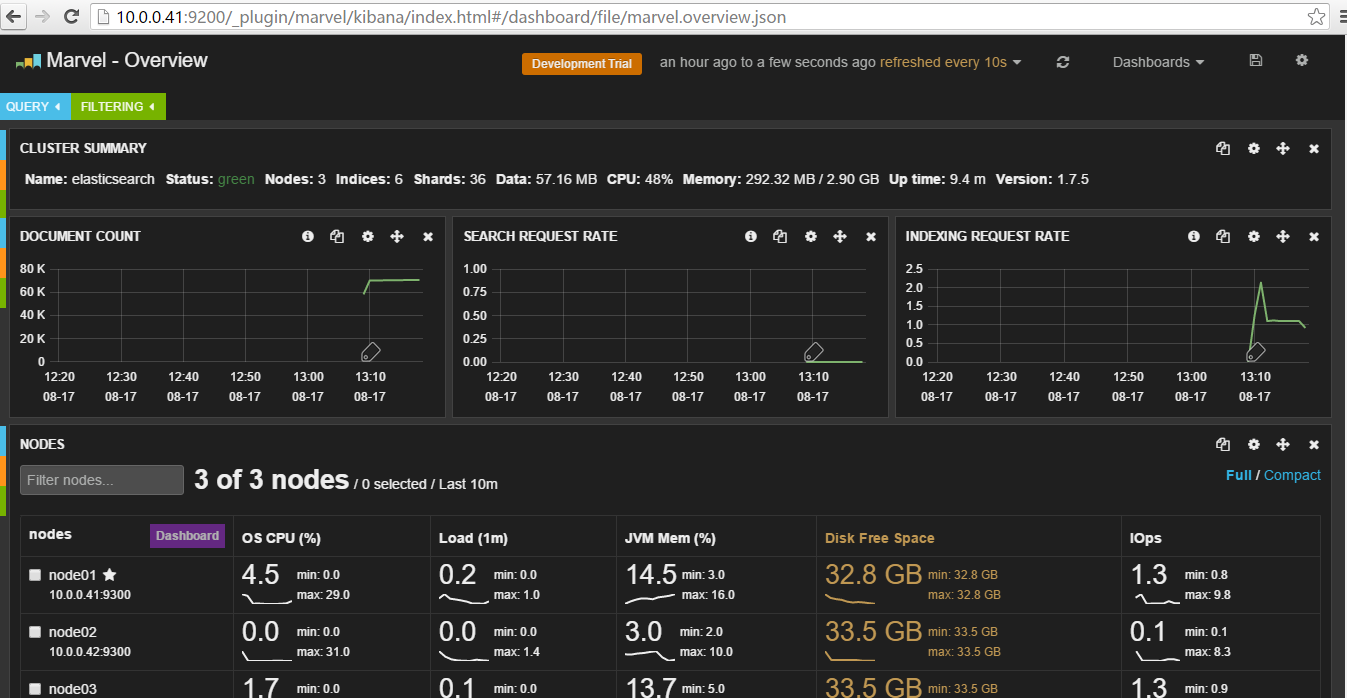

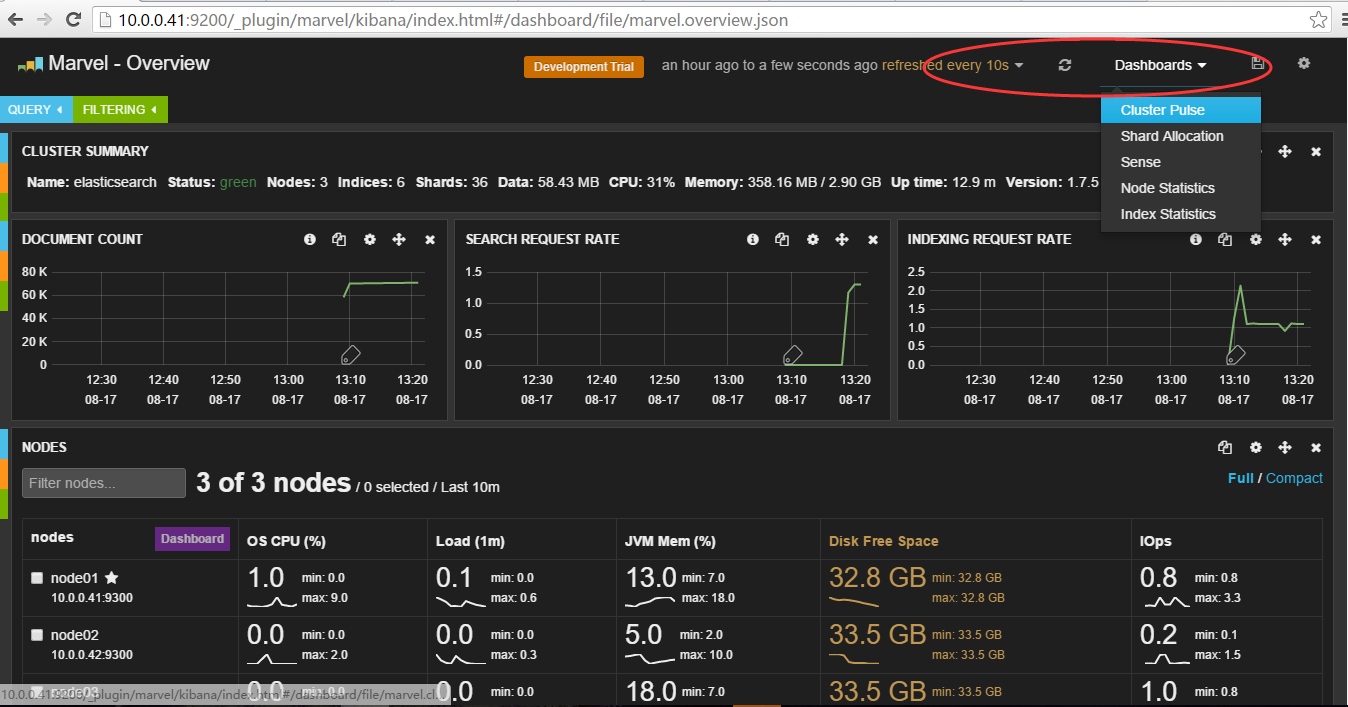

After installation, open the following URL in a browser to view the cluster status:

```
http://10.0.0.41:9200/_plugin/marvel/kibana/index.html
```

![marvel.png wKioL1ez8-7j4cdyAAHKHcpVraY207.png]()

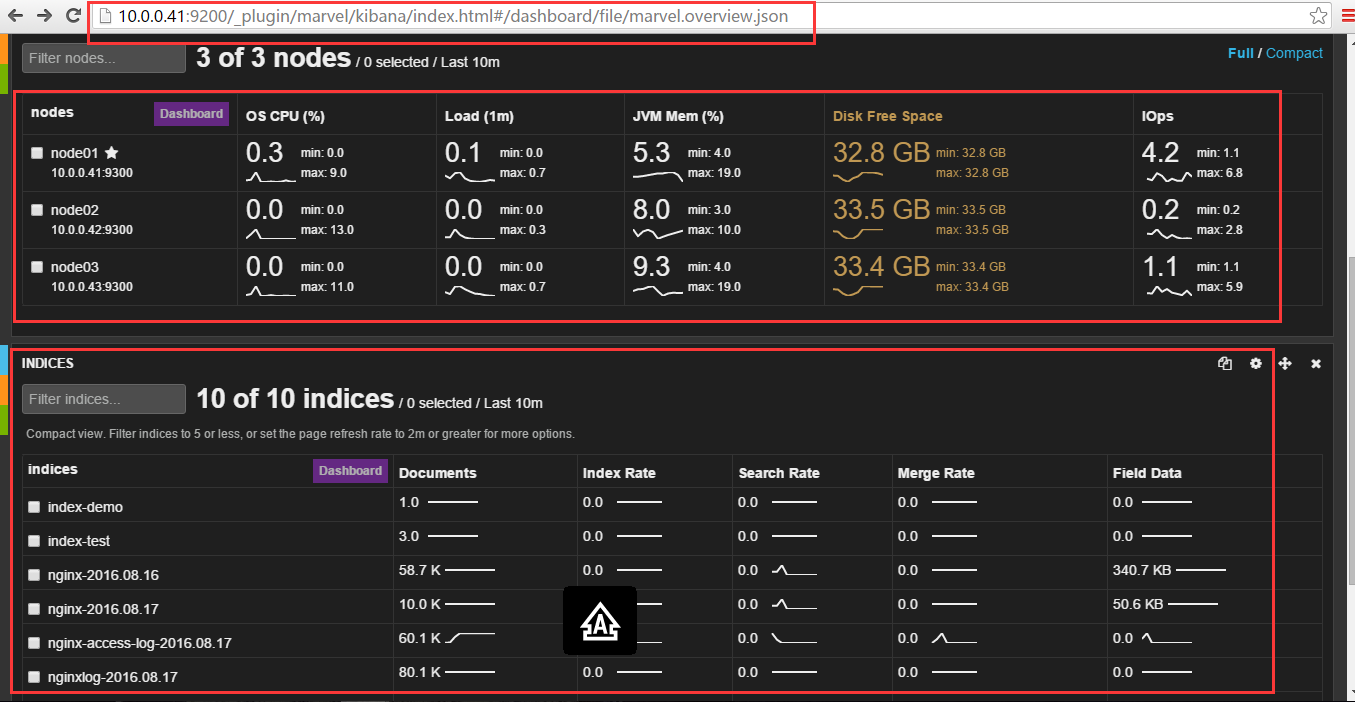

The cluster nodes and indices are shown below:

![集群管理.png wKiom1e0Hc_j8n4gAAHT4ebRkTk091.png]()

Open the Dashboards menu:

![marvel-dashbord01.png wKioL1ez9MKB5-ofAAIW8au2MFE914.png]()

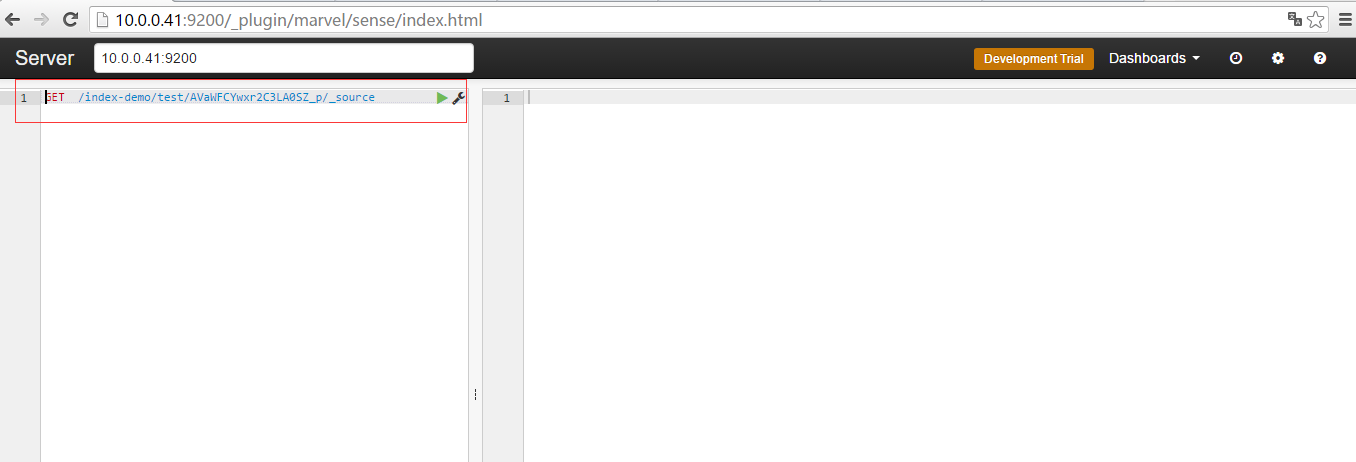

Click Sense to open the following interface:

![GET.png wKioL1ez9S7QaWISAACG-US__Y4548.png]()

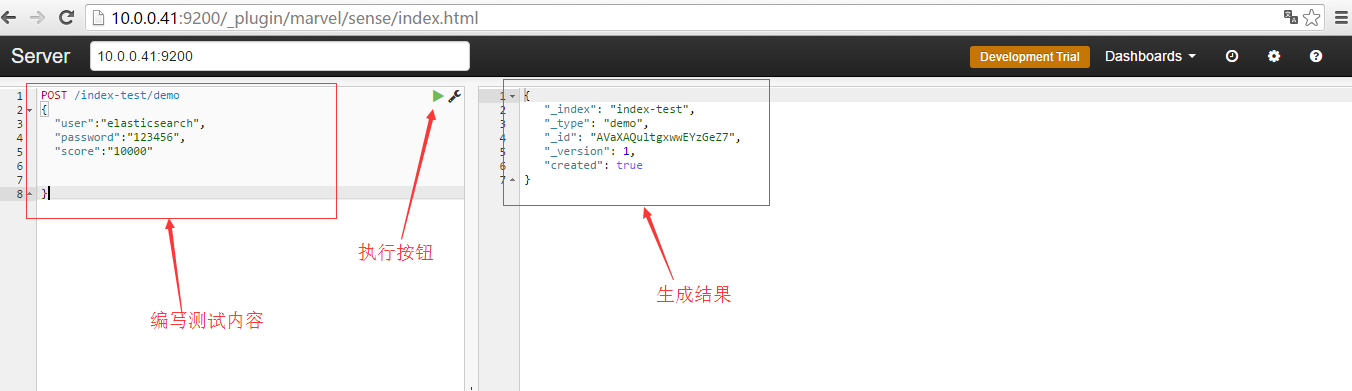

Write some test content:

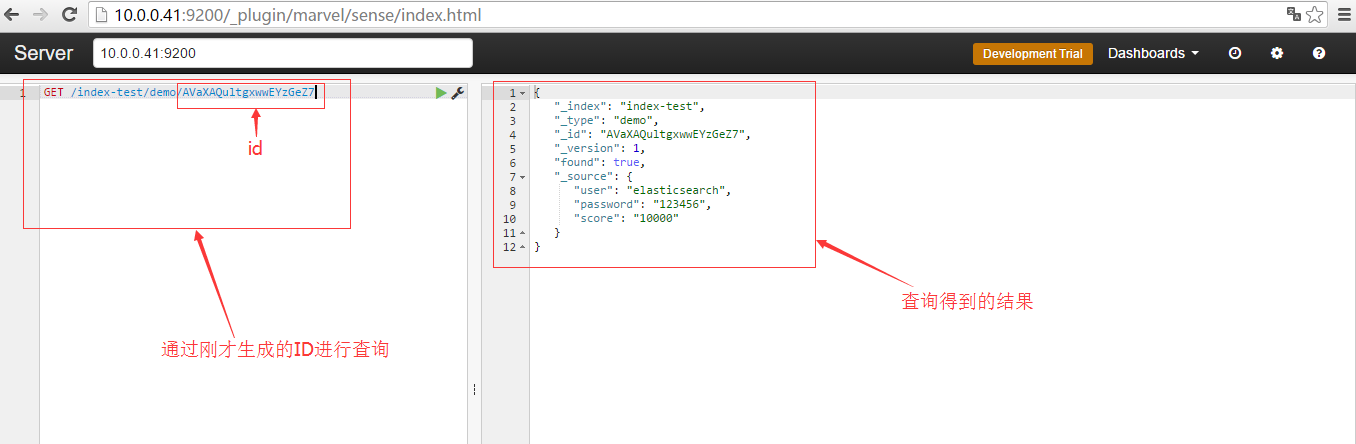

![编写内容.png wKiom1ez-JSTt6a3AADTQ0dmlug512.png]()

Note the id generated on the right, then query by that id on the left.

![id查询结果.png wKioL1ez-UaS18_EAADfwb28-aY082.png]()

To query further, do the following:

![进一步查询.png wKiom1ez-fuhT6D_AACv2aWLkHw837.png]()

⑧ Install the cluster management plugin

```
[root@node01 service]
```

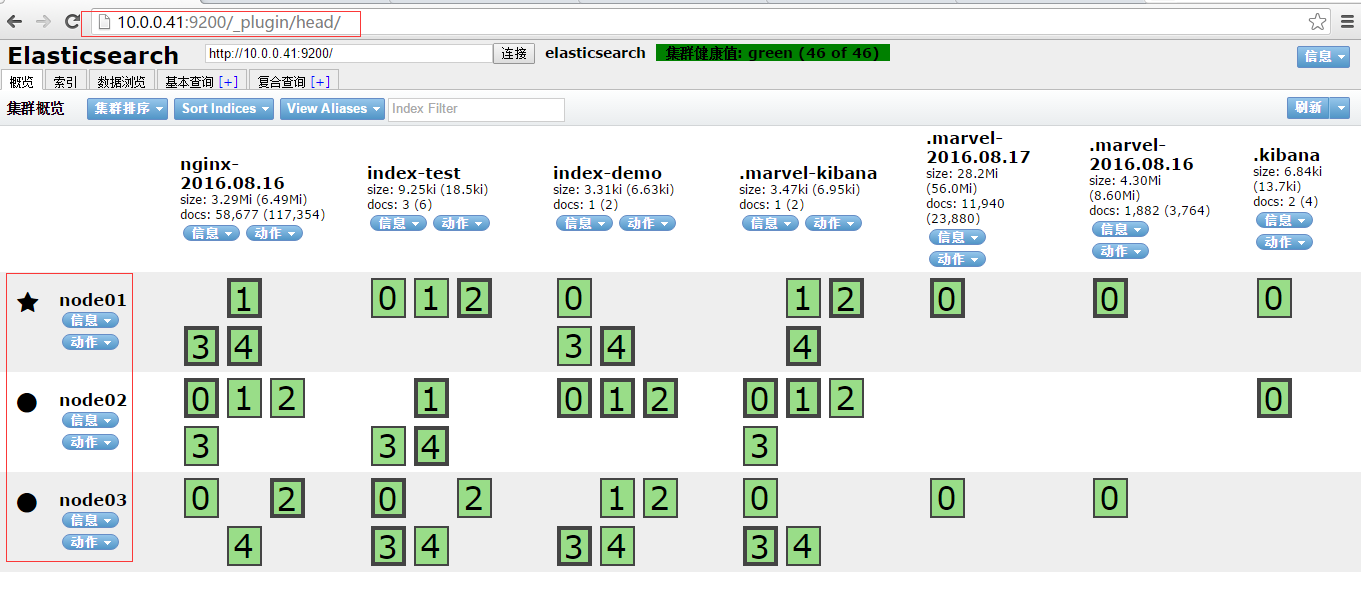

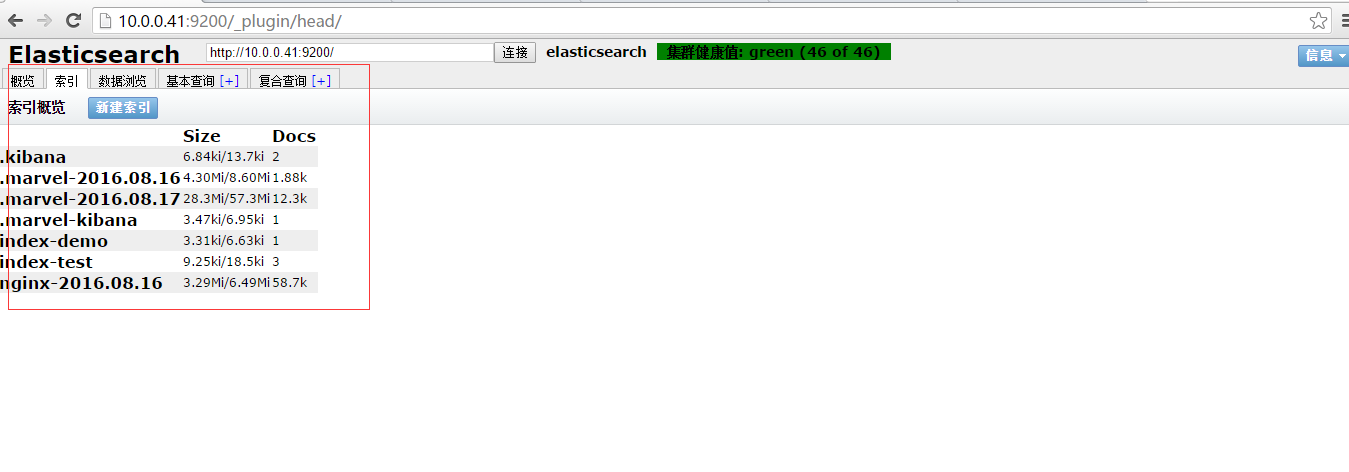

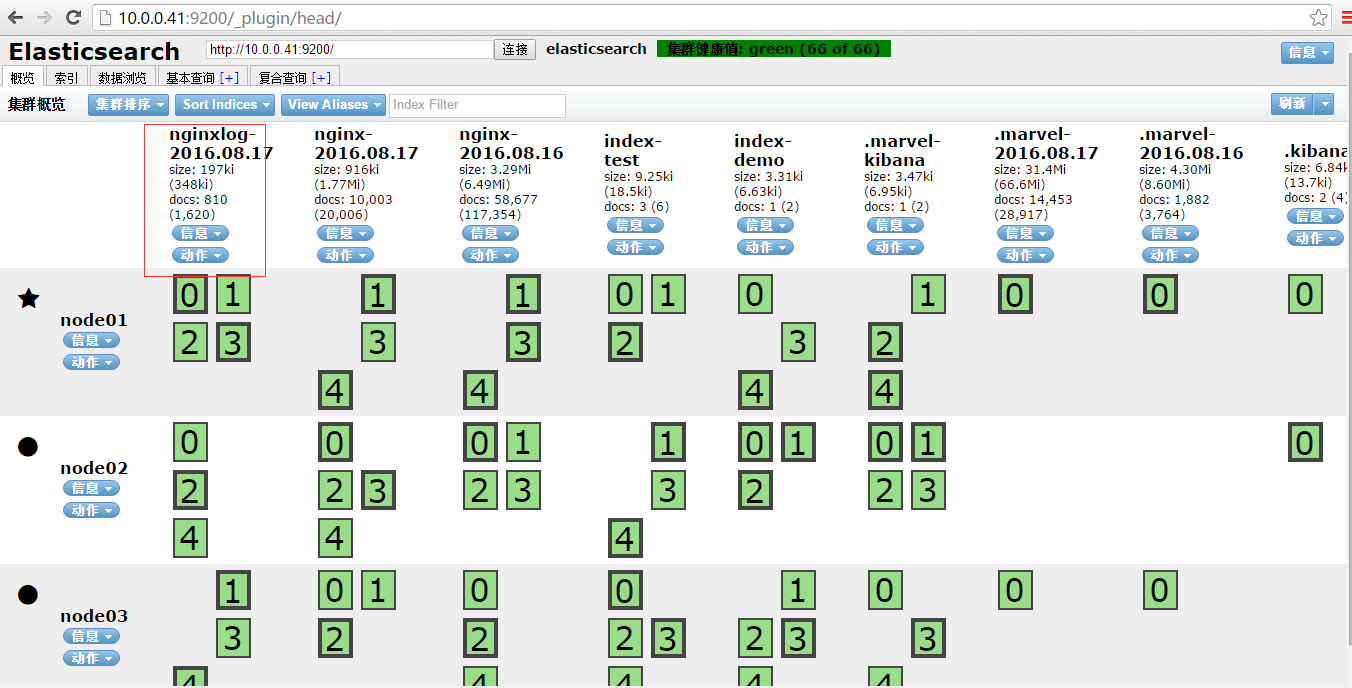

After installation, open in a browser:

http://10.0.0.41:9200/_plugin/head/

![head.png wKiom1ez-kzjPuRqAAFbPQBHs1A955.png]()

To deploy another node, node02, change only node.name: "node02" in the elasticsearch configuration file and keep everything else the same as on node01:

```
[root@node02 tools]
```
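Only node.name changes between nodes, so you can render the per-node files from one template. This is a hypothetical sketch. The cluster name and paths are placeholders, not the values from the author's configuration, which is not shown here.

```python
def node_config(node_name: str, cluster_name: str = "elk-cluster") -> str:
    """Render a minimal elasticsearch.yml in which only node.name varies per node."""
    settings = [
        ("cluster.name", cluster_name),   # must be identical on every node (placeholder)
        ("node.name", f'"{node_name}"'),  # the only per-node difference
        ("path.data", "/data/es-data"),   # placeholder path
        ("path.logs", "/data/es-logs"),   # placeholder path
    ]
    return "".join(f"{key}: {value}\n" for key, value in settings)


configs = {name: node_config(name) for name in ("node01", "node02", "node03")}
```

Keeping cluster.name identical is what lets the three nodes find each other and join the same cluster.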

Install elasticsearch-servicewrapper, a management tool for elasticsearch:

```
[root@node02 elasticsearch]
```

![3cebb499fa906878262e240d0e9aec51.png wKiom1c9qQqxv-MEAACkwT6OhoY905.png]()

Tip: set set.default.ES_HEAP_SIZE to a value smaller than the server's physical memory. It must not equal the actual memory, otherwise startup will fail.
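Below is a small pre-flight check for the heap setting, as a sketch. It parses JVM-style sizes (k, m, g, base 1024). Besides the strict "smaller than physical memory" rule above, it applies the commonly cited Elasticsearch guidance of giving the heap no more than half of RAM. `max_fraction` is that assumption and can be relaxed. `physical_memory_bytes` relies on `os.sysconf` names available on Linux, and all function names are hypothetical.

```python
import os
import re

# JVM size suffixes are binary: 1k = 1024 bytes
_JVM_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_jvm_size(value: str) -> int:
    """Parse a JVM-style size such as '512m' or '2g' into bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*([kmg]?)\s*", value.lower())
    if match is None:
        raise ValueError(f"unrecognised size: {value!r}")
    number, unit = match.groups()
    return int(number) * _JVM_UNITS[unit]


def physical_memory_bytes() -> int:
    """Total physical memory, using sysconf names available on Linux."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def heap_size_ok(heap: str, physical_bytes: int, max_fraction: float = 0.5) -> bool:
    """Heap must be strictly below physical memory and at most max_fraction of it."""
    heap_bytes = parse_jvm_size(heap)
    return heap_bytes < physical_bytes and heap_bytes <= physical_bytes * max_fraction
```

On the server: `heap_size_ok("1g", physical_memory_bytes())`.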

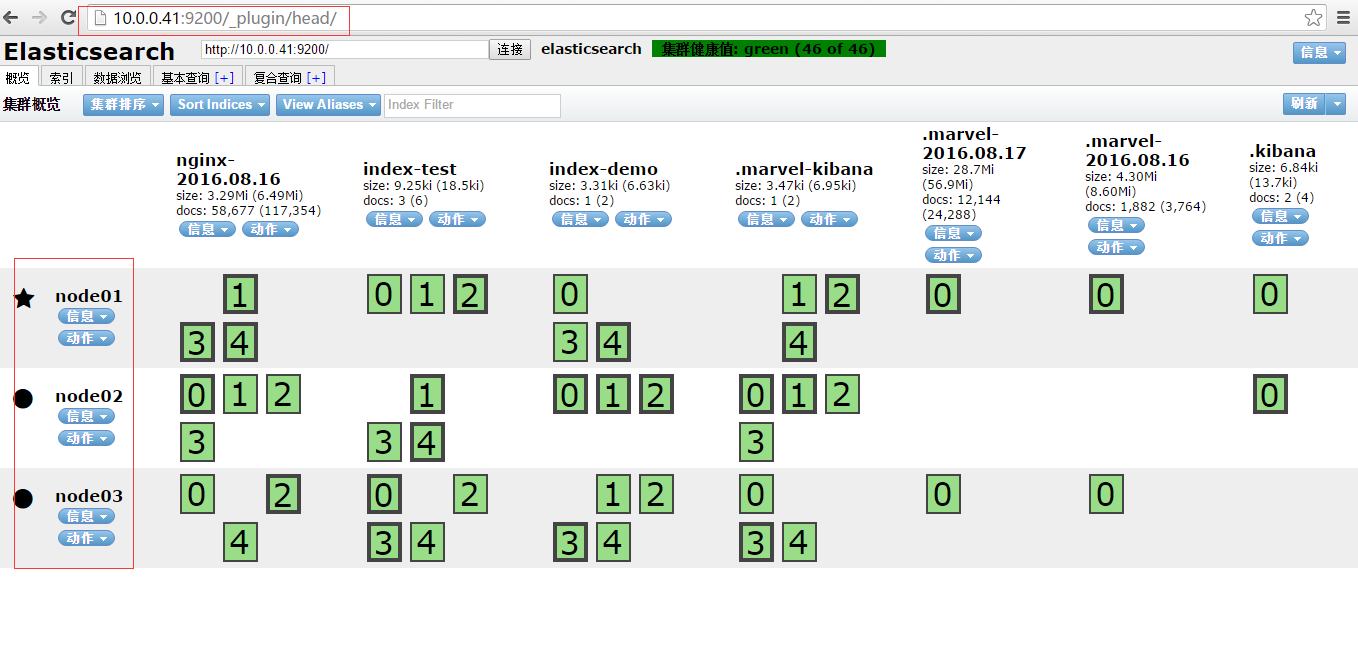

Continuing from the above, refresh http://10.0.0.42:9200/_plugin/head/

![head2.png wKioL1ez-viCpjhpAAFbgll75yA562.png]()

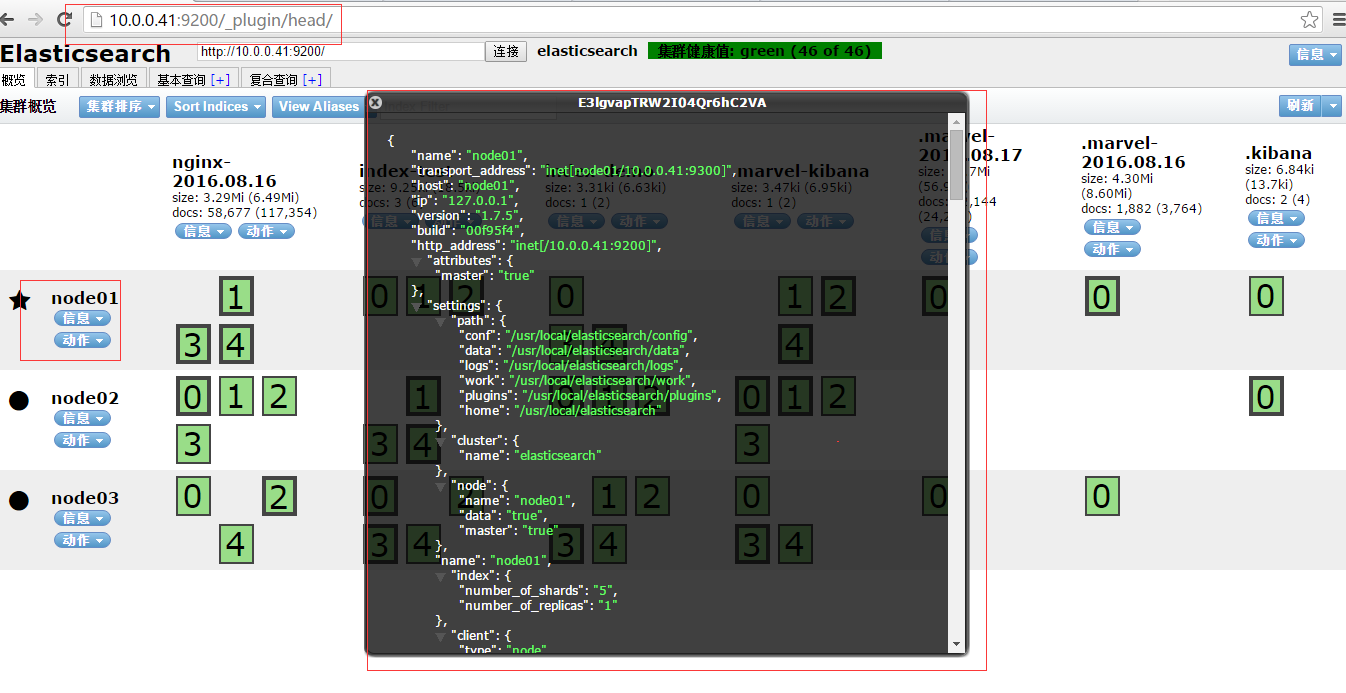

View node01's information:

![0001.png wKioL1ez-zTzD6KcAAJnvIsS5Dk661.png]()

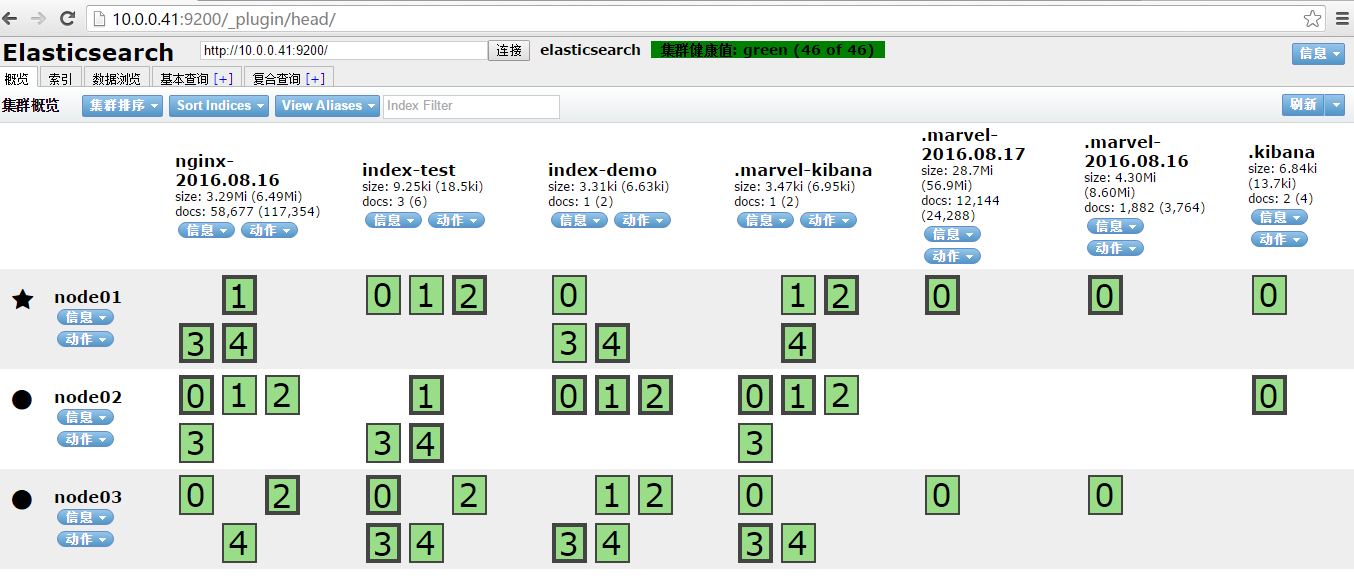

Overview:

![概览.png wKioL1ez-6PiahV-AAFTIIzf1r4942.png]()

Indices:

![索引.png wKioL1ez-7WRuntQAADNsZF0mO8478.png]()

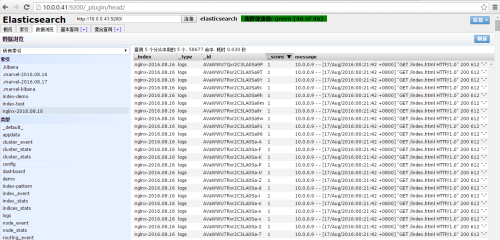

Browse data:

![数据浏览.png wKiom1ez-8yQg5vjAAMLOQz4zQc183.png-wh_50]()

Basic query:

![基本查询.png wKioL1ez_BeygzVPAAGheJ_dHnk433.png]()

Compound query:

![复合查询.png wKioL1ez_Fzz1f1BAAGBrDg5KOk326.png]()

Perform the steps above on each of the three nodes: node01, node02, and node03.

Logstash quick start

The official documentation recommends installing with yum:

Download and install the public signing key:

```
rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch
```

Add the following in your /etc/yum.repos.d/ directory in a file with a .repo suffix, for example logstash.repo

```
[logstash-2.2]
name=Logstash repository for 2.2.x packages
baseurl=http://packages.elastic.co/logstash/2.2/centos
gpgcheck=1
gpgkey=http://packages.elastic.co/GPG-KEY-elasticsearch
enabled=1
```

And your repository is ready for use. You can install it with:

```
yum install logstash
```

Here I install from the release tarball instead and deploy nginx and logstash on web01:

```
[root@web01 tools]
```

Logstash configuration file format:

```
input { stdin { } }
output {
  elasticsearch { hosts => ["localhost:9200"] }
  stdout { codec => rubydebug }
}
```

Configuration file structure:

```
# This is a comment. You should use comments to describe
# parts of your configuration.
input {
  ...
}
filter {
  ...
}
output {
  ...
}
```

Plugin structure:

```
input {
  file {
    path => "/var/log/messages"
    type => "syslog"
  }
  file {
    path => "/var/log/apache/access.log"
    type => "apache"
  }
}
```

Value types for plugin settings:

Array

Example:

```
path => [ "/var/log/messages", "/var/log/*.log" ]
path => "/data/mysql/mysql.log"
```

Boolean

Example:

```
ssl_enable => true
```

Bytes

Examples:

```
my_bytes => "1113"   # 1113 bytes
my_bytes => "10MiB"  # 10485760 bytes
my_bytes => "100kib" # 102400 bytes
my_bytes => "180 mb" # 180000000 bytes
```

Codec

Example:

```
codec => "json"
```

Hash

Example:

```
match => {
  "field1" => "value1"
  "field2" => "value2"
  ...
}
```

Number

Numbers must be valid numeric values (floating point or integer).

Example:

```
port => 33
```

Password

A password is a string with a single value that is not logged or printed.

Example:

```
my_password => "password"
```

Path

A path is a string that represents a valid operating system path.

Example:

```
my_path => "/tmp/logstash"
```

String

A string must be a single character sequence. Note that string values are enclosed in quotes, either double or single. Literal quotes in the string need to be escaped with a backslash if they are of the same kind as the string delimiter, i.e. single quotes within a single-quoted string need to be escaped as well as double quotes within a double-quoted string.

Example:

```
name => "Hello world"
name => 'It\'s a beautiful day'
```

Comments

Comments are the same as in perl, ruby, and python. A comment starts with a # character, and does not need to be at the beginning of a line. For example:

```
# this is a comment
input { # comments can appear at the end of a line, too
  # ...
}
```
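The Bytes type above mixes two kinds of units. SI prefixes (kb, mb) count in powers of 1000, and binary prefixes (kib, mib) count in powers of 1024. Matching is case-insensitive, and a space is allowed before the unit. Below is a sketch of that conversion in Python. It handles only the k/m/g/t prefixes, the function name is hypothetical, and it reproduces the four Bytes examples above.

```python
import re

_SI = {"k": 10 ** 3, "m": 10 ** 6, "g": 10 ** 9, "t": 10 ** 12}
_BINARY = {"ki": 2 ** 10, "mi": 2 ** 20, "gi": 2 ** 30, "ti": 2 ** 40}


def parse_logstash_bytes(value: str) -> int:
    """Convert a Logstash 'bytes' value to an integer byte count (k/m/g/t only)."""
    # case-insensitive; optional space before the unit; trailing 'b' optional
    match = re.fullmatch(r"\s*(\d+)\s*([kmgt]i?)?b?\s*", value.lower())
    if match is None:
        raise ValueError(f"not a byte size: {value!r}")
    number, prefix = match.groups()
    if prefix is None:  # bare number: already bytes
        return int(number)
    table = _BINARY if prefix.endswith("i") else _SI
    return int(number) * table[prefix]
```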

![e5ed40542e55ec336241bd848a6331a5.png wKioL1c9q9PQ2A_lAAFOiVIxJ9Y910.png]()

Plugins differ between Logstash versions; here I use logstash-2.1.3.tar.gz. The elasticsearch output plugin reference for this version:

https://www.elastic.co/guide/en/logstash/2.1/plugins-outputs-elasticsearch.html#plugins-outputs-elasticsearch-hosts

Start logstash as follows:

```
/usr/local/logstash/bin/logstash -f /usr/local/logstash/logstash.conf &
```

Usage:

```
[root@node01 ~]
```

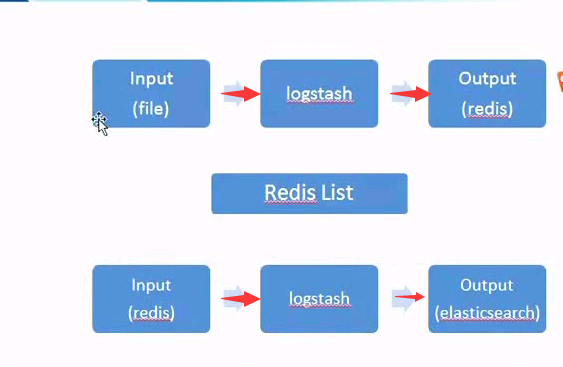

07-logstash-file-redis-es

Install and configure Redis:

```
[root@kibana ~]
```

Change the bind address to this host's IP address, then start Redis:

```
[root@kibana ~]#redis-server /application/redis/conf/redis.conf &
```

Log in to the Redis service (running on the kibana host, 10.0.0.44):

```
[root@kibana ~]
```

Modify the logstash configuration file on web01 as follows:

```
[root@web01 ~]
```

Here the nginx access log is used for testing. The input file is "/application/nginx/logs/access.log". The output goes to Redis with key "system-messages", host 10.0.0.44, port 6379, and database db=0.

Make sure nginx is running:

```
[root@web01 ~]
```

Log in to Redis and check whether the data has arrived:

```
[root@kibana ~]
```

The logs generated by the browser requests are now stored in Redis.

![1fed1fcb6022c7686c9ac91c264392be.png wKioL1c9rBXDWeZBAAD3mO4Yf2g339.png]()

So far the data has moved from the source (access.log) into Redis; the next step moves it from Redis into elasticsearch.

On the logstash host, start another logstash instance and write the configuration file /usr/local/logstash/logstash.conf:

```
[root@logstash ~]
```

Note: the data source is Redis, with the following key, host, and port:

```
redis {
  data_type => "list"
  key => "system-messages"
  host => "10.0.0.44"
  port => "6379"
  db => "0"
}
```

The data is output to elasticsearch with the following configuration:

```
elasticsearch {
  hosts => "10.0.0.41"
  index => "system-redis-messages-%{+YYYY.MM.dd}"
}
```
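In the elasticsearch output, `%{+YYYY.MM.dd}` creates one index per day. Logstash formats the date from the event's @timestamp in UTC, so on a UTC+8 server the daily index rolls over at 08:00 local time. Below is a minimal Python sketch of the naming rule; the function and constant names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# China Standard Time (UTC+8), used to illustrate the day-boundary shift
UTC_PLUS_8 = timezone(timedelta(hours=8))


def daily_index(prefix: str, event_time: datetime) -> str:
    """Mimic an index setting such as 'system-redis-messages-%{+YYYY.MM.dd}'.

    The date comes from the event timestamp rendered in UTC, so convert first.
    """
    return f"{prefix}-{event_time.astimezone(timezone.utc):%Y.%m.%d}"
```

An event logged at 07:30 on 18 Aug in UTC+8 is still 17 Aug in UTC, so it lands in the index for the 17th.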

Start logstash to collect the logs:

```
[root@logstash conf]
```

Go back and check whether the data in the Redis db has been moved on (that is, stored in elasticsearch):

```
127.0.0.1:6379> LLEN system-messages
(integer) 0
127.0.0.1:6379> 
127.0.0.1:6379> 
127.0.0.1:6379> keys *
(empty list or set)
127.0.0.1:6379>
```

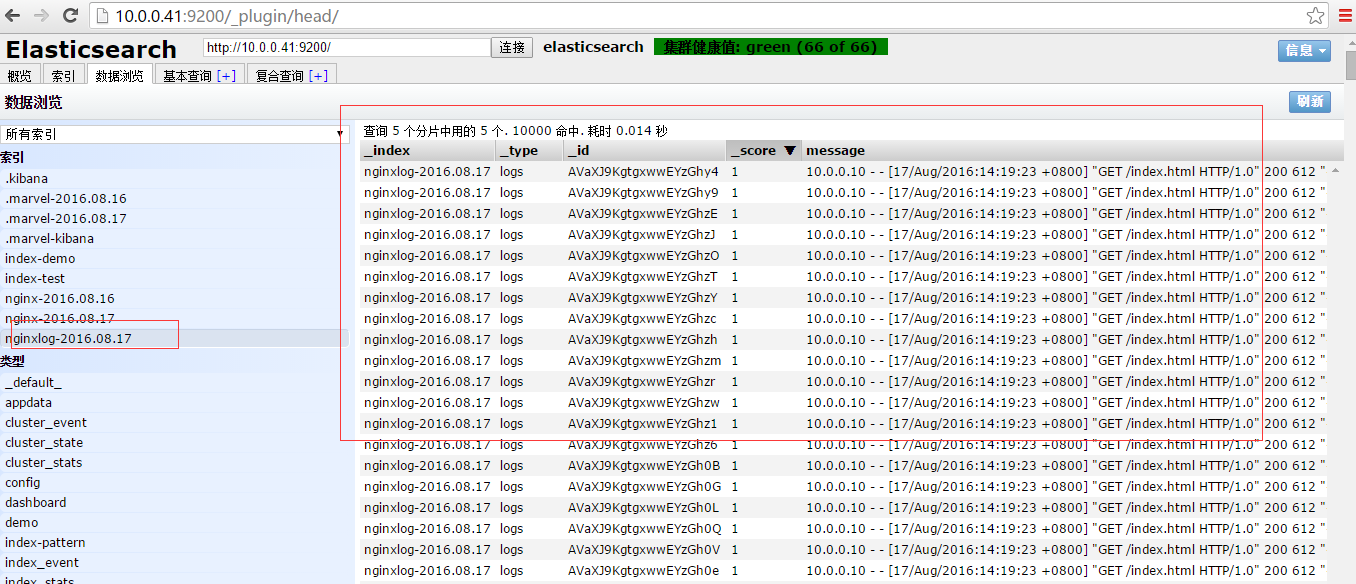

The empty list above shows the data has been moved into elasticsearch. Let's check:

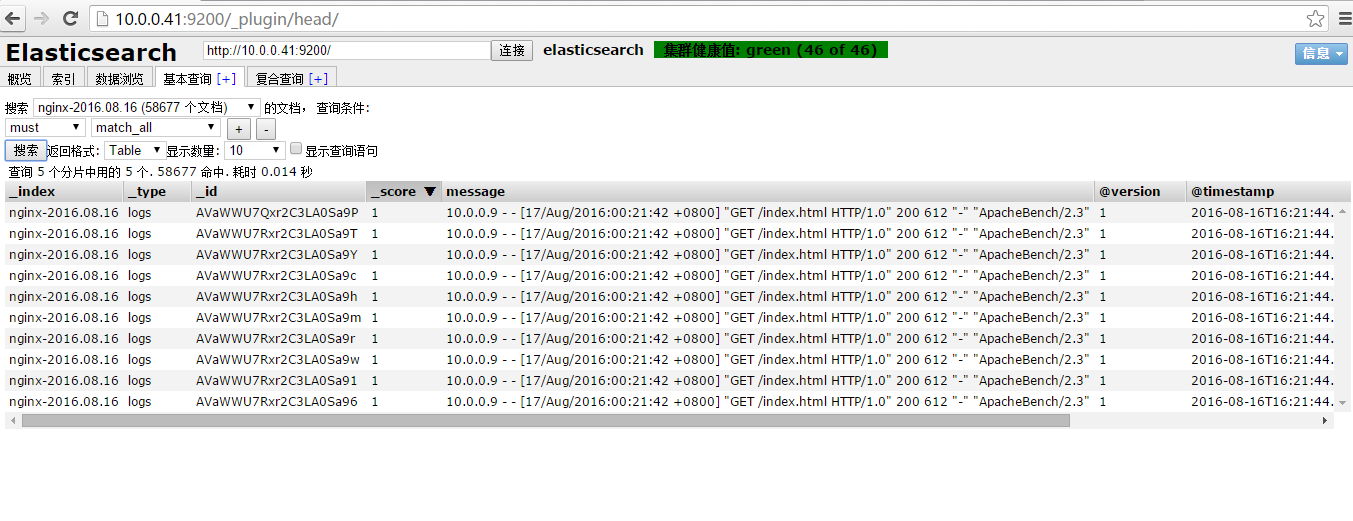

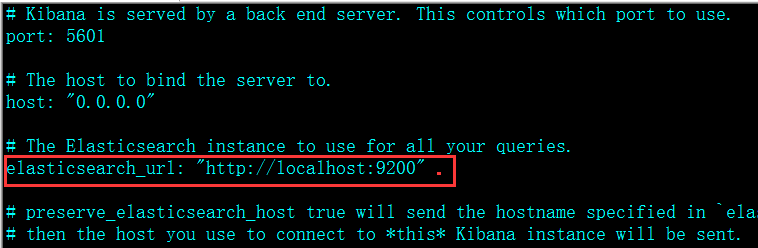

![nginxlog.png wKiom1e0Ai6QZ-hFAAFecqohNnk995.png]()

Look at the log details:

![nginxlog-detail.png wKioL1e0AnnCMEL8AAK1-efQO0o145.png]()

Collecting JSON-formatted nginx logs with logstash and storing them in elasticsearch

nginx configuration for writing the access log in JSON format:

```
log_format logstash_json '{ "@timestamp": "$time_local", '
                         '"@fields": { '
                         '"remote_addr": "$remote_addr", '
                         '"remote_user": "$remote_user", '
                         '"body_bytes_sent": "$body_bytes_sent", '
                         '"request_time": "$request_time", '
                         '"status": "$status", '
                         '"request": "$request", '
                         '"request_method": "$request_method", '
                         '"http_referrer": "$http_referer", '
                         '"http_x_forwarded_for": "$http_x_forwarded_for", '
                         '"http_user_agent": "$http_user_agent" } }';
```
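Each line is a self-contained JSON object, so a consumer can decode it directly instead of parsing it with regular expressions. The Python sketch below shows what decoding one line yields. The sample line is hypothetical: made-up values in the shape of the log_format above. nginx writes every value as a quoted string, so numeric fields need converting; the function name is also hypothetical.

```python
import json

# Hypothetical line in the shape produced by the logstash_json log_format
SAMPLE_LINE = (
    '{ "@timestamp": "17/Aug/2016:10:00:00 +0800", "@fields": { '
    '"remote_addr": "10.0.0.10", "remote_user": "-", '
    '"body_bytes_sent": "612", "request_time": "0.000", "status": "200", '
    '"request": "GET / HTTP/1.0", "request_method": "GET", '
    '"http_referrer": "-", "http_x_forwarded_for": "-", '
    '"http_user_agent": "ApacheBench/2.3" } }'
)


def parse_access_line(line: str) -> dict:
    """Decode one JSON access-log line and convert the numeric fields."""
    record = json.loads(line)
    fields = record["@fields"]
    return {
        "timestamp": record["@timestamp"],
        "client": fields["remote_addr"],
        "status": int(fields["status"]),  # written as a quoted string
        "bytes": int(fields["body_bytes_sent"]),
        "request_time": float(fields["request_time"]),
        "request": fields["request"],
    }
```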

The modified nginx configuration file:

```
[root@web01 conf]
```

Start nginx:

```
[root@web01 nginx]
```

Send requests to the nginx service with the ab command:

```
[root@logstash conf]
```

The log entries generated by these requests:

```
[root@web01 logs]
```

The logstash configuration file:

```
[root@node01 logs]
```

Restart logstash:

```
[root@web01 logs]
```

Connect to Redis and check whether logs are being written:

```
[root@kibana]
```

Send requests to nginx with ab:

```
[root@node01 ~]
```

Check again whether Redis has received data:

```
[root@kibana]
```

The data was written to Redis successfully. Next, move the Redis data into elasticsearch.

Write the logstash configuration file on the logstash host:

```
[root@logstash]
```

Check that the configuration file is valid, then restart logstash:

```
[root@logstash conf]
```

Generate test traffic with ab:

```
[root@logstash ~]
```

Check the data written to Redis:

```
[root@kibana ~]
```

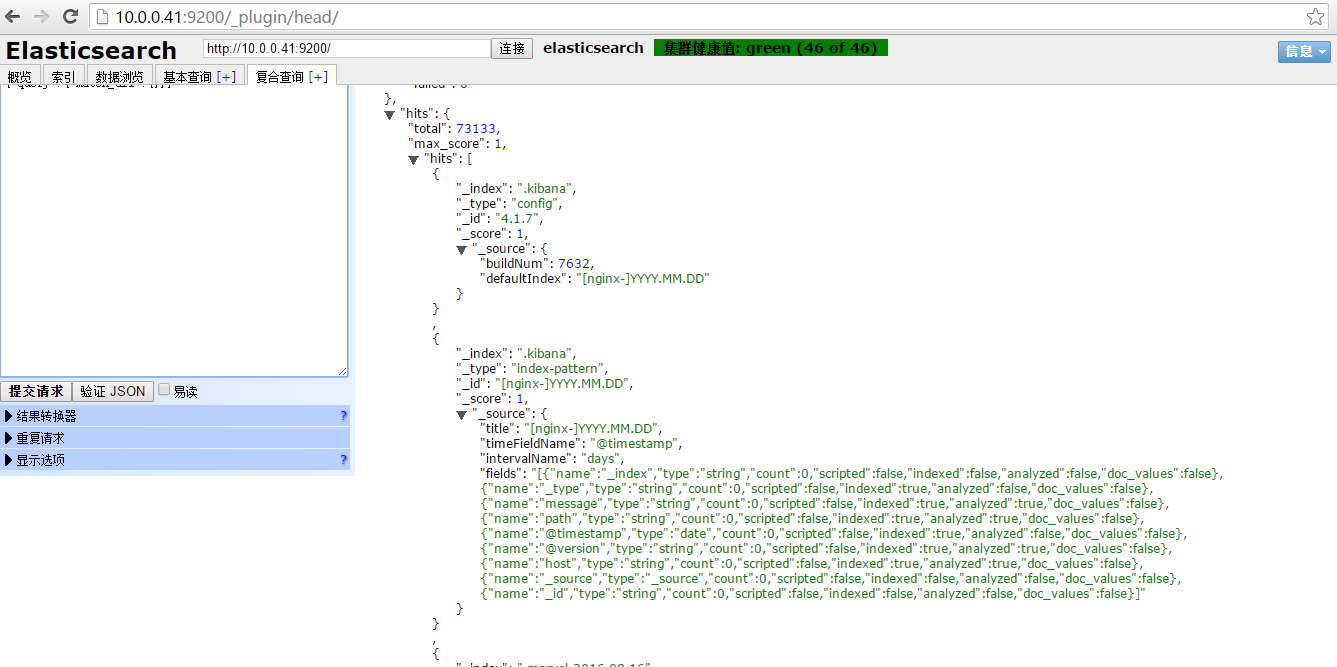

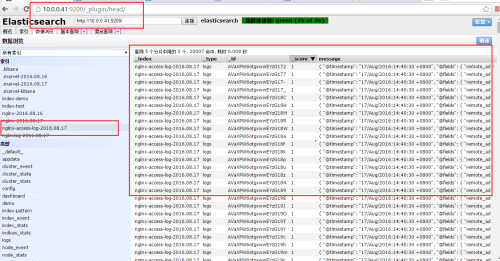

View the data in elasticsearch:

![nginx-access-log.png wKiom1e0CRLhgXmmAAFnFnvOEiI928.png]()

Look at the data in more detail:

![nginx-access-log.detaillpng.png wKiom1e0CWyxrbK2AAOPCQwmtF8434.png-wh_50]()

The data is stored as key-value pairs, that is, in JSON format.

![数据格式为json.png wKioL1e0CauQPoKMAATEjhUYMB0024.png]()

Visualizing the data collected by elasticsearch + logstash + redis with Kibana

Extract:

```
[root@kibana ~]
```

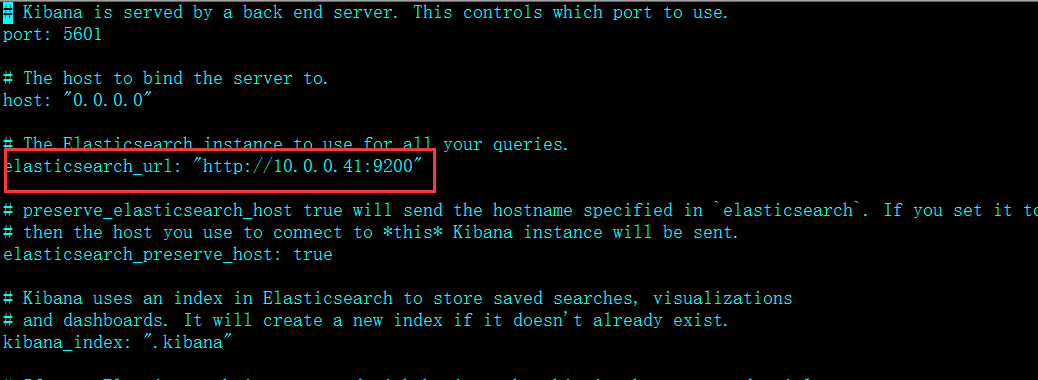

Configure:

```
[root@kibana config]
```

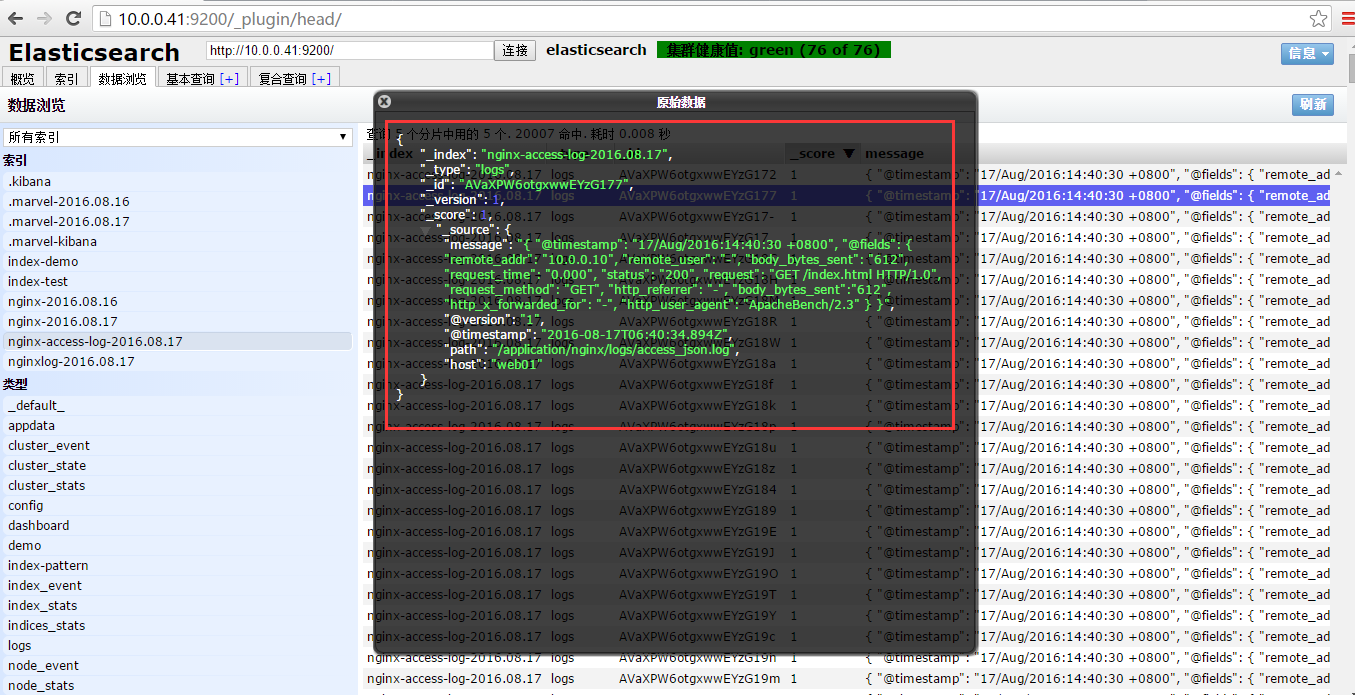

![1f6080000484c3f3fc2b774b910b2645.png wKiom1c9rGiigONeAAA66LDOH7w548.png]()

Change it to:

![kibana.png wKioL1e0CvzguuNkAAB6OTqUmPA663.png]()

```
[root@kibana config]
```

Run Kibana in the background:

```
[root@kibana kibana]
```

Check the startup status:

```
[root@kibana kibana]
```

Open http://10.0.0.44:5601/ in a browser

![kibana01.png wKiom1e0DJLCxRhGAAFgbRUte8U760.png-wh_50]()

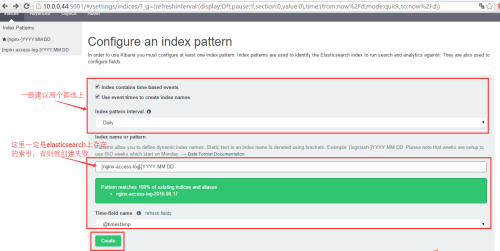

Click "Create" to create the nginx-access-log index pattern

Switch to Discover

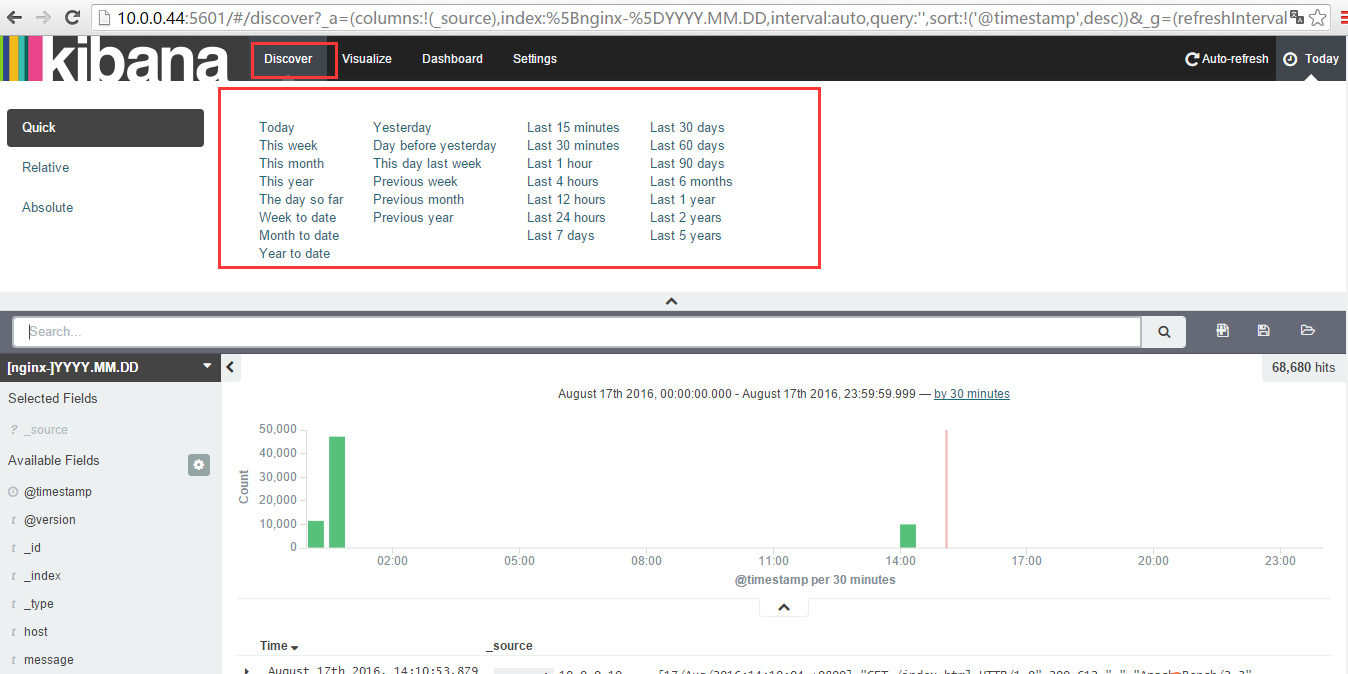

![discover.png wKiom1e0DRSydPkmAAFlftI4Q80063.png]()

Here you can pick a time range such as "Today" or "Last 15 minutes"; for example, choose "Last 15 minutes".

Click "Last 15 minutes"

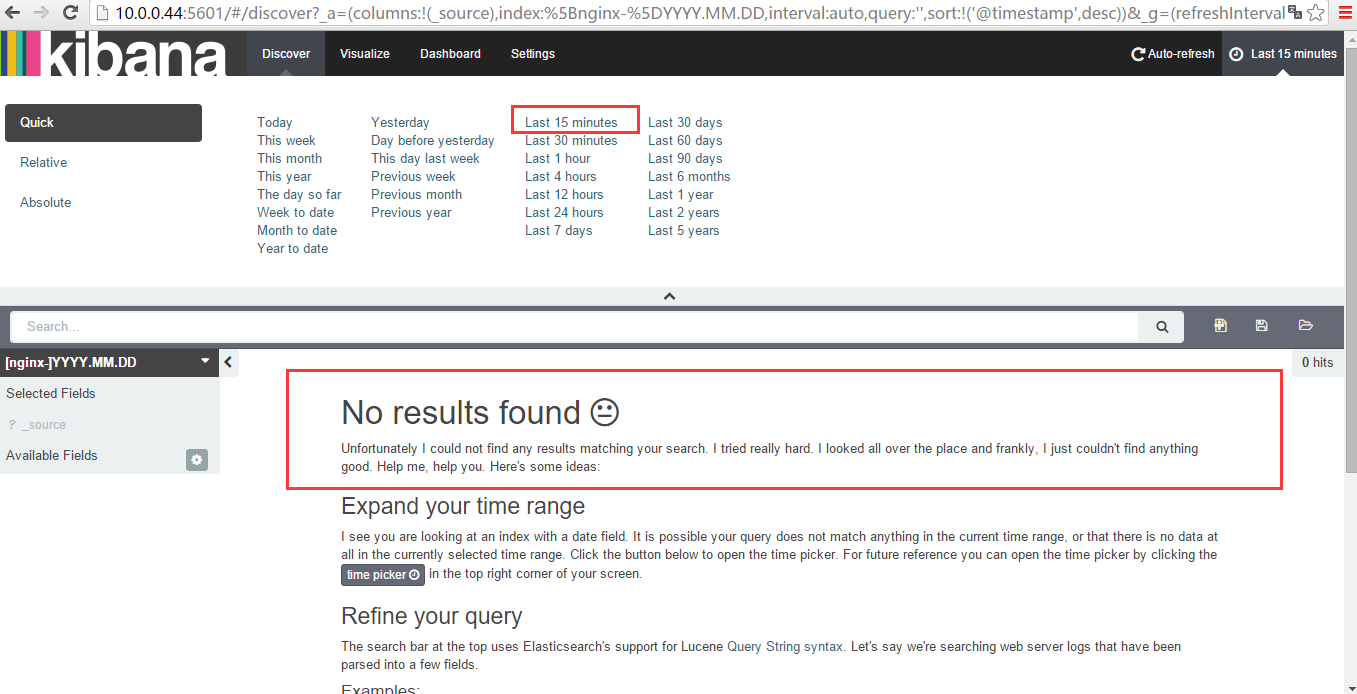

![laster 15.png wKiom1e0DaTB4dnnAAGhToH2kLI034.png]()

Our data was not indexed within the last 15 minutes, so no results are found here. Choose "Today" instead to widen the time range, and look again.
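The reasoning above comes down to a range check on the timestamp: Kibana applies the selected range as a filter on the time field. Below is a toy Python illustration, assuming documents indexed 40 minutes earlier (a made-up number). They fall outside "Last 15 minutes" but inside "Today". The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def in_last_minutes(event_time: datetime, now: datetime, minutes: int = 15) -> bool:
    """True if event_time lies in [now - minutes, now], like "Last 15 minutes"."""
    return now - timedelta(minutes=minutes) <= event_time <= now


def in_today(event_time: datetime, now: datetime) -> bool:
    """True if event_time lies between midnight of now's day (in now's timezone) and now."""
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    return midnight <= event_time <= now
```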

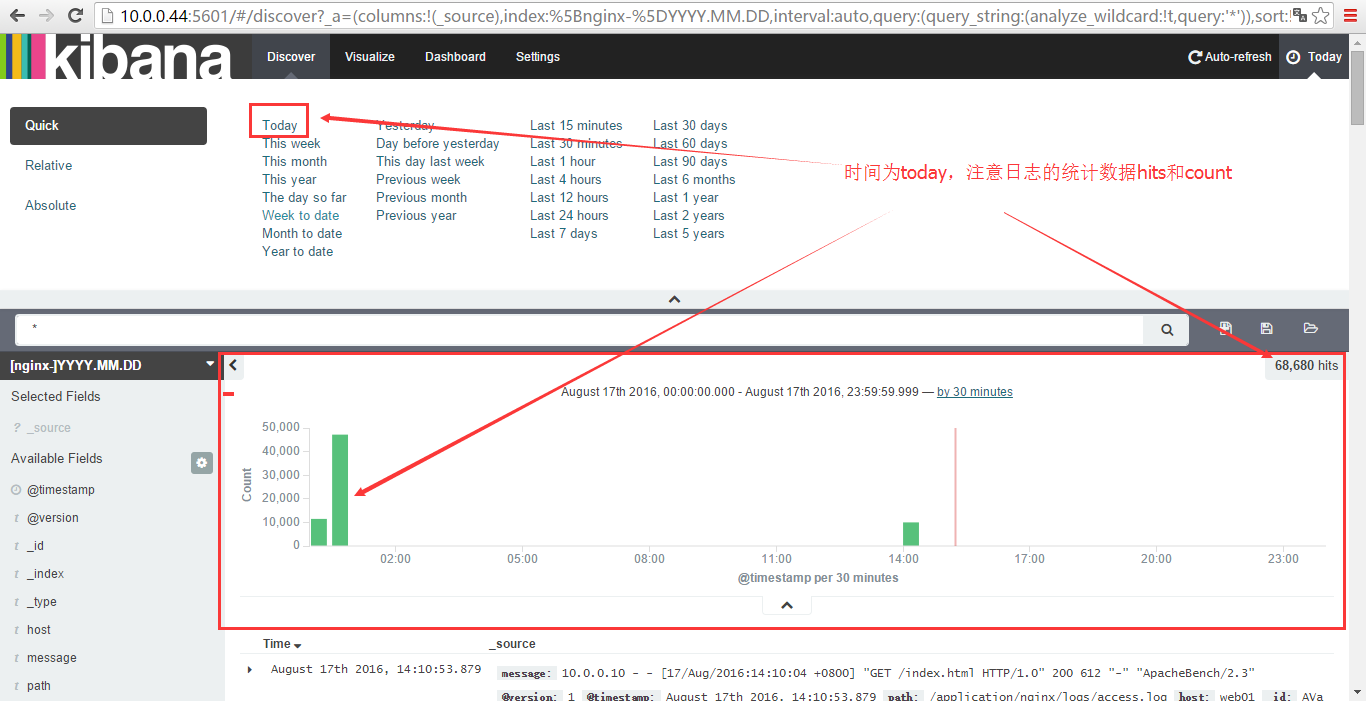

Click "Today"

![today.png wKioL1e0D1vSaFRXAAG__2ChNgc456.png]()

The following steps come from an earlier experiment and are listed for reference only.

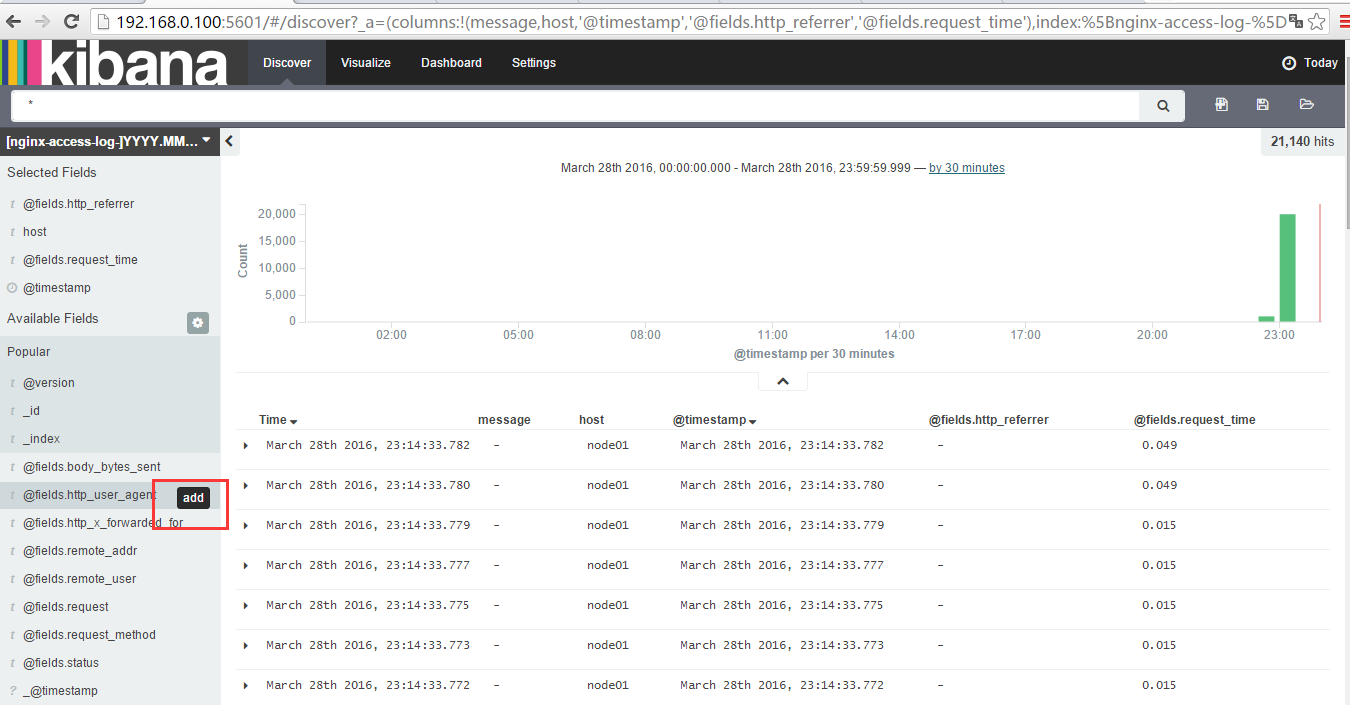

To display only the fields you need (all fields are shown by default), use "add" to select what to show.

![36e9fa697587628a530301f5b074e04a.png wKioL1c9rvviTV73AAE7oWVL28w332.png]()

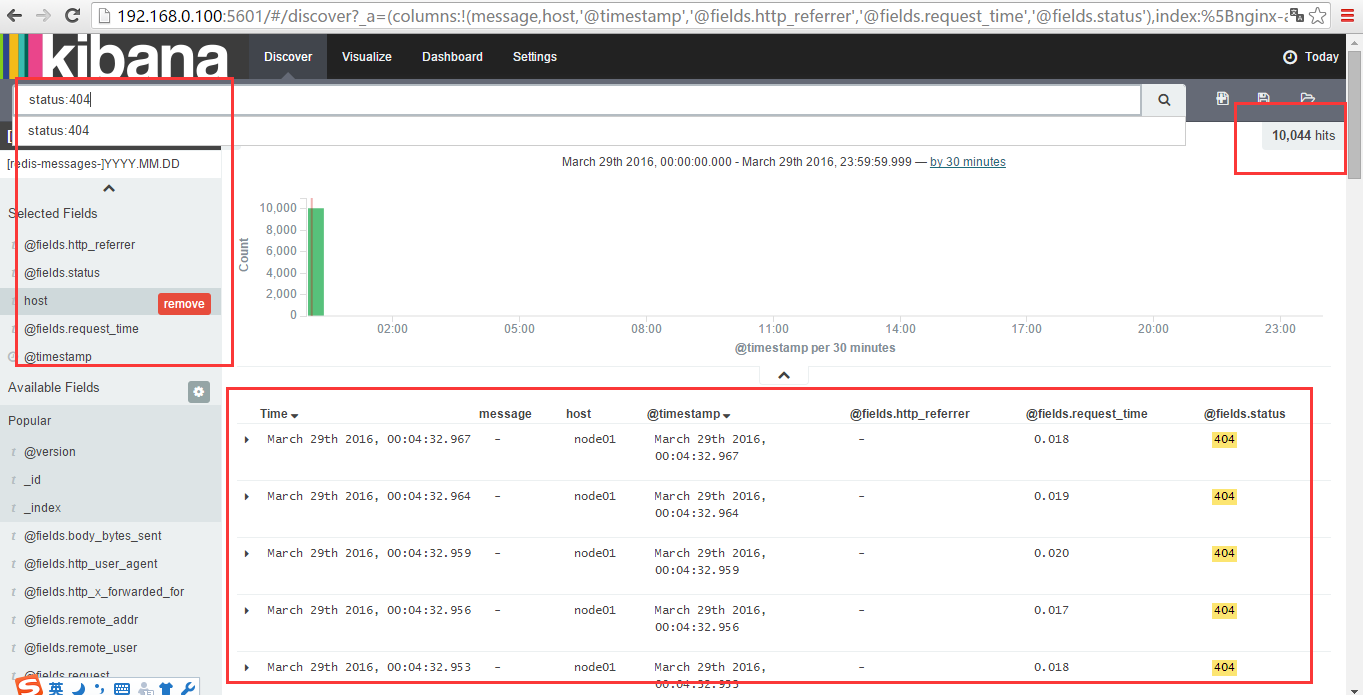

Search, for example, for entries with status 404:

![f196696135e54f949b2bc9ceb0195517.png wKioL1c9r07gkbg0AAFLWDqpSns412.png]()

Search for entries with status 200:

![39e316903cf7b8ad6883f53458bb695d.png wKioL1c9r5jApy2JAABybJSXF_w407.png]()

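Summary of the ELK Stack workflow

Raw data (log files from servers such as Tomcat, Apache, and PHP) --------> logstash writes the raw data into Redis, a second logstash then writes the data from Redis into elasticsearch, and finally Kibana analyzes, organizes, and displays the elasticsearch data.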
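The two-stage flow above can be modeled as a producer and a consumer sharing a FIFO list. This in-memory Python sketch uses a deque in place of the Redis list; no Redis or elasticsearch is involved. It shows why the earlier LLEN check returned 0 once the indexer had consumed everything. The mapping to Redis commands in the comments is approximate, and all names are hypothetical.

```python
from collections import defaultdict, deque


def ship(lines, queue: deque) -> None:
    """Shipper (logstash on web01): append each raw log line to the tail of the list."""
    for line in lines:
        queue.append(line)  # roughly RPUSH system-messages <line>


def index_all(queue: deque, store: dict, index_name: str) -> int:
    """Indexer (logstash on the logstash host): pop from the head until the list is empty."""
    moved = 0
    while queue:
        store[index_name].append(queue.popleft())  # roughly BLPOP system-messages
        moved += 1
    return moved


queue = deque()            # stands in for the Redis list "system-messages"
store = defaultdict(list)  # stands in for elasticsearch indices
```

Redis decouples the two stages: if the indexer or elasticsearch is down, lines simply accumulate in the list until consumption resumes.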

![93d08f4a27b3b12393b3511b881fffec.png wKioL1c9r8XSAEz_AABTp1KHDcY326.png]()

Notes on the elasticsearch configuration file:

The config folder of elasticsearch contains two configuration files: elasticsearch.yml and logging.yml. The first is the basic ES configuration file; the second is the logging configuration. ES also uses log4j for logging, so logging.yml is set up like an ordinary log4j configuration file. The settings available in elasticsearch.yml are explained below.

```yaml
# Cluster name, default "elasticsearch". ES automatically discovers other ES nodes on the
# same network segment; if several clusters share a segment, use this to tell them apart.
cluster.name: elasticsearch

# Node name. By default a random name is picked from name.txt in the config folder inside
# the ES jar, a list that includes many fun names added by the authors.
node.name: "Franz Kafka"

# Whether this node is eligible to be elected master, default true. By default ES makes
# the first machine in the cluster the master; if it goes down, a new master is elected.
node.master: true

# Whether this node stores index data, default true.
node.data: true

# Default number of shards per index, default 5.
index.number_of_shards: 5

# Default number of replicas per index, default 1.
index.number_of_replicas: 1

# Path to the configuration files, default the config folder under the ES home directory.
path.conf: /path/to/conf

# Path to the index data, default the data folder under the ES home directory.
# Several paths can be given, separated by commas, e.g.:
# path.data: /path/to/data1,/path/to/data2
path.data: /path/to/data

# Path to temporary files, default the work folder under the ES home directory.
path.work: /path/to/work

# Path to log files, default the logs folder under the ES home directory.
path.logs: /path/to/logs

# Path to plugins, default the plugins folder under the ES home directory.
path.plugins: /path/to/plugins

# Set to true to lock memory. ES slows down once the JVM starts swapping, so prevent
# swapping: set ES_MIN_MEM and ES_MAX_MEM to the same value and make sure the machine
# has enough memory for ES. The elasticsearch process must also be allowed to lock
# memory; on Linux use `ulimit -l unlimited`.
bootstrap.mlockall: true

# IP address to bind to, IPv4 or IPv6, default 0.0.0.0.
network.bind_host: 192.168.0.1

# IP address other nodes use to talk to this node. Detected automatically if not set;
# the value must be a real IP address.
network.publish_host: 192.168.0.1

# Sets both bind_host and publish_host above at once.
network.host: 192.168.0.1

# TCP port for communication between nodes, default 9300.
transport.tcp.port: 9300

# Whether to compress data sent over TCP, default false (no compression).
transport.tcp.compress: true

# HTTP port for external clients, default 9200.
http.port: 9200

# Maximum size of request content, default 100mb.
http.max_content_length: 100mb

# Whether to serve clients over HTTP, default true (enabled).
http.enabled: false

# Gateway type, default local (the local file system). It can also be a distributed file
# system, Hadoop HDFS, or Amazon S3; those settings will be covered another time.
gateway.type: local

# Start data recovery once N nodes in the cluster have started, default 1.
gateway.recover_after_nodes: 1

# Timeout for the initial data recovery process, default 5 minutes.
gateway.recover_after_time: 5m

# Number of nodes in this cluster, default 2. Recovery starts immediately once these
# N nodes are up.
gateway.expected_nodes: 2

# Number of concurrent recovery threads during initial recovery, default 4.
cluster.routing.allocation.node_initial_primaries_recoveries: 4

# Number of concurrent recovery threads when adding/removing nodes or rebalancing, default 2.
cluster.routing.allocation.node_concurrent_recoveries: 2

# Bandwidth limit for data recovery, e.g. 100mb; default 0 (unlimited).
indices.recovery.max_size_per_sec: 0

# Maximum number of concurrent streams opened when recovering data from other shards,
# default 5.
indices.recovery.concurrent_streams: 5

# Ensures a node can see N other master-eligible nodes in the cluster. Default 1; larger
# clusters can use a larger value (2-4).
discovery.zen.minimum_master_nodes: 1

# Ping timeout when discovering other nodes, default 3 seconds. On poor networks, set a
# higher value to avoid discovery errors.
discovery.zen.ping.timeout: 3s

# Whether to enable multicast node discovery, default true.
discovery.zen.ping.multicast.enabled: false

# Initial list of master nodes, used to discover nodes that join the cluster.
discovery.zen.ping.unicast.hosts: ["host1", "host2:port", "host3[portX-portY]"]

# Slow log settings for queries:
index.search.slowlog.level: TRACE
index.search.slowlog.threshold.query.warn: 10s
index.search.slowlog.threshold.query.info: 5s
index.search.slowlog.threshold.query.debug: 2s
index.search.slowlog.threshold.query.trace: 500ms
index.search.slowlog.threshold.fetch.warn: 1s
index.search.slowlog.threshold.fetch.info: 800ms
index.search.slowlog.threshold.fetch.debug: 500ms
index.search.slowlog.threshold.fetch.trace: 200ms
```
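The notes above describe discovery.zen.minimum_master_nodes only loosely. The usual rule in the Elasticsearch 1.x/2.x documentation is a majority of master-eligible nodes, floor(N/2) + 1, which prevents a split brain. Below is a minimal sketch, assuming every node is master-eligible (node.master defaults to true). For the three nodes in this lab, node01 to node03, it gives 2 rather than the sample value 1. The function name is hypothetical.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Majority quorum of master-eligible nodes: floor(N / 2) + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1
```

With a quorum of 2 out of 3, a single node cut off from the other two cannot elect itself master.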