----------------------------------------------------------------------------------------------------------------------------------

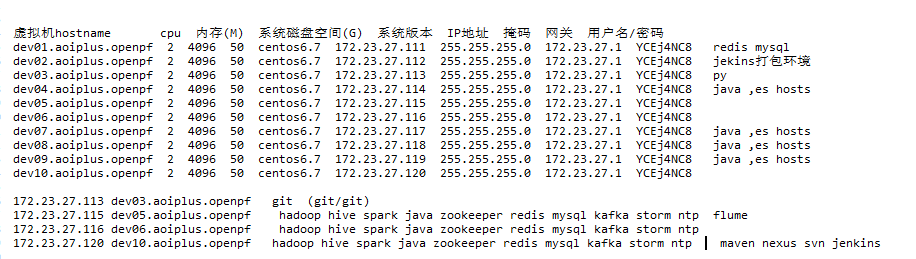

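0. Disable the firewall

1. Edit hosts

2. Check the hostname on every machine

3. Install the JDK

4. Network, hostname, hosts, firewall, SELinux, NTP and other configuration

5. Restart the network service (service network restart) to apply the changes

6. Install NTP

7. Cloudera prerequisites

8. Set up passwordless SSH login

9. Install Java

10. Create the hadoop user

11. Install Cloudera

12. Install ZooKeeper

13. Install MySQL

14. Install Hive

15. Install Spark

16. Install Kafka

17. Install Storm

18. Install Redis

19. Install Docker

20. Install Flume

21. Install Git

22. Install Maven

23. Install Nexus

24. Install SVN

25. Install Jenkins

26. Install FTP

27. Install FastDFS

28. Install PHP

29. Install Zabbix

30. Install Python

31. Install yum

32. Install LNMP

33. Install Discuz

35. Install ELK (Filebeat, Elasticsearch, Logstash, Kibana)

36. Install Tomcat

37. XMPP: Openfire, Spark, Smack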

----------------------------------------------------------------------------------------------------------------------------------

0 Disable the firewall

service iptables status

service iptables stop

chkconfig --level 35 iptables off

1 Edit hosts

vim /etc/hosts

172.23.27.111 dev01.aoiplus.openpf

172.23.27.112 dev02.aoiplus.openpf

172.23.27.113 dev03.aoiplus.openpf

172.23.27.114 dev04.aoiplus.openpf

172.23.27.115 dev05.aoiplus.openpf

172.23.27.116 dev06.aoiplus.openpf

172.23.27.117 dev07.aoiplus.openpf

172.23.27.118 dev08.aoiplus.openpf

172.23.27.119 dev09.aoiplus.openpf

172.23.27.120 dev10.aoiplus.openpf
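Because the entries follow a fixed pattern (dev01 to dev10 on 172.23.27.111 to .120), they can also be generated instead of typed. A minimal POSIX sh sketch; gen_hosts is a hypothetical helper, and it assumes contiguous addresses and the aoiplus.openpf suffix used above:

```shell
#!/bin/sh
# gen_hosts PREFIX FIRST_OCTET COUNT DOMAIN
# Prints one "IP devNN.DOMAIN" line per machine, numbering from dev01.
# Hypothetical helper: assumes the IPs are contiguous, as in the list above.
gen_hosts() {
    prefix=$1
    first=$2
    count=$3
    domain=$4
    i=1
    while [ "$i" -le "$count" ]; do
        # %02d zero-pads the index (1 -> 01, 10 -> 10)
        printf '%s.%d dev%02d.%s\n' "$prefix" $((first + i - 1)) "$i" "$domain"
        i=$((i + 1))
    done
}
```

Usage: gen_hosts 172.23.27 111 10 aoiplus.openpf >> /etc/hosts (run it once; running it again appends duplicate lines).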

2 Check the hostname on every machine

hostname
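The check can be automated by comparing the output of hostname with the name /etc/hosts assigns to the machine's IP. A sketch, assuming the hosts layout above (IP first, FQDN second); hosts_name_for_ip and check_hostname are hypothetical helpers:

```shell
#!/bin/sh
# hosts_name_for_ip IP [HOSTS_FILE]
# Prints the first name the hosts file maps to IP (empty if none).
hosts_name_for_ip() {
    awk -v ip="$1" '$1 == ip { print $2; exit }' "${2:-/etc/hosts}"
}

# check_hostname IP [HOSTS_FILE]
# Compares `hostname` with the hosts-file entry for this machine's IP.
check_hostname() {
    expected=$(hosts_name_for_ip "$1" "${2:-/etc/hosts}")
    actual=$(hostname)
    if [ -n "$expected" ] && [ "$expected" = "$actual" ]; then
        echo "OK $actual"
    else
        echo "MISMATCH: hostname='$actual', hosts file expects '$expected'" >&2
        return 1
    fi
}
```

Usage on dev05: check_hostname 172.23.27.115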

3 Install the JDK

Uninstall the preinstalled OpenJDK packages

rpm -qa|grep java

rpm -e --nodeps java-1.7.0-openjdk-1.7.0.141-2.6.10.1.el7_3.x86_64

rpm -e --nodeps java-1.7.0-openjdk-headless-1.7.0.141-2.6.10.1.el7_3.x86_64

rpm -e --nodeps java-1.8.0-openjdk-headless-1.8.0.141-1.b16.el7_3.x86_64

rpm -e --nodeps java-1.8.0-openjdk-1.8.0.141-1.b16.el7_3.x86_64
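The exact package versions differ from machine to machine, so instead of copying each name from rpm -qa, the OpenJDK packages can be filtered out of its output. A sketch; openjdk_pkgs is a hypothetical helper that keeps only names of the form java-VERSION-openjdk..., so packages that merely contain "java" (tzdata-java and the like) are left alone:

```shell
#!/bin/sh
# openjdk_pkgs: read `rpm -qa` output (one package per line) on stdin
# and print only the OpenJDK packages, e.g. java-1.8.0-openjdk-headless-...
openjdk_pkgs() {
    grep -E '^java-[0-9.]+-openjdk'
}
```

Usage: rpm -qa | openjdk_pkgs | xargs -r rpm -e --nodeps (xargs -r skips the rpm call when nothing matches).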

Install Java

scp jdk1.7.0_79.tar.gz root@172.23.27.120:/home/baoy/package/

tar -xf jdk1.7.0_79.tar.gz

mv jdk1.7.0_79 ../soft/

vim /etc/profile

export JAVA_HOME=/home/baoy/soft/jdk1.7.0_79

export JRE_HOME=/home/baoy/soft/jdk1.7.0_79/jre

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

source /etc/profile

java -version

4 Network, hostname, hosts, firewall and other configuration

vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE="eth0"

BOOTPROTO="static"

DNS1="172.23.11.231"

GATEWAY="172.23.27.1"

HOSTNAME="dev05.aoiplus.openpf"

HWADDR="52:54:00:7E:F9:4B"

IPADDR="172.23.27.115"

IPV6INIT="yes"

MTU="1500"

NETMASK="255.255.255.0"

NM_CONTROLLED="yes"

ONBOOT="yes"

TYPE="Ethernet"

UUID="f41ec3b4-e3f6-49a3-bd8b-6426dffd06dd"

5 Restart the network service (service network restart) to apply the changes

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=dev05.aoiplus.openpf

GATEWAY=172.23.27.1

service network restart

6 Install NTP

yum install ntp -y

chkconfig ntpd on

vi /etc/ntp.conf

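Server configuration:

# Subnet allowed to synchronize time with this NTP server

restrict 172.23.27.0 mask 255.255.255.0 nomodify notrap

# Local clock source (127.127.1.0 is ntpd's local-clock driver)

server 127.127.1.0

# Use the local clock when no external clock is available

fudge 127.127.1.0 stratum 10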

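Client configuration:

# Upstream time source: the NTP server's address

server 172.23.27.120

# Allow time synchronization with the upstream server

restrict 172.23.27.120 nomodify notrap noquery

# Local clock (ntpd's local-clock driver)

server 127.127.1.0

# Fall back to the local clock when the upstream source is unavailable

fudge 127.127.1.0 stratum 10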
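ntpd's restrict line takes a network address together with its mask (172.23.27.0 for this 255.255.255.0 subnet), not a host address. A small POSIX sh sketch that computes it from any host IP; network_addr is a hypothetical helper:

```shell
#!/bin/sh
# network_addr IP MASK
# Prints IP AND MASK octet by octet, e.g. 172.23.27.120/255.255.255.0 -> 172.23.27.0
network_addr() {
    ip=$1 mask=$2
    # Split both dotted quads into eight positional parameters.
    old_ifs=$IFS
    IFS=.
    set -- $ip $mask
    IFS=$old_ifs
    printf '%d.%d.%d.%d\n' $(($1 & $5)) $(($2 & $6)) $(($3 & $7)) $(($4 & $8))
}
```

Usage: echo "restrict $(network_addr 172.23.27.120 255.255.255.0) mask 255.255.255.0 nomodify notrap"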

Run

Server side

service ntpd start

service ntpd stop

ntpstat

Client side

ntpdate -u 172.23.27.120

service ntpd start

ntpstat

Check

watch ntpq -p

7 Cloudera prerequisites

7.1 Minimize swapping on each host

vim /etc/sysctl.conf

vm.swappiness = 0

7.2 Disable SELinux on the Hadoop cluster hosts

setenforce 0

vi /etc/selinux/config

SELINUX=disabled
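Both edits in this step (vm.swappiness in /etc/sysctl.conf, SELINUX in /etc/selinux/config) are "set this key to this value" operations that have to be repeated on every host. A sketch of an idempotent helper, assuming GNU sed (sed -i, as on CentOS); set_conf_key is a hypothetical name. It rewrites an existing or commented-out line for the key, and appends one otherwise:

```shell
#!/bin/sh
# set_conf_key FILE KEY SEP VALUE
# e.g. set_conf_key /etc/sysctl.conf vm.swappiness ' = ' 0
#      set_conf_key /etc/selinux/config SELINUX '=' disabled
# VALUE must not contain '|', '&' or '\' (they are special to sed here).
set_conf_key() {
    file=$1 key=$2 sep=$3 value=$4
    # Escape dots so "vm.swappiness" matches literally in the regex.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -Eq "^[#[:space:]]*${key_re}[[:space:]]*=" "$file"; then
        sed -i -E "s|^[#[:space:]]*${key_re}[[:space:]]*=.*|${key}${sep}${value}|" "$file"
    else
        printf '%s%s%s\n' "$key" "$sep" "$value" >> "$file"
    fi
}
```

Usage: set_conf_key /etc/sysctl.conf vm.swappiness ' = ' 0 && sysctl -p (sysctl -p reloads /etc/sysctl.conf so the value applies without a reboot).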

8 Set up passwordless SSH login

master

ssh-keygen -t rsa   (press Enter at each prompt to accept the defaults and an empty passphrase)

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

slave

scp ~/.ssh/authorized_keys root@172.23.27.115:~/.ssh/

scp ~/.ssh/authorized_keys root@172.23.27.116:~/.ssh/

ssh 172.23.27.115

ssh 172.23.27.116
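With more slaves, the key distribution above can be looped. A sketch, assuming ssh-copy-id (shipped with openssh-clients) is available; unlike copying authorized_keys with scp, it appends to each slave's file instead of overwriting it. push_ssh_key and the DRY_RUN switch are hypothetical; set DRY_RUN=1 to print the commands without running them:

```shell
#!/bin/sh
# run CMD...: execute CMD, or only print it when DRY_RUN is non-empty.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# push_ssh_key USER HOST...
push_ssh_key() {
    user=$1
    shift
    key=$HOME/.ssh/id_rsa
    # Generate a key pair with an empty passphrase if none exists yet.
    if [ ! -f "$key" ]; then
        run mkdir -p -m 700 "$HOME/.ssh"
        run ssh-keygen -t rsa -N '' -f "$key"
    fi
    # ssh-copy-id asks for each host's password once, then appends the key.
    for host in "$@"; do
        run ssh-copy-id -i "$key.pub" "$user@$host"
    done
}
```

Usage on the master: push_ssh_key root 172.23.27.115 172.23.27.116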

9 Install Java

mkdir -p /usr/java

cd /home

tar zxvf jdk-7u80-linux-x64.tar.gz -C /usr/java

vim /etc/profile

#java

export JAVA_HOME=/usr/java/jdk1.7.0_80

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

source /etc/profile

10 Create the hadoop user

groupadd hdfs

useradd hadoop -g hdfs

passwd hadoop

chmod u+w /etc/sudoers

vim /etc/sudoers

hadoop ALL=(ALL) NOPASSWD: ALL

%hdfs ALL=(ALL) NOPASSWD: ALL

chmod u-w /etc/sudoers

11 Install Cloudera

cd /home/baoy/soft

wget http://archive.cloudera.com/cdh5/one-click-install/redhat/6/x86_64/cloudera-cdh-5-0.x86_64.rpm

yum --nogpgcheck localinstall cloudera-cdh-5-0.x86_64.rpm

rpm --import http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/RPM-GPG-KEY-cloudera

On the master, install the NameNode, ResourceManager, NodeManager, DataNode, MapReduce, JobHistory server, proxy server and hadoop-client packages

yum install hadoop hadoop-hdfs hadoop-client hadoop-doc hadoop-debuginfo hadoop-hdfs-namenode hadoop-yarn-resourcemanager hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce hadoop-mapreduce-historyserver hadoop-yarn-proxyserver -y

On Slave1 and Slave2, install the YARN, NodeManager, DataNode, MapReduce and hadoop-client packages

yum install hadoop hadoop-hdfs hadoop-client hadoop-doc hadoop-debuginfo hadoop-yarn hadoop-hdfs-datanode hadoop-yarn-nodemanager hadoop-mapreduce -y

namenode

mkdir -p /data/cache1/dfs/nn

chown -R hdfs:hadoop /data/cache1/dfs/nn

chmod 700 -R /data/cache1/dfs/nn

datanode

mkdir -p /data/cache1/dfs/dn

mkdir -p /data/cache1/dfs/mapred/local

chown -R hdfs:hadoop /data/cache1/dfs/dn

chmod 777 -R /data/

usermod -a -G mapred hadoop

chown -R mapred:hadoop /data/cache1/dfs/mapred/local

Configure environment variables

vi /etc/profile

export HADOOP_HOME=/usr/lib/hadoop

export HIVE_HOME=/usr/lib/hive

export HBASE_HOME=/usr/lib/hbase

export HADOOP_HDFS_HOME=/usr/lib/hadoop-hdfs

export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_LIBEXEC_DIR=$HADOOP_HOME/libexec

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HDFS_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_YARN_HOME=/usr/lib/hadoop-yarn

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$HBASE_HOME/bin:$PATH

source /etc/profile

Edit the master configuration

vim /etc/hadoop/conf/core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://dev10.aoiplus.openpf:9000</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>dev10.aoiplus.openpf</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>hdfs</value>

</property>

<property>

<name>hadoop.proxyuser.mapred.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.mapred.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.yarn.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.yarn.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.httpfs.hosts</name>

<value>httpfs-host.foo.com</value>

</property>

<property>

<name>hadoop.proxyuser.httpfs.groups</name>

<value>*</value>

</property>
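The snippets above list property elements only; in the real file they sit inside the <configuration> root element. If you generate the *-site.xml files instead of editing them by hand, values must also be XML-escaped (a bare & in a JDBC URL makes the file unparseable). A sketch; hadoop_site and xml_escape are hypothetical helpers, and each argument is split at its first '=' into name and value:

```shell
#!/bin/sh
# xml_escape TEXT: escape &, < and > for use inside an XML element.
xml_escape() {
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# hadoop_site NAME=VALUE...: print a complete Hadoop-style *-site.xml.
hadoop_site() {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first '='
        value=${pair#*=}   # text after the first '='
        printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
            "$(xml_escape "$name")" "$(xml_escape "$value")"
    done
    echo '</configuration>'
}
```

Usage: hadoop_site fs.defaultFS=hdfs://dev10.aoiplus.openpf:9000 dfs.replication=1 > core-site.xml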

vim /etc/hadoop/conf/hdfs-site.xml

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/cache1/dfs/nn/</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/cache1/dfs/dn/</value>

</property>

<property>

<name>dfs.hosts</name>

<value>/etc/hadoop/conf/slaves</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.permissions.superusergroup</name>

<value>hdfs</value>

</property>

vim /etc/hadoop/conf/mapred-site.xml

<property>

<name>mapreduce.jobhistory.address</name>

<value>dev10.aoiplus.openpf:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>dev10.aoiplus.openpf:19888</value>

</property>

<property>

<name>mapreduce.jobhistory.joblist.cache.size</name>

<value>50000</value>

</property>

<!-- Directories on HDFS; they are created with hdfs dfs -mkdir after HDFS is started -->

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/user/hadoop/done</value>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/user/hadoop/tmp</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

vim /etc/hadoop/conf/yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<description>List of directories to store localized files in.</description>

<name>yarn.nodemanager.local-dirs</name>

<value>/var/lib/hadoop-yarn/cache/${user.name}/nm-local-dir</value>

</property>

<property>

<description>Where to store container logs.</description>

<name>yarn.nodemanager.log-dirs</name>

<value>/var/log/hadoop-yarn/containers</value>

</property>

<property>

<description>Where to aggregate logs to.</description>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>hdfs://dev10.aoiplus.openpf:9000/var/log/hadoop-yarn/apps</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>dev10.aoiplus.openpf:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>dev10.aoiplus.openpf:8030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>dev10.aoiplus.openpf:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>dev10.aoiplus.openpf:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>dev10.aoiplus.openpf:8033</value>

</property>

<property>

<description>Classpath for typical applications.</description>

<name>yarn.application.classpath</name>

<value>

$HADOOP_CONF_DIR,

$HADOOP_COMMON_HOME/*,

$HADOOP_COMMON_HOME/lib/*,

$HADOOP_HDFS_HOME/*,

$HADOOP_HDFS_HOME/lib/*,

$HADOOP_MAPRED_HOME/*,

$HADOOP_MAPRED_HOME/lib/*,

$HADOOP_YARN_HOME/*,

$HADOOP_YARN_HOME/lib/*

</value>

</property>

<property>

<name>yarn.web-proxy.address</name>

<value>dev10.aoiplus.openpf:54315</value>

</property>

vim /etc/hadoop/conf/slaves

dev05.aoiplus.openpf

dev06.aoiplus.openpf

Copy the master configuration files edited above to the slaves

scp -r /etc/hadoop/conf root@dev05.aoiplus.openpf:/etc/hadoop/

scp -r /etc/hadoop/conf root@dev06.aoiplus.openpf:/etc/hadoop/

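Start the services

On the NameNode (format as the hdfs user so the metadata directory stays owned by hdfs)

sudo -u hdfs hdfs namenode -format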

service hadoop-hdfs-namenode init

service hadoop-hdfs-namenode start

service hadoop-yarn-resourcemanager start

service hadoop-yarn-proxyserver start

service hadoop-mapreduce-historyserver start

On the DataNodes

service hadoop-hdfs-datanode start

service hadoop-yarn-nodemanager start

Check in a browser

http://192.168.13.74:50070 HDFS

http://192.168.13.74:8088 ResourceManager(Yarn)

http://192.168.13.74:8088/cluster/nodes Online nodes

http://192.168.13.74:8042 NodeManager

http://192.168.13.75:8042 NodeManager

http://192.168.13.76:8042 NodeManager

http://192.168.13.74:19888/ JobHistory

Create the HDFS directories

hdfs dfs -mkdir -p /user/hadoop/{done,tmp}

sudo -u hdfs hadoop fs -chown mapred:hadoop /user/hadoop/*

hdfs dfs -mkdir -p /var/log/hadoop-yarn/apps

sudo -u hdfs hadoop fs -chown hadoop:hdfs /var/log/hadoop-yarn/apps

hdfs dfs -mkdir -p /user/hive/warehouse

sudo -u hdfs hadoop fs -chown hive /user/hive/warehouse

sudo -u hdfs hadoop fs -chmod 1777 /user/hive/warehouse

hdfs dfs -mkdir -p /tmp/hive

sudo -u hdfs hadoop fs -chmod 777 /tmp/hive

12 Install ZooKeeper

yum install zookeeper* -y

vim /etc/zookeeper/conf/zoo.cfg

#clean logs

autopurge.snapRetainCount=3

autopurge.purgeInterval=1

server.1=dev10.aoiplus.openpf:2888:3888

server.2=dev06.aoiplus.openpf:2888:3888

server.3=dev05.aoiplus.openpf:2888:3888

scp -r /etc/zookeeper/conf root@dev05.aoiplus.openpf:/etc/zookeeper/

scp -r /etc/zookeeper/conf root@dev06.aoiplus.openpf:/etc/zookeeper/

master

service zookeeper-server init --myid=1

service zookeeper-server start

slave1

service zookeeper-server init --myid=2

service zookeeper-server start

slave2

service zookeeper-server init --myid=3

service zookeeper-server start

test master

zookeeper-client -server dev10.aoiplus.openpf:2181
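Each server's myid must match its server.N line in zoo.cfg. Instead of hard-coding 1/2/3 per machine, the id can be looked up from the zoo.cfg that was copied to every node. A sketch; zk_myid is a hypothetical helper that assumes the server.N=host:port:port format used above:

```shell
#!/bin/sh
# zk_myid ZOO_CFG [HOST]
# Prints N from the "server.N=HOST:..." line for HOST (default: this host).
zk_myid() {
    awk -F'[=:]' -v h="${2:-$(hostname)}" '
        $1 ~ /^server\.[0-9]+$/ && $2 == h {
            sub(/^server\./, "", $1)   # keep only the numeric id
            print $1
        }' "$1"
}
```

Usage on each node: service zookeeper-server init --myid="$(zk_myid /etc/zookeeper/conf/zoo.cfg)"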

ZooKeeper for Windows, download address: http://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.4.6/

Rename the sample configuration file conf/zoo_sample.cfg to zoo.cfg, because ZooKeeper looks for zoo.cfg by default, then edit it:

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

#dataDir=/tmp/zookeeper

dataDir=F:\\log\\zookeeper\\data

dataLogDir=F:\\log\\zookeeper\\log

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

Start ZooKeeper

On Windows: go to the bin directory and run zkServer.cmd

13 Install MySQL

13.1 Install MySQL on CentOS 6 with yum

On all machines

yum install mysql mysql-server -y

mysql_install_db

vim /etc/my.cnf

[mysqld_safe]

socket = /var/run/mysqld/mysqld.sock

nice = 0

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

[mysqld]

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

user=mysql

tmpdir = /tmp

#lc-messages-dir = /usr/share/mysql

port = 3306

skip-external-locking

character-set-server =utf8

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

key_buffer = 64M

max_allowed_packet = 32M

table_open_cache = 4096

sort_buffer_size = 8M

read_buffer_size = 8M

read_rnd_buffer_size = 32M

myisam_sort_buffer_size = 64M

thread_stack = 192K

thread_cache_size = 16

bulk_insert_buffer_size = 64M

default-storage-engine=InnoDB

# This replaces the startup script and checks MyISAM tables if needed

# the first time they are touched

myisam-recover = BACKUP

#max_connections = 100

#table_cache = 64

#thread_concurrency = 10

#

# * Query Cache Configuration

#

#query_cache_limit = 4M

#query_cache_size = 64M

join_buffer_size = 2M

#

# * InnoDB

#

# InnoDB is enabled by default with a 10MB datafile in /var/lib/mysql/.

# Read the manual for more InnoDB related options. There are many!

#

innodb_buffer_pool_size = 1G

innodb_additional_mem_pool_size =32M

innodb_log_buffer_size = 256M

#innodb_log_file_size = 1024M

innodb_flush_log_at_trx_commit = 0

innodb_autoextend_increment =64

innodb_file_per_table =1

# * Security Features

#

# Read the manual, too, if you want chroot!

# chroot = /var/lib/mysql/

#

# For generating SSL certificates I recommend the OpenSSL GUI "tinyca".

#

# ssl-ca=/etc/mysql/cacert.pem

# ssl-cert=/etc/mysql/server-cert.pem

# ssl-key=/etc/mysql/server-key.pem

[mysqldump]

quick

quote-names

max_allowed_packet = 64M

[mysql]

socket=/var/lib/mysql/mysql.sock

[isamchk]

key_buffer = 16M

service mysqld start

mysqladmin -u root password root

mysql -uroot -proot

grant all privileges on *.* to 'root'@'%' identified by 'root';

grant all privileges on *.* to 'root'@'localhost' identified by 'root';

grant all privileges on *.* to 'root'@hostname identified by 'root';

grant all privileges on *.* to 'root'@IP identified by 'root';

flush privileges;

SET SESSION binlog_format = 'MIXED';

SET GLOBAL binlog_format = 'MIXED';

Master

mkdir -p /var/log/mysql

chmod -R 777 /var/log/mysql

vim /etc/my.cnf

server-id = 1

log_bin = /var/log/mysql/mysql-bin.log

expire_logs_days = 10

max_binlog_size = 1024M

#binlog_do_db = datebase

#binlog_ignore_db = include_database_name

Grant replication privileges to the slave server's IP (slaveIP is a placeholder)

GRANT REPLICATION SLAVE ON *.* TO 'root'@'slaveIP' IDENTIFIED BY 'root';

service mysqld restart

show master status;

Slave

vim /etc/my.cnf

server-id = 2

expire_logs_days = 10

max_binlog_size = 1024M

#replicate_do_db = mydatebase

service mysqld restart

change master to master_host='dev10.aoiplus.openpf', master_user='root', master_password='root', master_log_file='mysql-bin.000001', master_log_pos=106;

start slave;

show slave status\G
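master_log_file and master_log_pos must be the File and Position values that show master status reports on the master (mysql-bin.000001 and 106 above are one example). A sketch that builds the statement from that output; change_master_sql and master_status_to_sql are hypothetical helpers, and the credentials in the usage line are the root/root ones used above:

```shell
#!/bin/sh
# change_master_sql HOST USER PASSWORD LOG_FILE LOG_POS
change_master_sql() {
    printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%d;\n" \
        "$1" "$2" "$3" "$4" "$5"
}

# master_status_to_sql HOST USER PASSWORD
# Reads one row of `mysql -N -e 'show master status'` (File<TAB>Position...)
# from stdin and prints the matching CHANGE MASTER TO statement.
master_status_to_sql() {
    read -r log_file log_pos _rest || return 1
    change_master_sql "$1" "$2" "$3" "$log_file" "$log_pos"
}
```

Usage on the slave: mysql -h dev10.aoiplus.openpf -uroot -proot -N -e 'show master status' | master_status_to_sql dev10.aoiplus.openpf root root | mysql -uroot -proot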

13.2 Install MySQL on CentOS 6 from RPM packages

rpm -ivh mysql-community-common-5.7.17-1.el6.x86_64.rpm

rpm -ivh mysql-community-libs-5.7.17-1.el6.x86_64.rpm

rpm -ivh mysql-community-client-5.7.17-1.el6.x86_64.rpm

rpm -ivh mysql-community-server-5.7.17-1.el6.x86_64.rpm

rpm -ivh mysql-community-devel-5.7.17-1.el6.x86_64.rpm

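mysqld --user=mysql --initialize   (note the temporary root password it generates; it is printed or written to the error log, e.g. /var/log/mysqld.log)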

setenforce 0

service mysqld start

mysql -u root -p

set global validate_password_policy=0;

SET PASSWORD = PASSWORD('Aa12345');

use mysql

update user set host='%' where user='root' and host='localhost';

flush privileges;

exit

13.2 Install MySQL on CentOS 7 with yum

wget http://dev.mysql.com/get/mysql57-community-release-el7-7.noarch.rpm

yum localinstall -y mysql57-community-release-el7-7.noarch.rpm

yum install -y mysql-community-server

systemctl start mysqld.service

grep 'temporary password' /var/log/mysqld.log   (shows the temporary root password)

mysql -u root -p

If setting the password fails with "Your password does not satisfy the current policy requirements", lower the password policy:

set global validate_password_policy=0;

If the same error persists, check the minimum password length and choose a password at least that long:

select @@validate_password_length;

SET PASSWORD = PASSWORD('66666666');

use mysql

update user set host='%' where user='root' and host='localhost';

flush privileges;

exit

13.3 Install from the source tarball

wget https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-5.7.18.tar.gz

tar xf mysql-5.7.18.tar.gz

mv mysql-5.7.18 mysql

wget https://jaist.dl.sourceforge.net/project/boost/boost/1.59.0/boost_1_59_0.tar.gz

cmake . -DCMAKE_INSTALL_PREFIX=/usr/local/mysql \

-DMYSQL_UNIX_ADDR=/usr/local/mysql/mysql.sock \

-DDEFAULT_CHARSET=utf8 \

-DDEFAULT_COLLATION=utf8_general_ci \

-DWITH_INNOBASE_STORAGE_ENGINE=1 \

-DWITH_ARCHIVE_STORAGE_ENGINE=1 \

-DWITH_BLACKHOLE_STORAGE_ENGINE=1 \

-DMYSQL_DATADIR=/usr/local/mysql/data \

-DMYSQL_TCP_PORT=3306 \

-DWITH_BOOST=/usr/local/boost_1_59_0 \

-DENABLE_DOWNLOADS=1 \

-DCURSES_INCLUDE_PATH=/usr/include \

-DCURSES_LIBRARY=/usr/lib64/libncurses.so

make && make install

cd /usr/local/mysql/bin

./mysqld --initialize --user=mysql --datadir=/usr/local/mysql/data/ --basedir=/usr/local/mysql --socket=/usr/local/mysql/mysql.sock

cp -a /usr/local/mysql/support-files/mysql.server /etc/init.d/mysql

vim /etc/my.cnf

[mysqld]

sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES

service mysql start

SET PASSWORD = PASSWORD('66666666');

use mysql

update user set host='%' where user='root' and host='localhost';

flush privileges;

exit

14 Install Hive

namenode

yum install hive hive-metastore hive-server2 hive-jdbc hive-hbase -y

datanode

yum install hive hive-server2 hive-jdbc hive-hbase -y

Configure the metastore database (in MySQL)

CREATE DATABASE metastore;

USE metastore;

SOURCE /usr/lib/hive/scripts/metastore/upgrade/mysql/hive-schema-1.1.0.mysql.sql;

CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';

GRANT ALL PRIVILEGES ON metastore.* TO 'hive'@'localhost' IDENTIFIED BY 'hive';

GRANT ALL PRIVILEGES ON metastore.* TO 'hive'@'dev10.aoiplus.openpf' IDENTIFIED BY 'hive';

FLUSH PRIVILEGES;

vim /etc/hive/conf/hive-site.xml

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://traceMaster:3306/metastore?useUnicode=true&amp;characterEncoding=UTF-8</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>

<property>

<name>datanucleus.autoCreateSchema</name>

<value>false</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>traceMaster:8031</value>

</property>

<property>

<name>hive.files.umask.value</name>

<value>0002</value>

</property>

<property>

<name>hive.exec.reducers.max</name>

<value>999</value>

</property>

<property>

<name>hive.auto.convert.join</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.warehouse.subdir.inherit.perms</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://traceMaster:9083</value>

</property>

<property>

<name>hive.metastore.server.min.threads</name>

<value>200</value>

</property>

<property>

<name>hive.metastore.server.max.threads</name>

<value>100000</value>

</property>

<property>

<name>hive.metastore.client.socket.timeout</name>

<value>3600</value>

</property>

<property>

<name>hive.support.concurrency</name>

<value>true</value>

</property>

<property>

<name>hive.zookeeper.quorum</name>

<value>traceMaster,traceSlave1,traceSlave2</value>

</property>

<!-- Minimum number of worker threads, default 5 -->

<property>

<name>hive.server2.thrift.min.worker.threads</name>

<value>5</value>

</property>

<!-- Maximum number of worker threads, default 500 -->

<property>

<name>hive.server2.thrift.max.worker.threads</name>

<value>500</value>

</property>

<!-- TCP listening port, default 10000 -->

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<!-- Host the TCP listener binds to, default localhost -->

<property>

<name>hive.server2.thrift.bind.host</name>

<value>traceMaster</value>

</property>

<property>

<name>hive.server2.enable.impersonation</name>

<value>true</value>

</property>

vim /etc/hadoop/conf/core-site.xml

<property>

<name>hadoop.proxyuser.hive.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hive.groups</name>

<value>*</value>

</property>

Start the services

service hive-metastore start

service hive-server start

service hive-server2 start

test

create database trace;

use trace;

create table app (appid int, appname string);

select * from app;

Connect with Beeline

beeline

beeline> !connect jdbc:hive2://traceMaster:10000 hive hive org.apache.hive.jdbc.HiveDriver

(the two "hive" arguments are the user name and the password)

beeline> !q   (quit)

15 Install Spark

vim /etc/profile

export SPARK_HOME=/usr/lib/spark

master

vim /etc/spark/conf/spark-env.sh

export STANDALONE_SPARK_MASTER_HOST=dev10.aoiplus.openpf

export SPARK_MASTER_IP=$STANDALONE_SPARK_MASTER_HOST

export SCALA_LIBRARY_PATH=${SPARK_HOME}/lib

export HIVE_CONF_DIR=${HIVE_CONF_DIR:-/etc/hive/conf}

export SPARK_DAEMON_MEMORY=256m

scp -r /etc/spark/conf root@dev05.aoiplus.openpf:/etc/spark/

scp -r /etc/spark/conf root@dev06.aoiplus.openpf:/etc/spark/

sudo -u hdfs hadoop fs -mkdir /user/spark

sudo -u hdfs hadoop fs -mkdir /user/spark/applicationHistory

sudo -u hdfs hadoop fs -chown -R spark:spark /user/spark

sudo -u hdfs hadoop fs -chmod 1777 /user/spark/applicationHistory

cp /etc/spark/conf/spark-defaults.conf.template /etc/spark/conf/spark-defaults.conf

vim /etc/spark/conf/spark-defaults.conf

spark.eventLog.dir=/user/spark/applicationHistory

spark.eventLog.enabled=true

spark.yarn.historyServer.address=http://dev10.aoiplus.openpf:19888

16 Install Kafka

cd /usr/local/

wget http://mirror.bit.edu.cn/apache/kafka/0.10.0.0/kafka_2.10-0.10.0.0.tgz

tar xf kafka_2.10-0.10.0.0.tgz

ln -s /usr/local/kafka_2.10-0.10.0.0 /usr/local/kafka

chown -R hdfs:hadoop /usr/local/kafka_2.10-0.10.0.0 /usr/local/kafka

chown -R root:root /usr/local/kafka_2.10-0.10.0.0 /usr/local/kafka

vi /usr/local/kafka/config/server.properties

broker.id=0

zookeeper.connect=dev10.aoiplus.openpf:2181,dev06.aoiplus.openpf:2181,dev05.aoiplus.openpf:2181/kafka

scp -r /usr/local/kafka_2.10-0.10.0.0.tgz root@dev05.aoiplus.openpf:/usr/local/

scp -r /usr/local/kafka_2.10-0.10.0.0.tgz root@dev06.aoiplus.openpf:/usr/local/

scp -r /usr/local/kafka/config/server.properties root@dev05.aoiplus.openpf:/usr/local/kafka/config/server.properties

scp -r /usr/local/kafka/config/server.properties root@dev06.aoiplus.openpf:/usr/local/kafka/config/server.properties
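Because the same server.properties (with broker.id=0) is copied to every machine, each slave's broker.id has to be changed afterwards; a second broker that tries to register an id already present in ZooKeeper fails to start. A sketch, assuming GNU sed; set_broker_id and zk_connect are hypothetical helpers (zk_connect rebuilds the zookeeper.connect string used above):

```shell
#!/bin/sh
# set_broker_id PROPERTIES_FILE ID: rewrite the broker.id line in place.
set_broker_id() {
    sed -i "s/^broker\.id=.*/broker.id=$2/" "$1"
}

# zk_connect CHROOT HOST...: build "h1:2181,h2:2181,.../chroot".
zk_connect() {
    chroot=$1
    shift
    list=''
    for h in "$@"; do
        list="${list:+$list,}$h:2181"   # add a comma only after the first host
    done
    printf '%s%s\n' "$list" "$chroot"
}
```

Usage: on dev05 run set_broker_id /usr/local/kafka/config/server.properties 1, on dev06 the same with 2.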

Start Kafka on the master and on every slave

/usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties &

Create and test a topic

/usr/local/kafka/bin/kafka-topics.sh --create --zookeeper dev10.aoiplus.openpf:2181,dev06.aoiplus.openpf:2181,dev05.aoiplus.openpf:2181/kafka --replication-factor 3 --partitions 5 --topic baoy-topic

/usr/local/kafka/bin/kafka-topics.sh --describe --zookeeper dev10.aoiplus.openpf:2181,dev06.aoiplus.openpf:2181,dev05.aoiplus.openpf:2181/kafka --topic baoy-topic

/usr/local/kafka/bin/kafka-console-producer.sh --broker-list dev10.aoiplus.openpf:9092,dev05.aoiplus.openpf:9092,dev06.aoiplus.openpf:9092 --topic baoy-topic

/usr/local/kafka/bin/kafka-console-consumer.sh --zookeeper dev10.aoiplus.openpf:2181,dev05.aoiplus.openpf:2181,dev06.aoiplus.openpf:2181/kafka --from-beginning --topic baoy-topic

17 Install Storm

cd /usr/local/

wget http://mirrors.cnnic.cn/apache/storm/apache-storm-0.10.0/apache-storm-0.10.0.tar.gz

tar xf apache-storm-0.10.0.tar.gz

ln -s /usr/local/apache-storm-0.10.0 /usr/local/storm

chown -R storm:storm /usr/local/apache-storm-0.10.0 /usr/local/storm

chown -R root:root /usr/local/apache-storm-0.10.0 /usr/local/storm

mkdir -p /tmp/storm/data/

cd storm

vim conf/storm.yaml

storm.zookeeper.servers:

- "dev10.aoiplus.openpf"

- "dev05.aoiplus.openpf"

- "dev06.aoiplus.openpf"

storm.zookeeper.port: 2181

nimbus.host: "dev10.aoiplus.openpf"

supervisor.slots.ports:

- 6700

- 6701

- 6702

- 6703

storm.local.dir: "/tmp/storm/data"

scp -r /usr/local/storm/conf/storm.yaml root@dev06.aoiplus.openpf:/usr/local/storm/conf/

scp -r /usr/local/storm/conf/storm.yaml root@dev05.aoiplus.openpf:/usr/local/storm/conf/

Start

master

/usr/local/storm/bin/storm nimbus >/dev/null 2>&1 &

/usr/local/storm/bin/storm ui >/dev/null 2>&1 &

slaves

/usr/local/storm/bin/storm supervisor >/dev/null 2>&1 &

Check (Storm UI, default port 8080)

http://dev10.aoiplus.openpf:8080/index.html

cp /usr/local/kafka/libs/kafka_2.10-0.10.0.0.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/scala-library-2.10.6.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/metrics-core-2.2.0.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/snappy-java-1.1.2.4.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/zkclient-0.8.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/log4j-1.2.17.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/slf4j-api-1.7.21.jar /usr/local/storm/lib/

cp /usr/local/kafka/libs/jopt-simple-3.2.jar /usr/local/storm/lib/

/usr/local/storm/bin/storm jar /home/baoy/soft/storm/KafkaStormJavaDemo_main_start.jar com.curiousby.baoyou.cn.storm.TerminalInfosAnalysisTopology "terminalInfosAnalysisTopology"

mkdir -p /home/baoy/soft/storm/logs

chmod -R 777 /home/baoy/soft/storm/logs

cd /usr/local/storm/log4j2/

vim cluster.xml

<property name="logpath">/home/baoy/soft/storm</property>

Stop the topology

/usr/local/storm/bin/storm kill terminalInfosAnalysisTopology

18 Install Redis

cd /home/baoy/package/

scp -r /home/baoy/package/redis-2.6.17.tar.gz root@dev05.aoiplus.openpf:/home/baoy/package/

scp -r /home/baoy/package/redis-2.6.17.tar.gz root@dev06.aoiplus.openpf:/home/baoy/package/

tar xf redis-2.6.17.tar.gz

mv redis-2.6.17 ../soft/redis-2.6.17

cd ../soft/

ln -s /home/baoy/soft/redis-2.6.17 /home/baoy/soft/redis

chown -R root:root /home/baoy/soft/redis-2.6.17 /home/baoy/soft/redis

cd redis

yum install gcc

make   (if the build fails, run make MALLOC=libc instead)

sudo make install

Configuration

master

vim redis.conf

appendonly yes

daemonize yes

slave

vim redis.conf

# master IP and port

slaveof 172.23.27.120 6379

appendonly yes

daemonize yes

Start

master

redis-server redis.conf

slave

redis-server redis.conf
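To confirm the slave is actually replicating, look at master_link_status in the output of redis-cli info replication on the slave; it should read up. A sketch of a parser; redis_link_status is a hypothetical helper, and since INFO output uses \r\n line endings, carriage returns are stripped first:

```shell
#!/bin/sh
# redis_link_status: read `redis-cli info replication` output on stdin and
# print the value of master_link_status (empty on a master).
redis_link_status() {
    tr -d '\r' | awk -F: '$1 == "master_link_status" { print $2 }'
}
```

Usage from any host: redis-cli -h 172.23.27.115 -p 6379 info replication | redis_link_status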

19 Install Docker

Method 1

yum -y install http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

sudo yum update

yum install docker-io

docker version

service docker start

service docker stop

chkconfig docker on

docker pull centos:latest

Method 2

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum makecache fast

yum install docker-ce

systemctl start docker

docker -v

docker version

docker images

docker pull hello-world

docker run hello-world

docker pull centos:latest

docker pull hub.c.163.com/library/tomcat:latest

docker pull hub.c.163.com/library/nginx:latest

docker ps   # list running containers

# Run a container in the background (detached): docker run -d

docker run -d hub.c.163.com/library/nginx:latest

# Open a shell inside a running container

docker exec --help

docker exec -it [containerid] bash

# Map host port 8080 to container port 80

docker run -d -p 8080:80 hub.c.163.com/library/nginx:latest

netstat -na | grep 8080

# Stop a container by its ID

docker stop [containerid]

# Build your own image

docker build -t jpress:latest .

Dockerfile

FROM hub.c.163.com/library/tomcat

MAINTAINER baoyou curiousby@163.com

COPY jenkins.war /usr/local/tomcat/webapps

docker build -t jenkins:latest .

docker run -d -p 8080:8080 jenkins:latest

20 Install Flume

tar -xf flume-ng-1.6.0-cdh5.5.0.tar.gz

mv apache-flume-1.6.0-cdh5.5.0-bin ../soft/flume-1.6.0

cd ../soft/

ln -s /home/baoy/soft/flume-1.6.0 /home/baoy/soft/flume

chown -R root:root /home/baoy/soft/flume-1.6.0 /home/baoy/soft/flume

cd /home/baoy/soft/flume

cd /home/baoy/soft/flume/conf

cp /home/baoy/soft/flume/conf/flume-env.sh.template /home/baoy/soft/flume/conf/flume-env.sh

vim flume-env.sh

export JAVA_HOME=/usr/java/jdk1.7.0_79

/home/baoy/soft/flume/bin/flume-ng version

cp /usr/local/kafka/libs/kafka_2.10-0.10.0.0.jar /home/baoy/soft/flume/lib/

cp /usr/local/kafka/libs/scala-library-2.10.6.jar /home/baoy/soft/flume/lib/

cp /usr/local/kafka/libs/metrics-core-2.2.0.jar /home/baoy/soft/flume/lib/

vim /home/baoy/soft/flume/conf/exec_tail_kafka.conf

agent.sources = s1

agent.sinks = k1

agent.channels = c1

agent.sources.s1.type=exec

agent.sources.s1.command=tail -F /home/project/flume/logs/flume.log

agent.sources.s1.channels=c1

agent.channels.c1.type=memory

agent.channels.c1.capacity=10000

agent.channels.c1.transactionCapacity=100

# Kafka sink

agent.sinks.k1.type= org.apache.flume.sink.kafka.KafkaSink

# Kafka broker address and port

agent.sinks.k1.brokerList=dev10.aoiplus.openpf:9092

# Kafka topic

agent.sinks.k1.topic=baoy-topic

# Serializer

agent.sinks.k1.serializer.class=kafka.serializer.StringEncoder

agent.sinks.k1.channel=c1

/home/baoy/soft/flume/bin/flume-ng agent -n agent -c conf -f /home/baoy/soft/flume/conf/exec_tail_kafka.conf -Dflume.root.logger=INFO,console

21 Install Git

21. Git server setup on CentOS with yum

21.1 Install the server

yum install -y git

git --version   (verify the installation)

21.2 Install the client: download Git for Windows from https://git-for-windows.github.io/

git --version

21.3 Create the git user

[root@localhost home]# id git

[root@localhost home]# useradd git

[root@localhost home]# passwd git

21.4 Create a Git repository on the server

Initialize /home/data/git/gittest.git as a bare Git repository, then change its owner to git:

[root@localhost home]# mkdir -p data/git/gittest.git

[root@localhost home]# git init --bare data/git/gittest.git

Initialized empty Git repository in /home/data/git/gittest.git/

[root@localhost home]# cd data/git/

[root@localhost git]# chown -R git:git gittest.git/

21.5 Clone the remote repository on the client

Open Git Bash, create a project directory (here I:\gitrespository) and change into it:

git clone git@172.23.27.113:/home/data/git/gittest.git

The authenticity of host '192.168.56.101 (192.168.56.101)' can't be established.

RSA key fingerprint is SHA256:Ve6WV/SCA059EqoUOzbFoZdfmMh3B259nigfmvdadqQ.

Are you sure you want to continue connecting (yes/no)?

Answer yes:

Warning: Permanently added '192.168.56.101' (RSA) to the list of known hosts.

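Generate an RSA key pair on the client (the public key is written to ~/.ssh/id_rsa.pub, which is used in 21.7):

ssh-keygen -t rsa -C "curiousby@163.com"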

21.6 Enable RSA public-key authentication on the Git server

Go to /etc/ssh, edit sshd_config and uncomment the following three settings:

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

Save the file and restart sshd:

[root@localhost ssh]# /etc/rc.d/init.d/sshd restart

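AuthorizedKeysFile shows that public keys are read from .ssh/authorized_keys relative to the user's home directory, i.e. $HOME/.ssh/authorized_keys. Since the Git service runs as the git user, the keys actually go in /home/git/.ssh/authorized_keys.

Create the .ssh directory under /home/git/: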

[root@localhost git]# pwd

/home/git

[root@localhost git]# mkdir .ssh

[root@localhost git]# chown -R git:git .ssh

[root@localhost git]# ll -a

total 32

drwx------. 5 git git 4096 Aug 28 20:04 .

drwxr-xr-x. 8 root root 4096 Aug 28 19:32 ..

-rw-r--r--. 1 git git 18 Oct 16 2014 .bash_logout

-rw-r--r--. 1 git git 176 Oct 16 2014 .bash_profile

-rw-r--r--. 1 git git 124 Oct 16 2014 .bashrc

drwxr-xr-x. 2 git git 4096 Nov 12 2010 .gnome2

drwxr-xr-x. 4 git git 4096 May 8 12:22 .mozilla

drwxr-xr-x. 2 git git 4096 Aug 28 20:08 .ssh

21.7 Import the client's public key into /home/git/.ssh/authorized_keys on the server

Back in Git Bash, append the public key to that file:

$ ssh git@172.23.27.113 'cat >> .ssh/authorized_keys' < ~/.ssh/id_rsa.pub

Enter the password of the git user on the server when prompted.

21.8 Back on the server, check that authorized_keys now exists under .ssh

Set the permissions of the .ssh directory to 700 and of .ssh/authorized_keys to 600:

[root@localhost git]# chmod 700 .ssh

[root@localhost git]# cd .ssh

[root@localhost .ssh]# chmod 600 authorized_keys
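sshd's StrictModes check (enabled by default) ignores authorized_keys when the file or the .ssh directory is writable by group or others; 700/600 is the conventional strict setting. A minimal sketch that combines 21.7 and 21.8 on the server side, assuming the client's id_rsa.pub has already been copied to the server as a file and GNU coreutils are available; `add_authorized_key` is a hypothetical helper that adds each key only once, so it can be re-run safely:

```shell
#!/usr/bin/env bash
# add_authorized_key HOME_DIR PUBKEY_FILE [OWNER]
# Hypothetical helper: appends the first line of PUBKEY_FILE to
# HOME_DIR/.ssh/authorized_keys (only if not already present) and applies
# the 700/600 permissions from step 21.8.
add_authorized_key() {
  local home=$1 pubkey=$2 owner=${3:-}
  local dir="$home/.ssh" keys="$home/.ssh/authorized_keys" key
  key=$(head -n 1 "$pubkey") || return 1
  [ -n "$key" ] || { echo "empty key file: $pubkey" >&2; return 1; }
  mkdir -p "$dir" || return 1
  touch "$keys" || return 1
  # -x: match the whole line, -F: fixed string, so each key is added once
  grep -qxF -- "$key" "$keys" || printf '%s\n' "$key" >> "$keys"
  chmod 700 "$dir"
  chmod 600 "$keys"
  # when run as root for another account (e.g. git), hand the files over
  if [ -n "$owner" ]; then chown -R "$owner:$owner" "$dir"; fi
}
```

Run as root, for example `add_authorized_key /home/git /tmp/id_rsa.pub git` (the /tmp path is only an illustration).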

21.9 Clone the remote repository from the client again

git clone git@172.23.27.113:/home/data/git/gittest.git

The clone now succeeds without asking for a password.

22 Maven installation


cd /home/baoy/package/

tar -xf apache-maven-3.2.3-bin.tar.gz

mv apache-maven-3.2.3 ../soft/

cd /home/baoy/soft/

cd apache-maven-3.2.3/

pwd

/home/baoy/soft/apache-maven-3.2.3

Add the Maven settings to /etc/profile:

vim /etc/profile

#maven

export MAVEN_HOME=/home/baoy/soft/apache-maven-3.2.3

export PATH=${PATH}:${MAVEN_HOME}/bin

source /etc/profile

mvn -v
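The Maven variables belong in /etc/profile (like the JDK settings earlier), and repeating the step would append them a second time. A minimal sketch of an idempotent append, assuming GNU grep; `append_once` is a hypothetical helper, and the target file is a parameter so it can be tried on a scratch copy before touching /etc/profile:

```shell
#!/usr/bin/env bash
# append_once FILE LINE...
# Hypothetical helper: appends each LINE to FILE unless an identical line exists.
append_once() {
  local file=$1 line; shift
  touch "$file" || return 1
  for line in "$@"; do
    # whole-line, fixed-string match; "--" protects lines starting with "-"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
  done
}

# Example (as root), using the Maven settings from section 22:
# append_once /etc/profile '#maven' \
#   'export MAVEN_HOME=/home/baoy/soft/apache-maven-3.2.3' \
#   'export PATH=${PATH}:${MAVEN_HOME}/bin'
# source /etc/profile && mvn -v
```

The single quotes in the example keep `${PATH}` and `${MAVEN_HOME}` literal, so they are expanded when /etc/profile is sourced rather than when the line is written.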

23 Nexus installation


cd /home/baoy/package/

tar -xf nexus-2.11.4-01-bundle.tar.gz

mv nexus-2.11.4-01 ../soft/

cd ../soft/nexus-2.11.4-01/bin/

Nexus 2 refuses to start as root unless RUN_AS_USER is set, so edit the start script:

vim nexus

RUN_AS_USER=root

sh nexus start

Access:

http://172.23.27.120:8081/nexus/

Username: admin, default password: admin123

Public repository group:

http://172.23.27.120:8081/nexus/content/groups/public/

24 SVN installation


cd /home/baoy/soft/

mkdir svn

cd svn

24.1 Install APR

Download: http://apr.apache.org/

cd /home/baoy/package

tar -xf apr-1.5.2.tar.gz

mv apr-1.5.2 ../soft/svn/

cd /home/baoy/soft/svn/apr-1.5.2

./configure

make && make install

24.2 Install apr-util

Download: http://apr.apache.org/

cd /home/baoy/package

tar -xf apr-util-1.5.4.tar.gz

mv apr-util-1.5.4 ../soft/svn/

cd /home/baoy/soft/svn/apr-util-1.5.4

./configure --with-apr=/home/baoy/soft/svn/apr-1.5.2

make && make install

24.3 Install zlib

Download: http://www.zlib.net/

cd /home/baoy/package

tar -xf zlib-1.2.11.tar.gz

mv zlib-1.2.11 ../soft/svn/

cd /home/baoy/soft/svn/zlib-1.2.11

./configure

make && make install

24.4 Install OpenSSL

Download: http://www.openssl.org/

cd /home/baoy/package

tar -xf openssl-1.0.0e.tar.gz

mv openssl-1.0.0e ../soft/svn/

cd /home/baoy/soft/svn/openssl-1.0.0e

./config

make && make install

24.5 Install expat

Download: http://sourceforge.net/projects/expat/files/expat/2.1.0/

cd /home/baoy/package

tar -xf expat-2.1.0.tar.gz

mv expat-2.1.0 ../soft/svn/

cd /home/baoy/soft/svn/expat-2.1.0

./configure

make && make install

24.6 Install serf

Download: http://download.csdn.net/detail/attagain/8071513

cd /home/baoy/package

tar xjvf serf-1.2.1.tar.bz2

mv serf-1.2.1 ../soft/svn/

cd /home/baoy/soft/svn/serf-1.2.1

./configure --with-apr=/home/baoy/soft/svn/apr-1.5.2 \
--with-apr-util=/home/baoy/soft/svn/apr-util-1.5.4

make && make install

24.7 Install sqlite-amalgamation

Download: http://www.sqlite.org/download.html

cd /home/baoy/package

unzip sqlite-amalgamation-3170000.zip

cd /home/baoy/package

tar -xf subversion-1.8.17.tar.gz

mv subversion-1.8.17 ../soft/svn/

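Subversion's configure looks for sqlite3.c in a directory named sqlite-amalgamation inside its source tree, so rename the directory while moving it:

mv sqlite-amalgamation-3170000 ../soft/svn/subversion-1.8.17/sqlite-amalgamation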

24.8 Install Subversion

Download: http://subversion.apache.org/download/

cd /home/baoy/soft/svn/subversion-1.8.17

./configure --prefix=/home/baoy/soft/svn/subversion-1.8.10 \
--with-apr=/home/baoy/soft/svn/apr-1.5.2 \
--with-apr-util=/home/baoy/soft/svn/apr-util-1.5.4 \
--with-serf=/home/baoy/soft/svn/serf-1.2.1 \
--with-openssl=/home/baoy/soft/svn/openssl-1.0.0e

make && make install

24.9 Configure the environment

vim /etc/profile

#svn

SVN_HOME=/home/baoy/soft/svn/subversion-1.8.10

export PATH=${PATH}:${SVN_HOME}/bin

source /etc/profile

Test:

svn --version

mkdir -p /data/svn/repos

svnadmin create /data/svn/repos

vim /data/svn/repos/conf/svnserve.conf

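[general]

# deny access to unauthenticated users
anon-access = none

# give authenticated users write access
auth-access = write

# path to the password file
password-db = /data/svn/repos/conf/pwd.conf

# access-control file
authz-db = /data/svn/repos/conf/authz.conf

# authentication realm: shown in the authentication prompt and used as the key for the credential cache
realm = /data/svn/repos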

vim /data/svn/repos/conf/pwd.conf

[users]

baoyou=123456

vim /data/svn/repos/conf/authz.conf

[/]

baoyou = rw

The source build above kept running into problems; installing Subversion with yum is simpler:

yum install -y subversion

mkdir -p /data/svn/

svnadmin create /data/svn/repo

Edit the configuration:

vim /data/svn/repo/conf/svnserve.conf

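[general]

# permission for anonymous users: read, write or none (default: read)
anon-access=none

# give authenticated users write access
auth-access=write

# password database (a relative path is resolved against the conf directory)
password-db=passwd

# access-control file
authz-db=authz

# authentication realm: shown in the authentication prompt and used as the key for the credential cache
realm=/data/svn/repo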

vim /data/svn/repo/conf/passwd

[users]

baoyou=123456

vim /data/svn/repo/conf/authz

[/]

baoyou = rw
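The three files edited above can also be written non-interactively. A sketch, assuming the repository has already been created with svnadmin create; `write_svnserve_conf` is a hypothetical helper, the comments are kept on their own lines, and the user name and password are parameters rather than the values shown above:

```shell
#!/usr/bin/env bash
# write_svnserve_conf REPO_DIR USER PASSWORD
# Hypothetical helper: writes conf/svnserve.conf, conf/passwd and conf/authz
# for a single user with read-write access to the whole repository.
write_svnserve_conf() {
  local repo=$1 user=$2 pass=$3 conf="$1/conf"
  mkdir -p "$conf" || return 1
  cat > "$conf/svnserve.conf" <<EOF
[general]
# no anonymous access; authenticated users may write
anon-access = none
auth-access = write
# relative paths are resolved against this conf/ directory
password-db = passwd
authz-db = authz
realm = $repo
EOF
  cat > "$conf/passwd" <<EOF
[users]
$user = $pass
EOF
  # [/] is the repository root, i.e. the whole repository
  cat > "$conf/authz" <<EOF
[/]
$user = rw
EOF
}
```

For example, `write_svnserve_conf /data/svn/repo baoyou 'your-password'` followed by `svnserve -d -r /data/svn/repo --listen-port=3690`.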

Check whether svnserve is already running, then start it:

ps -ef|grep svn

svnserve -d -r /data/svn/repo --listen-port=3690

Open the port:

/sbin/iptables -I INPUT -p tcp --dport 3690 -j ACCEPT

/etc/rc.d/init.d/iptables save

/etc/init.d/iptables restart

/etc/init.d/iptables status

Access:

svn://172.23.27.120/

25 Jenkins installation


Upload Tomcat:

cd /home/baoy/package

unzip apache-tomcat-7.0.54.zip

mv apache-tomcat-7.0.54 ../soft/

cd /home/baoy/soft/apache-tomcat-7.0.54/

cd webapps/

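Download jenkins.war from either mirror (the first is the pinned 2.32.2 stable release, the second the latest release):

wget http://mirrors.tuna.tsinghua.edu.cn/jenkins/war-stable/2.32.2/jenkins.war

wget http://mirrors.jenkins-ci.org/war/latest/jenkins.war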

cd ../bin/

sh startup.sh

Initial admin password:

vim /root/.jenkins/secrets/initialAdminPassword

4304c3e16a884daf876358b2bd48314b

http://172.23.27.120:8082/jenkins

26 FTP installation


For installation from a jar package, see http://knight-black-bob.iteye.com/blog/2244731

The yum-based installation is described below:

yum install vsftpd

yum install ftp

Start / stop:

service vsftpd start

service vsftpd stop

Restart the service:

/etc/init.d/vsftpd restart

Edit the configuration file:

vim /etc/vsftpd/vsftpd.conf

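Uncomment the following lines (chroot_list_file only takes effect when chroot_list_enable=YES):

chroot_list_enable=YES

chroot_list_file=/etc/vsftpd/chroot_list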

Add a user:

useradd -d /home/baoy/soft/vsftp/curiousby -g ftp -s /sbin/nologin curiousby

passwd curiousby

Enter the password when prompted (this example uses baoyou81).

vim /etc/vsftpd/chroot_list

curiousby

In the Jenkins job, add a shell build step that uploads the package to the FTP server:

# Put the apk on ftp Server.

#Put apk from local to ftp server

if ping -c 3 172.23.27.120;then

echo "Ftp server works normally!"

else

echo "Ftp server is down again!"

exit 1

fi

ftp -nv <<EOC

open 172.23.27.120

user curiousby baoyou81

prompt

binary

cd /home/baoy/soft/vsftp/curiousby

cd ./${}

mkdir `date +%Y%m%d`

cd "`date +%Y%m%d`"

lcd ${PAK_PATH}

mput *.tar.gz

close

bye

EOC

echo "-> Done: Put package file successfully!"
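The Jenkins step feeds a here-document to `ftp -n`; because the delimiter EOC is unquoted, the shell expands the backquoted `date +%Y%m%d` before ftp sees it, so each build uploads into a directory named after the current day. A sketch that prints the same command list instead of running it, so it can be inspected before being piped into `ftp -nv`; `ftp_upload_script` and its parameters are hypothetical, and the remote directory is simply passed in:

```shell
#!/usr/bin/env bash
# ftp_upload_script HOST USER PASSWORD REMOTE_DIR LOCAL_DIR [PATTERN]
# Hypothetical helper: prints the ftp commands used by the Jenkins step.
# Pipe the output into "ftp -nv" to run it (-n disables auto-login,
# so the "user" command performs the login).
ftp_upload_script() {
  local host=$1 user=$2 pass=$3 remote=$4 local_dir=$5 pattern=${6:-*.tar.gz}
  local day
  day=$(date +%Y%m%d)   # one directory per day, e.g. 20170828
  cat <<EOC
open $host
user $user $pass
prompt
binary
cd $remote
mkdir $day
cd $day
lcd $local_dir
mput $pattern
close
bye
EOC
}
```

Possible usage, with FTP_PASS as a hypothetical Jenkins credential variable: `ftp_upload_script 172.23.27.120 curiousby "$FTP_PASS" /home/baoy/soft/vsftp/curiousby "$PAK_PATH" | ftp -nv`. The pattern is not glob-expanded by the shell inside the here-document; ftp's `mput` expands it in the local directory.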

27 FastDFS installation


libfastcommon download: https://github.com/happyfish100/libfastcommon.git

FastDFS download: https://github.com/happyfish100/fastdfs/releases/tag/V5.05

unzip libfastcommon-master.zip

mv libfastcommon-master ../soft

cd ../soft/libfastcommon-master

./make.sh

./make.sh install

cd /home/baoy/package

tar -xf fastdfs-5.05.tar.gz

mv fastdfs-5.05/ ../soft

cd ../soft/fastdfs-5.05/

./make.sh

./make.sh install

cd /etc/fdfs

cp tracker.conf.sample tracker.conf

mkdir -p /data/fastdfs/tracker

vim /etc/fdfs/tracker.conf

# enable this configuration file
disabled=false

bind_addr=172.23.27.120

# tracker port
port=22122

# tracker data and log directory (must exist beforehand)
base_path=/data/fastdfs/tracker

# HTTP port
http.server_port=8085
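The tracker settings can also be applied without an editor. A sketch, assuming the plain key=value layout of the FastDFS sample files (comments on their own lines) and GNU sed for `-i`; `set_conf` is a hypothetical helper that rewrites an existing uncommented key or appends the pair when the key is missing:

```shell
#!/usr/bin/env bash
# set_conf FILE KEY VALUE
# Hypothetical helper: sets KEY=VALUE in a key=value config file such as
# /etc/fdfs/tracker.conf, rewriting an existing line or appending a new one.
set_conf() {
  local file=$1 key=$2 value=$3 key_re val_esc
  # escape regex metacharacters in the key (e.g. the dot in http.server_port)
  key_re=$(printf '%s' "$key" | sed 's/[.[\*^$]/\\&/g')
  # escape characters that are special in a sed replacement delimited by |
  val_esc=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')
  if grep -q "^[[:space:]]*$key_re[[:space:]]*=" "$file"; then
    sed -i "s|^[[:space:]]*$key_re[[:space:]]*=.*|$key=$val_esc|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example: the tracker settings from above
# set_conf /etc/fdfs/tracker.conf bind_addr 172.23.27.120
# set_conf /etc/fdfs/tracker.conf base_path /data/fastdfs/tracker
# set_conf /etc/fdfs/tracker.conf http.server_port 8085
```

Escaping the key matters because keys such as http.server_port contain a dot, which would otherwise match any character.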

Start (or restart) the tracker:

fdfs_trackerd /etc/fdfs/tracker.conf restart

Check the listening port:

netstat -antp | grep trackerd

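Configure storage: create its data directory, copy the remaining sample files (-n keeps the tracker.conf edited above) and edit storage.conf:

mkdir -p /data/fastdfs/storage

cp -rn /home/baoy/soft/fastdfs-5.05/conf/* /etc/fdfs/

vim /etc/fdfs/storage.conf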

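# enable this configuration file
disabled=false

# group name; adjust as needed
group_name=group1

# storage port
port=23000

# storage log directory (must exist beforehand)
base_path=/data/fastdfs/storage

# number of storage paths; must match the number of store_pathN entries
store_path_count=1

# storage path
store_path0=/data/fastdfs/storage

# tracker server IP address and port
tracker_server=172.23.27.120:22122

# port of the HTTP service on the storage node, e.g. the port of the installed nginx
http.server_port=8085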
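store_path_count has to agree with the store_path0, store_path1, ... entries in storage.conf. A sketch of a hypothetical pre-start check (not part of FastDFS) that counts the uncommented store_pathN lines and compares the result with store_path_count:

```shell
#!/usr/bin/env bash
# check_store_paths STORAGE_CONF
# Hypothetical check: returns 0 if store_path_count equals the number of
# uncommented store_pathN= lines, otherwise prints the mismatch and returns 1.
check_store_paths() {
  local file=$1 declared actual
  declared=$(sed -n 's/^[[:space:]]*store_path_count[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$file" | tail -n 1)
  # store_path[0-9]+ does not match store_path_count (an underscore follows)
  actual=$(grep -cE '^[[:space:]]*store_path[0-9]+[[:space:]]*=' "$file")
  if [ -z "$declared" ]; then
    echo "store_path_count not set in $file" >&2
    return 1
  fi
  if [ "$declared" -ne "$actual" ]; then
    echo "store_path_count=$declared but $actual store_pathN entries" >&2
    return 1
  fi
  echo "ok: $actual store path(s)"
}
```

For example, `check_store_paths /etc/fdfs/storage.conf` before running fdfs_storaged.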

Start the storage daemon:

fdfs_storaged /etc/fdfs/storage.conf restart

Check that the storage node has registered with the tracker:

fdfs_monitor /etc/fdfs/storage.conf

28 PHP installation


tar xf php-5.6.30.gz

mv php-5.6.30 ../soft/

cd ../soft/php-5.6.30/

yum install libxml2-devel

./configure --prefix=/usr/local/php --with-apxs2=/usr/local/apache/bin/apxs --with-mysql=/usr/local/mysql --with-pdo-mysql=/usr/local/mysql

make && make install

Configure Apache 2 to support PHP:

vim /etc/httpd/httpd.conf

Add:

LoadModule php5_module modules/libphp5.so

Add:

AddType application/x-httpd-php .php

AddType application/x-httpd-php-source .phps

Add index.php to DirectoryIndex:

<IfModule dir_module>

DirectoryIndex index.html index.php

</IfModule>

Test: create /usr/local/apache/htdocs/info.php with the following content:

<?php

phpinfo();

?>

http://127.60.50.180/info.php

30 Python installation


30.1 Install Python

yum groupinstall "Development tools"

yum install zlib-devel

yum install bzip2-devel

yum install openssl-devel

yum install ncurses-devel

yum install sqlite-devel

cd /opt

wget --no-check-certificate https://www.python.org/ftp/python/2.7.9/Python-2.7.9.tar.xz

tar xf Python-2.7.9.tar.xz

cd Python-2.7.9

./configure --prefix=/usr/local

make && make install

ln -s /usr/local/bin/python2.7 /usr/local/bin/python

python -V

30.2 Install setuptools

wget https://pypi.python.org/packages/source/s/setuptools/setuptools-0.6c11.tar.gz#md5=7df2a529a074f613b509fb44feefe75e

tar xf setuptools-0.6c11.tar.gz

cd setuptools-0.6c11

python setup.py install

30.3 Install pip

wget "https://pypi.python.org/packages/source/p/pip/pip-1.5.4.tar.gz#md5=834b2904f92d46aaa333267fb1c922bb" --no-check-certificate

tar -xzvf pip-1.5.4.tar.gz

cd pip-1.5.4

python setup.py install

31 yum installation


31. Switch the yum repository to the 163 mirror

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-3.4.3-150.el7.centos.noarch.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-metadata-parser-1.1.4-10.el7.x86_64.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-utils-1.1.31-40.el7.noarch.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-updateonboot-1.1.31-40.el7.noarch.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-plugin-fastestmirror-1.1.31-40.el7.noarch.rpm

rpm -ivh yum-*

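The mirror also provides a CentOS 7 repo file (http://mirrors.163.com/.help/CentOS7-Base-163.repo); the steps below use the CentOS 6 file instead and pin the release number to 7: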

cd /etc/yum.repos.d/

wget http://mirrors.163.com/.help/CentOS6-Base-163.repo

vi CentOS6-Base-163.repo

Replace every occurrence of $releasever with the release number 7.
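The replacement can be done with sed instead of by hand. A sketch, assuming GNU sed and the repo file downloaded into /etc/yum.repos.d as above; `pin_releasever` is a hypothetical helper name, and a .bak copy of the original file is kept:

```shell
#!/usr/bin/env bash
# pin_releasever REPO_FILE RELEASE
# Hypothetical helper: replaces every literal $releasever in REPO_FILE with
# RELEASE, keeping the original as REPO_FILE.bak.
pin_releasever() {
  local file=$1 release=$2
  # \$ matches a literal dollar sign; the single quotes stop the shell from expanding it
  sed -i.bak 's/\$releasever/'"$release"'/g' "$file"
}

# pin_releasever /etc/yum.repos.d/CentOS6-Base-163.repo 7
```

Other yum variables such as $basearch are left untouched.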

yum clean all

yum makecache

yum -y update

yum groupinstall "Development tools"

32 LNMP installation


32. lnmp installation (Linux + Apache + MySQL + PHP; with Apache this is strictly a LAMP stack)

32.1 Install Apache

wget http://mirrors.hust.edu.cn/apache//apr/apr-1.5.2.tar.gz

wget http://mirrors.hust.edu.cn/apache//apr/apr-util-1.5.4.tar.gz

wget http://mirrors.hust.edu.cn/apache//httpd/httpd-2.2.32.tar.gz

yum -y install pcre-devel

If configure fails with "No recognized SSL/TLS toolkit detected", install OpenSSL and its headers:

yum install openssl openssl-devel

Build APR (in the extracted apr-1.5.2 directory):

./configure --prefix=/usr/local/apr

make && make install

Build apr-util (in the extracted apr-util-1.5.4 directory):

./configure --prefix=/usr/local/apr-util --with-apr=/usr/local/apr

make && make install

Build httpd (in the extracted httpd source directory):

./configure --prefix=/usr/local/apache --sysconfdir=/etc/httpd --enable-so --enable-rewrite --enable-ssl --enable-cgi --enable-cgid --enable-modules=most --enable-mods-shared=most --enable-mpms-shared=all --with-apr=/usr/local/apr --with-apr-util=/usr/local/apr-util

make && make install

vim /etc/httpd/httpd.conf

Below the ServerRoot line, add:

PidFile "/var/run/httpd.pid"

vim /etc/init.d/httpd

#!/bin/bash

#

# httpd Startup script for the Apache HTTP Server

#

# chkconfig: - 85 15

# description: Apache is a World Wide Web server. It is used to serve \
# HTML files and CGI.

# processname: httpd

# config: /etc/httpd/conf/httpd.conf

# config: /etc/sysconfig/httpd

# pidfile: /var/run/httpd.pid

# Source function library.

. /etc/rc.d/init.d/functions

if [ -f /etc/sysconfig/httpd ]; then

. /etc/sysconfig/httpd

fi

# Start httpd in the C locale by default.

HTTPD_LANG=${HTTPD_LANG-"C"}

# This will prevent initlog from swallowing up a pass-phrase prompt if

# mod_ssl needs a pass-phrase from the user.

INITLOG_ARGS=""

# Set HTTPD=/usr/sbin/httpd.worker in /etc/sysconfig/httpd to use a server

# with the thread-based "worker" MPM; BE WARNED that some modules may not

# work correctly with a thread-based MPM; notably PHP will refuse to start.

# Path to the apachectl script, server binary, and short-form for messages.

apachectl=/usr/local/apache/bin/apachectl

httpd=${HTTPD-/usr/local/apache/bin/httpd}

prog=httpd

pidfile=${PIDFILE-/var/run/httpd.pid}

lockfile=${LOCKFILE-/var/lock/subsys/httpd}

RETVAL=0

start() {

echo -n $"Starting $prog: "

LANG=$HTTPD_LANG daemon --pidfile=${pidfile} $httpd $OPTIONS

RETVAL=$?

echo

[ $RETVAL = 0 ] && touch ${lockfile}

return $RETVAL

}

stop() {

echo -n $"Stopping $prog: "

killproc -p ${pidfile} -d 10 $httpd

RETVAL=$?

echo

[ $RETVAL = 0 ] && rm -f ${lockfile} ${pidfile}

}

reload() {

echo -n $"Reloading $prog: "

if ! LANG=$HTTPD_LANG $httpd $OPTIONS -t >&/dev/null; then

RETVAL=$?

echo $"not reloading due to configuration syntax error"

failure $"not reloading $httpd due to configuration syntax error"

else

killproc -p ${pidfile} $httpd -HUP

RETVAL=$?

fi

echo

}

# See how we were called.

case "$1" in

start)

start

;;

stop)

stop

;;

status)

status -p ${pidfile} $httpd

RETVAL=$?

;;

restart)

stop

start

;;

condrestart)

if [ -f ${pidfile} ] ; then

stop

start

fi

;;

reload)

reload

;;

graceful|help|configtest|fullstatus)

$apachectl $@

RETVAL=$?

;;

*)

echo $"Usage: $prog {start|stop|restart|condrestart|reload|status|fullstatus|graceful|help|configtest}"

exit 1

esac

exit $RETVAL

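Make the script executable:

chmod +x /etc/rc.d/init.d/httpd

Register it as a service:

chkconfig --add httpd

Enable it in runlevels 3 and 5:

chkconfig --level 3 httpd on

chkconfig --level 5 httpd on

Finally, add Apache to the PATH: create /etc/profile.d/httpd.sh containing

export PATH=$PATH:/usr/local/apache/bin

and log in again for it to take effect.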

httpd -k start

httpd -k stop

/usr/local/apache/bin/apachectl start

32.2 MySQL installation (CentOS 7)

32.2.1 Install MySQL with yum

wget http://dev.mysql.com/get/mysql57-community-release-el7-7.noarch.rpm

yum localinstall -y mysql57-community-release-el7-7.noarch.rpm

yum install -y mysql-community-server

systemctl start mysqld.service

Look up the temporary root password, then log in with it (mysql -uroot -p):

grep 'temporary password' /var/log/mysqld.log

If the new password is rejected with "Your password does not satisfy the current policy requirements", lower the password policy to LOW and check the minimum length:

set global validate_password_policy=0;

select @@validate_password_length;

SET PASSWORD = PASSWORD('66666666');

use mysql

update user set host='%' where user='root' and host='localhost';

flush privileges;

exit
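With validate_password_policy=0 (LOW) only the length is checked against validate_password_length (8 by default), which is why an all-digit password such as 66666666 is then accepted; the default MEDIUM policy additionally requires at least one digit, one lowercase letter, one uppercase letter and one special character. A rough local approximation of those two rules, as a sketch only (not MySQL's own code), assuming the default counts of 1; `password_policy_ok` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# password_policy_ok PASSWORD POLICY [MIN_LENGTH]
# Hypothetical approximation of the validate_password LOW (0) and MEDIUM (1) rules.
password_policy_ok() {
  local pw=$1 policy=$2 min=${3:-8}
  [ "${#pw}" -ge "$min" ] || return 1      # LOW: length only
  [ "$policy" -eq 0 ] && return 0
  # MEDIUM: at least one digit, lowercase, uppercase and special character
  [[ $pw =~ [[:digit:]] ]] || return 1
  [[ $pw =~ [[:lower:]] ]] || return 1
  [[ $pw =~ [[:upper:]] ]] || return 1
  [[ $pw =~ [^[:alnum:]] ]] || return 1
  return 0
}
```

For example, `password_policy_ok 66666666 0` succeeds, while `password_policy_ok 66666666 1` fails.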

32.2.2 Build MySQL from source

yum groupinstall "Development tools"

yum -y install gcc* gcc-c++ ncurses* ncurses-devel* cmake* bison* libgcrypt* perl*

yum install ncurses-devel

wget https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-5.7.18.tar.gz

tar xf mysql-5.7.18.tar.gz

mv mysql-5.7.18 mysql

wget https://jaist.dl.sourceforge.net/project/boost/boost/1.59.0/boost_1_59_0.tar.gz

tar xf boost_1_59_0.tar.gz -C /usr/local

cd mysql

cmake . -DCMAKE_INSTALL_PREFIX=/usr/local/mysql \
-DMYSQL_UNIX_ADDR=/usr/local/mysql/mysql.sock \
-DDEFAULT_CHARSET=utf8 \
-DDEFAULT_COLLATION=utf8_general_ci \
-DWITH_INNOBASE_STORAGE_ENGINE=1 \
-DWITH_ARCHIVE_STORAGE_ENGINE=1 \
-DWITH_BLACKHOLE_STORAGE_ENGINE=1 \
-DMYSQL_DATADIR=/usr/local/mysql/data \
-DMYSQL_TCP_PORT=3306 \
-DWITH_BOOST=/usr/local/boost_1_59_0 \
-DENABLE_DOWNLOADS=1 \
-DCURSES_INCLUDE_PATH=/usr/include \
-DCURSES_LIBRARY=/usr/lib64/libncurses.so

make && make install

cd /usr/local/mysql/bin

./mysqld --initialize --user=mysql --datadir=/usr/local/mysql/data/ --basedir=/usr/local/mysql --socket=/usr/local/mysql/mysql.sock

cp -a /usr/local/mysql/support-files/mysql.server /etc/init.d/mysql

vim /etc/my.cnf

[mysqld]

sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES

service mysql start

SET PASSWORD = PASSWORD('66666666');

use mysql

update user set host='%' where user='root' and host='localhost';

flush privileges;

exit

33 Discuz installation


32.3 PHP installation

tar xf php-5.6.30.gz

mv php-5.6.30 ../soft/

cd ../soft/php-5.6.30/

yum install libxml2-devel

Minimal configuration (as in section 28):

./configure --prefix=/usr/local/php --with-apxs2=/usr/local/apache/bin/apxs --with-mysql=/usr/local/mysql --with-pdo-mysql=/usr/local/mysql

Or, with more extensions enabled:

./configure --prefix=/usr/local/php \
--with-apxs2=/usr/local/apache/bin/apxs \
--enable-zip --enable-calendar \
--with-mysql=/usr/local/mysql \
--with-pdo-mysql=/usr/local/mysql \
--with-curl \
--with-gd=/usr/local/gd2 \
--with-png --with-zlib \
--with-freetype \
--enable-soap \
--enable-sockets \
--with-mcrypt=/usr/local/libmcrypt \
--with-mhash \
--enable-track-vars \
--enable-ftp \
--with-openssl \
--enable-dba=shared \
--with-libxml-dir \
--with-gettext \
--enable-gd-native-ttf \
--enable-mbstring

make && make install

Configure Apache 2 to support PHP:

vim /etc/httpd/httpd.conf

Add:

LoadModule php5_module modules/libphp5.so

Add:

AddType application/x-httpd-php .php

AddType application/x-httpd-php-source .phps

Add index.php to DirectoryIndex:

<IfModule dir_module>

DirectoryIndex index.html index.php

</IfModule>

Test: create /usr/local/apache/htdocs/info.php with the following content:

<?php

phpinfo();

?>

http://172.23.24.180/info.php

33. Install Discuz

To avoid garbled characters with the GBK edition of Discuz, set PHP's default charset to GBK:

cp php.ini-development /usr/local/php/lib/php.ini

vim /usr/local/php/lib/php.ini

default_charset = "GBK"

wget http://download.comsenz.com/DiscuzX/3.2/Discuz_X3.2_SC_GBK.zip

unzip Discuz_X3.2_SC_GBK.zip -d discuz

mv discuz /usr/local/apache/htdocs/

vim /etc/httpd/httpd.conf

Add an alias for the Discuz upload directory (the forum's web root):

Alias /forum "/usr/local/apache/htdocs/discuz/upload"

<Directory "/usr/local/apache/htdocs/discuz/upload">

</Directory>

35 ELK installation (Filebeat, Elasticsearch, Logstash, Kibana)


wget -c https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/rpm/elasticsearch/2.3.3/elasticsearch-2.3.3.rpm

wget -c https://download.elastic.co/logstash/logstash/packages/centos/logstash-2.3.2-1.noarch.rpm

wget https://download.elastic.co/kibana/kibana/kibana-4.5.1-1.x86_64.rpm

wget -c https://download.elastic.co/beats/filebeat/filebeat-1.2.3-x86_64.rpm

35.0 Install Java

yum install java-1.8.0-openjdk -y

35.1 Install Elasticsearch

yum localinstall elasticsearch-2.3.3.rpm -y

systemctl daemon-reload

systemctl enable elasticsearch

systemctl start elasticsearch

systemctl status elasticsearch

systemctl status elasticsearch -l

Check the Elasticsearch installation (rpm -qc lists its configuration files):

rpm -qc elasticsearch

/etc/elasticsearch/elasticsearch.yml

/etc/elasticsearch/logging.yml

/etc/init.d/elasticsearch

/etc/sysconfig/elasticsearch

/usr/lib/sysctl.d/elasticsearch.conf

/usr/lib/systemd/system/elasticsearch.service

/usr/lib/tmpfiles.d/elasticsearch.conf

Open the Elasticsearch ports in the firewall:

firewall-cmd --permanent --add-port={9200/tcp,9300/tcp}

firewall-cmd --reload

firewall-cmd --list-all

35.2 Install Kibana

yum localinstall kibana-4.5.1-1.x86_64.rpm -y

systemctl enable kibana

systemctl start kibana

systemctl status kibana

systemctl status kibana -l

Check that Kibana is running:

netstat -nltp

firewall-cmd --permanent --add-port=5601/tcp

firewall-cmd --reload

firewall-cmd --list-all

Open http://192.168.206.130:5601/

35.3 Install Logstash

yum localinstall logstash-2.3.2-1.noarch.rpm -y

cd /etc/pki/tls/ && ls

Create a certificate:

openssl req -subj '/CN=baoyou.com/' -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt

vim /etc/logstash/conf.d/01-logstash-initial.conf

input {

beats {

port => 5000

type => "logs"

ssl => true

ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"

ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"

}

}

filter {

if [type] == "syslog-beat" {

grok {

match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }

add_field => [ "received_at", "%{@timestamp}" ]

add_field => [ "received_from", "%{host}" ]

}

geoip {

source => "clientip"

}

syslog_pri {}

date {

match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]

}

}

}

output {

elasticsearch { }

stdout { codec => rubydebug }

}
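The grok pattern in the filter splits a syslog line into syslog_timestamp, syslog_hostname, syslog_program, an optional syslog_pid and syslog_message. The same split can be approximated with sed to see what a given /var/log/secure line will produce. This is a rough sketch with simplified regular expressions (grok's SYSLOGHOST and DATA patterns are more permissive), not how Logstash evaluates the pattern; `split_syslog_line` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# split_syslog_line LINE
# Hypothetical helper: prints timestamp|host|program|pid|message, mirroring the
# grok fields; the pid field is empty when the line has no [pid], and a line
# that does not match prints nothing.
split_syslog_line() {
  printf '%s\n' "$1" | sed -nE \
    's#^([[:upper:]][[:lower:]]{2} +[0-9]{1,2} [0-9]{2}:[0-9]{2}:[0-9]{2}) ([^ ]+) ([[:alnum:]_./-]+)(\[([0-9]+)\])?: (.*)$#\1|\2|\3|\5|\6#p'
}
```

For example, `split_syslog_line 'Aug 28 20:04:01 dev10 sshd[1234]: Accepted publickey for git'` prints `Aug 28 20:04:01|dev10|sshd|1234|Accepted publickey for git`.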

Start Logstash:

systemctl start logstash

/sbin/chkconfig logstash on

Check the service:

netstat -ntlp

Open the firewall port:

firewall-cmd --permanent --add-port=5000/tcp

firewall-cmd --reload

firewall-cmd --list-all

Configure Elasticsearch:

cd /etc/elasticsearch/

mkdir es-01

mv *.yml es-01

vim elasticsearch.yml

http:
  port: 9200
network:
  host: baoyou.com
node:
  name: baoyou.com
path:
  data: /etc/elasticsearch/data/es-01

systemctl restart elasticsearch

systemctl restart logstash

35.4 Install Filebeat

yum localinstall filebeat-1.2.3-x86_64.rpm -y

cp logstash-forwarder.crt /etc/pki/tls/certs/.

cd /etc/filebeat/ && tree

vim filebeat.yml

filebeat:
  spool_size: 1024
  idle_timeout: 5s
  registry_file: .filebeat
  config_dir: /etc/filebeat/conf.d
output:
  logstash:
    hosts:
      - elk.test.com:5000
    tls:
      certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]
    enabled: true
shipper: {}
logging: {}
runoptions: {}

mkdir conf.d && cd conf.d

vim authlogs.yml

filebeat:
  prospectors:
    - paths:
        - /var/log/secure
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

vim syslogs.yml

filebeat:
  prospectors:
    - paths:
        - /var/log/messages
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

service filebeat start

chkconfig filebeat on

netstat -aulpt

Open http://192.168.206.130:5601/

36 Tomcat installation


vim /etc/rc.d/init.d/tomcat

#!/bin/bash

#

# baoyou curiousby@163.com 15010666051

# /etc/rc.d/init.d/tomcat

# init script for tomcat processes

#

# processname: tomcat

# description: tomcat is a j2se server

# chkconfig: 2345 86 16

# description: Start up the Tomcat servlet engine.

if [ -f /etc/init.d/functions ]; then

. /etc/init.d/functions

elif [ -f /etc/rc.d/init.d/functions ]; then

. /etc/rc.d/init.d/functions

else

echo -e "\atomcat: unable to locate functions lib. Cannot continue."

exit -1

fi

RETVAL=$?

CATALINA_HOME="/home/baoyou/soft/apache-tomcat-7.0.75"

case "$1" in

start)

if [ -f $CATALINA_HOME/bin/startup.sh ];

then

echo $"Starting Tomcat"

$CATALINA_HOME/bin/startup.sh

fi

;;

stop)

if [ -f $CATALINA_HOME/bin/shutdown.sh ];

then

echo $"Stopping Tomcat"

$CATALINA_HOME/bin/shutdown.sh

fi

;;

*)

echo $"Usage: $0 {start|stop}"

exit 1

;;

esac

exit $RETVAL

chmod 755 /etc/rc.d/init.d/tomcat

chkconfig --add tomcat

Set the Java and Tomcat paths near the top of catalina.sh:

vim /home/baoyou/soft/apache-tomcat-7.0.75/bin/catalina.sh

export JAVA_HOME=/home/baoyou/soft/jdk1.7.0_79

export JRE_HOME=/home/baoyou/soft/jdk1.7.0_79/jre

export CATALINA_HOME=/home/baoyou/soft/apache-tomcat-7.0.75

export CATALINA_BASE=/home/baoyou/soft/apache-tomcat-7.0.75

export CATALINA_TMPDIR=/home/baoyou/soft/apache-tomcat-7.0.75/temp

/home/baoyou/soft/apache-tomcat-7.0.75/bin/catalina.sh start

service tomcat start

service tomcat stop

37. XMPP: Openfire, Spark and Smack installation


1. Install Java

jdk-7u79-linux-x64.tar.gz

mkdir -p /usr/java

tar xf jdk-7u79-linux-x64.tar.gz -C /usr/java

vim /etc/profile

export JAVA_HOME=/usr/java/jdk1.7.0_79

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$JAVA_HOME/bin:$PATH

source /etc/profile

java -version

2. Install MySQL

wget http://dev.mysql.com/get/mysql57-community-release-el7-7.noarch.rpm

yum localinstall -y mysql57-community-release-el7-7.noarch.rpm

yum install -y mysql-community-server

systemctl start mysqld.service

Look up the temporary root password, then log in with it (mysql -uroot -p):

grep 'temporary password' /var/log/mysqld.log

If the new password is rejected with "Your password does not satisfy the current policy requirements", lower the password policy to LOW and check the minimum length:

set global validate_password_policy=0;

select @@validate_password_length;

SET PASSWORD = PASSWORD('66666666');

use mysql

update user set host='%' where user='root' and host='localhost';

flush privileges;

exit

firewall-cmd --permanent --add-port=3306/tcp

firewall-cmd --reload

firewall-cmd --list-all

mysql -uroot -p66666666

create database openfire;

use openfire ;

source openfire_mysql.sql

grant all on openfire.* to admin@'%' identified by '66666666';

flush privileges;

exit

3. Install Openfire

tar xf openfire_3_8_2.tar.gz

cp -r openfire_3_8_2 /home/baoyou/soft/openfire_3_8_2

cd /home/baoyou/soft/openfire_3_8_2

bin/openfire start

firewall-cmd --permanent --add-port=9090/tcp

firewall-cmd --reload

firewall-cmd --list-all

Access Openfire:

http://192.168.206.237:9090/

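Donate to the developer

Driven by interest, I write these things for free; they bring joy and also take sweat. I hope you like my work and can support it: if you can, chip in some money (Alipay, WeChat and a QQ group are supported); if not, your support is appreciated just the same. Thank you all.

Personal homepage: http://knight-black-bob.iteye.com/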


Thank you for your support, I will keep doing better!