1. Overview of Apache Druid

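Apache Druid is a data store and analytics system originally developed at Metamarkets. It is designed for high-performance OLAP (Online Analytical Processing) over very large datasets. At the time of writing (version 0.15.0), it is incubating at the Apache Software Foundation.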

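Apache Druid follows a Lambda architecture with two layers:

- a real-time layer (Overlord, MiddleManager);
- a batch layer (Coordinator, Historical).

The Broker node serves client queries. The Router node provides a unified API gateway in front of the Overlord, Coordinator and Broker. The system architecture is shown below: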

[Figure: Apache Druid system architecture]

2. Main Roles

Ⅰ).Overlord

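The Overlord process monitors the MiddleManager processes. It assigns ingestion tasks to them and coordinates segment publishing, which makes it the controller for data ingestion into Druid.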
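Ingestion tasks reach the Overlord over HTTP. The sketch below makes that concrete. It assumes `curl` is installed and uses the Overlord task endpoints `POST /druid/indexer/v1/task` and `GET /druid/indexer/v1/task/<id>/status`. The base URL `http://hostname:8065` matches the Overlord port configured later in this guide. The helper names `submit_task` and `task_status` and the `DRY_RUN` switch are hypothetical.

```shell
# Overlord base URL (assumed; port 8065 matches overlord/runtime.properties below).
OVERLORD="${OVERLORD:-http://hostname:8065}"

# Submit an ingestion task spec (a JSON file) to the Overlord.
# With DRY_RUN set, only print the request that would be sent.
submit_task() {
  local spec_file="$1"
  local url="${OVERLORD}/druid/indexer/v1/task"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "POST ${url} < ${spec_file}"
    return 0
  fi
  curl -sS -X POST -H 'Content-Type: application/json' \
    --data-binary "@${spec_file}" "${url}"
}

# Fetch the status of a previously submitted task by its id.
task_status() {
  local url="${OVERLORD}/druid/indexer/v1/task/$1/status"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "GET ${url}"
    return 0
  fi
  curl -sS "${url}"
}
```

For example, `submit_task wikipedia-index.json` (a hypothetical spec file) should answer with a JSON object carrying the new task id. You can then pass that id to `task_status` to follow the task.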

Ⅱ).Coordinator

The Coordinator process monitors the Historical processes. It assigns segments to specific Historical servers and keeps data balanced across all Historical nodes.
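You can ask the Coordinator how far segment assignment has progressed. The sketch below assumes `curl` and the Coordinator endpoint `GET /druid/coordinator/v1/loadstatus`, which reports, per datasource, the percentage of segments already loaded on Historicals. The base URL `http://hostname:8062` matches the Coordinator port configured later in this guide. The helper `segment_load_status` and the `DRY_RUN` switch are hypothetical.

```shell
# Coordinator base URL (assumed; port 8062 matches coordinator/runtime.properties below).
COORDINATOR="${COORDINATOR:-http://hostname:8062}"

# Ask the Coordinator what percentage of each datasource's segments is loaded
# on Historical processes. With DRY_RUN set, only print the request.
segment_load_status() {
  local url="${COORDINATOR}/druid/coordinator/v1/loadstatus"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "GET ${url}"
    return 0
  fi
  curl -sS "${url}"
}
```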

Ⅲ).MiddleManager

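The MiddleManager process ingests new data into the cluster. Through the Peon tasks it launches, it converts data from external sources into segments that Druid can read.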

Ⅳ).Broker

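The Broker process receives queries from clients and forwards them to the Historical processes and to the real-time tasks running on MiddleManagers. It then collects the results of all sub-queries, merges them and returns the final result to the client.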
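A client query to the Broker can be as simple as one HTTP POST. The sketch below rests on three assumptions:

- `curl` is installed;
- Druid SQL is enabled on the Broker (`druid.sql.enable`);
- the Broker listens on `http://hostname:8061`, the port configured later in this guide.

The query goes to `/druid/v2/sql` as a JSON body `{"query": "..."}`. The helpers `json_escape` and `druid_sql` and the `DRY_RUN` switch are hypothetical.

```shell
# Broker base URL (assumed; port 8061 matches broker/runtime.properties below).
BROKER="${BROKER:-http://hostname:8061}"

# Turn SQL text into a valid JSON string body: newlines become spaces,
# then backslashes and double quotes are escaped.
json_escape() {
  printf '%s' "$1" | tr '\n' ' ' | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# POST a SQL query to the Broker. With DRY_RUN set, only print the JSON payload.
druid_sql() {
  local payload
  payload="{\"query\":\"$(json_escape "$1")\"}"
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$payload"
    return 0
  fi
  curl -sS -X POST -H 'Content-Type: application/json' \
    --data "$payload" "${BROKER}/druid/v2/sql"
}
```

SQL identifiers are often double-quoted (for example `"__time"`), which is why the escaping step matters.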

Ⅴ).Historical

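The Historical process stores and queries historical data. It downloads the segments assigned to it by the Coordinator from deep storage and answers queries over those segments.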

Ⅵ).Router

The Router process is optional. It provides a unified API gateway for the Broker, Overlord and Coordinator. If it is not started, clients can connect to the Broker, Overlord and Coordinator directly.

3. Installation and Deployment

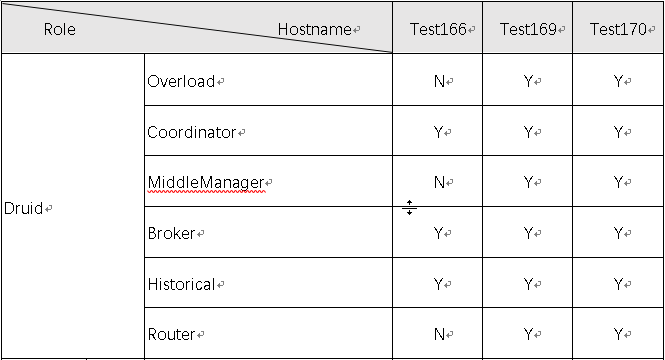

Ⅰ).Role Distribution

[Figure: role distribution]

Ⅱ).Download

Download page: https://druid.apache.org/downloads.html

Version used in this guide: apache-druid-0.15.0-incubating
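Before extracting, it is worth checking the archive against the SHA-512 digest published next to it on the download page. A minimal sketch, assuming GNU `sha512sum` is available. You copy the expected hex digest from the `.sha512` file by hand. The helper `verify_sha512` is hypothetical.

```shell
# Succeed only if FILE's SHA-512 digest equals EXPECTED (hex, any letter case).
verify_sha512() {
  local file="$1" expected actual
  expected="$(printf '%s' "$2" | tr 'A-F' 'a-f')"
  actual="$(sha512sum "$file" | cut -d' ' -f1)"
  [ "$actual" = "$expected" ]
}
```

Usage: `verify_sha512 apache-druid-0.15.0-incubating-bin.tar.gz <digest>`. A non-zero exit status means the download is corrupt or incomplete.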

Ⅲ).Extract

```shell
tar -zxvf apache-druid-0.15.0-incubating-bin.tar.gz
```

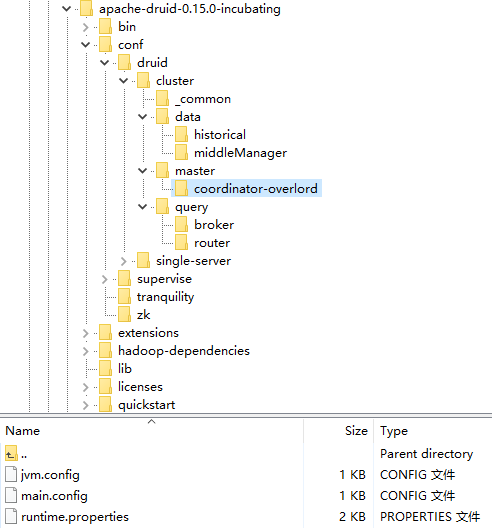

Ⅳ).Directory Layout

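| PATH | DESCRIPTION |
|---|---|
| bin | Startup scripts |
| conf | Configuration for each role |
| extensions | Extensions (plugins) |
| lib | Dependency JARs |
| log | Logs |
| quickstart | Sample data for testing |
| hadoop-dependencies | Hadoop cluster dependencies |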
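A quick sanity check after extraction is to confirm that the directories listed in the table exist. A minimal sketch; `check_druid_layout` is a hypothetical helper. It deliberately skips `log`, on the assumption that the log directory may only appear once services have run.

```shell
# Return non-zero, naming the first missing entry, if DIR does not look like
# an unpacked Druid distribution.
check_druid_layout() {
  local home="$1" d
  for d in bin conf extensions lib quickstart hadoop-dependencies; do
    if [ ! -d "$home/$d" ]; then
      echo "missing: $home/$d" >&2
      return 1
    fi
  done
}
```

Usage: `check_druid_layout apache-druid-0.15.0-incubating`.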


Ⅴ).Configuration

a)../_common/common.runtime.properties

```properties
#
# Extensions
#

# This is not the full list of Druid extensions, but common ones that people often use. You may need to change this list
# based on your particular setup.
druid.extensions.loadList=["druid-datasketches", "druid-hdfs-storage", "druid-kafka-eight", "mysql-metadata-storage", "druid-kafka-indexing-service"]

# If you have a different version of Hadoop, place your Hadoop client jar files in your hadoop-dependencies directory
# and uncomment the line below to point to your directory.
druid.extensions.hadoopDependenciesDir=/druid/druid/hadoop-dependencies/

#
# Logging
#

# Log all runtime properties on startup. Disable to avoid logging properties on startup:
druid.startup.logging.logProperties=true

#
# Zookeeper
#

druid.zk.service.host=hostname1:2181,hostname2:2181,hostname3:2181
druid.zk.paths.base=/druid

#
# Metadata storage
#

# For Derby server on your Druid Coordinator (only viable in a cluster with a single Coordinator, no fail-over):
#druid.metadata.storage.type=derby
#druid.metadata.storage.connector.connectURI=jdbc:derby://metadata.store.ip:1527/var/druid/metadata.db;create=true
#druid.metadata.storage.connector.host=metadata.store.ip
#druid.metadata.storage.connector.port=1527

# For MySQL:
druid.metadata.storage.type=mysql
druid.metadata.storage.connector.connectURI=jdbc:mysql://hostname1:3306/druid
druid.metadata.storage.connector.user=username
druid.metadata.storage.connector.password=password

# For PostgreSQL (make sure to additionally include the Postgres extension):
#druid.metadata.storage.type=postgresql
#druid.metadata.storage.connector.connectURI=jdbc:postgresql://db.example.com:5432/druid
#druid.metadata.storage.connector.user=...
#druid.metadata.storage.connector.password=...

#
# Deep storage
#

# For local disk (only viable in a cluster if this is a network mount):
#druid.storage.type=local
#druid.storage.storageDirectory=var/druid/segments

# For HDFS (make sure to include the HDFS extension and that your Hadoop config files are on the classpath):
druid.storage.type=hdfs
druid.storage.storageDirectory=/druid/segments

# For S3:
#druid.storage.type=s3
#druid.storage.bucket=your-bucket
#druid.storage.baseKey=druid/segments
#druid.s3.accessKey=...
#druid.s3.secretKey=...

#
# Indexing service logs
#

# For local disk (only viable in a cluster if this is a network mount):
#druid.indexer.logs.type=file
#druid.indexer.logs.directory=var/druid/indexing-logs

# For HDFS (make sure to include the HDFS extension and that your Hadoop config files are on the classpath):
druid.indexer.logs.type=hdfs
druid.indexer.logs.directory=/druid/indexing-logs

# For S3:
#druid.indexer.logs.type=s3
#druid.indexer.logs.s3Bucket=your-bucket
#druid.indexer.logs.s3Prefix=druid/indexing-logs

#
# Service discovery
#

druid.selectors.indexing.serviceName=druid/overlord
druid.selectors.coordinator.serviceName=druid/coordinator

#
# Monitoring
#

druid.monitoring.monitors=["org.apache.druid.java.util.metrics.JvmMonitor"]
druid.emitter=logging
druid.emitter.logging.logLevel=info

# Storage type of double columns
# omitting this will lead to index double as float at the storage layer
druid.indexing.doubleStorage=double
```
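Several keys in this file are mandatory for a cluster: ZooKeeper, metadata storage and deep storage. A small check can catch a missing or commented-out key before any service starts. A minimal sketch with hypothetical helpers `prop_get` and `require_props`. It only understands simple `key=value` lines: it skips `#` comments and ignores `:` separators and line continuations, which this file does not use.

```shell
# Print the value of KEY from a simple key=value properties file (last one wins).
# Exit non-zero if the key is absent or only appears commented out.
prop_get() {
  awk -v k="$2" '
    /^[ \t]*#/ { next }
    {
      i = index($0, "=")
      if (i == 0) next
      name = substr($0, 1, i - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name == k) val = substr($0, i + 1)
    }
    END { if (val != "") print val; else exit 1 }
  ' "$1"
}

# Report every listed key that FILE does not define; non-zero if any is missing.
require_props() {
  local file="$1" key rc=0
  shift
  for key in "$@"; do
    prop_get "$file" "$key" >/dev/null || { echo "missing: $key" >&2; rc=1; }
  done
  return $rc
}
```

Usage, assuming the file lives at `conf/druid/_common/common.runtime.properties`: `require_props conf/druid/_common/common.runtime.properties druid.zk.service.host druid.metadata.storage.type druid.storage.type`.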

b)../overlord/runtime.properties

```properties
druid.service=druid/overlord
druid.port=8065

druid.indexer.queue.startDelay=PT30S

druid.indexer.runner.type=remote
druid.indexer.storage.type=metadata
```

c)../coordinator/runtime.properties

```properties
druid.service=druid/coordinator
druid.port=8062

druid.coordinator.startDelay=PT30S
druid.coordinator.period=PT30S
```

d)../broker/runtime.properties

```properties
druid.service=druid/broker
druid.port=8061

# HTTP server threads
druid.broker.http.numConnections=5
druid.server.http.numThreads=25

# Processing threads and buffers
druid.processing.buffer.sizeBytes=536870912
druid.processing.numThreads=7

# Query cache
druid.broker.cache.useCache=true
druid.broker.cache.populateCache=true
druid.cache.type=local
druid.cache.sizeInBytes=2000000000
```
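Processing buffers live off-heap. The Druid tuning documentation sizes the direct memory a Broker or Historical needs as `druid.processing.buffer.sizeBytes * (druid.processing.numThreads + druid.processing.numMergeBuffers + 1)`. The sketch below does that arithmetic. When `numMergeBuffers` is not given, it assumes the default `max(2, numThreads / 4)`. The helper name `min_direct_memory` is hypothetical.

```shell
# Minimum direct memory in bytes: sizeBytes * (numThreads + numMergeBuffers + 1).
# Third argument (numMergeBuffers) is optional; assumed default max(2, numThreads / 4).
min_direct_memory() {
  local size_bytes="$1" num_threads="$2" merge_buffers="${3:-}"
  if [ -z "$merge_buffers" ]; then
    merge_buffers=$(( num_threads / 4 ))
    if [ "$merge_buffers" -lt 2 ]; then
      merge_buffers=2
    fi
  fi
  echo $(( size_bytes * (num_threads + merge_buffers + 1) ))
}
```

With the Broker values above (512 MiB buffers, 7 threads), `min_direct_memory 536870912 7` prints 5368709120, which is 5 GiB. The Broker's `-XX:MaxDirectMemorySize` in its `jvm.config` should therefore be at least 5g. The Historical configured below uses the same buffer settings.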

e)../middleManager/runtime.properties

```properties
druid.service=druid/middleManager
druid.port=8064

# Number of tasks per middleManager
druid.worker.capacity=100

# Task launch parameters
# Druid is only tested with the JVM default timezone set to UTC
druid.indexer.runner.javaOpts=-server -Xmx8g -Duser.timezone=UTC -Dfile.encoding=UTF-8 -Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager
druid.indexer.task.baseTaskDir=var/druid/task

# HTTP server threads
druid.server.http.numThreads=25

# Processing threads and buffers on Peons
druid.indexer.fork.property.druid.processing.buffer.sizeBytes=536870912
druid.indexer.fork.property.druid.processing.numThreads=5

# Hadoop indexing
druid.indexer.task.hadoopWorkingPath=var/druid/hadoop-tmp
druid.indexer.task.defaultHadoopCoordinates=["org.apache.hadoop:hadoop-client:2.6.0"]
```
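Each task slot on a MiddleManager runs a separate Peon JVM. `druid.worker.capacity` multiplied by the Peon `-Xmx` is therefore an upper bound on the heap these tasks can claim when every slot is busy. A minimal sketch, assuming the Peon heap is set only by the `-Xmx` flag in `druid.indexer.runner.javaOpts`. Direct memory is ignored, and the helpers `extract_xmx` and `peon_heap_upper_bound_mib` are hypothetical.

```shell
# Pull the -Xmx value (e.g. "8g") out of a javaOpts string.
extract_xmx() {
  printf '%s\n' "$1" | grep -oE -e '-Xmx[0-9]+[gGmM]' | head -n 1 | sed 's/^-Xmx//'
}

# Worst-case Peon heap in MiB: worker.capacity x per-task -Xmx.
peon_heap_upper_bound_mib() {
  local capacity="$1" xmx="$2" mib
  case "$xmx" in
    *g|*G) mib=$(( ${xmx%[gG]} * 1024 )) ;;
    *m|*M) mib=${xmx%[mM]} ;;
    *) echo "unsupported -Xmx value: $xmx" >&2; return 1 ;;
  esac
  echo $(( capacity * mib ))
}
```

For the values above, `peon_heap_upper_bound_mib 100 8g` prints 819200, about 800 GiB of heap. That is far beyond a typical single host, so `druid.worker.capacity` usually has to be sized to the machine's memory and cores.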

f)../historical/runtime.properties

```properties
druid.service=druid/historical
druid.port=8063

# HTTP server threads
druid.server.http.numThreads=25

# Processing threads and buffers
druid.processing.buffer.sizeBytes=536870912
druid.processing.numThreads=7

# Segment storage
druid.segmentCache.locations=[{"path":"var/druid/segment-cache","maxSize":130000000000}]
druid.server.maxSize=130000000000
```
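`druid.server.maxSize` tells the Coordinator how many bytes of segments it may assign to this Historical. Keeping it no larger than the total `maxSize` of all `druid.segmentCache.locations` avoids assigning more data than the local cache can hold. A minimal sketch of that check with hypothetical helpers. It extracts the `"maxSize"` numbers with `grep` rather than a real JSON parser, which is enough for the single-line value used here.

```shell
# Sum every "maxSize" value in a segmentCache.locations JSON string.
segment_cache_total() {
  printf '%s' "$1" | grep -oE '"maxSize"[[:space:]]*:[[:space:]]*[0-9]+' \
    | grep -oE '[0-9]+$' | awk '{ s += $1 } END { printf "%.0f\n", s }'
}

# Fail if druid.server.maxSize exceeds the total segment cache capacity.
check_server_max_size() {
  local locations="$1" server_max="$2" total
  total="$(segment_cache_total "$locations")"
  if [ "$server_max" -gt "$total" ]; then
    echo "druid.server.maxSize ($server_max) exceeds segment cache capacity ($total)" >&2
    return 1
  fi
}
```

With the values above, both sides are 130000000000 bytes, so the check passes.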

g)../router/runtime.properties

```properties
druid.service=druid/router
druid.plaintextPort=8888

# HTTP proxy
druid.router.http.numConnections=50
druid.router.http.readTimeout=PT5M
druid.router.http.numMaxThreads=100
druid.server.http.numThreads=100

# Service discovery
druid.router.defaultBrokerServiceName=druid/broker
druid.router.coordinatorServiceName=druid/coordinator

# Management proxy to coordinator / overlord: required for unified web console.
druid.router.managementProxy.enabled=true
```
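Each service sets its own port, using `druid.port` or `druid.plaintextPort` as in the Router above. A clash only shows up at startup on hosts that run several services. A minimal sketch that scans every `<service>/runtime.properties` under a config directory and reports duplicated ports. It assumes the one-directory-per-service layout used in this guide and file paths without `:`. `find_port_conflicts` is hypothetical.

```shell
# Print "port N: file file ..." for every port claimed by more than one
# <service>/runtime.properties under CONF_DIR; exit non-zero if any clash exists.
find_port_conflicts() {
  grep -HE '^[[:space:]]*druid\.(port|plaintextPort)[[:space:]]*=' \
      "$1"/*/runtime.properties 2>/dev/null |
    awk -F'=' '
      {
        port = $2; gsub(/[ \t\r]/, "", port)
        split($1, parts, ":")          # parts[1] is the file name from grep -H
        files[port] = files[port] " " parts[1]
        count[port]++
      }
      END {
        bad = 0
        for (p in count) if (count[p] > 1) { print "port " p ":" files[p]; bad = 1 }
        exit bad
      }'
}
```

Usage, assuming the role directories sit under `conf/druid`: `find_port_conflicts conf/druid`.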

Ⅵ).Start the Services

```shell
## start broker
./bin/broker.sh start

## start coordinator
./bin/coordinator.sh start

## start historical
./bin/historical.sh start

## start middleManager
./bin/middleManager.sh start

## start overlord
./bin/overlord.sh start
```

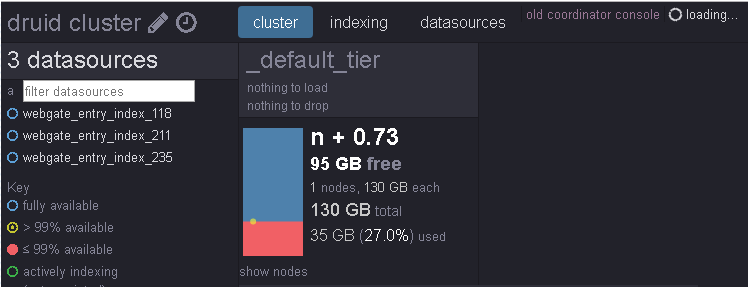

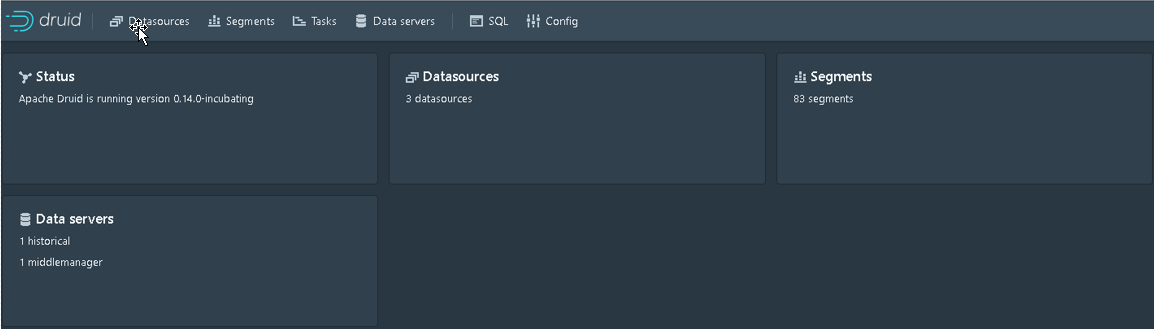
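The five start commands can also be run from a loop that stops at the first missing or failing script. A minimal sketch using the same `bin/<service>.sh start` scripts. It starts the master processes first (coordinator, overlord), then the data processes (historical, middleManager), then the broker. That ordering is an assumption of this sketch, not something the commands above require. `start_druid` is a hypothetical helper that takes the Druid home directory.

```shell
# Start each service via bin/<service>.sh start; master -> data -> query order.
start_druid() {
  local home="${1:-.}" svc
  for svc in coordinator overlord historical middleManager broker; do
    if [ ! -x "$home/bin/$svc.sh" ]; then
      echo "not found: $home/bin/$svc.sh" >&2
      return 1
    fi
    echo "starting $svc"
    "$home/bin/$svc.sh" start || return 1
  done
}
```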

Ⅶ).Verification

Coordinator URL: http://hostname:8062


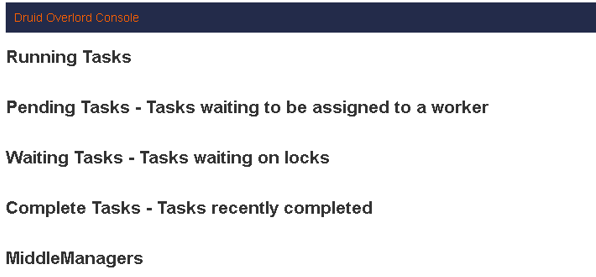

Overlord URL: http://hostname:8065


Router URL: http://hostname:8888

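Besides opening the web consoles, you can check every process from the command line. Druid processes expose `GET /status/health`, which returns `true` when the process is up. A minimal sketch, assuming `curl` is installed. The five-second timeout and the helper name `check_health` are arbitrary choices of this sketch.

```shell
# Probe <base>/status/health for each base URL; print OK/FAIL per endpoint and
# exit non-zero if any endpoint does not answer "true".
check_health() {
  local base body rc=0
  for base in "$@"; do
    body="$(curl -sS --max-time 5 "$base/status/health" 2>/dev/null)"
    if [ "$body" = "true" ]; then
      echo "OK   $base"
    else
      echo "FAIL $base"
      rc=1
    fi
  done
  return $rc
}
```

Usage with the ports from this guide: `check_health http://hostname:8062 http://hostname:8065 http://hostname:8061 http://hostname:8063 http://hostname:8064 http://hostname:8888`.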

4. Hadoop Dependencies

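If a Hadoop cluster (HDFS) is used as deep storage for segments and indexing logs, Druid needs the Hadoop client configuration files on its classpath. Symlink them into Druid's common configuration directory: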

```shell
ln -s /etc/hadoop/core-site.xml ./conf/druid/_common/core-site.xml
ln -s /etc/hadoop/hdfs-site.xml ./conf/druid/_common/hdfs-site.xml
ln -s /etc/hadoop/mapred-site.xml ./conf/druid/_common/mapred-site.xml
ln -s /etc/hadoop/yarn-site.xml ./conf/druid/_common/yarn-site.xml
```
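The four `ln -s` commands can be wrapped in a loop that first checks each source file exists. Because it uses `ln -sf`, which replaces an existing link, it can be re-run safely. A minimal sketch; `link_hadoop_conf` is hypothetical, and its default paths mirror the ones used in the commands.

```shell
# Symlink the Hadoop client configs from SRC_DIR into DST_DIR (Druid's _common).
link_hadoop_conf() {
  local src_dir="${1:-/etc/hadoop}" dst_dir="${2:-./conf/druid/_common}" f
  for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
    if [ ! -f "$src_dir/$f" ]; then
      echo "missing: $src_dir/$f" >&2
      return 1
    fi
    ln -sf "$src_dir/$f" "$dst_dir/$f"
  done
}
```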