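- Dashboard is the official web UI for Kubernetes.

- Heapster adds usage statistics and monitoring to the cluster and supplies the graphs shown in Dashboard. InfluxDB serves as Heapster's back-end storage.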

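Dashboard installation

Official resource definition (manifest) for Kubernetes Dashboard: https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

Notes:

- The Service in the default manifest does not use NodePort, so the Dashboard cannot be reached from outside the cluster.

- The permissions in the default manifest contain only the minimum the Dashboard itself needs. Access methods other than local access are not supported out of the box; you must create an authentication token (Create An Authentication Token) before the Dashboard can be accessed on its own.

According to the manifest, the required image is k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0. Pull it on every node: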

docker pull mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.0

docker tag mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.0 k8s.gcr.io/kubernetes-dashboard:v1.10.0

Use ansible-playbook to run this on all nodes. The playbook is as follows:

---
- hosts: slave
  remote_user: root
  tasks:
    - name: copy pull-images-nodes-dashboard.sh to remote nodes
      copy: src=../pull-images-nodes-dashboard.sh dest=/tmp/pull-images-nodes-dashboard.sh
    - name: pull images for node
      shell: sh /tmp/pull-images-nodes-dashboard.sh

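Because the images used when installing Kubernetes with kubeadm earlier had no -amd64 suffix, change the image name in kubernetes-dashboard.yaml to match.

Also add an image pull policy to the container definition, imagePullPolicy: IfNotPresent, so that the image is not pulled from the network when it already exists locally.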

containers:
- name: kubernetes-dashboard
  image: k8s.gcr.io/kubernetes-dashboard:v1.10.0
  imagePullPolicy: IfNotPresent

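Alternatively, download the image, push it to a private registry, and change the image address in the configuration to point at that registry.

Exposing the Service externally

Change the Service definition so that type is NodePort: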

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      # Must lie in the NodePort range (30000-32767 by default).
      nodePort: 30443
  selector:
    k8s-app: kubernetes-dashboard
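A nodePort value has to fall inside the cluster's NodePort range, which is 30000-32767 unless kube-apiserver was started with a different --service-node-port-range. A value such as 8443 is therefore rejected, while 30443 is accepted. The helper below is only a local sanity check written for this article (the name is_valid_nodeport is hypothetical); the API server remains the authority.

```shell
# Sketch: check a candidate nodePort against the NodePort range before applying a manifest.
# Usage: is_valid_nodeport PORT [MIN] [MAX]   (defaults: 30000 32767)
is_valid_nodeport() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  # Reject empty or non-numeric input.
  case "$port" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

for candidate in 8443 30443; do
  if is_valid_nodeport "$candidate"; then
    echo "$candidate: inside the default NodePort range"
  else
    echo "$candidate: outside the default NodePort range"
  fi
done
```

If the cluster uses a custom range, pass it as the second and third arguments.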

External access to the Dashboard supports HTTPS only.

Modifying the permission configuration

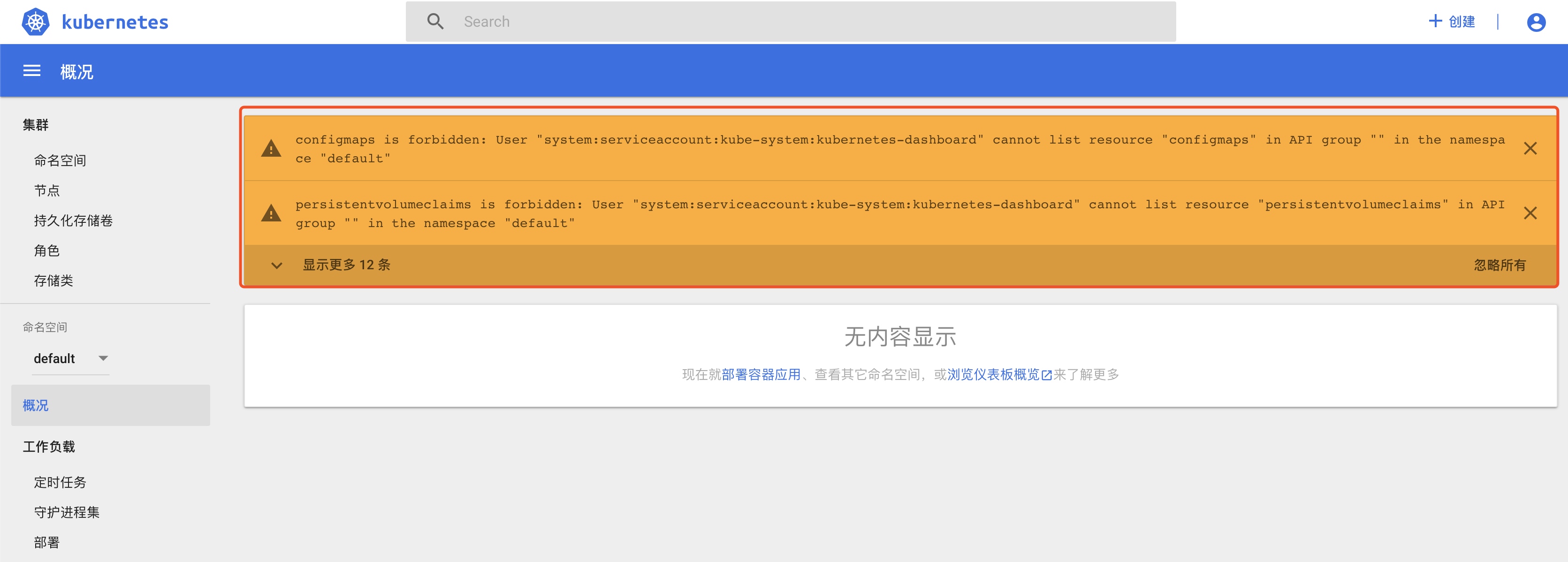

默认的角色权限登陆后,会出现如下图的问题:

![kubernetes dashboard 黄色无权限警告]()

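Adjust the kubernetes-dashboard permissions to fit your actual usage.

The changes mainly concern the Role and RoleBinding sections.

Comment out the Role and RoleBinding sections of the original kubernetes-dashboard.yaml.

The original RBAC authorization is namespace-scoped (it uses Role and RoleBinding). Change it to cluster-scoped authorization (ClusterRole and ClusterRoleBinding). With cluster-scoped authorization, the admin account can manage resources in every namespace of the cluster after logging in. In real production use, however, authorization should still be separated per user and per namespace.

For a detailed description of RBAC, see the official Kubernetes documentation: Using RBAC Authorization.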
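For the production case mentioned above, where access is granted per namespace rather than cluster-wide, a narrower grant might look like the following sketch. It binds the built-in read-only view ClusterRole to the Dashboard ServiceAccount inside a single namespace. The function name grant_dashboard_view, the binding name, and the namespace dev are hypothetical and chosen only for illustration.

```shell
# Sketch: give the Dashboard ServiceAccount read-only access to one namespace.
# grant_dashboard_view and the binding name are illustrative, not part of the official manifest.
grant_dashboard_view() {
  local ns="$1"
  kubectl create rolebinding "dashboard-view-${ns}" \
    --clusterrole=view \
    --serviceaccount=kube-system:kubernetes-dashboard \
    --namespace="${ns}"
}

# Example (requires a working kubectl context):
# grant_dashboard_view dev
```

Repeating the call for each namespace the Dashboard should see keeps the grant explicit, at the cost of more bindings to maintain.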

Change the authorization resources to:

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Deploy the Kubernetes Dashboard with the new configuration by running kubectl apply -f dashboard/.
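To confirm that the new binding took effect, one option is to ask the API server what the Dashboard ServiceAccount is allowed to do. The sketch below wraps kubectl auth can-i with impersonation; dashboard_can is a hypothetical helper name, and the call assumes your own account is allowed to impersonate service accounts.

```shell
# Sketch: ask the API server whether the Dashboard ServiceAccount may perform an action.
# dashboard_can is a hypothetical helper name used only in this article.
dashboard_can() {
  local verb="$1" resource="$2" ns="${3:-default}"
  kubectl auth can-i "$verb" "$resource" \
    --namespace "$ns" \
    --as system:serviceaccount:kube-system:kubernetes-dashboard
}

# Example (requires a working kubectl context):
# dashboard_can list pods default
```

With the cluster-admin binding in place the command is expected to answer yes for any namespace; with only the minimal Role it should answer no for most resources.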

Access the Dashboard by logging in at https://10.20.13.24:30443.

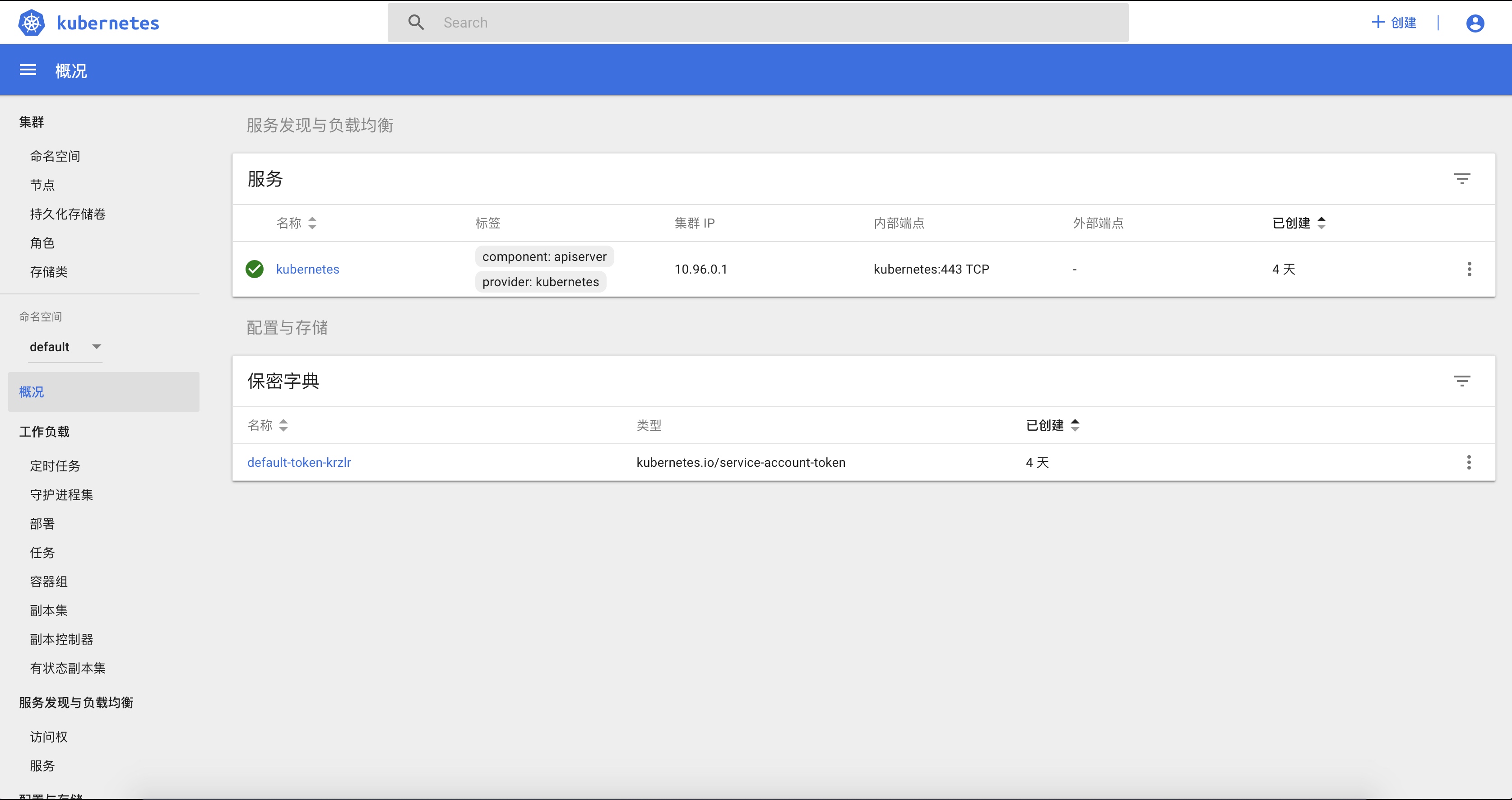

可查看各类kubernetes集群的资源。 ![登陆成功]()

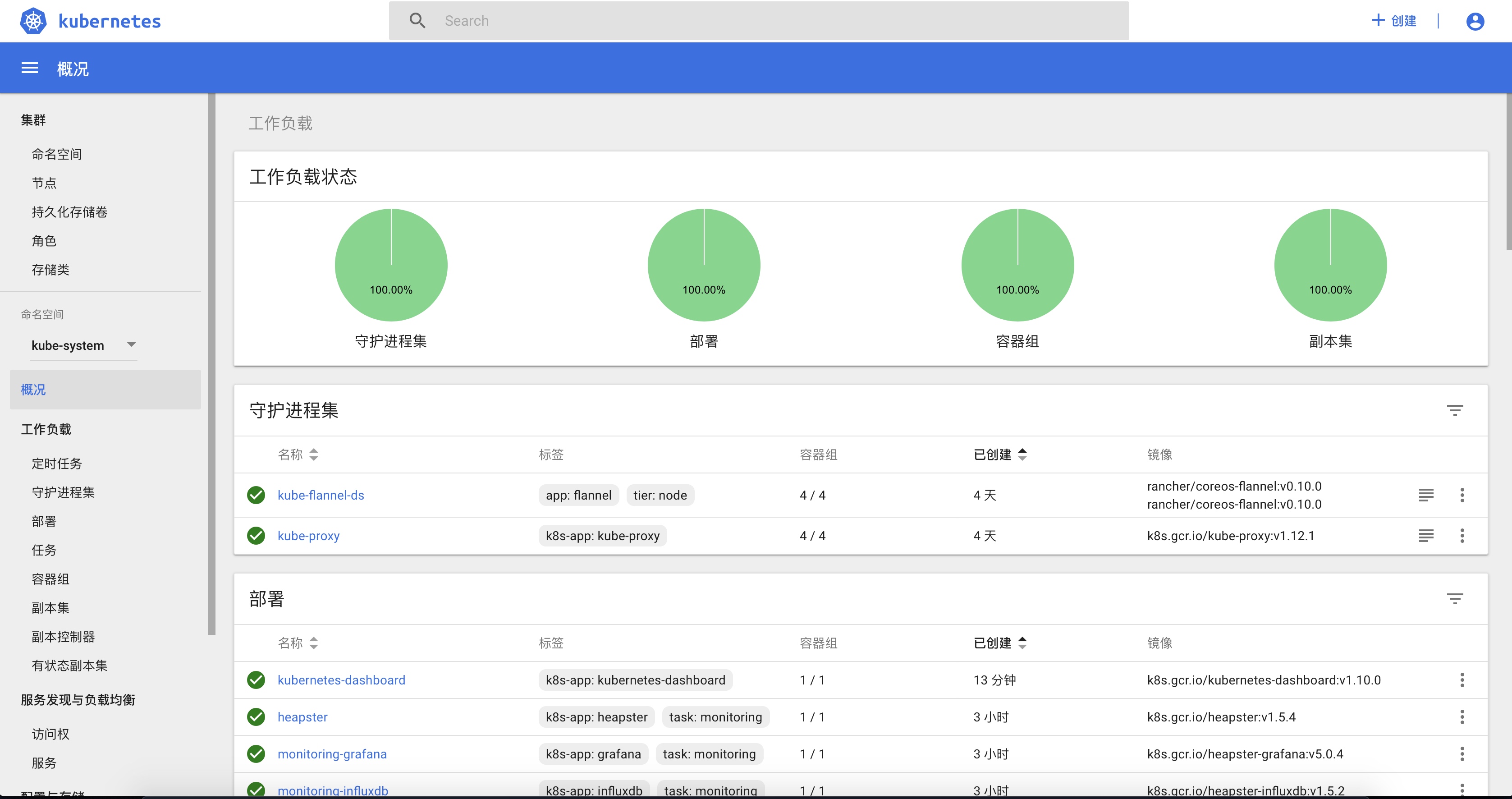

kube-system空间的负载:![kubernetes kube-system空间的负载情况]()

For the complete Kubernetes Dashboard configuration, see the end of this article.

Starting the Dashboard

Start the Dashboard: kubectl apply -f kubernetes-dashboard.yaml

Check the pod status:

[root@kuber24 dashboard]# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

kube-system coredns-576cbf47c7-75gcc 1/1 Running 0 4d19h 10.1.0.3 kuber24 <none>

kube-system coredns-576cbf47c7-v242w 1/1 Running 0 4d19h 10.1.0.2 kuber24 <none>

kube-system etcd-kuber24 1/1 Running 2 4d19h 10.20.13.24 kuber24 <none>

kube-system kube-apiserver-kuber24 1/1 Running 1 4d19h 10.20.13.24 kuber24 <none>

kube-system kube-controller-manager-kuber24 1/1 Running 2 4d19h 10.20.13.24 kuber24 <none>

kube-system kube-flannel-ds-6hqc4 1/1 Running 0 3d19h 10.20.13.25 kuber25 <none>

kube-system kube-flannel-ds-bs4b7 1/1 Running 0 3d19h 10.20.13.27 kuber27 <none>

kube-system kube-flannel-ds-gwcj5 1/1 Running 0 4d16h 10.20.13.24 kuber24 <none>

kube-system kube-flannel-ds-tmsbc 1/1 Running 0 3d19h 10.20.13.26 kuber26 <none>

kube-system kube-proxy-fqm89 1/1 Running 0 3d19h 10.20.13.27 kuber27 <none>

kube-system kube-proxy-nd875 1/1 Running 2 4d19h 10.20.13.24 kuber24 <none>

kube-system kube-proxy-qsf9z 1/1 Running 0 3d19h 10.20.13.25 kuber25 <none>

kube-system kube-proxy-ww8x7 1/1 Running 0 3d19h 10.20.13.26 kuber26 <none>

kube-system kube-scheduler-kuber24 1/1 Running 2 4d19h 10.20.13.24 kuber24 <none>

kube-system kubernetes-dashboard-68bbb49dc-kl5gn 1/1 Running 0 16s 10.1.3.2 kuber27 <none>

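The Dashboard is reachable at https://<master-ip>:<dashboard-nodeport>

If ErrImagePull occurs, first check whether the node the pod was scheduled on has the dashboard image, then confirm that the image name and tag match the definition in the YAML file.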
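The image check described above can be scripted on the affected node. The following is a minimal sketch that uses docker image inspect to test whether a given tag exists locally. The function names are hypothetical, and the default image name is the one used in this article's modified manifest.

```shell
# Sketch: report whether the image referenced by the manifest exists on this node.
# image_present / check_dashboard_image are hypothetical helper names.
image_present() {
  docker image inspect "$1" >/dev/null 2>&1
}

check_dashboard_image() {
  local image="${1:-k8s.gcr.io/kubernetes-dashboard:v1.10.0}"
  if image_present "$image"; then
    echo "present: $image"
  else
    echo "missing: $image"
    return 1
  fi
}

# Example (run on the node that reported ErrImagePull):
# check_dashboard_image
```

A "missing" result with the exact name from the YAML file usually means the image was pulled under a different name or tag, for example with the -amd64 suffix still attached.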

Use kubectl get secret --all-namespaces|grep dashboard to find the token associated with the Dashboard.

[root@kuber24 dashboard]# kubectl get Secret --all-namespaces|grep dashboard

kube-system kubernetes-dashboard-certs Opaque 0 152m

kube-system kubernetes-dashboard-key-holder Opaque 2 75m

kube-system kubernetes-dashboard-token-9msgn kubernetes.io/service-account-token 3 152m

[root@kuber24 dashboard]# kubectl describe secret/kubernetes-dashboard-token-9msgn -n kube-system

Name: kubernetes-dashboard-token-9msgn

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 43b5fdcf-d67d-11e8-8f15-00259029d7a2

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi05bXNnbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQzYjVmZGNmLWQ2N2QtMTFlOC04ZjE1LTAwMjU5MDI5ZDdhMiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.LjBwNW93Gn-XRmJvkpHpPkpYhE3v7CB3Vm5GE1VvXRDSMtme7q7K-E522BS__I6BCqLTtmncN1rSkEYtBKgmfhUf6UhABL3vW8zoPYneFZINrcWA1wrlLx5TlIIcdDLVGrWQUbv3X5NYVfP-yhCuLMv7K3glXa01-B6L8Mgm8EiuMJqZ6ypiGUySl3dLld0vu4reT5fIHgipziuChZWLrYd2mPHXNesVv4UHw_UGASD0-CCEtMvTZ5Bgvs3IP278qOw8AyAioBDNMjPTqri4MDBbkzuXjmXhBiknA6yBDYD4piBt_cjVWq6diTwV2veFCiGMxfetz36AkgMFSSQjKA

The token shown above is the one created by the default Kubernetes Dashboard installation.
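The two lookup steps above can be combined so that only the token is printed, ready to paste into the login page. This is a sketch under the assumption that the cluster auto-creates a token Secret for the ServiceAccount, as the v1.12 cluster in this article does. dashboard_token is a hypothetical helper name.

```shell
# Sketch: print the Dashboard ServiceAccount token without the surrounding describe output.
# dashboard_token is a hypothetical helper name; the Secret suffix differs per cluster.
dashboard_token() {
  local ns="kube-system" name
  # Find the token Secret, e.g. secret/kubernetes-dashboard-token-9msgn.
  name=$(kubectl -n "$ns" get secret -o name | grep 'kubernetes-dashboard-token' | head -n 1)
  if [ -z "$name" ]; then
    echo "no dashboard token secret found" >&2
    return 1
  fi
  # Secret data is stored base64-encoded, so decode it before printing.
  kubectl -n "$ns" get "$name" -o jsonpath='{.data.token}' | base64 -d
}

# Example (requires a working kubectl context):
# dashboard_token
```

Treat the printed value like a password: with the cluster-admin binding from this article it grants full control of the cluster.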

Heapster installation

Heapster depends on InfluxDB. Download the resource definitions Heapster needs to run, together with its authorization (RBAC) definition:

mkdir heapster

cd heapster

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/grafana.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

Preparing the images

Inspect the manifests to find the images they use:

k8s.gcr.io/heapster-grafana-amd64:v5.0.4

k8s.gcr.io/heapster-amd64:v1.5.4

k8s.gcr.io/heapster-influxdb-amd64:v1.5.2

Use a script to pull the images on each node:

#!/bin/bash
# Pull each image from the mirrorgooglecontainers mirror and retag it under k8s.gcr.io.
images=(kube-proxy-amd64:v1.12.1 pause-amd64:3.1 kubernetes-dashboard-amd64:v1.10.0 heapster-grafana-amd64:v5.0.4 heapster-amd64:v1.5.4 heapster-influxdb-amd64:v1.5.2)

for imageName in "${images[@]}"; do
  docker pull "mirrorgooglecontainers/$imageName"
  if [[ $imageName =~ "amd64" ]]; then
    # Drop the -amd64 suffix to match the image names used by the kubeadm install.
    docker tag "mirrorgooglecontainers/$imageName" "k8s.gcr.io/${imageName//-amd64/}"
  else
    docker tag "mirrorgooglecontainers/$imageName" "k8s.gcr.io/$imageName"
  fi
  # docker rmi "mirrorgooglecontainers/$imageName"
done

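Because the images used when installing Kubernetes with kubeadm earlier had no -amd64 suffix, and the script above strips that suffix when tagging, change the image names in the Heapster manifests (grafana.yaml, heapster.yaml, influxdb.yaml) to match.

From the parent directory of the heapster folder created above, run: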

kubectl apply -f ./heapster/
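After applying the manifests, it can help to confirm that the Heapster, InfluxDB and Grafana pods have actually started. The sketch below lists matching pods in kube-system whose STATUS is not Running. It assumes the pod names contain heapster, influxdb or grafana, which is not verified here, and heapster_not_running is a hypothetical helper name.

```shell
# Sketch: list Heapster-related pods in kube-system that are not yet Running.
# Assumes pod names contain "heapster", "influxdb" or "grafana".
heapster_not_running() {
  kubectl -n kube-system get pods --no-headers \
    | grep -E 'heapster|influxdb|grafana' \
    | awk '$3 != "Running" {print $1}'
}

# Example (requires a working kubectl context):
# heapster_not_running
```

Empty output means every matching pod reports Running. Any name that is printed is a candidate for kubectl describe pod.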

Removing the Kubernetes Dashboard resources

With the official Kubernetes Dashboard configuration, the logged-in account has no permissions, so the permissions have to be changed. Before changing them, clean up the resources that were previously configured and are still running.

- Delete the Secret:

kubectl delete secret $(kubectl get secret -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

- Delete the ServiceAccount:

kubectl delete ServiceAccount $(kubectl get ServiceAccount -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

- Delete the Role:

kubectl delete Role $(kubectl get Role -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

- Delete the RoleBinding:

kubectl delete RoleBinding $(kubectl get RoleBinding -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

- Delete the Deployment:

kubectl delete Deployment $(kubectl get Deployment -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

- Delete the Service:

kubectl delete Service $(kubectl get Service -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

All cleanup commands together:

kubectl delete secret $(kubectl get secret -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

kubectl delete ServiceAccount $(kubectl get ServiceAccount -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

kubectl delete Role $(kubectl get Role -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

kubectl delete RoleBinding $(kubectl get RoleBinding -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

kubectl delete Deployment $(kubectl get Deployment -n kube-system|grep dashboard| awk '{print $1}') -n kube-system

kubectl delete Service $(kubectl get Service -n kube-system|grep dashboard| awk '{print $1}') -n kube-system
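Since the cleanup commands differ only in the resource kind, they can also be written as a loop. The following is a sketch of that idea. cleanup_dashboard is a hypothetical helper name, and like the commands above it deletes anything in kube-system whose name contains dashboard, so review the matches before running it.

```shell
# Sketch: delete every dashboard-related resource of the listed kinds in kube-system.
# cleanup_dashboard is a hypothetical helper name; it matches on the substring "dashboard".
cleanup_dashboard() {
  local kind names
  for kind in secret serviceaccount role rolebinding deployment service; do
    # -o name prints entries as <kind>/<name>, which kubectl delete accepts directly.
    names=$(kubectl -n kube-system get "$kind" -o name | grep dashboard)
    if [ -n "$names" ]; then
      # Left unquoted on purpose so multiple matches become separate arguments.
      kubectl -n kube-system delete $names
    fi
  done
}

# Example (requires a working kubectl context):
# cleanup_dashboard
```

Kinds with no matching resource are skipped, which avoids calling kubectl delete with an empty argument list.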

Complete Kubernetes Dashboard configuration

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

# # ------------------- Dashboard Role & Role Binding ------------------- #
#
# kind: Role
# apiVersion: rbac.authorization.k8s.io/v1
# metadata:
#   name: kubernetes-dashboard-minimal
#   namespace: kube-system
# rules:
#   # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
# - apiGroups: [""]
#   resources: ["secrets"]
#   verbs: ["create"]
#   # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
# - apiGroups: [""]
#   resources: ["configmaps"]
#   verbs: ["create"]
#   # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
# - apiGroups: [""]
#   resources: ["secrets"]
#   resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
#   verbs: ["get", "update", "delete"]
#   # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
# - apiGroups: [""]
#   resources: ["configmaps"]
#   resourceNames: ["kubernetes-dashboard-settings"]
#   verbs: ["get", "update"]
#   # Allow Dashboard to get metrics from heapster.
# - apiGroups: [""]
#   resources: ["services"]
#   resourceNames: ["heapster"]
#   verbs: ["proxy"]
# - apiGroups: [""]
#   resources: ["services/proxy"]
#   resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
#   verbs: ["get"]
#
# ---
# apiVersion: rbac.authorization.k8s.io/v1
# kind: RoleBinding
# metadata:
#   name: kubernetes-dashboard-minimal
#   namespace: kube-system
# roleRef:
#   apiGroup: rbac.authorization.k8s.io
#   kind: Role
#   name: kubernetes-dashboard-minimal
# subjects:
# - kind: ServiceAccount
#   name: kubernetes-dashboard
#   namespace: kube-system
#

---

# ---------- Dashboard ClusterRole & ClusterRoleBinding --------- #

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard:v1.10.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30443
  selector:
    k8s-app: kubernetes-dashboard

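Finally

Thanks for reading. If you have any questions, please leave a comment.

You are welcome to visit my GitHub for more of my notes on Kubernetes, so that we can improve together.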