HDFS HA in Practice v1.0

Current environment:

hadoop+zookeeper(namenode,resourcemanager HA)

| namenode | serviceId | Init status |
| --- | --- | --- |
| sht-sgmhadoopnn-01 | nn1 | active |
| sht-sgmhadoopnn-02 | nn2 | standby |

Reference: http://blog.csdn.net/u011414200/article/details/50336735

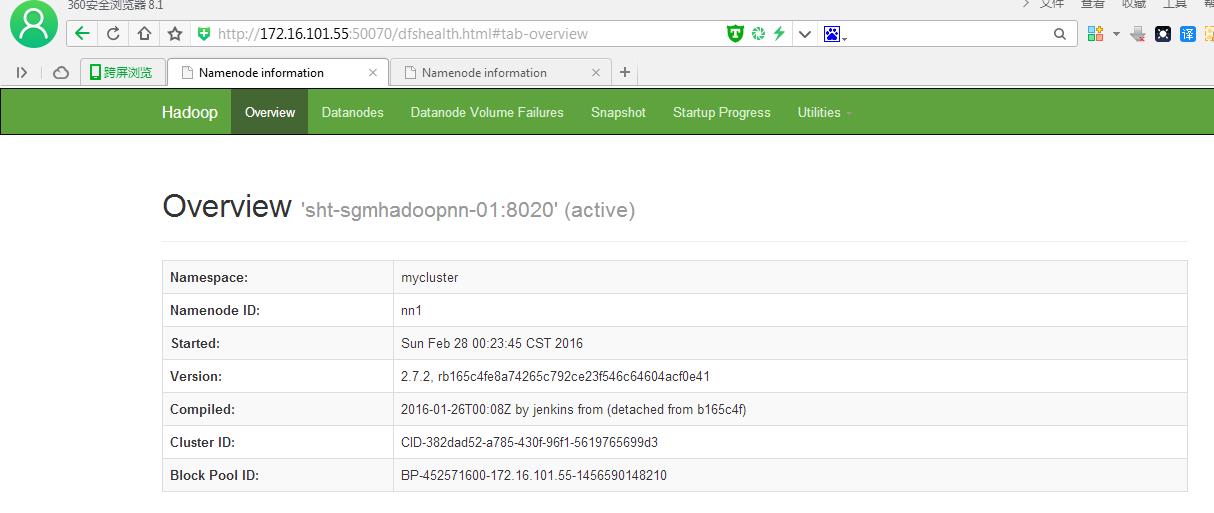

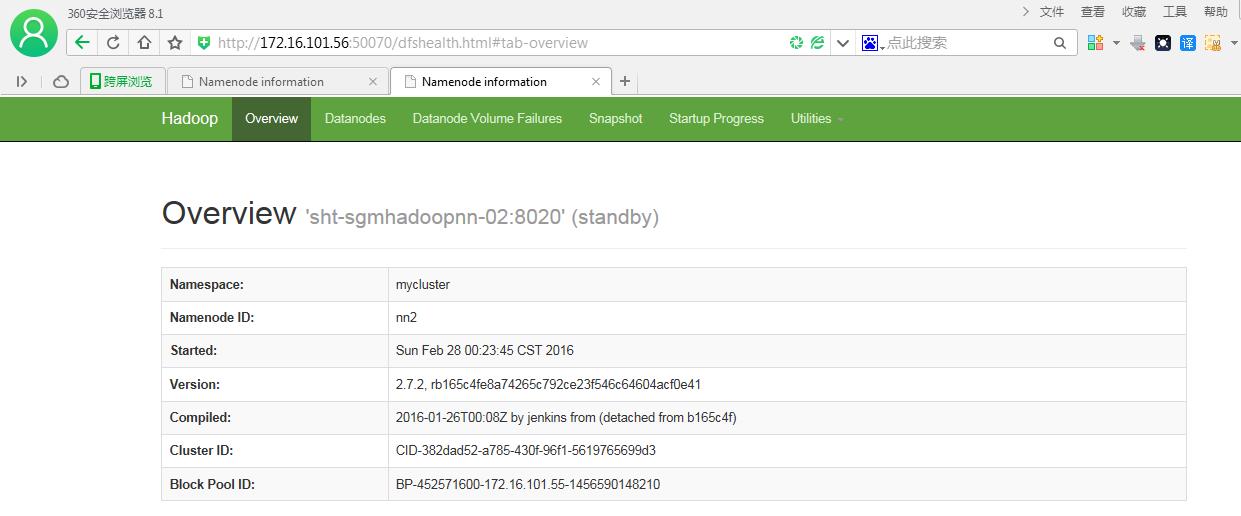

I. Check whether a NameNode is active or standby

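1. Open the NameNode web UI (the Overview heading shows the state, active or standby, next to the host name)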


2. Check the ZKFC log

[root@sht-sgmhadoopnn-01 logs]# more hadoop-root-zkfc-sht-sgmhadoopnn-01.telenav.cn.log

…………………..

2016-02-28 00:24:00,692 INFO org.apache.hadoop.ha.ZKFailoverController: Trying to make NameNode at sht-sgmhadoopnn-01/172.16.101.55:8020 active...

2016-02-28 00:24:01,762 INFO org.apache.hadoop.ha.ZKFailoverController: Successfully transitioned NameNode at sht-sgmhadoopnn-01/172.16.101.55:8020 to active state

[root@sht-sgmhadoopnn-02 logs]# more hadoop-root-zkfc-sht-sgmhadoopnn-02.telenav.cn.log

…………………..

2016-02-28 00:24:01,186 INFO org.apache.hadoop.ha.ZKFailoverController: ZK Election indicated that NameNode at sht-sgmhadoopnn-02/172.16.101.56:8020 should become standby

2016-02-28 00:24:01,209 INFO org.apache.hadoop.ha.ZKFailoverController: Successfully transitioned NameNode at sht-sgmhadoopnn-02/172.16.101.56:8020 to standby state
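Paging through the whole log with more gets tedious on a long-running cluster. A minimal sketch of pulling out only the most recent transition is below. It assumes the log uses the "Successfully transitioned NameNode ... to <state> state" wording shown in the excerpts above; the function name last_zkfc_transition is hypothetical and not part of Hadoop.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): print the state ("active" or
# "standby") from the last successful transition recorded in a ZKFC log.
# Assumes the message format shown in the log excerpts above.
last_zkfc_transition() {
  local log=$1
  # Keep only the transition lines, take the newest, extract the state word.
  grep 'Successfully transitioned NameNode' "$log" \
    | tail -n 1 \
    | sed -E 's/.* to ([a-z]+) state.*/\1/'
}
```

For example, running last_zkfc_transition hadoop-root-zkfc-sht-sgmhadoopnn-01.telenav.cn.log in the logs directory prints the state that ZKFC last moved the local NameNode into; it prints nothing if the log contains no transition yet.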

3. Use the command hdfs haadmin -getServiceState

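The serviceId values come from dfs.ha.namenodes.[dfs.nameservices] in $HADOOP_HOME/etc/hadoop/hdfs-site.xml:

<!-- Set the NameNode IDs; this version supports at most two NameNodes -->
<property>
<name>dfs.ha.namenodes.mycluster</name>
<value>nn1,nn2</value>
</property>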

[root@sht-sgmhadoopnn-02 logs]# hdfs haadmin -getServiceState nn1

active

[root@sht-sgmhadoopnn-02 logs]# hdfs haadmin -getServiceState nn2

standby
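The two lookups above can be combined so that the serviceIds are read from the configuration instead of being typed by hand. The sketch below is one way to do that, assuming the nameservice is called mycluster as in the hdfs-site.xml excerpt; the function name list_nn_states is hypothetical. It relies only on hdfs getconf -confKey and hdfs haadmin -getServiceState.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): print "<serviceId> <state>" for
# every NameNode ID configured under dfs.ha.namenodes.<nameservice>.
list_nn_states() {
  local ns=$1 ids id state
  # Read the comma-separated ID list (e.g. "nn1,nn2") from the configuration.
  ids=$(hdfs getconf -confKey "dfs.ha.namenodes.${ns}") || return 1
  # Replace commas with spaces and rely on word splitting to iterate the IDs.
  for id in ${ids//,/ }; do
    # A NameNode that cannot be reached is reported as "unknown".
    state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null) || state="unknown"
    printf '%s %s\n' "$id" "$state"
  done
}
```

Calling list_nn_states mycluster on the environment described at the top would be expected to print one line per NameNode, such as nn1 followed by its state and nn2 followed by its state.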

II. Basic commands

[root@sht-sgmhadoopnn-02 logs]# hdfs --help

Usage: hdfs [--config confdir] [--loglevel loglevel] COMMAND

where COMMAND is one of:

dfs run a filesystem command on the file systems supported in Hadoop.

classpath prints the classpath

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

dfsadmin run a DFS admin client

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode.

mover run a utility to move block replicas across storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to an legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user. Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version

###########################################################################

[root@sht-sgmhadoopnn-02 logs]# hdfs namenode --help

Usage: java NameNode [-backup] |

[-checkpoint] |

[-format [-clusterid cid ] [-force] [-nonInteractive] ] |

[-upgrade [-clusterid cid] [-renameReserved<k-v pairs>] ] |

[-upgradeOnly [-clusterid cid] [-renameReserved<k-v pairs>] ] |

[-rollback] |

[-rollingUpgrade <rollback|downgrade|started> ] |

[-finalize] |

[-importCheckpoint] |

[-initializeSharedEdits] |

[-bootstrapStandby] |

[-recover [ -force] ] |

[-metadataVersion ] ]

###########################################################################

[root@sht-sgmhadoopnn-02 logs]# hdfs haadmin --help

-help: Unknown command

Usage: haadmin

[-transitionToActive [--forceactive] <serviceId>]

[-transitionToStandby <serviceId>]

[-failover [--forcefence] [--forceactive] <serviceId> <serviceId>]

[-getServiceState <serviceId>]

[-checkHealth <serviceId>]

[-help <command>]
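As an illustration of the usage text above, the following sketch chains -failover with -getServiceState to request a failover and then confirm that the target NameNode really became active. The function name failover_and_verify is hypothetical, and how -failover behaves depends on the cluster setup (for example, whether automatic failover through ZKFC is enabled), so treat this as a starting point rather than a recommended procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): fail over from one serviceId to
# another, then check that the target reports "active".
failover_and_verify() {
  local from=$1 to=$2 state
  # Ask the HA admin client to move the active role from $from to $to.
  hdfs haadmin -failover "$from" "$to" || return 1
  # Query the target afterwards; succeed only if it is now active.
  state=$(hdfs haadmin -getServiceState "$to") || return 1
  [ "$state" = "active" ]
}
```

With the serviceIds from this environment, failover_and_verify nn1 nn2 would return 0 only if nn2 reports active after the command completes.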

transitionToActive and
