Preparation

- Prepare four machines with the following details:

| IP | Hostname | Role | OS | Memory |
|---|---|---|---|---|
| 192.168.242.136 | k8smaster | Kubernetes master node | CentOS 7.2 | 3 GB |
| 192.168.242.137 | k8snode1 | Kubernetes node | CentOS 7.2 | 2 GB |
| 192.168.242.138 | k8snode2 | Kubernetes node | CentOS 7.2 | 2 GB |
| 192.168.242.139 | k8snode3 | Kubernetes node | CentOS 7.2 | 2 GB |

- Set up passwordless SSH login from the master to the nodes; see here for the detailed steps.

- The `/etc/hosts` file on every machine must contain:

192.168.242.136 k8smaster

192.168.242.137 k8snode1

192.168.242.138 k8snode2

192.168.242.139 k8snode3

To change the hostname on CentOS, see here.
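The same four entries go on every machine, so an idempotent helper avoids duplicate lines if you repeat this step. This is a minimal sketch. The function name `add_k8s_hosts` is hypothetical, and the helper assumes bash and the single-space `IP hostname` layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any "IP hostname" pair that is already present (safe to re-run).
K8S_HOSTS='192.168.242.136 k8smaster
192.168.242.137 k8snode1
192.168.242.138 k8snode2
192.168.242.139 k8snode3'

add_k8s_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$K8S_HOSTS" | while read -r ip name; do
    # -F: match the dots in the IP literally; -w: whole words only,
    # so "k8snode1" does not match an existing "k8snode10"
    if ! grep -qwF "$ip $name" "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}

# Usage (as root, on every machine): add_k8s_hosts /etc/hosts
```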

- Pre-install `docker 17.03.2-ce` on every machine; see here for the installation steps.

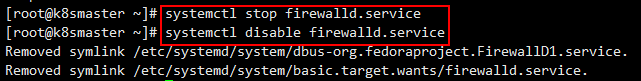

- Stop and disable the firewall on all machines

systemctl stop firewalld.service

systemctl disable firewalld.service

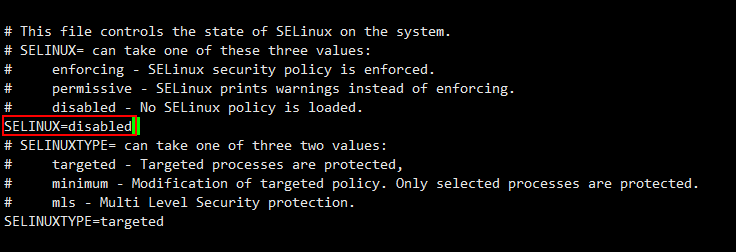

- Disable SELinux on all machines so that containers can access the host file system

vim /etc/selinux/config

Set `SELINUX` to `disabled`. This setting takes effect after the next reboot.

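To turn SELinux off for the current session right away, run the command below. It switches SELinux to permissive mode until the next reboot.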

setenforce 0

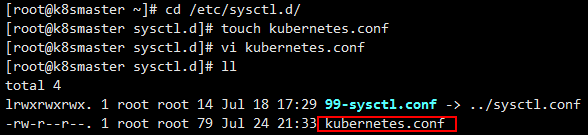
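The SELinux config edit can also be scripted instead of done in vim. Below is a minimal sketch with a hypothetical `disable_selinux_config` helper. It assumes GNU `sed` and leaves the `SELINUXTYPE=` line untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file
# (default /etc/selinux/config). Takes effect after a reboot; `setenforce 0`
# is still needed to switch to permissive mode immediately.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # Only the line starting with "SELINUX=" matches; "SELINUXTYPE=" does not
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage (as root): disable_selinux_config && setenforce 0
```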

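- Configure the bridge netfilter kernel parameters, so that traffic crossing the Linux bridge is passed through iptables and kubeadm does not warn about it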

Create a Kubernetes configuration file `kubernetes.conf` in the `/etc/sysctl.d/` directory with the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

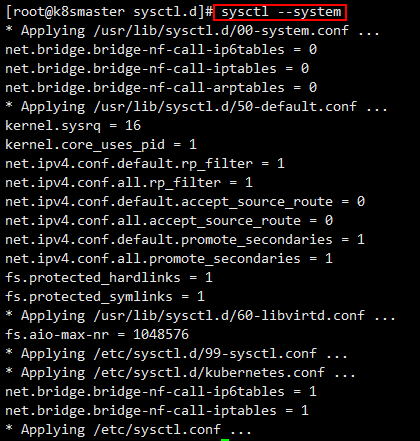

Apply the configuration with:

sysctl --system

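Note: I added a separate configuration file instead of writing the settings into `/etc/sysctl.conf`. For that reason the command uses `--system`, which loads all system configuration files, including those in `/etc/sysctl.d/`. If you write the settings directly into `/etc/sysctl.conf`, use `-p` instead.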

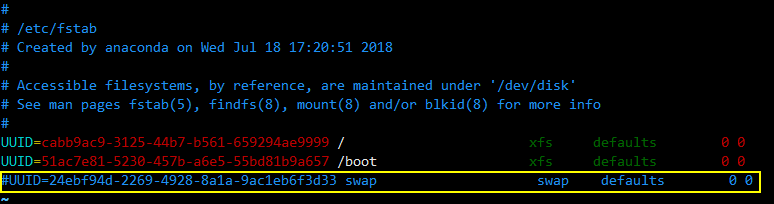
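The file can also be written without opening an editor. This is a minimal sketch. The helper name `write_k8s_sysctl` is hypothetical, and its directory argument exists only so the function can be tried on a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the bridge-netfilter settings to
# <dir>/kubernetes.conf (default dir /etc/sysctl.d).
write_k8s_sysctl() {
  local dir="${1:-/etc/sysctl.d}"
  cat > "$dir/kubernetes.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root): write_k8s_sysctl && sysctl --system
```

After applying, `sysctl -n net.bridge.bridge-nf-call-iptables` should print `1`.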

- Disable swap

Edit the configuration file `/etc/fstab`

vim /etc/fstab

Comment out the swap line so that swap stays off after a reboot.

Then turn swap off for the current session:

swapoff -a

If swap is left on, kubeadm fails during Kubernetes initialization with:

[ERROR Swap]: running with swap on is not supported. Please disable swap

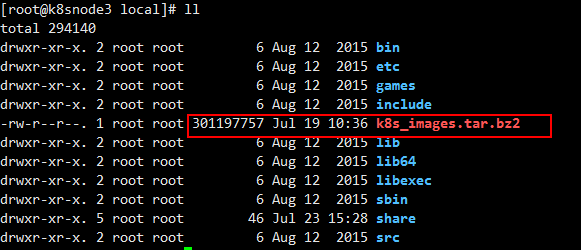

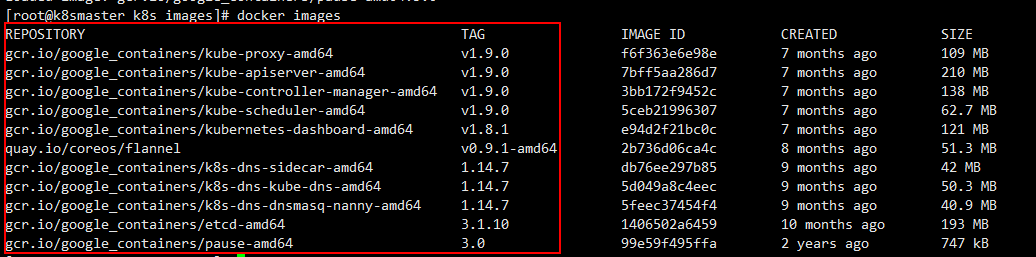
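Commenting out the swap entry by hand is easy to get wrong when `/etc/fstab` has several lines. Below is a minimal sketch with a hypothetical `comment_out_swap` helper. It assumes the standard whitespace-separated fstab layout, where the third field is the file system type.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab file
# (default /etc/fstab) so swap stays disabled after a reboot.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}" tmp rc
  tmp=$(mktemp) || return 1
  # Prefix "#" to uncommented lines whose 3rd field (fs type) is "swap"
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # write back in place, keeping owner and mode
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (as root): comment_out_swap && swapoff -a
```

Afterwards, `free -m` should report a swap total of 0.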

- Prepare the images

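I built the cluster by following this blog post, so all of my images were downloaded from the address it provides. Upload the image archive to every node.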

Extract the archive:

tar -jxvf k8s_images.tar.bz2

Then load the images:

docker load -i /usr/local/k8s_images/docker_images/etcd-amd64_v3.1.10.tar

docker load -i /usr/local/k8s_images/docker_images/flannel:v0.9.1-amd64.tar

docker load -i /usr/local/k8s_images/docker_images/k8s-dns-dnsmasq-nanny-amd64_v1.14.7.tar

docker load -i /usr/local/k8s_images/docker_images/k8s-dns-kube-dns-amd64_1.14.7.tar

docker load -i /usr/local/k8s_images/docker_images/k8s-dns-sidecar-amd64_1.14.7.tar

docker load -i /usr/local/k8s_images/docker_images/kube-apiserver-amd64_v1.9.0.tar

docker load -i /usr/local/k8s_images/docker_images/kube-controller-manager-amd64_v1.9.0.tar

docker load -i /usr/local/k8s_images/docker_images/kube-proxy-amd64_v1.9.0.tar

docker load -i /usr/local/k8s_images/docker_images/kube-scheduler-amd64_v1.9.0.tar

docker load -i /usr/local/k8s_images/docker_images/pause-amd64_3.0.tar

docker load -i /usr/local/k8s_images/kubernetes-dashboard_v1.8.1.tar

Adjust the paths to wherever you extracted the archive. Once everything has loaded, `docker images` lists the imported images.
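Instead of running one `docker load` per archive, you can loop over the directories. This is a minimal sketch. `load_images` is a hypothetical helper, and it assumes every `.tar` file in the given directories is an image archive. Setting `DOCKER=echo` gives a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: `docker load` every .tar archive in the given directories.
# DOCKER can be overridden (e.g. DOCKER=echo) to print the commands instead.
load_images() {
  local dir f
  for dir in "$@"; do
    for f in "$dir"/*.tar; do
      [ -e "$f" ] || continue          # no match: the glob stays literal, skip it
      ${DOCKER:-docker} load -i "$f" || return 1
    done
  done
}

# Usage, with the paths used above:
#   load_images /usr/local/k8s_images/docker_images /usr/local/k8s_images
```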

That completes the preparation; the installation starts below.

Building the Kubernetes cluster

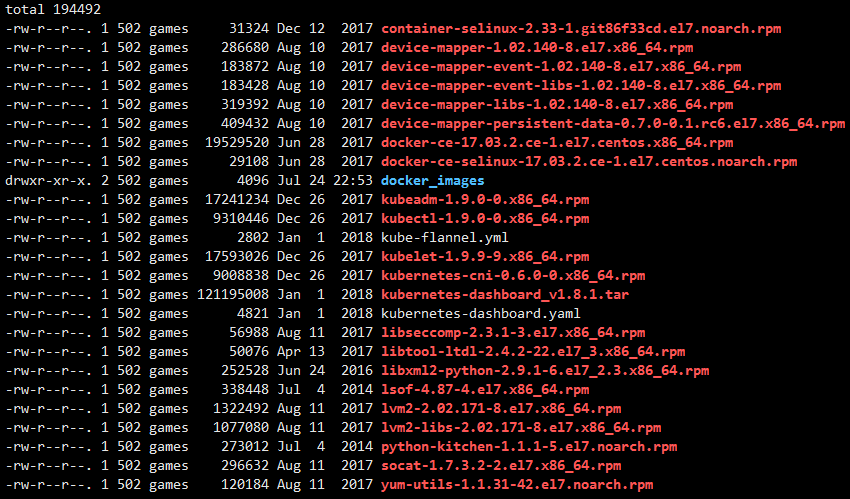

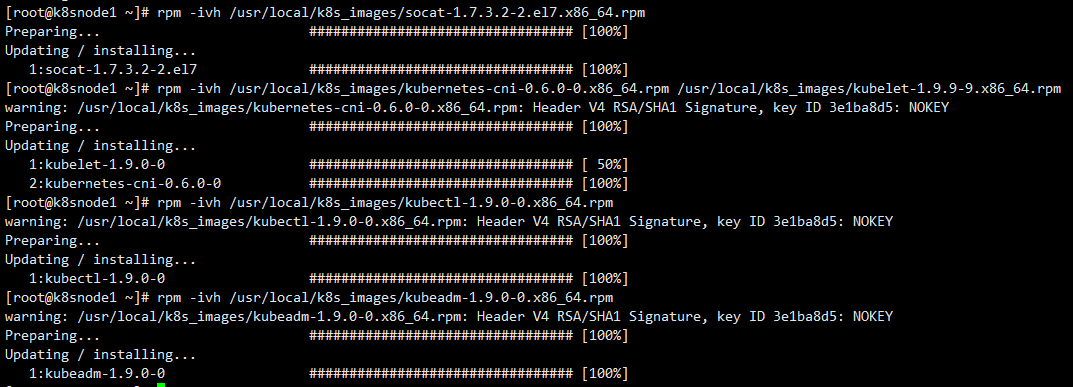

- Install socat, kubernetes-cni, kubelet, kubectl and kubeadm on all nodes.

rpm -ivh /usr/local/k8s_images/socat-1.7.3.2-2.el7.x86_64.rpm

rpm -ivh /usr/local/k8s_images/kubernetes-cni-0.6.0-0.x86_64.rpm /usr/local/k8s_images/kubelet-1.9.9-9.x86_64.rpm

rpm -ivh /usr/local/k8s_images/kubectl-1.9.0-0.x86_64.rpm

rpm -ivh /usr/local/k8s_images/kubeadm-1.9.0-0.x86_64.rpm


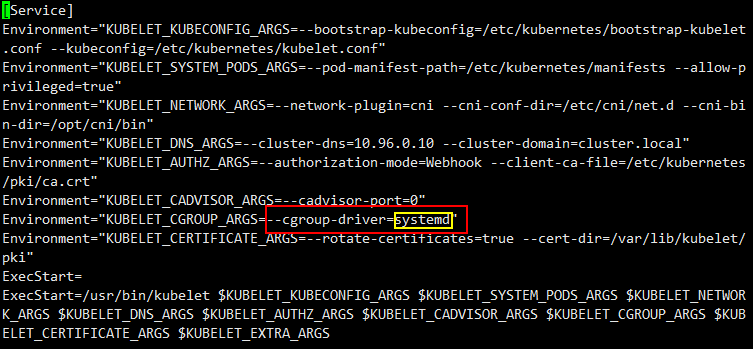

Next, edit the kubelet configuration file

vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

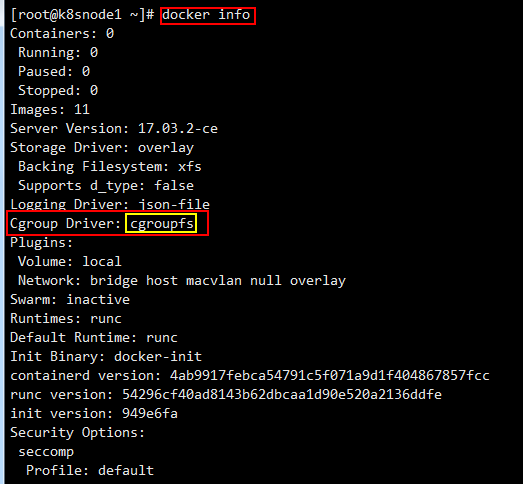

kubelet's `cgroup-driver` must match docker's. Run `docker info` to see docker's `Cgroup Driver` value.

Here docker's `Cgroup Driver` is `cgroupfs`, so kubelet's `cgroup-driver` must also be changed to `cgroupfs`.
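To avoid copying the value by hand, you can read it from `docker info` and write it into the drop-in. Below is a minimal sketch with hypothetical helper names. It assumes the drop-in sets the driver through a `--cgroup-driver=...` argument, as the `10-kubeadm.conf` screenshot suggests, and that `docker info` prints a `Cgroup Driver: ...` line.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: copy docker's cgroup driver into kubelet's
# --cgroup-driver flag. DOCKER can be overridden for testing.
docker_cgroup_driver() {
  # `docker info` contains a line such as "Cgroup Driver: cgroupfs"
  ${DOCKER:-docker} info 2>/dev/null | awk -F': ' '/Cgroup Driver/ { print $2; exit }'
}

sync_kubelet_cgroup_driver() {
  local conf="${1:-/etc/systemd/system/kubelet.service.d/10-kubeadm.conf}"
  local driver
  driver=$(docker_cgroup_driver)
  [ -n "$driver" ] || { echo "could not read docker's cgroup driver" >&2; return 1; }
  # Replace whatever driver kubelet currently uses with docker's
  sed -i -E "s/--cgroup-driver=[a-z]+/--cgroup-driver=${driver}/" "$conf"
}

# Usage (as root): sync_kubelet_cgroup_driver && systemctl daemon-reload
```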

After the change, reload the systemd configuration

systemctl daemon-reload

Enable kubelet to start at boot

systemctl enable kubelet

- Configure the master node

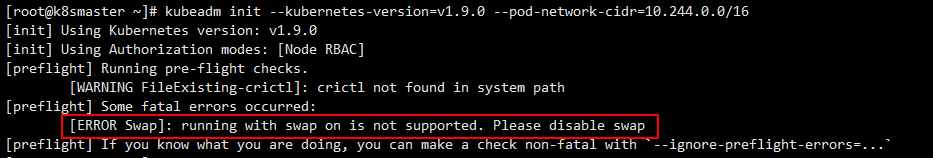

2.1 Initialize Kubernetes

kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=10.244.0.0/16

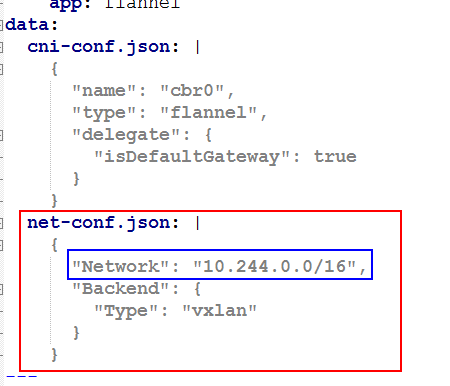

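Kubernetes supports several network plugins, such as flannel, weave and calico. Flannel is used here, so the `--pod-network-cidr` parameter must be set. 10.244.0.0/16 is the default network configured in `kube-flannel.yml`, and `--pod-network-cidr` must match the `Network` parameter in that file.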
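A mismatch between `--pod-network-cidr` and flannel's `Network` only shows up later as broken Pod networking, so it is worth checking before `kubeadm init`. Below is a minimal sketch with hypothetical helper names. It assumes the `net-conf.json` block in `kube-flannel.yml` writes `"Network"` and its value on a single line.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare the planned --pod-network-cidr with the
# "Network" value in kube-flannel.yml before running kubeadm init.
flannel_network() {
  # Matches e.g.:   "Network": "10.244.0.0/16",
  sed -n -E 's/.*"Network"[[:space:]]*:[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}

check_pod_cidr() {
  local cidr="$1" yml="${2:-/usr/local/k8s_images/kube-flannel.yml}"
  local net
  net=$(flannel_network "$yml")
  if [ "$net" = "$cidr" ]; then
    echo "OK: --pod-network-cidr matches flannel Network ($net)"
  else
    echo "MISMATCH: --pod-network-cidr=$cidr but flannel Network=$net" >&2
    return 1
  fi
}

# Usage: check_pod_cidr 10.244.0.0/16 && kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=10.244.0.0/16
```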

The initialization output is as follows:

[root@k8smaster ~]# kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.9.0

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [k8smaster kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.242.136]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 44.002305 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node k8smaster as master by adding a label and a taint

[markmaster] Master k8smaster tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: abb43a.62186b817d71bcd2

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token abb43a.62186b817d71bcd2 192.168.242.136:6443 --discovery-token-ca-cert-hash sha256:6a7625aa2928085fde84cfd918398408771dfe6af5c88c73b2d47527a00a8dad

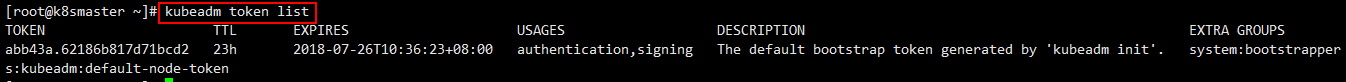

Write down the `kubeadm join --token xxxx` line, because you need this token to join the nodes. If you lose it, list the existing tokens with:

kubeadm token list

The token is valid for 24 hours. If it has expired, create a new one with:

kubeadm token create
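By default, `kubeadm token create` prints only the new token. You can recompute the `--discovery-token-ca-cert-hash` value for the join command from the cluster CA. The sketch below wraps the openssl pipeline from the kubeadm documentation in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key, which is what kubeadm generates.

```shell
#!/usr/bin/env bash
# SHA-256 of the CA certificate's DER-encoded public key (SubjectPublicKeyInfo),
# which is what --discovery-token-ca-cert-hash expects after "sha256:".
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'                      # keep only the hex digest
}

# Usage on the master, with <new-token> taken from `kubeadm token create`:
#   echo "kubeadm join --token <new-token> 192.168.242.136:6443 --discovery-token-ca-cert-hash sha256:$(ca_cert_hash)"
```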

2.2 Configure environment variables

At this point the root user still cannot use kubectl to control the cluster. Set the environment variable as follows.

Add the variable to the `.bash_profile` file

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

Apply it to the current shell immediately

source ~/.bash_profile

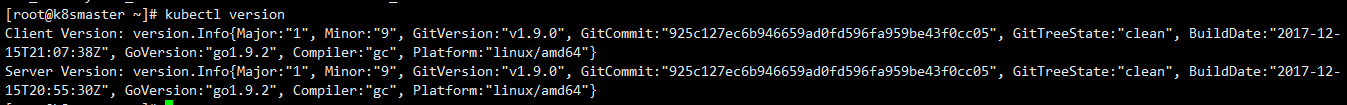
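Running the `echo ... >> ~/.bash_profile` command twice leaves a duplicate line. Below is a minimal sketch of an idempotent version. `add_kubeconfig_export` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the KUBECONFIG export to a profile file only once.
add_kubeconfig_export() {
  local profile="${1:-$HOME/.bash_profile}"
  local line='export KUBECONFIG=/etc/kubernetes/admin.conf'
  # -x: whole line, -F: literal string; append only if the line is missing
  grep -qxF "$line" "$profile" 2>/dev/null || echo "$line" >> "$profile"
}

# Usage (as root): add_kubeconfig_export && source ~/.bash_profile
```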

Check the version to test it

kubectl version

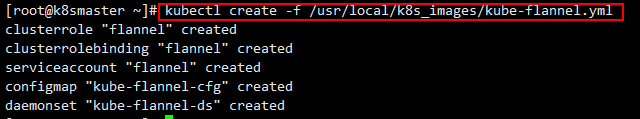

2.3 Install flannel

Use the `kube-flannel.yml` from the offline package directly

kubectl create -f /usr/local/k8s_images/kube-flannel.yml

- Configure the nodes

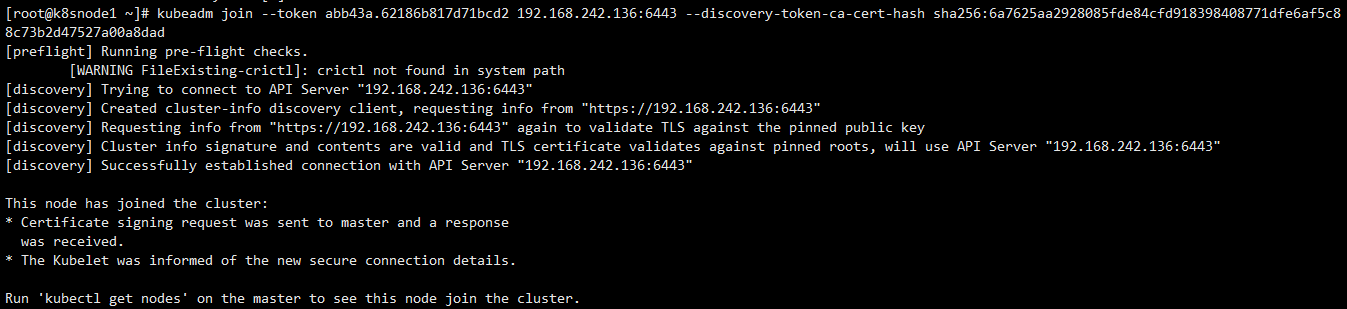

Use the token generated when Kubernetes was initialized on the master (see 2.1) to join the three nodes to the master. Run the following command on each node:

kubeadm join --token abb43a.62186b817d71bcd2 192.168.242.136:6443 --discovery-token-ca-cert-hash sha256:6a7625aa2928085fde84cfd918398408771dfe6af5c88c73b2d47527a00a8dad

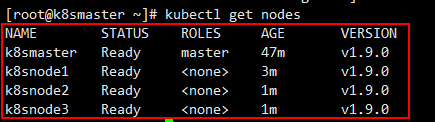
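The master already has passwordless SSH to the nodes, so you can run the join from the master in one pass. This is a minimal sketch. It assumes that login is for `root`, since `kubeadm join` must run as root. `join_nodes` is a hypothetical helper, and setting `SSH=echo` gives a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the same join command on each node over SSH.
# SSH can be overridden (e.g. SSH=echo) to print the commands instead.
join_nodes() {
  local join_cmd="$1"; shift
  local node
  for node in "$@"; do
    echo "==> joining $node"
    ${SSH:-ssh} "root@$node" "$join_cmd" || { echo "join failed on $node" >&2; return 1; }
  done
}

# Usage on the master (paste the join command printed by your own kubeadm init):
#   join_nodes "kubeadm join --token <token> 192.168.242.136:6443 --discovery-token-ca-cert-hash sha256:<hash>" \
#     k8snode1 k8snode2 k8snode3
```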

Once all nodes have joined, check on the master whether they joined successfully:

kubectl get nodes

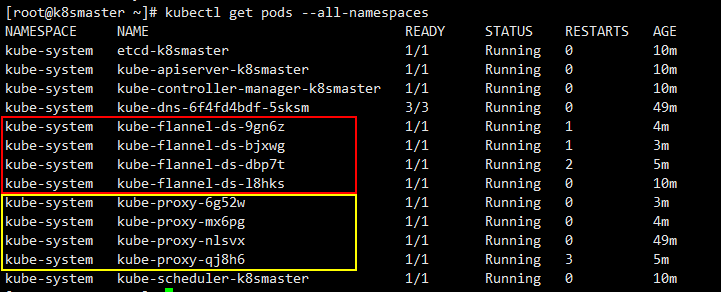
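Nodes can take a while to turn `Ready` after joining while the flannel Pods start. Below is a minimal polling sketch. The helper names and the defaults (30 checks, 10 seconds apart) are arbitrary assumptions. It relies on STATUS being the second column of `kubectl get nodes --no-headers`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: succeed once every node reports STATUS "Ready".
# KUBECTL can be overridden for testing.
all_nodes_ready() {
  local out
  out=$(${KUBECTL:-kubectl} get nodes --no-headers) || return 1
  [ -n "$out" ] || return 1
  # Column 2 is STATUS; anything other than "Ready" (e.g. NotReady) fails
  printf '%s\n' "$out" | awk '$2 != "Ready" { bad = 1 } END { exit bad + 0 }'
}

wait_for_nodes() {
  local tries="${1:-30}" delay="${2:-10}" i
  for i in $(seq 1 "$tries"); do
    all_nodes_ready && { echo "all nodes Ready"; return 0; }
    sleep "$delay"
  done
  echo "nodes not Ready after $tries checks" >&2
  return 1
}

# Usage on the master: wait_for_nodes && kubectl get pods --all-namespaces
```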

Kubernetes creates a flannel Pod and a kube-proxy Pod on every node. List the Pods with:

kubectl get pods --all-namespaces

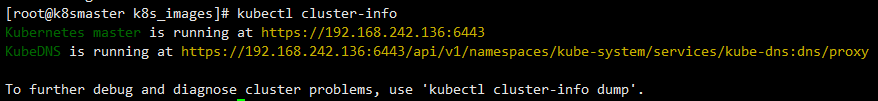

View the cluster information

kubectl cluster-info

Setting up the dashboard

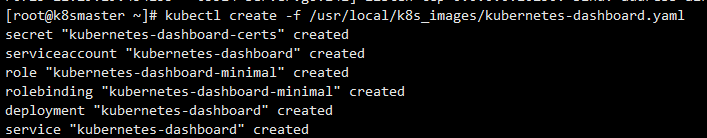

On the master, create the dashboard directly from the `kubernetes-dashboard.yaml` in the offline package

kubectl create -f /usr/local/k8s_images/kubernetes-dashboard.yaml

Next, set up authentication. The dashboard's default login methods are kubeconfig and token; here basic auth is used to authenticate against the apiserver instead.

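Create `/etc/kubernetes/pki/basic_auth_file` to hold the password, user name and user ID. Each line lists these three values in that order, so the password comes first.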

admin,admin,2

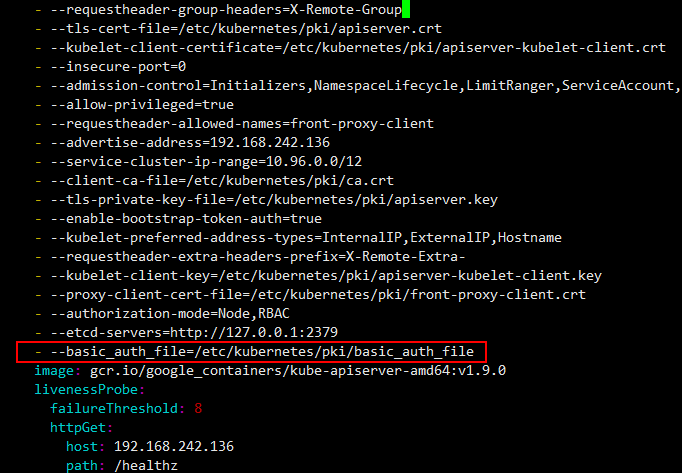
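Because the password comes first, it is easy to write the columns in the wrong order. Below is a minimal sketch of a hypothetical `write_basic_auth_file` helper that fixes the order and restricts the file to its owner. It assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a one-user static password file for kube-apiserver.
# Line format: password,user,uid (password FIRST).
write_basic_auth_file() {
  local file="$1" password="$2" user="$3" uid="$4"
  printf '%s,%s,%s\n' "$password" "$user" "$uid" > "$file"
  chmod 600 "$file"    # the file holds a plaintext password
}

# Usage (as root): write_basic_auth_file /etc/kubernetes/pki/basic_auth_file admin admin 2
```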

Edit `/etc/kubernetes/manifests/kube-apiserver.yaml` to enable basic auth

vim /etc/kubernetes/manifests/kube-apiserver.yaml

Add the following line to the kube-apiserver command arguments:

- --basic-auth-file=/etc/kubernetes/pki/basic_auth_file

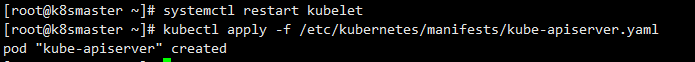
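You can also add the flag without an editor. Below is a minimal sketch with a hypothetical `add_apiserver_flag` helper. It assumes the manifest lists `- kube-apiserver` on its own line, as kubeadm's generated manifest does. The temporary copy is written outside `/etc/kubernetes/manifests`, because kubelet treats files in that directory as Pod manifests.

```shell
#!/usr/bin/env bash
# Hypothetical helper: insert a flag right after the "- kube-apiserver" line of
# the static Pod manifest, reusing that line's indentation. No-op if present.
add_apiserver_flag() {
  local flag="$1"
  local manifest="${2:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  local tmp
  grep -qF -- "- $flag" "$manifest" && return 0
  tmp=$(mktemp) || return 1          # lives in /tmp, not in the manifests dir
  awk -v flag="$flag" '
    { print }
    /^ *- kube-apiserver *$/ {
      indent = $0; sub(/-.*/, "", indent)   # keep only the leading spaces
      print indent "- " flag
    }' "$manifest" > "$tmp" && cat "$tmp" > "$manifest"
  rm -f "$tmp"
}

# Usage (as root):
#   add_apiserver_flag --basic-auth-file=/etc/kubernetes/pki/basic_auth_file
```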

Restart kubelet

systemctl restart kubelet

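Update the kube-apiserver container. Note that kubelet watches `/etc/kubernetes/manifests` and recreates the static Pod whenever a manifest there changes, so the restart above may already be enough.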

kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml

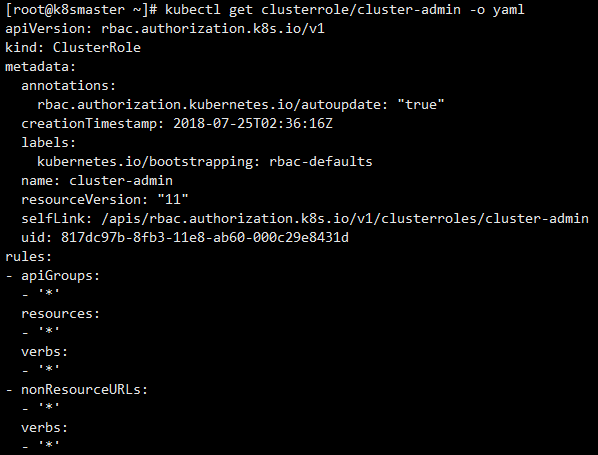

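Next, grant permissions to the admin user. Kubernetes 1.6 and later use the RBAC authorization model; the `Authorization modes: [Node RBAC]` line in the init output shows it is enabled here. The built-in `cluster-admin` role has full permissions, so binding admin to cluster-admin gives admin the same permissions.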

First, inspect cluster-admin

kubectl get clusterrole/cluster-admin -o yaml

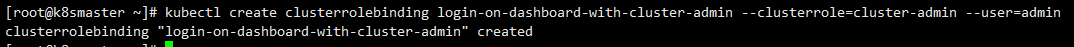

Bind admin to cluster-admin

kubectl create clusterrolebinding login-on-dashboard-with-cluster-admin --clusterrole=cluster-admin --user=admin

Then check the result

kubectl get clusterrolebinding/login-on-dashboard-with-cluster-admin -o yaml

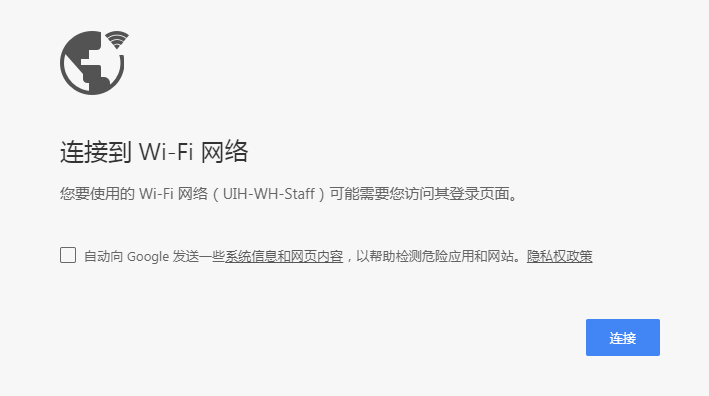
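You can also keep the same binding as a manifest and apply it with `kubectl apply`, which helps when rebuilding the cluster. The sketch below prints an equivalent `ClusterRoleBinding` using the RBAC `v1` API, which has been available since Kubernetes 1.8. `admin_binding_manifest` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ClusterRoleBinding equivalent to
# `kubectl create clusterrolebinding login-on-dashboard-with-cluster-admin
#    --clusterrole=cluster-admin --user=admin`
admin_binding_manifest() {
  local user="${1:-admin}"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: login-on-dashboard-with-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: ${user}
EOF
}

# Usage on the master: admin_binding_manifest | kubectl apply -f -
```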

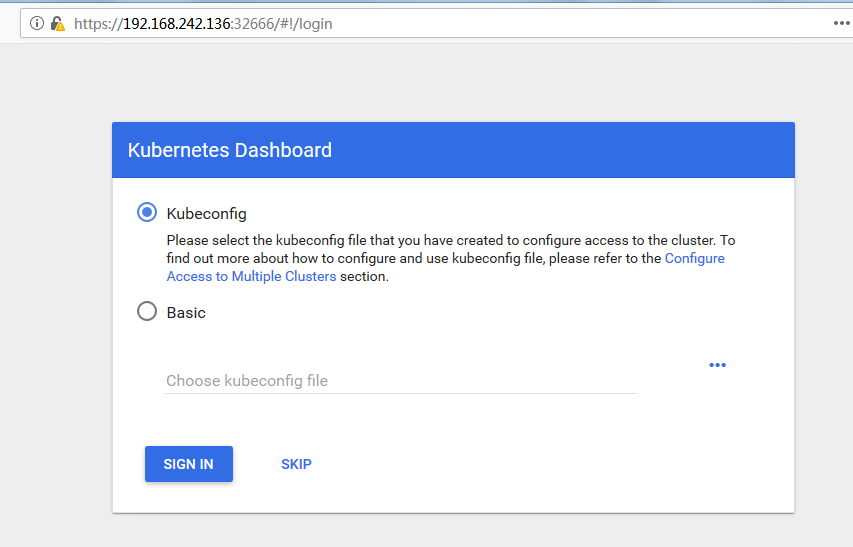

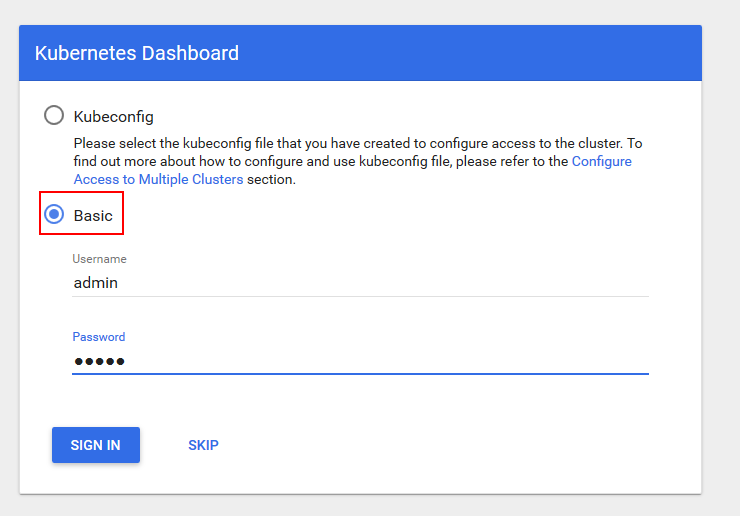

Now try logging in: open `https://192.168.242.136:32666` in a browser. Use Firefox for this, because Chrome's security mechanism blocks access to the page.

In Firefox you should see the following page

Select the `Basic` authentication method and log in with the user name and password configured in `/etc/kubernetes/pki/basic_auth_file`.

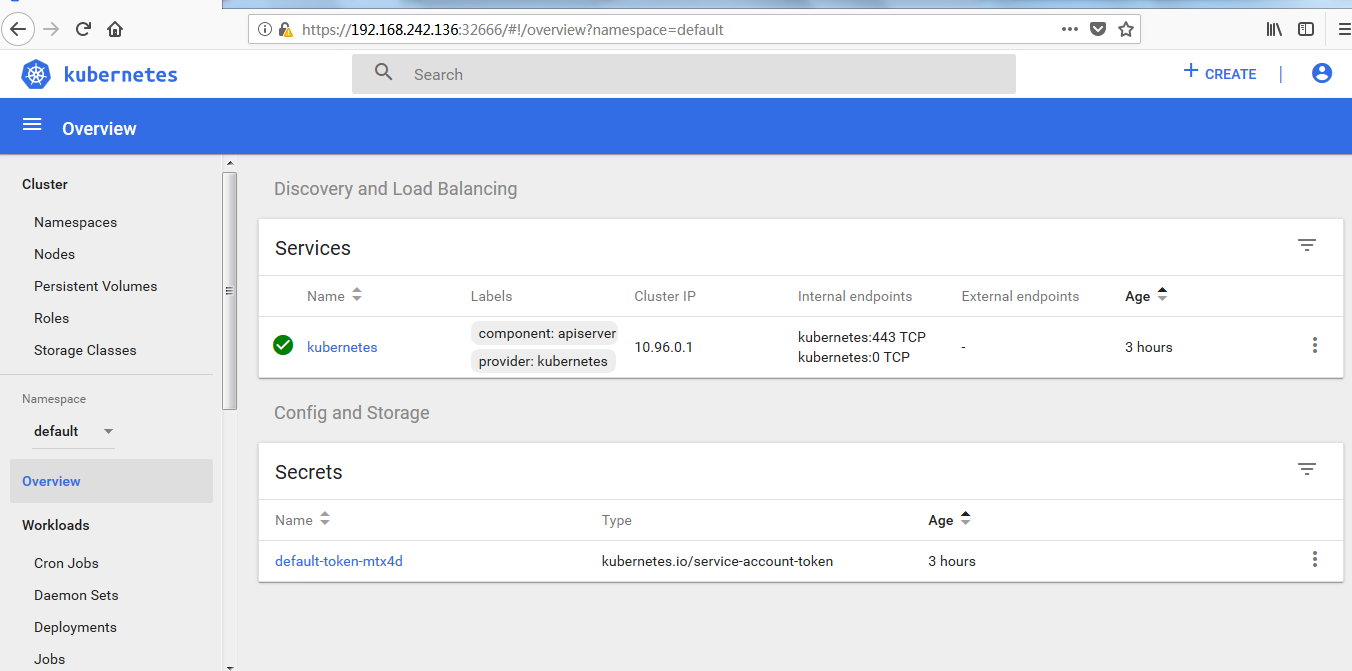

After a successful login you should see the following page

The deployment is now complete.