Training DeepFM in PAI-Notebook

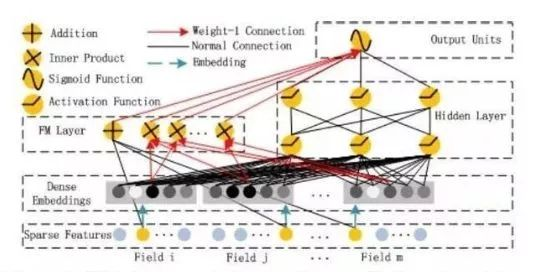

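DeepFM is arguably one of the most widely used CTR prediction models today. For a recommender system built on CTR prediction, the key is to learn the feature interactions hidden behind users' click behavior. Depending on the recommendation scenario, both low-order and high-order feature interactions can affect the final CTR. Wide models (LR / FM / FFM) can generally learn only first- and second-order interactions, while deep models (FNN / PNN) generally learn high-order interactions. DeepFM takes both into account. Let's first review the DeepFM model structure: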


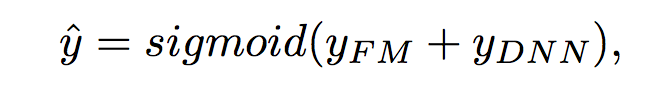

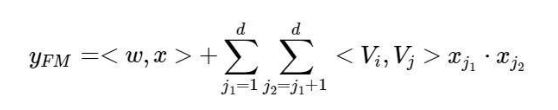

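As the figures show, DeepFM consists of two parts: a factorization machine (FM) part and a deep neural network (DNN) part. They extract low-order and high-order feature interactions respectively, and both share the same embedding layer as input. The DeepFM prediction can be written as: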


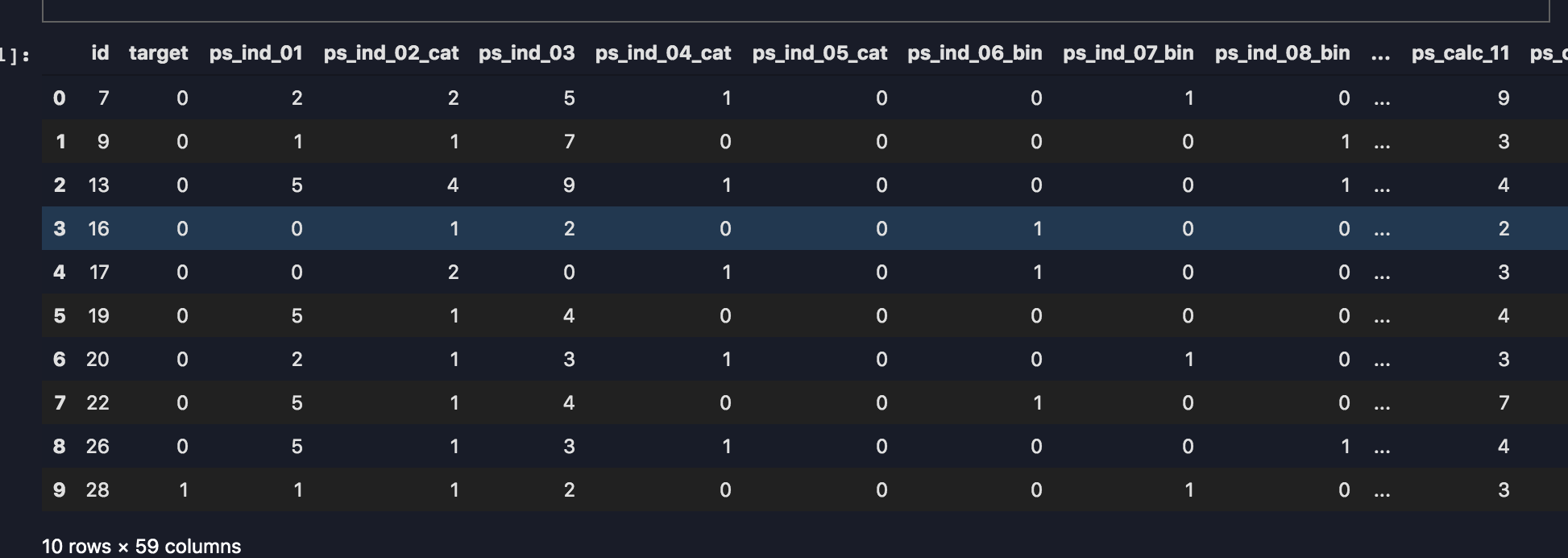
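To make the shared-embedding idea concrete, the following pure-Python sketch runs one DeepFM forward pass for a single sample. It assumes a single hidden ReLU layer and tiny hand-picked weights. `deepfm_forward` and its parameters are hypothetical names and are not part of the TensorFlow code used later in this article.

```python
import math

def deepfm_forward(feat_index, feat_value, w, V, hidden_W, hidden_b, out_W, out_b):
    """Toy DeepFM forward pass for one sample (hypothetical helper).

    feat_index / feat_value: one feature index and one value per field.
    w: first-order weight per feature index; V: embedding vector per feature index.
    hidden_W (one row per hidden unit), hidden_b: one ReLU layer; out_W, out_b: linear output.
    """
    # Shared embedding layer: V_i scaled by x_i, used by BOTH parts below
    emb = [[v * x for v in V[i]] for i, x in zip(feat_index, feat_value)]

    # FM part: first-order term + pairwise inner products of the embeddings
    y_first = sum(w[i] * x for i, x in zip(feat_index, feat_value))
    y_second = sum(sum(a * b for a, b in zip(emb[p], emb[q]))
                   for p in range(len(emb)) for q in range(p + 1, len(emb)))
    y_fm = y_first + y_second

    # DNN part: flatten the same embeddings, one ReLU layer, then a linear output
    flat = [v for e in emb for v in e]
    hidden = [max(0.0, sum(wi * xi for wi, xi in zip(row, flat)) + bi)
              for row, bi in zip(hidden_W, hidden_b)]
    y_dnn = sum(wo * h for wo, h in zip(out_W, hidden)) + out_b

    # Prediction: sigmoid(y_FM + y_DNN)
    return 1.0 / (1.0 + math.exp(-(y_fm + y_dnn)))

V = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]  # 3 feature indices, embedding size 2
w = [0.1, -0.2, 0.3]
p = deepfm_forward([0, 2], [1.0, 1.0], w, V,
                   hidden_W=[[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]], hidden_b=[0.0, 0.0],
                   out_W=[1.0, 1.0], out_b=0.0)
print(p)  # sigmoid(0.5 + 0.6) = sigmoid(1.1)
```

Both `y_fm` and `y_dnn` are computed from the same `emb` list. That sharing is what distinguishes DeepFM from training a separate wide model and deep model.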

Dataset

We use the following dataset as an example:

```python
import pandas as pd

TRAIN_FILE = "data/train.csv"
TEST_FILE = "data/test.csv"

NUMERIC_COLS = [
    "ps_reg_01", "ps_reg_02", "ps_reg_03",
    "ps_car_12", "ps_car_13", "ps_car_14", "ps_car_15"
]

IGNORE_COLS = [
    "id", "target",
    "ps_calc_01", "ps_calc_02", "ps_calc_03", "ps_calc_04",
    "ps_calc_05", "ps_calc_06", "ps_calc_07", "ps_calc_08",
    "ps_calc_09", "ps_calc_10", "ps_calc_11", "ps_calc_12",
    "ps_calc_13", "ps_calc_14",
    "ps_calc_15_bin", "ps_calc_16_bin", "ps_calc_17_bin",
    "ps_calc_18_bin", "ps_calc_19_bin", "ps_calc_20_bin"
]

dfTrain = pd.read_csv(TRAIN_FILE)
dfTest = pd.read_csv(TEST_FILE)
```

Here is a preview of the dataset:


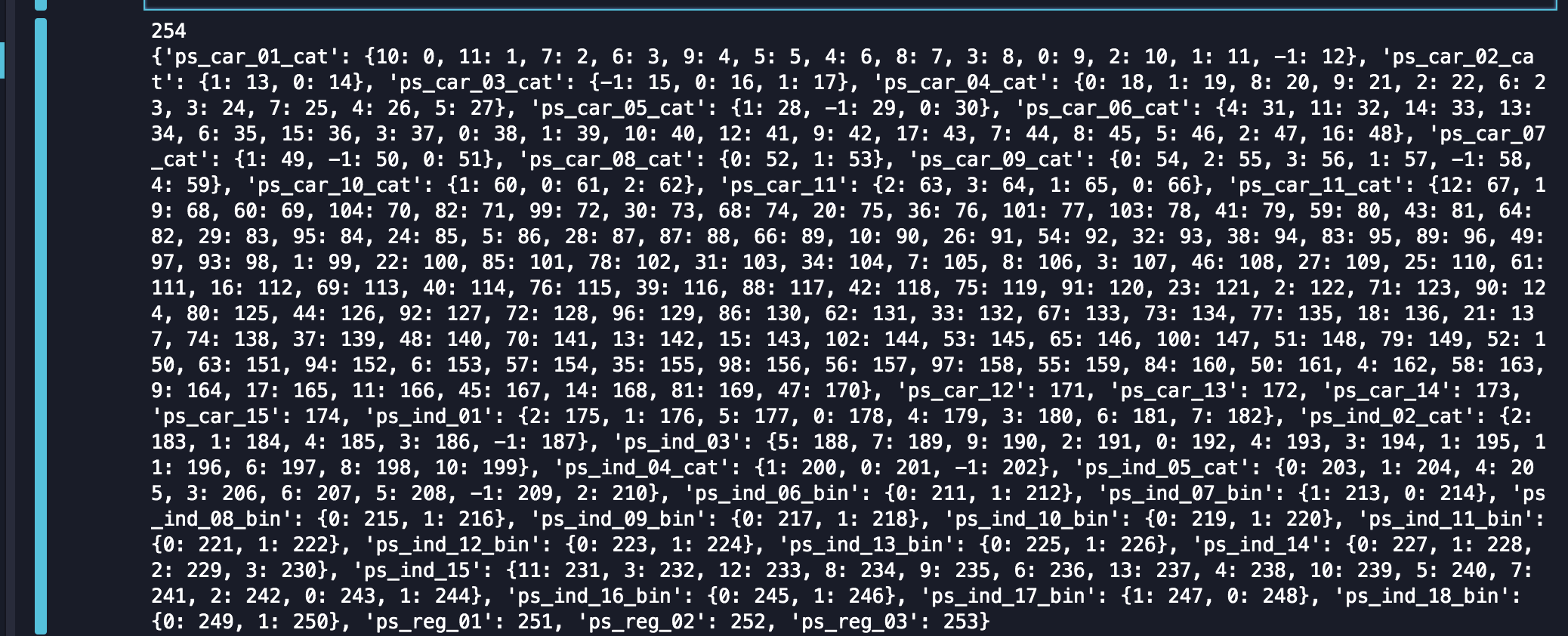

Next, we compute the feature map. It defines how each variable's value is converted to its corresponding feature index (feature-index).
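Before the pandas version, here is the same indexing rule applied to a toy dataset, as a minimal sketch. The rows, the categorical column `some_cat`, and the function name `build_feature_map` are hypothetical. Only the rule itself comes from the code that follows: each numeric column gets one index, and each distinct value of a categorical column gets its own index.

```python
def build_feature_map(rows, numeric_cols, ignore_cols):
    """Hypothetical helper: assign consecutive feature indices column by column."""
    feature_dict = {}
    total_feature = 0
    for col in rows[0].keys():
        if col in ignore_cols:
            continue
        if col in numeric_cols:
            # Numeric column: a single index shared by all of its values
            feature_dict[col] = total_feature
            total_feature += 1
        else:
            # Categorical column: one index per distinct value, in first-seen order
            unique_val = list(dict.fromkeys(r[col] for r in rows))
            feature_dict[col] = {v: total_feature + k for k, v in enumerate(unique_val)}
            total_feature += len(unique_val)
    return feature_dict, total_feature

# Made-up toy rows: one ignored id, one numeric column, one categorical column
rows = [
    {"id": 1, "ps_reg_01": 0.7, "some_cat": 2},
    {"id": 2, "ps_reg_01": 0.8, "some_cat": 1},
    {"id": 3, "ps_reg_01": 0.0, "some_cat": 2},
]
fd, total = build_feature_map(rows, {"ps_reg_01"}, {"id"})
print(total)  # 3
print(fd)     # {'ps_reg_01': 0, 'some_cat': {2: 1, 1: 2}}
```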

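```python
# `df` is not defined in the original snippet; building the map over train and
# test together (assumed here) gives every categorical value seen in either file an index.
df = pd.concat([dfTrain, dfTest], sort=False)

feature_dict = {}
total_feature = 0
for col in df.columns:
    if col in IGNORE_COLS:
        continue
    elif col in NUMERIC_COLS:
        # Numeric column: a single index shared by all its values
        feature_dict[col] = total_feature
        total_feature += 1
    else:
        # Categorical column: one index per distinct value
        unique_val = df[col].unique()
        feature_dict[col] = dict(zip(unique_val, range(total_feature, len(unique_val) + total_feature)))
        total_feature += len(unique_val)

print(total_feature)
print(feature_dict)
```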

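feature_dict holds the mapping between feature values and feature indices. A numeric column maps to a single index, and each value of a categorical column maps to its own index.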


FM implementation

Next, we convert the training set into new arrays, turning each record into its corresponding feature indices and feature values.

```python
print(dfTrain.columns)

# Labels as a list of [target] rows, matching the label placeholder's shape [None, 1]
train_y = dfTrain[['target']].values.tolist()
dfTrain.drop(['target', 'id'], axis=1, inplace=True)

train_feature_index = dfTrain.copy()
train_feature_value = dfTrain.copy()

for col in train_feature_index.columns:
    if col in IGNORE_COLS:
        train_feature_index.drop(col, axis=1, inplace=True)
        train_feature_value.drop(col, axis=1, inplace=True)
        continue
    elif col in NUMERIC_COLS:
        # Numeric: fixed index for the column; the value stays the raw number
        train_feature_index[col] = feature_dict[col]
    else:
        # Categorical: index of the specific value; the value becomes 1 (one-hot)
        train_feature_index[col] = train_feature_index[col].map(feature_dict[col])
        train_feature_value[col] = 1
```
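The same conversion can be sketched in plain Python on toy data. The rows, `some_cat`, the hand-written `feature_dict`, and the function name `to_index_value` are all hypothetical. The sketch shows that every sample ends up with exactly one (index, value) pair per kept column. That fixed count is `field_size`, and it is what lets the TensorFlow code reshape `feat_value` to `[-1, field_size, 1]`.

```python
def to_index_value(rows, feature_dict, numeric_cols, ignore_cols):
    """Hypothetical helper: turn each row into parallel index and value lists."""
    Xi, Xv = [], []
    for row in rows:
        idx, val = [], []
        for col, x in row.items():
            if col in ignore_cols:
                continue
            if col in numeric_cols:
                idx.append(feature_dict[col])     # fixed index for the column
                val.append(float(x))              # raw numeric value
            else:
                idx.append(feature_dict[col][x])  # index of this category value
                val.append(1.0)                   # one-hot value
        Xi.append(idx)
        Xv.append(val)
    return Xi, Xv

feature_dict = {"ps_reg_01": 0, "some_cat": {2: 1, 1: 2}}
rows = [
    {"id": 1, "ps_reg_01": 0.7, "some_cat": 2},
    {"id": 2, "ps_reg_01": 0.8, "some_cat": 1},
    {"id": 3, "ps_reg_01": 0.0, "some_cat": 2},
]
Xi, Xv = to_index_value(rows, feature_dict, {"ps_reg_01"}, {"id"})
print(Xi)  # [[0, 1], [0, 2], [0, 1]]
print(Xv)  # [[0.7, 1.0], [0.8, 1.0], [0.0, 1.0]]
```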

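Next, we define the model's hyperparameters, such as the learning rate, the embedding size, the deep network's layer sizes, and the activation function, and then set up training. The model takes three inputs: the feature indices and feature values we just computed, and the label: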

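```python
# TensorFlow 1.x graph-mode API (on TF 2.x, use tf.compat.v1 and disable eager execution)
import tensorflow as tf
import numpy as np

# Model hyperparameters
dfm_params = {
    "use_fm": True,
    "use_deep": True,
    "embedding_size": 8,
    "dropout_fm": [1.0, 1.0],
    "deep_layers": [32, 32],
    "dropout_deep": [0.5, 0.5, 0.5],
    "deep_layer_activation": tf.nn.relu,
    "epoch": 30,
    "batch_size": 1024,
    "learning_rate": 0.001,
    "optimizer": "adam",
    "batch_norm": 1,
    "batch_norm_decay": 0.995,
    "l2_reg": 0.01,
    "verbose": True,
    "eval_metric": 'gini_norm',
    "random_seed": 3
}

dfm_params['feature_size'] = total_feature                    # number of distinct feature indices
dfm_params['field_size'] = len(train_feature_index.columns)  # number of fields per sample

feat_index = tf.placeholder(tf.int32, shape=[None, None], name='feat_index')
feat_value = tf.placeholder(tf.float32, shape=[None, None], name='feat_value')
label = tf.placeholder(tf.float32, shape=[None, 1], name='label')

# `weights` is used below but not shown in the original article; this
# initialization is an assumed sketch whose shapes match how it is used.
K = dfm_params['embedding_size']
weights = {
    'feature_embeddings': tf.Variable(tf.random_normal([dfm_params['feature_size'], K], 0.0, 0.01)),
    'feature_bias': tf.Variable(tf.random_normal([dfm_params['feature_size'], 1], 0.0, 1.0)),
}
input_size = dfm_params['field_size'] * K  # flattened embeddings feed the first deep layer
for i, units in enumerate(dfm_params['deep_layers']):
    std = np.sqrt(2.0 / (input_size + units))
    weights['layer_%d' % i] = tf.Variable(tf.random_normal([input_size, units], 0.0, std))
    weights['bias_%d' % i] = tf.Variable(tf.random_normal([1, units], 0.0, std))
    input_size = units
# Final projection input: FM first order (field_size) + FM second order (K) + last deep layer
concat_size = 0
if dfm_params['use_fm']:
    concat_size += dfm_params['field_size'] + K
if dfm_params['use_deep']:
    concat_size += dfm_params['deep_layers'][-1]
weights['concat_projection'] = tf.Variable(tf.random_normal([concat_size, 1], 0.0, 0.01))
weights['concat_bias'] = tf.Variable(tf.constant(0.01))
```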
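The hyperparameters name `gini_norm` as the evaluation metric, but the article does not define it. For binary labels, the normalized Gini coefficient equals 2 * AUC - 1, up to how ties are handled. The minimal sketch below therefore computes it from a pairwise AUC. The function name is an assumption, ties count as half a win, and the O(n_pos * n_neg) loop is meant for illustration rather than full datasets.

```python
def gini_norm(y_true, y_pred):
    """Normalized Gini for binary labels, computed as 2 * AUC - 1.

    AUC is counted over all (positive, negative) pairs; ties count as half.
    """
    pos = [p for t, p in zip(y_true, y_pred) if t == 1]
    neg = [p for t, p in zip(y_true, y_pred) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    auc = wins / (len(pos) * len(neg))
    return 2.0 * auc - 1.0

print(gini_norm([0, 1, 1, 0], [0.5, 0.4, 0.9, 0.1]))  # 0.5
```

A perfect ranking gives 1.0, a fully reversed ranking gives -1.0, and random scores hover around 0.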

With the inputs defined, we can build the FM part according to the following formula:

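$$y_{FM} = \langle w, x\rangle + \sum_{i=1}^{n}\sum_{j=i+1}^{n}\langle V_i, V_j\rangle\, x_i x_j$$

Here $w$ holds the first-order weights, $V_i \in \mathbb{R}^k$ is the latent (embedding) vector of feature $i$, and $x_i$ is its feature value.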
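For $n$ features and embedding size $k$, evaluating the pairwise term $\sum_{i<j}\langle V_i, V_j\rangle x_i x_j$ directly costs $O(kn^2)$. The code below relies on the standard FM rewrite, which reduces this to $O(kn)$. Writing $v_{i,f}$ for the $f$-th component of $V_i$:

$$
\begin{aligned}
\sum_{i=1}^{n}\sum_{j=i+1}^{n}\langle V_i, V_j\rangle x_i x_j
&= \frac{1}{2}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}\langle V_i, V_j\rangle x_i x_j - \sum_{i=1}^{n}\langle V_i, V_i\rangle x_i^2\right)\\
&= \frac{1}{2}\sum_{f=1}^{k}\left(\sum_{i=1}^{n}\sum_{j=1}^{n} v_{i,f}x_i\, v_{j,f}x_j - \sum_{i=1}^{n} v_{i,f}^2 x_i^2\right)\\
&= \frac{1}{2}\sum_{f=1}^{k}\left[\left(\sum_{i=1}^{n} v_{i,f}x_i\right)^2 - \sum_{i=1}^{n} v_{i,f}^2 x_i^2\right]
\end{aligned}
$$

In the code, $\left(\sum_i v_{i,f}x_i\right)^2$ is `summed_features_emb_square` and $\sum_i v_{i,f}^2 x_i^2$ is `squared_sum_features_emb`. `fm_second_order` keeps the $k$ per-dimension values, and the sum over $f$ is left to the learned final projection.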

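First, we look up the embedding of every input feature and scale it by the feature value. These embeddings play the role of the latent vectors $V_i$ in the FM second-order term, while the first-order weights $w$ come from a separate table (`feature_bias`). Then we compute the FM part:

```python
# embedding: look up each field's vector and scale it by the feature value
embeddings = tf.nn.embedding_lookup(weights['feature_embeddings'], feat_index)  # [None, field_size, K]
reshaped_feat_value = tf.reshape(feat_value, shape=[-1, dfm_params['field_size'], 1])
embeddings = tf.multiply(embeddings, reshaped_feat_value)                       # V_i * x_i

# fm part
# first-order term: w_i * x_i for every field
fm_first_order = tf.nn.embedding_lookup(weights['feature_bias'], feat_index)      # [None, field_size, 1]
fm_first_order = tf.reduce_sum(tf.multiply(fm_first_order, reshaped_feat_value), 2)  # [None, field_size]

# second-order term: 0.5 * ((sum of embeddings)^2 - sum of squared embeddings)
summed_features_emb = tf.reduce_sum(embeddings, 1)                 # [None, K]
summed_features_emb_square = tf.square(summed_features_emb)        # (sum_i V_i x_i)^2
squared_features_emb = tf.square(embeddings)
squared_sum_features_emb = tf.reduce_sum(squared_features_emb, 1)  # sum_i (V_i x_i)^2
fm_second_order = 0.5 * tf.subtract(summed_features_emb_square, squared_sum_features_emb)  # [None, K]
```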
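This pure-Python sketch is a quick sanity check of the 0.5 * (square of sum - sum of squares) trick used in the code above. It compares the naive pairwise sum with the fast form on random embeddings. The function names are hypothetical. `pairwise_fast` mirrors the TensorFlow code dimension by dimension and then sums over the dimensions.

```python
import random

def pairwise_naive(emb):
    """Sum over i < j of <e_i, e_j>, where e_i = V_i * x_i; O(k * n^2)."""
    total = 0.0
    for i in range(len(emb)):
        for j in range(i + 1, len(emb)):
            total += sum(a * b for a, b in zip(emb[i], emb[j]))
    return total

def pairwise_fast(emb):
    """0.5 * sum_f [(sum_i e_if)^2 - sum_i e_if^2]; O(k * n)."""
    k = len(emb[0])
    summed = [sum(e[f] for e in emb) for f in range(k)]               # like summed_features_emb
    sq_sum = [sum(e[f] ** 2 for e in emb) for f in range(k)]          # like squared_sum_features_emb
    second_order = [0.5 * (s * s - q) for s, q in zip(summed, sq_sum)]  # like fm_second_order
    return sum(second_order)

random.seed(0)
emb = [[random.uniform(-1, 1) for _ in range(8)] for _ in range(5)]  # 5 fields, k = 8
print(pairwise_naive(emb), pairwise_fast(emb))  # equal up to floating-point rounding
```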

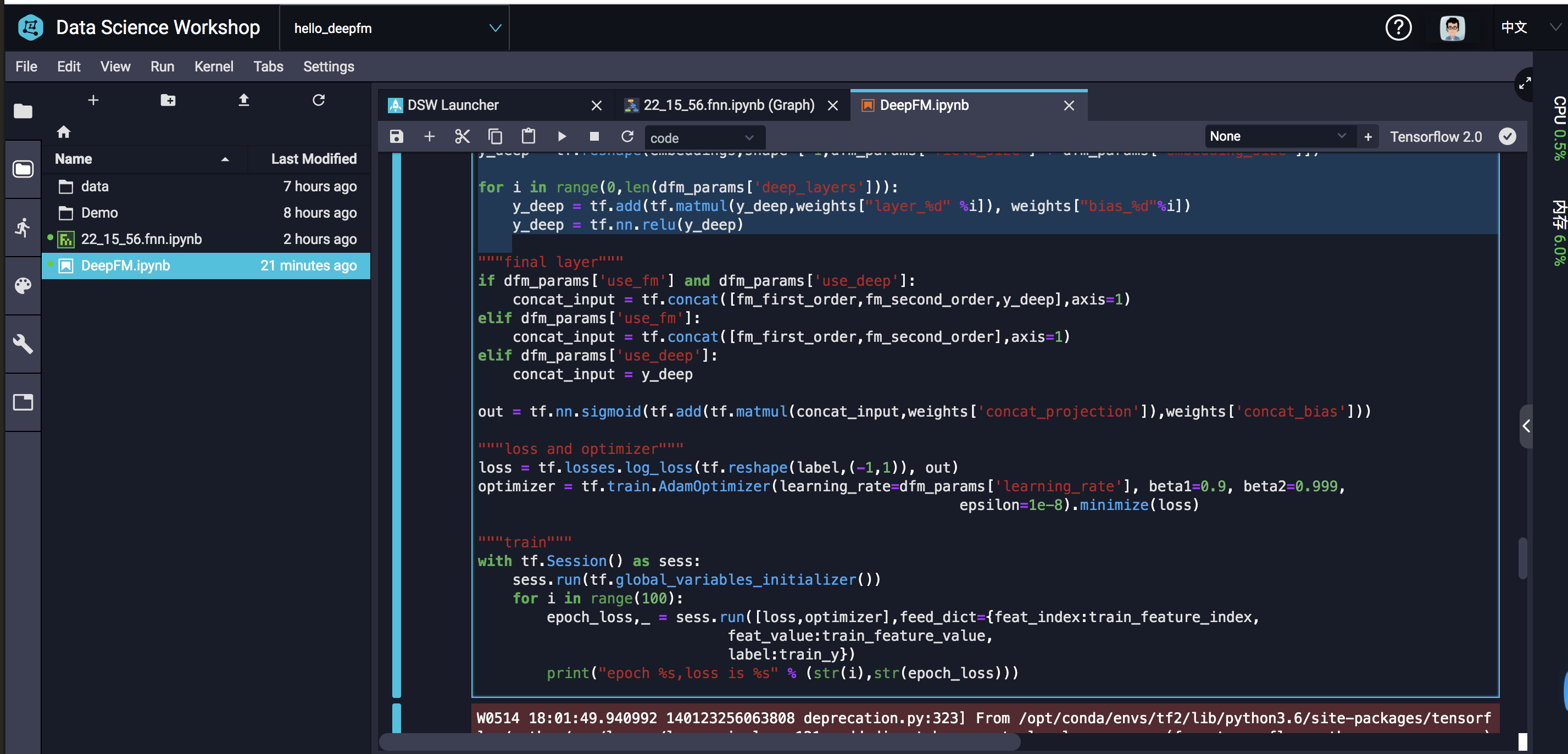

Deep

The deep part is simple: it is just a few fully connected layers.

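```python
# Flatten the shared embeddings: [None, field_size * embedding_size]
y_deep = tf.reshape(embeddings, shape=[-1, dfm_params['field_size'] * dfm_params['embedding_size']])
for i in range(0, len(dfm_params['deep_layers'])):
    # Fully connected layer followed by ReLU (dropout and batch norm are omitted here)
    y_deep = tf.add(tf.matmul(y_deep, weights["layer_%d" % i]), weights["bias_%d" % i])
    y_deep = tf.nn.relu(y_deep)
```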
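The same computation can be sketched in plain Python, with hypothetical names and hand-picked weights. The sketch flattens the per-field embeddings row by row, as `tf.reshape` does, and then applies each dense layer followed by ReLU.

```python
def flatten(emb):
    """Row-major flatten: [field_size][embedding_size] -> [field_size * embedding_size]."""
    return [v for field in emb for v in field]

def dense_relu_stack(x, layers):
    """Apply x <- relu(x @ W + b) for each (W, b); W has input_dim rows and output_dim columns."""
    for W, b in layers:
        x = [max(0.0, sum(x[i] * W[i][j] for i in range(len(x))) + b[j])
             for j in range(len(b))]
    return x

emb = [[1.0, 2.0], [3.0, -1.0]]                         # 2 fields, embedding_size 2
W0 = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]   # 4 -> 2
W1 = [[1.0, -1.0], [1.0, -1.0]]                         # 2 -> 2
y_deep = dense_relu_stack(flatten(emb), [(W0, [0.0, 0.0]), (W1, [0.0, 0.0])])
print(y_deep)  # [5.0, 0.0]
```

The second unit of the last layer is clipped to 0 by ReLU, which is why only one unit contributes in this example.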

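For the final output, the paper gives the following formula:

$$\hat{y} = \mathrm{sigmoid}(y_{FM} + y_{DNN})$$

```python
# final layer
# Concatenate the FM and DNN outputs and learn a linear projection over them;
# the learned weights generalize the plain sum in the formula above.
if dfm_params['use_fm'] and dfm_params['use_deep']:
    concat_input = tf.concat([fm_first_order, fm_second_order, y_deep], axis=1)
elif dfm_params['use_fm']:
    concat_input = tf.concat([fm_first_order, fm_second_order], axis=1)
elif dfm_params['use_deep']:
    concat_input = y_deep

out = tf.nn.sigmoid(tf.add(tf.matmul(concat_input, weights['concat_projection']), weights['concat_bias']))
```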
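This small sketch shows how the concatenation relates to the paper's formula; the names and numbers are hypothetical. With every projection weight set to 1 and a zero bias, the projection simply adds up the FM outputs and the deep outputs. It therefore computes sigmoid(y_FM + y_DNN), where y_DNN here is an output layer with unit weights. A learned projection generalizes that sum by weighting each component separately.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def concat_output(fm_first_order, fm_second_order, y_deep, projection, bias):
    """sigmoid(concat([first, second, deep]) . projection + bias) for one sample."""
    concat = list(fm_first_order) + list(fm_second_order) + list(y_deep)
    if len(concat) != len(projection):
        raise ValueError("projection size must equal the concatenated size")
    return sigmoid(sum(c * p for c, p in zip(concat, projection)) + bias)

first = [0.2, -0.1]    # field_size = 2
second = [0.05, 0.05]  # embedding_size = 2
deep = [0.3, 0.0]      # last deep layer has 2 units

y_fm = sum(first) + sum(second)
y_dnn = sum(deep)
p = concat_output(first, second, deep, [1.0] * 6, 0.0)
print(p, sigmoid(y_fm + y_dnn))  # the same value up to rounding
```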

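At this point the whole DeepFM architecture is in place, and we can train the model and check the results:

```python
# train
# `loss` and `optimizer` are used below but not defined in the original article;
# log loss with Adam is an assumption matching "optimizer": "adam" above.
loss = tf.losses.log_loss(label, out)
optimizer = tf.train.AdamOptimizer(learning_rate=dfm_params['learning_rate']).minimize(loss)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(100):
        # Each step feeds the whole training set at once (full batch), not mini-batches
        epoch_loss, _ = sess.run([loss, optimizer],
                                 feed_dict={feat_index: train_feature_index.values,
                                            feat_value: train_feature_value.values,
                                            label: train_y})
        print("epoch %s, loss is %s" % (str(i), str(epoch_loss)))
```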
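The training loop minimizes log loss. For reference, the sketch below writes the formula in plain Python; the helper name is hypothetical. It follows the form used by TF 1.x `tf.losses.log_loss` with its default `epsilon=1e-7`: epsilon is added inside both logarithms, and the per-sample losses are then averaged.

```python
import math

def log_loss(labels, preds, eps=1e-7):
    """Mean of -[y * log(p + eps) + (1 - y) * log(1 - p + eps)] over samples."""
    losses = [-(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
              for y, p in zip(labels, preds)]
    return sum(losses) / len(losses)

print(log_loss([1, 0], [0.5, 0.5]))  # about ln 2, i.e. roughly 0.693
print(log_loss([1, 0], [0.9, 0.2]))  # smaller: predictions closer to the labels
```

The epsilon keeps the logarithm finite when a prediction hits exactly 0 or 1.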

That completes the DeepFM implementation; the entire workflow runs in PAI-Notebook.


PAI-Studio now also supports FM (for details, see https://yq.aliyun.com/articles/742753?spm=a2c4e.11157919.spm-cont-list.8.146cf2042NrSK5). By concatenating it with a TensorFlow DNN, we can also achieve an effect similar to DeepFM.

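Going forward, we hope PAI-Studio will support more flexible module customization. It currently supports only PySpark/Spark and SQL, and does not allow uploading Python code.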